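I am writing some code to parse log files, with the caveat that these files are compressed and have to be decompressed on the fly. This code is performance sensitive, so I am trying out various approaches to find the right one. I have essentially as much memory as the program needs, no matter how many threads I use.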

I have found an approach that seems to perform fairly well, and I am trying to understand why it performs better.

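Both approaches have a reader thread that reads from a piped gzip process and writes into a large buffer. The buffer is then lazily parsed when the next log line is requested, returning what is essentially a struct of pointers to where the different fields are in the buffer.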

The code is in D, but it is very similar to C or C++.

Shared variables:

    shared(bool) _stream_empty = false;
    shared(ulong) upper_bound = 0;
    shared(ulong) curr_index = 0;

Parsing code:

    // Lazily parse the buffer
    void construct_next_elem() {
        while (1) {
            // Spin to stop us from getting ahead of the reader thread
            buffer_empty = curr_index >= upper_bound - 1 &&
                           _stream_empty;
            if (curr_index >= upper_bound && !_stream_empty) {
                continue;
            }
            // Parsing logic .....
        }
    }

Method 1:

Malloc a buffer large enough to hold the decompressed file up front.

    char[] buffer; // Same as vector<char>

Method 2:

Use an anonymous memory mapping as the buffer.

    MmFile buffer;
    buffer = new MmFile(null, MmFile.Mode.readWrite, // PROT_READ | PROT_WRITE
                        buffer_length, null);        // MAP_ANON | MAP_PRIVATE

Reader thread:

    ulong buffer_length = get_gzip_length(file_path);
    pipe = pipeProcess(["gunzip", "-c", file_path], Redirect.stdout);
    stream = pipe.stdout();

    static void stream_data() {
        while (!l.stream.eof()) {
            // Splice is a reference inside the buffer
            char[] splice = buffer[upper_bound .. upper_bound + READ_SIZE];
            ulong read = stream.rawRead(splice).length;
            upper_bound += read;
        }
        // Clean up
    }

    void start_stream() {
        auto t = task!stream_data();
        t.executeInNewThread();
        construct_next_elem();
    }

I get noticeably better performance from method 1; wall-clock time is about 3x lower in the runs below:

    User time (seconds): 112.22
    System time (seconds): 38.56
    Percent of CPU this job got: 151%
    Elapsed (wall clock) time (h:mm:ss or m:ss): 1:39.40
    Average shared text size (kbytes): 0
    Average unshared data size (kbytes): 0
    Average stack size (kbytes): 0
    Average total size (kbytes): 0
    Maximum resident set size (kbytes): 3784992
    Average resident set size (kbytes): 0
    Major (requiring I/O) page faults: 0
    Minor (reclaiming a frame) page faults: 5463
    Voluntary context switches: 90707
    Involuntary context switches: 2838
    Swaps: 0
    File system inputs: 0
    File system outputs: 0
    Socket messages sent: 0
    Socket messages received: 0
    Signals delivered: 0
    Page size (bytes): 4096
    Exit status: 0

versus

    User time (seconds): 275.92
    System time (seconds): 73.92
    Percent of CPU this job got: 117%
    Elapsed (wall clock) time (h:mm:ss or m:ss): 4:58.73
    Average shared text size (kbytes): 0
    Average unshared data size (kbytes): 0
    Average stack size (kbytes): 0
    Average total size (kbytes): 0
    Maximum resident set size (kbytes): 3777336
    Average resident set size (kbytes): 0
    Major (requiring I/O) page faults: 0
    Minor (reclaiming a frame) page faults: 944779
    Voluntary context switches: 89305
    Involuntary context switches: 9836
    Swaps: 0
    File system inputs: 0
    File system outputs: 0
    Socket messages sent: 0
    Socket messages received: 0
    Signals delivered: 0
    Page size (bytes): 4096
    Exit status: 0

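Method 2 incurs far more page faults (944779 minor faults versus 5463).

Can someone help me understand why using mmap causes such a marked performance drop?

If anyone knows of a better way to approach this problem, I would love to hear it.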

Edit:

Changed method 2 to do:

    char* buffer = cast(char*) mmap(cast(void*) null, buffer_length, PROT_READ | PROT_WRITE, MAP_ANON | MAP_PRIVATE, -1, 0);

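This now gives a 3x performance improvement over using plain MmFile. I am trying to work out what could cause such a marked difference in performance, since MmFile is essentially just a wrapper around mmap.

Perf numbers for a direct char* mmap instead of MmFile, with far fewer page faults: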

    User time (seconds): 109.99
    System time (seconds): 36.11
    Percent of CPU this job got: 151%
    Elapsed (wall clock) time (h:mm:ss or m:ss): 1:36.20
    Average shared text size (kbytes): 0
    Average unshared data size (kbytes): 0
    Average stack size (kbytes): 0
    Average total size (kbytes): 0
    Maximum resident set size (kbytes): 3777896
    Average resident set size (kbytes): 0
    Major (requiring I/O) page faults: 0
    Minor (reclaiming a frame) page faults: 2771
    Voluntary context switches: 90827
    Involuntary context switches: 2999
    Swaps: 0
    File system inputs: 0
    File system outputs: 0
    Socket messages sent: 0
    Socket messages received: 0
    Signals delivered: 0
    Page size (bytes): 4096
    Exit status: 0

Best answer

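You are getting the page faults and the slowdown because, by default, mmap only loads a page when you try to access it.

read, on the other hand, knows that you are reading sequentially, so it fetches pages ahead of time, before you ask for them.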
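
To make the first-touch cost concrete, here is a minimal sketch in C (the D code in the question ends up calling the same POSIX functions). It maps anonymous memory, writes one byte per page, and reports how many minor faults those writes caused according to getrusage. The names minor_faults and faults_on_first_touch are made up for illustration, and the count you see will depend on the kernel and on settings such as transparent huge pages, so treat it as something to measure rather than a fixed number.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/resource.h>

/* Hypothetical helper: minor page faults taken by this process so far. */
static long minor_faults(void) {
    struct rusage ru;
    int rc = getrusage(RUSAGE_SELF, &ru);
    assert(rc == 0);
    (void)rc;
    return ru.ru_minflt;
}

/* Hypothetical helper: maps `len` bytes of anonymous memory, writes one
 * byte every `page_size` bytes, and returns the number of minor faults
 * recorded during those writes. Returns -1 if the mapping fails. */
long faults_on_first_touch(size_t len, size_t page_size) {
    char *buf = mmap(NULL, len, PROT_READ | PROT_WRITE,
                     MAP_ANONYMOUS | MAP_PRIVATE, -1, 0);
    if (buf == MAP_FAILED) {
        return -1;
    }

    long before = minor_faults();
    for (size_t off = 0; off < len; off += page_size) {
        buf[off] = 1; /* first write to a page is what triggers the fault */
    }
    long after = minor_faults();

    munmap(buf, len);
    return after - before;
}
```

Calling faults_on_first_touch with the real page size (for example the value returned by sysconf(_SC_PAGESIZE)) and a length close to the size of your decompressed file should give a rough idea of how many faults the buffer alone accounts for.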

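Take a look at the madvise call. It is designed to signal to the kernel how you intend to access the mmap'ed file, and it lets you set different policies for different parts of the mapped memory. For example, you may have an index block that you want to keep in memory [MADV_WILLNEED], while the content is accessed randomly and on demand [MADV_RANDOM], or you may be looping through memory in a sequential scan [MADV_SEQUENTIAL].

However, the OS is entirely free to ignore the policy you set, so YMMV.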
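
As a rough sketch of what that looks like, again in C: the function below creates the same kind of anonymous private mapping as in the question's edit and then passes an advice value to madvise. The name map_with_hint and its hint_ok out-parameter are my own, not part of any library. Because the advice is only a hint, the sketch keeps the mapping even if madvise reports an error, and whether a given advice value helps an anonymous buffer at all is something I would measure rather than assume.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

/* Hypothetical helper: maps `len` bytes of anonymous private memory and
 * applies `advice` (e.g. MADV_SEQUENTIAL) to the whole range.
 * Returns the mapping, or NULL if mmap fails. If `hint_ok` is non-NULL it
 * is set to 1 when madvise succeeded and 0 otherwise; the mapping stays
 * usable either way because the advice is only a hint. */
char *map_with_hint(size_t len, int advice, int *hint_ok) {
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_ANONYMOUS | MAP_PRIVATE, -1, 0);
    if (p == MAP_FAILED) {
        return NULL;
    }

    int rc = madvise(p, len, advice);
    if (hint_ok != NULL) {
        *hint_ok = (rc == 0);
    }
    return (char *)p;
}
```

A call such as map_with_hint(buffer_length, MADV_SEQUENTIAL, &ok) would slot in where the question calls mmap directly; the caller still has to munmap the range when it is done.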

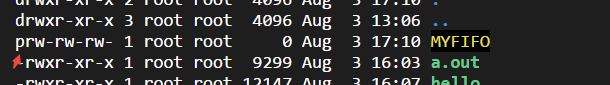

linux常用进程通信方式包括管道(pipe)、有名管道(FIFO)、...

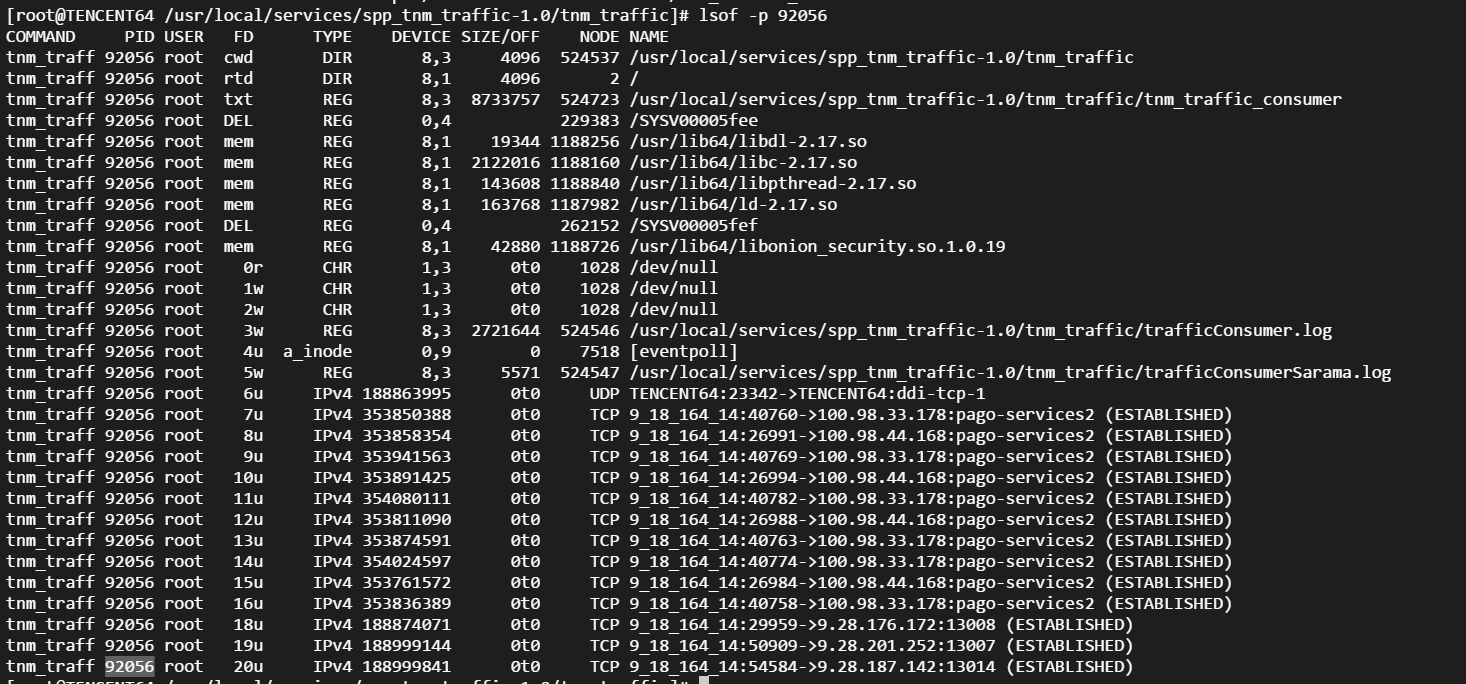

linux常用进程通信方式包括管道(pipe)、有名管道(FIFO)、... Linux性能观测工具按类别可分为系统级别和进程级别,系统级别...

Linux性能观测工具按类别可分为系统级别和进程级别,系统级别... 本文包含作者工作中常用到的一些命令,用于诊断网络、磁盘占满...

本文包含作者工作中常用到的一些命令,用于诊断网络、磁盘占满... linux的平均负载表示运行态和就绪态及不可中断状态(正在io)的...

linux的平均负载表示运行态和就绪态及不可中断状态(正在io)的... CPU上下文频繁切换会导致系统性能下降,切换分为进程切换、线...

CPU上下文频繁切换会导致系统性能下降,切换分为进程切换、线...