Table of Contents

OpenStack Cloud Platform Construction and Deployment

Keywords: OpenStack, Cloud Computing, IaaS

1. Introduction

1.1 Background

1.2 Purpose and Significance

2. Related Technologies

2.1 Environment Configuration

2.2 Installing the MariaDB Database and the Message Queue

2.3 Installing Keystone (Controller Node)

2.4 Installing Glance

2.5 Installing Nova (Controller Node)

2.6 Installing Neutron

2.7 Installing the Dashboard

2.8 Deploying the Compute Node

2.9 Creating Instances

2.10 Configuring Cinder

2.11 Configuring Swift

3. System Requirements Analysis

3.1 Feasibility Analysis

3.2 System Functional Analysis

3.3 System Non-functional Design

4. System Design

5. System Implementation

5.1 Logging In to the Dashboard

5.2 Tenant and User Management

5.3 Creating Networks

5.4 Viewing Images

5.5 Launching Cloud Hosts

6. Conclusion

7. Acknowledgements

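OpenStack Cloud Platform Construction and Deployment

[Abstract]

OpenStack is an open-source cloud computing management platform project in which several major components work together to accomplish specific tasks. It supports almost all types of cloud environments. The project aims to provide a cloud computing management platform that is simple to implement, massively scalable, feature-rich, and based on unified standards. OpenStack delivers an Infrastructure as a Service (IaaS) solution through a set of complementary services, each of which exposes an API for integration.

[Keywords]

OpenStack, Cloud Computing, IaaS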

OpenStack Cloud Platform Construction and Deployment

OpenStack is an open-source cloud computing management platform project composed of several major components that together carry out the specific work. OpenStack supports almost all types of cloud environments, and the goal of the project is to provide a cloud computing management platform that is simple to implement, massively scalable, feature-rich, and based on unified standards. OpenStack provides Infrastructure as a Service (IaaS) solutions through a variety of complementary services, each providing an API for integration.

Keywords: OpenStack, Cloud Computing, IaaS

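Computational science and commercial computing have developed rapidly. As a result, software models and architectures are changing ever faster, and grid computing, parallel computing, and distributed computing have quickly evolved into cloud computing. Cloud computing mainly consists of Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Through these technologies, computing resources are managed and scheduled in a unified way. As a new computing model, cloud computing has grown quickly because of its low cost and high efficiency. This growth has in turn driven the steady development and refinement of open-source cloud computing architectures in recent years.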

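OpenStack is an open-source cloud computing project and toolset that provides an Infrastructure as a Service (IaaS) solution. It can quickly deploy a fully virtualized environment. That environment can then host multiple interconnected virtual servers, so users can deploy applications on virtual machines quickly.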

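Research purpose: to simplify the cloud deployment process and give the resulting cloud good scalability.

Research significance: virtualization removes the constraints of time and location on computing resources. A cloud platform built with OpenStack allocates images, networks, and other resources on demand. The resource data on a cloud system is large in volume and changes quickly, and the platform also provides monitoring and other functions that make it easy to use and manage. Together these features help advance the networked era.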

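2.1 Environment Configuration

Create three virtual machines in VMware: controller (4 GB of memory), computer (4 GB), and cinder (3 GB). Give them network adapters (eth8, eth1, and eth3) and configure their IP addresses as shown in the figure below:

Perform the following steps on each of the three virtual machines: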

- Change the hostname

hostnamectl set-hostname controller    # replace with the node's own hostname

- Edit the hosts file

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.1.1.11 controller

10.1.1.12 computer1

10.1.1.13 cinder
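Editing the hosts file by hand on three machines makes it easy to add duplicate or mistyped entries. The following sketch is a hypothetical helper, not part of the original setup. It appends each mapping only when that hostname is not already present. It writes to a scratch file by default (HOSTS_FILE is an assumed variable); to apply it for real, set HOSTS_FILE=/etc/hosts and run it as root.

```shell
#!/usr/bin/env bash
# Idempotently append the cluster's name-to-IP mappings to a hosts file.
# HOSTS_FILE is an assumption of this sketch: it defaults to a scratch file so
# the script can be tried safely; set HOSTS_FILE=/etc/hosts (as root) to apply.
set -euo pipefail

HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# add_host_entry IP NAME: append "IP NAME" unless NAME is already mapped.
add_host_entry() {
  local ip="$1" name="$2"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
  fi
}

add_host_entry 10.1.1.11 controller
add_host_entry 10.1.1.12 computer1
add_host_entry 10.1.1.13 cinder

cat "$HOSTS_FILE"
```

You can run the script again safely: a second run finds every name already mapped and leaves the file unchanged.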

- Install NTP

yum install -y ntp

Back up the configuration file /etc/ntp.conf, then edit it:

cp -a /etc/ntp.conf /etc/ntp.conf_bak

Controller node:

vi /etc/ntp.conf

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server 127.127.1.0

fudge 127.127.1.0 stratum 0

On the other nodes, edit it as follows:

vi /etc/ntp.conf

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

server 9.1.1.11 prefer

- Start the service and enable it at boot

systemctl enable ntpd

systemctl restart ntpd

Verify: ntpq -p
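The two ntp.conf variants above differ only in their last lines. The following minimal sketch generates the server lines for either role. The function name and role argument are illustrative, and CONTROLLER_NTP_IP is an assumed variable that defaults to the 9.1.1.11 address used above.

```shell
#!/usr/bin/env bash
# Print the ntp.conf server lines for a node role, mirroring the layout above:
# the controller uses the public pool plus its local clock as a fallback,
# every other node also prefers the controller.
set -euo pipefail

render_ntp_servers() {
  local role="$1"
  local controller_ip="${CONTROLLER_NTP_IP:-9.1.1.11}"
  local i
  for i in 0 1 2 3; do
    printf 'server %d.centos.pool.ntp.org iburst\n' "$i"
  done
  if [ "$role" = "controller" ]; then
    # Local clock fallback, as in the controller configuration above.
    printf 'server 127.127.1.0\n'
    printf 'fudge 127.127.1.0 stratum 0\n'
  else
    printf 'server %s prefer\n' "$controller_ip"
  fi
}

# Example: the lines a compute or storage node should contain.
render_ntp_servers compute
```

Before restarting ntpd, compare this output against the server lines in /etc/ntp.conf on each node.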

- Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

- Disable the firewall

systemctl disable firewalld

systemctl stop firewalld

- Install the basic required packages

yum install -y vim net-tools

yum install -y openstack-selinux python-openstackclient yum-plugin-priorities

yum install -y openstack-utils

2.2 数据库MariaDB和消息队列的安装

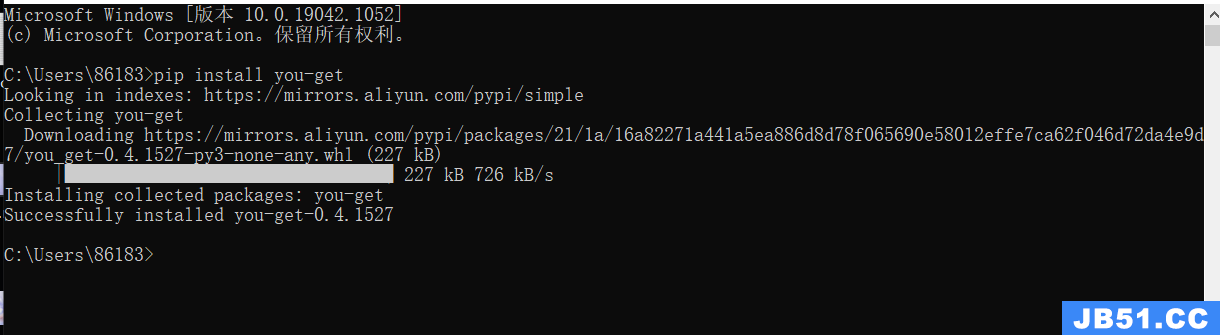

安装mariadb时,由于是国外源,可能会比较慢,需要更改为国内源即可解决:

vim /etc/yum.repos.d/MariaDB.repo

[mariadb]

name = MariaDB

baseurl=https://mirrors.ustc.edu.cn/mariadb/yum/10.1/centos7-amd64

gpgkey=https://mirrors.ustc.edu.cn/mariadb/yum/RPM-GPG-KEY-MariaDB

gpgcheck=1

Setting up MariaDB

- Install the MariaDB database

yum install -y MariaDB-server MariaDB-client

- Configure MariaDB

vim /etc/my.cnf.d/mariadb-openstack.cnf

Add the following to the [mysqld] section:

[mysqld]

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

bind-address = 10.1.1.11

- Start MariaDB and enable it at boot

systemctl enable mariadb.service

systemctl restart mariadb.service

systemctl status mariadb.service

systemctl list-unit-files |grep mariadb.service

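- Secure MariaDB and set the root password

mysql_secure_installation

Press Enter first, then press Y to set the MySQL root password, and answer y to all remaining prompts. The password used here is 123456.

Installing RabbitMQ

- Install Erlang on every node

yum install -y erlang

- Install RabbitMQ on every node

yum install -y rabbitmq-server

- Start RabbitMQ on every node and enable it at boot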

systemctl enable rabbitmq-server.service

systemctl restart rabbitmq-server.service

systemctl status rabbitmq-server.service

systemctl list-unit-files |grep rabbitmq-server.service

- Create the openstack user (replace 123456 with a suitable password of your own)

rabbitmqctl add_user openstack 123456

- Grant permissions to the openstack user

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

rabbitmqctl set_user_tags openstack administrator

rabbitmqctl list_users

- Check the listening port (RabbitMQ uses port 5672)

netstat -ntlp |grep 5672

- List the RabbitMQ plugins

/usr/lib/rabbitmq/bin/rabbitmq-plugins list

- Enable the relevant RabbitMQ plugins

/usr/lib/rabbitmq/bin/rabbitmq-plugins enable rabbitmq_management mochiweb webmachine rabbitmq_web_dispatch amqp_client rabbitmq_management_agent

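- Check the RabbitMQ status

You can also view status information by browsing to http://9.1.1.11:15672 and logging in as openstack/123456.

2.3 Installing Keystone (Controller Node)

(1) Create the keystone database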

mysql -uroot -p123456

Server version: 10.1.20-MariaDB MariaDB Server

MariaDB [(none)]> create database keystone;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> grant all privileges on keystone.* to keystone@'localhost' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> grant all privileges on keystone.* to keystone@'%' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> exit

Bye
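Every service in this guide repeats the same pattern: one database, plus grants for localhost and for any host ('%'). The following hypothetical helper is a sketch that assumes the password contains no single quote. It prints those statements so that the host part stays consistent from one service to the next.

```shell
#!/usr/bin/env bash
# Print the SQL that creates one service database and grants its user access
# from localhost and from any host ('%'). Name and arguments are illustrative.
set -euo pipefail

gen_db_sql() {
  local db="$1" user="$2" password="$3"
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${password}';
FLUSH PRIVILEGES;
EOF
}

# Dry run: print the statements for the keystone database.
gen_db_sql keystone keystone 123456
```

Piping the output into the client, for example `gen_db_sql glance glance 123456 | mysql -uroot -p123456`, would apply the same grants for another service.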

(2) Install the packages

yum -y install openstack-keystone openstack-utils python-openstackclient httpd mod_wsgi mod_ssl

(3) Edit the configuration file

cp -a /etc/keystone/keystone.conf /etc/keystone/keystone.conf_bak

vi /etc/keystone/keystone.conf

memcache_servers = 10.1.1.11:11211

[database]

connection = mysql+pymysql://keystone:123456@10.1.1.11/keystone

[token]

provider = fernet

driver = memcache

(4) Populate the database tables

su -s /bin/bash keystone -c "keystone-manage db_sync"

(5) Initialize the Fernet and credential key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

(6) Define the local IP

export controller=10.1.1.11

Replace 123456 with your own admin password:

keystone-manage bootstrap --bootstrap-password 123456 \
--bootstrap-admin-url http://$controller:35357/v3/ \
--bootstrap-internal-url http://$controller:35357/v3/ \
--bootstrap-public-url http://$controller:5000/v3/ \
--bootstrap-region-id RegionOne

(7) Edit /etc/httpd/conf/httpd.conf

cp -a /etc/httpd/conf/httpd.conf /etc/httpd/conf/httpd.conf_bak

Then set the following directive in httpd.conf:

ServerName controller

(8) Create the symbolic link and start httpd

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl start httpd

systemctl enable httpd

(9) Create and load the environment variables

vim ~/keystonerc

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://10.1.1.11:35357/v3

export OS_IDENTITY_API_VERSION=3

chmod 600 ~/keystonerc

source ~/keystonerc
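Most "openstack" failures at this stage come down to a variable that was never exported. Note that the verification in step (15) deliberately unsets OS_AUTH_URL and OS_PASSWORD. The following sketch checks the variables that the keystonerc above exports. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Report any keystonerc variable that is unset or empty; return non-zero if
# at least one is missing. The list mirrors the keystonerc shown above.
check_os_env() {
  local var missing=0
  for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
             OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    # ${!var} is bash indirect expansion: the value of the variable named $var.
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Typical use after `source ~/keystonerc`:
#   check_os_env && openstack token issue
```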

(10) Create the service project

openstack project create --domain default --description "Service Project" service

(11) Create the demo project

openstack project create --domain default --description "Demo Project" demo

(12) Create the demo user

openstack user create --domain default --password-prompt demo

(13) Create the user role

openstack role create user

(14) Assign the user role to the demo user in the demo project

openstack role add --project demo --user demo user

(15) Verify

Unset the environment variables:

unset OS_AUTH_URL OS_PASSWORD

Verify admin by requesting a token:

openstack --os-auth-url http://10.1.1.11:35357/v3 \
--os-project-domain-name Default --os-user-domain-name Default \
--os-project-name admin --os-username admin token issue

Verify demo:

openstack --os-auth-url http://10.1.1.11:5000/v3 \
--os-project-domain-name Default --os-user-domain-name Default \
--os-project-name demo --os-username demo token issue

(16) Load the variables automatically at login

echo "source ~/keystonerc" >> ~/.bash_profile

source ~/.bash_profile

2.4 Installing Glance

(1) Create the glance user

openstack user create --domain default --project service --password 123456 glance

(2) Grant the glance user the admin role

openstack role add --project service --user glance admin

(3) Create the glance service

openstack service create --name glance --description "OpenStack Image service" image

(4) Define the controller management network IP

export controller=10.1.1.11

(5) Create the public endpoint

openstack endpoint create --region RegionOne image public http://$controller:9292

(6) Create the internal endpoint

openstack endpoint create --region RegionOne image internal http://$controller:9292

(7) Create the admin endpoint

openstack endpoint create --region RegionOne image admin http://$controller:9292
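Steps (5) to (7) differ only in the interface name, and the same trio recurs for Nova, Placement, Neutron, Cinder and Swift. The following sketch registers all three in a loop. OS_CMD and REGION are knobs invented for this sketch: OS_CMD=echo turns it into a dry run that only prints the arguments it would pass to `openstack`.

```shell
#!/usr/bin/env bash
# Register the public, internal and admin endpoints of one service.
# OS_CMD (default: openstack) and REGION (default: RegionOne) are assumptions
# of this sketch; OS_CMD=echo prints the arguments instead of running them.
set -euo pipefail

create_endpoints() {
  local service_type="$1" url="$2" iface
  for iface in public internal admin; do
    ${OS_CMD:-openstack} endpoint create --region "${REGION:-RegionOne}" \
      "$service_type" "$iface" "$url"
  done
}

# Dry run for the Image service endpoints registered above.
OS_CMD=echo create_endpoints image "http://10.1.1.11:9292"
```

With admin credentials loaded, a call such as `create_endpoints compute http://10.1.1.11:8774/v2.1` would register all three Compute endpoints. Using a loop also rules out giving one interface the wrong service type.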

(8) Create the database

mysql -uroot -p123456

MariaDB [(none)]> create database glance;

MariaDB [(none)]> grant all privileges on glance.* to glance@'localhost' identified by '123456';

MariaDB [(none)]> grant all privileges on glance.* to glance@'%' identified by '123456';

MariaDB [(none)]> flush privileges;

MariaDB [(none)]> exit

(9) Install the package

yum -y install openstack-glance

(10) Edit the configuration file glance-api.conf

mv /etc/glance/glance-api.conf /etc/glance/glance-api.conf.org

vi /etc/glance/glance-api.conf

[DEFAULT]

bind_host = 0.0.0.0

notification_driver = noop

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[database]

connection = mysql+pymysql://glance:123456@10.1.1.11/glance

[keystone_authtoken]

auth_uri = http://10.1.1.11:5000

auth_url = http://10.1.1.11:35357

memcached_servers = 10.1.1.11:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

(11) Edit the configuration file glance-registry.conf

mv /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.org

vi /etc/glance/glance-registry.conf

[DEFAULT]

bind_host = 0.0.0.0

notification_driver = noop

[database]

connection = mysql+pymysql://glance:123456@10.1.1.11/glance

[keystone_authtoken]

auth_uri = http://10.1.1.11:5000

auth_url = http://10.1.1.11:35357

memcached_servers = 10.1.1.11:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

(12) Set the file permissions

chmod 640 /etc/glance/glance-api.conf /etc/glance/glance-registry.conf

chown root:glance /etc/glance/glance-api.conf /etc/glance/glance-registry.conf

(13) Create the database schema

su -s /bin/bash glance -c "glance-manage db_sync"

(14) Start the services and enable them at boot

systemctl start openstack-glance-api openstack-glance-registry

systemctl enable openstack-glance-api openstack-glance-registry

(15) Verify

Load the environment variables:

source ~/keystonerc

Download the image:

wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img

Upload the image:

openstack image create "cirros" \
--file cirros-0.3.5-x86_64-disk.img \
--disk-format qcow2 --container-format bare \
--public

List the images:

openstack image list

2.5 Installing Nova (Controller Node)

(1) Create the nova_api, nova, nova_cell0, and nova_placement databases:

mysql -u root -p123456

CREATE DATABASE nova_placement;

CREATE DATABASE nova_cell0;

CREATE DATABASE nova;

CREATE DATABASE nova_api;

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_placement.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_placement.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

(2) Create the nova user

openstack user create --domain default --password 123456 nova

(3) Grant the admin role

openstack role add --project service --user nova admin

(4) Create the compute service

openstack service create --name nova --description "OpenStack Compute" compute

(5) Create the endpoints

openstack endpoint create --region RegionOne compute public http://10.1.1.11:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://10.1.1.11:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://10.1.1.11:8774/v2.1

(6) Create the placement user

openstack user create --domain default --password 123456 placement

(7) Grant the admin role

openstack role add --project service --user placement admin

(8) Create the placement service

openstack service create --name placement --description "Placement API" placement

(9) Create the endpoints

openstack endpoint create --region RegionOne placement public http://10.1.1.11:8778

openstack endpoint create --region RegionOne placement internal http://10.1.1.11:8778

openstack endpoint create --region RegionOne placement admin http://10.1.1.11:8778

(10) Install the packages

yum install -y openstack-nova-api openstack-nova-conductor \
openstack-nova-console openstack-nova-novncproxy \
openstack-nova-scheduler openstack-nova-placement-api

(11) Edit the configuration file /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

[api_database]

connection = mysql+pymysql://nova:123456@10.1.1.11/nova_api

[database]

connection = mysql+pymysql://nova:123456@10.1.1.11/nova

# Message queue

[DEFAULT]

transport_url = rabbit://openstack:123456@10.1.1.11

# Keystone authentication

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://10.1.1.11:5000

auth_url = http://10.1.1.11:35357

memcached_servers = 10.1.1.11:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[DEFAULT]

# Management network IP

my_ip = 10.1.1.11

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = true

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://10.1.1.11:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://10.1.1.11:35357/v3

username = placement

password = 123456

(12) Write the Placement API virtual host configuration

vi /etc/httpd/conf.d/00-nova-placement-api.conf

Listen 8778

<VirtualHost *:8778>

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

WSGIDaemonProcess nova-placement-api processes=3 threads=1 user=nova group=nova

WSGIScriptAlias / /usr/bin/nova-placement-api

<IfVersion >= 2.4>

ErrorLogFormat "%M"

</IfVersion>

ErrorLog /var/log/nova/nova-placement-api.log

#SSLEngine On

#SSLCertificateFile ...

#SSLCertificateKeyFile ...

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

Alias /nova-placement-api /usr/bin/nova-placement-api

<Location /nova-placement-api>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup nova-placement-api

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

(13) Restart the service

systemctl restart httpd

(14) Verify that port 8778 is listening

ss -tanlp | grep 8778

(15) Synchronize the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

nova-manage cell_v2 list_cells

(16) Start the services and enable them at boot

systemctl enable openstack-nova-api.service \
openstack-nova-consoleauth.service openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service \
openstack-nova-consoleauth.service openstack-nova-scheduler.service \
openstack-nova-conductor.service openstack-nova-novncproxy.service

2.6 Installing Neutron

(1) Create the neutron database

mysql -u root -p123456

CREATE DATABASE neutron;

(2) Create the database user and grant privileges

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

(3) Create the neutron user and grant it the admin role

source ~/keystonerc

openstack user create --domain default neutron --password 123456

openstack role add --project service --user neutron admin

(4) Create the network service

openstack service create --name neutron --description "OpenStack Networking" network

(5) Create the endpoints

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

(6) Install the neutron packages

yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

(7) Configure the neutron configuration file /etc/neutron/neutron.conf

(8) Configure /etc/neutron/plugins/ml2/ml2_conf.ini
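The file contents are not reproduced here. The following sketch writes an ml2_conf.ini for Linux bridge with a flat provider network and VXLAN self-service networks. Its values follow the upstream Pike installation guide (self-service option) and are consistent with the networks created in 2.9 (flat physical network "provider", VXLAN private networks). They are an assumption, not the author's exact file. The target defaults to a scratch file through the assumed ML2_CONF variable.

```shell
#!/usr/bin/env bash
# Write an ML2 plugin configuration for Linux bridge + flat + VXLAN.
# Values follow the upstream Pike self-service install guide (assumption).
set -euo pipefail

write_ml2_conf() {
  local target="$1"
  cat > "$target" <<'EOF'
[ml2]
type_drivers = flat,vlan,vxlan
tenant_network_types = vxlan
mechanism_drivers = linuxbridge,l2population
extension_drivers = port_security

[ml2_type_flat]
flat_networks = provider

[ml2_type_vxlan]
vni_ranges = 1:1000

[securitygroup]
enable_ipset = true
EOF
}

# Defaults to a scratch file; set ML2_CONF=/etc/neutron/plugins/ml2/ml2_conf.ini to apply.
ML2_CONF="${ML2_CONF:-$(mktemp)}"
write_ml2_conf "$ML2_CONF"
cat "$ML2_CONF"
```

The physical network label "provider" must match the name that linuxbridge_agent.ini maps to a host interface. It must also match the name passed to `--provider:physical_network` when the flat network is created.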

(9) Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini

(10) Configure /etc/neutron/l3_agent.ini

(11) Configure /etc/neutron/dhcp_agent.ini

(12) Reconfigure /etc/nova/nova.conf so that the compute service can use Neutron networking

(13) Write dhcp-option-force=26,1450 to /etc/neutron/dnsmasq-neutron.conf

echo "dhcp-option-force=26,1450" >/etc/neutron/dnsmasq-neutron.conf
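DHCP option 26 is the interface MTU that dnsmasq hands to instances. The value 1450 comes from the VXLAN encapsulation overhead on IPv4: each packet also carries the encapsulated (inner) Ethernet header, a VXLAN header, a UDP header and an outer IPv4 header. The arithmetic, assuming a standard 1500-byte MTU on the physical network, is:

```shell
#!/usr/bin/env bash
# Derive the instance MTU for VXLAN over IPv4 from the physical MTU.
set -euo pipefail

physical_mtu=1500                       # assumed MTU of the underlying network
inner_ethernet=14                       # encapsulated Ethernet header
vxlan_header=8
udp_header=8
outer_ipv4=20
vxlan_overhead=$((inner_ethernet + vxlan_header + udp_header + outer_ipv4))
instance_mtu=$((physical_mtu - vxlan_overhead))

echo "dhcp-option-force=26,${instance_mtu}"   # prints dhcp-option-force=26,1450
```

If the physical network used jumbo frames, the same subtraction would give a larger instance MTU.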

(14) Configure /etc/neutron/metadata_agent.ini

(15) Create the symbolic link

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

(16) Synchronize the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

(17) Restart the nova API service, because nova.conf was just changed

systemctl restart openstack-nova-api.service

systemctl status openstack-nova-api.service

(18) Restart the neutron services and enable them at boot

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl status neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

(19) Start neutron-l3-agent.service and enable it at boot

systemctl enable neutron-l3-agent.service

systemctl start neutron-l3-agent.service

systemctl status neutron-l3-agent.service

(20) Verify

source ~/keystonerc

neutron ext-list

neutron agent-list

2.7 Installing the Dashboard

(1) Install the dashboard package

yum install openstack-dashboard -y

(2) Edit the configuration file /etc/openstack-dashboard/local_settings

vim /etc/openstack-dashboard/local_settings

(3) Start the dashboard-related services and enable them at boot

systemctl enable httpd.service memcached.service

systemctl restart httpd.service memcached.service

systemctl status httpd.service memcached.service

The controller node is now set up. Open http://9.1.1.11/dashboard/ in Firefox to reach the OpenStack web interface.

2.8 Deploying the Compute Node

Installing Nova (compute node)

(1) Install the Nova packages

First remove the old version of qemu-kvm:

yum -y remove qemu-kvm

Download the source code:

wget https://download.qemu.org/qemu-3.1.0-rc0.tar.xz

Install the build dependencies:

yum -y install gcc gcc-c++ automake libtool zlib-devel glib2-devel bzip2-devel libuuid-devel spice-protocol spice-server-devel usbredir-devel libaio-devel

Compile and install:

tar -xvJf qemu-3.1.0-rc0.tar.xz

cd qemu-3.1.0-rc0

./configure

make && make install

After the build completes, create the symbolic links:

ln -s /usr/local/bin/qemu-system-x86_64 /usr/bin/qemu-kvm

ln -s /usr/local/bin/qemu-system-x86_64 /usr/libexec/qemu-kvm

ln -s /usr/local/bin/qemu-img /usr/bin/qemu-img

Check the current QEMU version:

qemu-img --version

qemu-kvm --version

yum install -y libvirt-client

yum install -y openstack-nova-compute

Edit the configuration file /etc/nova/nova.conf:

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@10.1.1.11

my_ip = 10.1.1.12

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://10.1.1.11:5000

auth_url = http://10.1.1.11:35357

memcached_servers = 10.1.1.11:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://10.1.1.11:6080/vnc_auto.html

[glance]

api_servers = http://10.1.1.11:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://10.1.1.11:35357/v3

username = placement

password = 123456

(2) Check whether the CPU supports hardware virtualization (a non-zero count means it does)

egrep -c '(vmx|svm)' /proc/cpuinfo
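A count of 0 means the CPU exposes neither the vmx (Intel) nor the svm (AMD) flag. This often happens inside VMware guests unless hardware-assisted virtualization is passed through to the guest. In that case the upstream installation guide has Nova fall back to plain QEMU through `[libvirt] virt_type = qemu`. The following sketch makes that decision. The function name is illustrative, and the cpuinfo path is a parameter only so that the logic can be tried on a sample file.

```shell
#!/usr/bin/env bash
# Choose the libvirt virt_type from the CPU flags: kvm if vmx/svm is present,
# otherwise qemu (software emulation).
set -euo pipefail

choose_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}" count
  # grep -c prints 0 and exits 1 when nothing matches; keep the 0.
  count=$(grep -E -c '(vmx|svm)' "$cpuinfo" || true)
  if [ "$count" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
}

virt_type=$(choose_virt_type)
echo "[libvirt] virt_type = ${virt_type}"
# To apply on the compute node (openstack-config comes with openstack-utils):
#   openstack-config --set /etc/nova/nova.conf libvirt virt_type "$virt_type"
```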

(3) Start the services and enable them at boot

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

On the controller node:

(4) Verify

openstack compute service list --service nova-compute

(5) Discover the compute hosts

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

(6) Verify

List the services:

openstack compute service list

List the API endpoints in the Identity service to verify connectivity with the Identity service:

openstack catalog list

List the images to verify the Image service:

openstack image list

Check that the cells and the Placement API are working:

nova-status upgrade check

Installing Neutron (compute node)

(1) Install the packages

yum install openstack-neutron-linuxbridge ebtables ipset -y

(2) Configure neutron.conf

(3) Configure /etc/neutron/plugins/ml2/linuxbridge_agent.ini

(4) Configure nova.conf

- Restart the compute services, then enable and restart neutron-linuxbridge-agent

systemctl restart libvirtd.service openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

systemctl status libvirtd.service openstack-nova-compute.service neutron-linuxbridge-agent.service

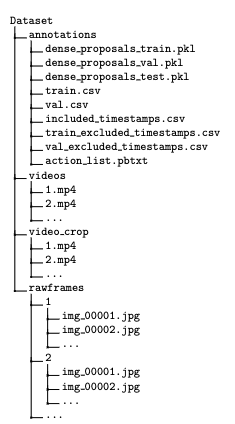

2.9 Creating Instances

Load the environment variables. Create the flat-mode public network (provider) and its subnet, then the private network with its subnet private-subnet. Next, create three more private networks with different names (private-office, private-sale, and private-technology), and finally create a router.

source ~/keystonerc

neutron --debug net-create --shared provider --router:external True \
--provider:network_type flat --provider:physical_network provider

neutron subnet-create provider 9.1.1.0/24 --name provider-sub \
--allocation-pool start=9.1.1.50,end=9.1.1.90 --dns-nameserver 8.8.8.8 --gateway 9.1.1.254

neutron net-create private --provider:network_type vxlan --router:external False --shared

neutron subnet-create private --name private-subnet --gateway 192.168.1.1 192.168.1.0/24

neutron net-create private-office --provider:network_type vxlan --router:external False --shared

neutron subnet-create private-office --name office-net --gateway 192.168.2.1 192.168.2.0/24

neutron net-create private-sale --provider:network_type vxlan --router:external False --shared

neutron subnet-create private-sale --name sale-net --gateway 192.168.3.1 192.168.3.0/24

neutron net-create private-technology --provider:network_type vxlan --router:external False --shared

neutron subnet-create private-technology --name technology-net --gateway 192.168.4.1 192.168.4.0/24
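The paragraph above also mentions creating a router, but gives no commands for it. The following minimal sketch uses the unified openstack client. It assumes a router named "router1" (a hypothetical name) that uses the provider network as its external gateway and has the four private subnets above attached. OS_CMD is an invented knob: OS_CMD=echo gives a dry run that only prints the arguments.

```shell
#!/usr/bin/env bash
# Create a router, set the provider network as its gateway, and attach the
# private subnets created above. OS_CMD=echo prints instead of executing.
set -euo pipefail

create_router() {
  local router="$1" subnet
  ${OS_CMD:-openstack} router create "$router"
  ${OS_CMD:-openstack} router set "$router" --external-gateway provider
  for subnet in private-subnet office-net sale-net technology-net; do
    ${OS_CMD:-openstack} router add subnet "$router" "$subnet"
  done
}

# Dry run; drop OS_CMD=echo (with admin credentials loaded) to execute.
OS_CMD=echo create_router router1
```

The gateway can be set only because the provider network was created with `--router:external True`. The private networks were created with `--router:external False`, so they can only be attached as internal subnets.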

2.10 Configuring Cinder

Installing on the controller node

(1) Create the database and grant privileges

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '123456';

(2) Create the cinder user and grant it the admin role

source ~/keystonerc

openstack user create --domain default cinder --password 123456

openstack role add --project service --user cinder admin

(3) Create the volume services

openstack service create --name cinder --description "OpenStack Block Storage" volume

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

(4) Create the endpoints

openstack endpoint create --region RegionOne volume public http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume internal http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volume admin http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

(5) Install the cinder packages

yum install openstack-cinder -y

(6) Configure the cinder configuration file

(7) Synchronize the database

su -s /bin/sh -c "cinder-manage db sync" cinder

(8) Start the cinder services on the controller and enable them at boot

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl status openstack-cinder-api.service openstack-cinder-scheduler.service

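Installing the Cinder node

(9) Install the Cinder storage node. Add an extra disk (/dev/sdb) to the Cinder node to back the block storage service.

yum install lvm2 -y

(10) Start the service and enable it at boot

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

systemctl status lvm2-lvmetad.service

(11) Create the LVM physical volume and volume group (/dev/sdb is the extra disk added above)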

fdisk -l

pvcreate /dev/sdb

vgcreate cinder-volumes /dev/sdb

(12) Edit lvm.conf on the storage node

vim /etc/lvm/lvm.conf

In the devices section, add: filter = [ "a/sda/", "a/sdb/", "r/.*/"]

Then restart the lvm2 service:

systemctl restart lvm2-lvmetad.service

systemctl status lvm2-lvmetad.service
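The filter is read left to right. Each "a/NAME/" entry accepts devices whose path matches NAME, and the trailing "r/.*/" rejects everything else, so LVM scans only the listed disks. According to the upstream guide, sda must stay in the list if the operating system itself sits on LVM. The following hypothetical helper builds the line from a device list:

```shell
#!/usr/bin/env bash
# Build an lvm.conf filter line that accepts the given devices and rejects
# all others. The function name is illustrative.
set -euo pipefail

build_lvm_filter() {
  local dev entries=""
  for dev in "$@"; do
    entries+="\"a/${dev}/\", "
  done
  printf 'filter = [ %s"r/.*/" ]\n' "$entries"
}

build_lvm_filter sda sdb   # prints: filter = [ "a/sda/", "a/sdb/", "r/.*/" ]
```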

(13) Install openstack-cinder and targetcli

yum install openstack-cinder openstack-utils targetcli python-keystone ntpdate -y

(14) Configure the cinder configuration file

(15) Start openstack-cinder-volume and target and enable them at boot

systemctl enable openstack-cinder-volume.service target.service

systemctl restart openstack-cinder-volume.service target.service

systemctl status openstack-cinder-volume.service target.service

(16) Verify that the cinder services are running (compute node)

source ~/keystonerc

cinder service-list

Configuring Cinder (compute node deployment)

(1) For the compute node to use Cinder, the nova configuration file must be updated (note: this step is performed on the compute node):

openstack-config --set /etc/nova/nova.conf cinder os_region_name RegionOne

systemctl restart libvirtd.service openstack-nova-compute.service

(2) Then restart the nova API service on the controller:

systemctl restart openstack-nova-api.service

systemctl status openstack-nova-api.service

(3) Verify (controller node)

source ~/keystonerc

neutron ext-list

neutron agent-list

2.11 Configuring Swift

Controller node

(1) Obtain the admin credentials

source ~/keystonerc

(2) Create the swift user and add the admin role to it:

openstack user create --domain default --password=swift swift

openstack role add --project service --user swift admin

(3) Create the swift service entity and the Object Storage API endpoints (the result can be checked in the dashboard)

openstack service create --name swift --description "OpenStack Object Storage" object-store

openstack endpoint create --region RegionOne object-store public http://controller:8080/v1/AUTH_%\(tenant_id\)s

openstack endpoint create --region RegionOne object-store internal http://controller:8080/v1/AUTH_%\(tenant_id\)s

openstack endpoint create --region RegionOne object-store admin http://controller:8080/v1

(4) Install the packages

yum install -y openstack-swift-proxy python-swiftclient python-keystoneclient python-keystonemiddleware memcached

(5) Fetch the proxy service configuration file from the Object Storage source repository

curl -o /etc/swift/proxy-server.conf "https://git.openstack.org/cgit/openstack/swift/plain/etc/proxy-server.conf-sample?h=stable/pike"

- Edit the configuration file. After opening it, replace its original contents with the contents of https://git.openstack.org/cgit/openstack/swift/plain/etc/proxy-server.conf-sample?h=stable/pike, then make the following changes:

vi /etc/swift/proxy-server.conf

In the [DEFAULT] section, configure the bind port, user, and configuration directory used by the Swift proxy service:

[DEFAULT]

# bind_ip = 0.0.0.0

bind_port = 8080

# bind_timeout = 30

# backlog = 4096

swift_dir = /etc/swift

user = swift

In the [pipeline:main] section, enable the relevant modules:

[pipeline:main]

pipeline = catch_errors gatekeeper healthcheck proxy-logging cache container_sync bulk ratelimit authtoken keystoneauth container-quotas account-quotas slo dlo versioned_writes proxy-logging proxy-server

In the [app:proxy-server] section, enable automatic account creation:

[app:proxy-server]

use = egg:swift#proxy

account_autocreate = true

In the [filter:keystoneauth] section, configure the operator roles:

[filter:keystoneauth]

use = egg:swift#keystoneauth

operator_roles = admin,user

In the [filter:authtoken] section, configure access to the Keystone Identity service:

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = swift

password = swift

delay_auth_decision = True

In the [filter:cache] section, configure the Memcached location:

[filter:cache]

use = egg:swift#memcache

memcache_servers = controller:11211

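Storage node configuration

Shut down the virtual machine and add a new disk in its settings.

(1) Install the utility packages

yum install xfsprogs rsync -y

(2) Prepare the data disk /dev/sdc

mkfs.xfs /dev/sdc    # format the disk as XFS

mkdir -p /srv/node/sdc    # create the mount point

(3) Edit /etc/fstab and add the following line:

/dev/sdc /srv/node/sdc xfs noatime,nodiratime,nobarrier,logbufs=8 0 2

(4) Mount the disk

mount /srv/node/sdc

(5) Check the disk

df -h

(6) Configure rsync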

vi /etc/rsyncd.conf

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 9.1.1.13

[account]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/account.lock

[container]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/container.lock

[object]

max connections = 2

path = /srv/node/

read only = False

lock file = /var/lock/object.lock

(7) Start the rsyncd service and enable it at boot

systemctl enable rsyncd.service

systemctl start rsyncd.service

(8) Install and configure the Swift storage components

Install the packages:

yum install -y openstack-swift-account openstack-swift-container openstack-swift-object

Fetch the account, container, and object service configuration files from the Object Storage source repository:

curl -o /etc/swift/account-server.conf "https://git.openstack.org/cgit/openstack/swift/plain/etc/account-server.conf-sample?h=stable/pike"

curl -o /etc/swift/container-server.conf "https://git.openstack.org/cgit/openstack/swift/plain/etc/container-server.conf-sample?h=stable/pike"

curl -o /etc/swift/object-server.conf "https://git.openstack.org/cgit/openstack/swift/plain/etc/object-server.conf-sample?h=stable/pike"

(9) Edit the configuration files (replace each original file with the contents of the links above, then modify it as follows):

vi /etc/swift/account-server.conf

[DEFAULT]

bind_ip = 9.1.1.13

bind_port = 6002

# bind_timeout = 30

# backlog = 4096

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vi /etc/swift/container-server.conf

[DEFAULT]

bind_ip = 9.1.1.13

bind_port = 6001

# bind_timeout = 30

# backlog = 4096

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

vi /etc/swift/object-server.conf

[DEFAULT]

bind_ip = 9.1.1.13

bind_port = 6000

# bind_timeout = 30

# backlog = 4096

user = swift

swift_dir = /etc/swift

devices = /srv/node

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

recon_lock_path = /var/lock

(10) Create the directories and change their ownership and permissions

chown -R swift:swift /srv/node

mkdir -p /var/cache/swift

chown -R swift:swift /var/cache/swift

chmod -R 775 /var/cache/swift

控制节点:

(1)创建目录,更改权限创建,分发并初始化环(rings)

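#In /etc/swift/, create the account ring. The storage node has only one disk, so only one replica can be created

#Create the base account.builder file (arguments: partition power 10, i.e. 2^10 partitions; 1 replica; min_part_hours 1)

swift-ring-builder account.builder create 10 1 1

#Add each storage node to the ring

swift-ring-builder account.builder add --region 1 --zone 1 --ip 9.1.1.13 --port 6002 --device sdc --weight 100

#Verify the ring contents

swift-ring-builder account.builder

#Rebalance the ring

swift-ring-builder account.builder rebalance

#Verify the ring contents

swift-ring-builder account.builder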

#In /etc/swift/, create the container ring. The storage node has only one disk, so only one replica can be created

#Create the base container.builder file

swift-ring-builder container.builder create 10 1 1

#Add each storage node to the ring

swift-ring-builder container.builder add --region 1 --zone 1 --ip 9.1.1.13 --port 6001 --device sdc --weight 100

#Verify the ring contents

swift-ring-builder container.builder

#Rebalance the ring

swift-ring-builder container.builder rebalance

#Verify the ring contents

swift-ring-builder container.builder

#In /etc/swift/, create the object ring. The storage node has only one disk, so only one replica can be created

#Create the base object.builder file

swift-ring-builder object.builder create 10 1 1

#Add each storage node to the ring

swift-ring-builder object.builder add --region 1 --zone 1 --ip 9.1.1.13 --port 6000 --device sdc --weight 100

#Verify the ring contents

swift-ring-builder object.builder

#Rebalance the ring

swift-ring-builder object.builder rebalance

#Verify the ring contents

swift-ring-builder object.builder
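With a partition power of 10, each ring has 2^10 = 1024 partitions. With one replica and a single device (sdc), every partition is assigned to that device. The following is a simplified Python model of how Swift picks a partition for a path. It hashes the hash-path prefix, the path, and the suffix with MD5, takes the first 4 bytes as a big-endian unsigned integer, and shifts right by 32 - part_power. This mirrors Swift's hash_path/get_part approach but is not Swift's code. Swift then looks up the devices for that partition in the ring, which is not modeled here. The account, container, and object names are hypothetical.

```python
import hashlib
import struct

PART_POWER = 10                 # from "swift-ring-builder ... create 10 1 1"
PART_COUNT = 1 << PART_POWER    # 2**10 = 1024 partitions
PART_SHIFT = 32 - PART_POWER    # keep only the top PART_POWER bits of 32


def get_partition(prefix, suffix, account, container=None, obj=None):
    """Map /account[/container[/object]] to a partition number (simplified model)."""
    parts = [p for p in (account, container, obj) if p]
    path = "/" + "/".join(parts)
    # MD5 over prefix + path + suffix, as configured in [swift-hash] of swift.conf.
    digest = hashlib.md5((prefix + path + suffix).encode("utf-8")).digest()
    # First 4 bytes as an unsigned 32-bit int, shifted down to PART_POWER bits.
    return struct.unpack_from(">I", digest)[0] >> PART_SHIFT


if __name__ == "__main__":
    # "end"/"start" are the prefix/suffix used in this deployment's swift.conf;
    # AUTH_demo, photos and cat.jpg are hypothetical names.
    part = get_partition("end", "start", "AUTH_demo", "photos", "cat.jpg")
    print(f"partition {part} of {PART_COUNT}")
```

Because the prefix and suffix feed every hash, changing them after data is stored moves every object to a different partition.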

(2) Copy the account.ring.gz, container.ring.gz, and object.ring.gz files to the /etc/swift directory on each storage node and on any additional node that runs the proxy service.

(3) Configure /etc/swift/swift.conf

#Fetch /etc/swift/swift.conf from the Object Storage source repository

curl -o /etc/swift/swift.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/swift.conf-sample?h=stable/pike

vi /etc/swift/swift.conf

[swift-hash]

swift_hash_path_suffix = start

swift_hash_path_prefix = end

[storage-policy:0]

name = Policy-0

default = yes
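The values "start" and "end" work, but they are easy to guess. Swift's installation guide recommends unique values kept secret. The values must not change once the cluster holds data, because they feed every path hash. The following is a minimal Python sketch that generates random values for the [swift-hash] section using the standard secrets module. The 16-byte length is an arbitrary choice, and make_hash_path_values is a hypothetical name.

```python
import secrets


def make_hash_path_values(nbytes=16):
    """Generate random values for the [swift-hash] section of swift.conf (hypothetical helper)."""
    return {
        "swift_hash_path_prefix": secrets.token_hex(nbytes),
        "swift_hash_path_suffix": secrets.token_hex(nbytes),
    }


if __name__ == "__main__":
    # Print a ready-to-paste section; generate once and reuse on every node.
    print("[swift-hash]")
    for key, value in make_hash_path_values().items():
        print(f"{key} = {value}")
```

The same generated values must be placed in the swift.conf copied to every storage and proxy node.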

#Copy swift.conf to the /etc/swift directory on each storage node and on any additional node that runs the proxy service, then set ownership:

chown -R root:swift /etc/swift/*

(4) Start the services

#Controller node

systemctl enable openstack-swift-proxy.service memcached.service

systemctl start openstack-swift-proxy.service memcached.service

#Storage node

systemctl enable openstack-swift-account.service openstack-swift-account-auditor.service openstack-swift-account-reaper.service openstack-swift-account-replicator.service

systemctl start openstack-swift-account.service openstack-swift-account-auditor.service openstack-swift-account-reaper.service openstack-swift-account-replicator.service

systemctl enable openstack-swift-container.service openstack-swift-container-auditor.service openstack-swift-container-replicator.service openstack-swift-container-updater.service

systemctl start openstack-swift-container.service openstack-swift-container-auditor.service openstack-swift-container-replicator.service openstack-swift-container-updater.service

systemctl enable openstack-swift-object.service openstack-swift-object-auditor.service openstack-swift-object-replicator.service openstack-swift-object-updater.service

systemctl start openstack-swift-object.service openstack-swift-object-auditor.service openstack-swift-object-replicator.service openstack-swift-object-updater.service
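Each storage service runs as a main server plus background daemons: an auditor and a replicator for all three, a reaper for accounts, and an updater for containers and objects. Each systemctl command must stay on a single line. The following Python sketch builds the enable/start lines from that table, so a wrapped line or a missing unit is easy to spot. It only prints commands and runs nothing. The names STORAGE_UNITS, unit_names, and systemctl_commands are hypothetical.

```python
# Daemon suffixes per storage service, as used in the commands above.
STORAGE_UNITS = {
    "account": ["", "-auditor", "-reaper", "-replicator"],
    "container": ["", "-auditor", "-replicator", "-updater"],
    "object": ["", "-auditor", "-replicator", "-updater"],
}


def unit_names(service):
    """Full systemd unit names for one storage service."""
    return [f"openstack-swift-{service}{suffix}.service" for suffix in STORAGE_UNITS[service]]


def systemctl_commands():
    """One single-line enable and start command per storage service."""
    commands = []
    for service in STORAGE_UNITS:
        units = " ".join(unit_names(service))
        commands.append(f"systemctl enable {units}")
        commands.append(f"systemctl start {units}")
    return commands


if __name__ == "__main__":
    print("\n".join(systemctl_commands()))
```

The enable and start steps are kept separate rather than using systemctl enable --now. That flag only appeared in systemd 220, and CentOS 7 ships an older systemd.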

(5) Verify

swift stat

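III. System Requirements Analysis

3.1 Feasibility Analysis

The virtualized resources in the OpenStack cloud platform are modeled so that abstract resources can be instantiated and operated on, and they are described at both the service level and the resource level. On this basis, a scheduling algorithm for comprehensive load balancing, driven by real-time monitoring feedback on compute resources, is proposed. The algorithm analyzes in real time the share and weight of each compute resource within the resource pool of the cloud data center and uses them to allocate and schedule virtual machine instances. Comparative experiments on the CloudSim simulation platform verify that the algorithm effectively improves the load-balancing capability of the data center. The system adjusts resources automatically, which improves its stability and execution efficiency.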
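The paragraph above describes the weight-based scheduler only in outline. The following Python sketch is a generic filter-then-weigh placement in the spirit of Nova's FilterScheduler; it is not the algorithm referred to above. Hosts that cannot fit the request are filtered out. Each remaining host is scored by a weighted sum of its free-CPU and free-RAM ratios, and the highest score wins. The Host fields, the 0.5/0.5 weights, and the host names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_vcpus: int
    total_vcpus: int
    free_ram_mb: int
    total_ram_mb: int


# Hypothetical weights: how much each resource's free share counts.
WEIGHTS = {"cpu": 0.5, "ram": 0.5}


def score(host):
    """Weighted share of free resources on a host (higher = less loaded)."""
    cpu_ratio = host.free_vcpus / host.total_vcpus
    ram_ratio = host.free_ram_mb / host.total_ram_mb
    return WEIGHTS["cpu"] * cpu_ratio + WEIGHTS["ram"] * ram_ratio


def pick_host(hosts, vcpus, ram_mb):
    """Filter hosts that can fit the request, then return the highest-scoring one."""
    candidates = [h for h in hosts if h.free_vcpus >= vcpus and h.free_ram_mb >= ram_mb]
    if not candidates:
        return None
    return max(candidates, key=score)


if __name__ == "__main__":
    hosts = [
        Host("compute1", 2, 8, 2048, 8192),  # score 0.25
        Host("compute2", 6, 8, 4096, 8192),  # score 0.625
    ]
    print(pick_host(hosts, vcpus=1, ram_mb=512).name)  # compute2
```

A real scheduler would refresh the free-resource figures from monitoring data before each decision. This sketch takes them as given.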

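3.2 System Function Analysis

Users open the login page and enter their user name and password to reach the OpenStack management interface.

Router management: Admin -> Network -> Routers opens the router management page, where routers can be added, deleted, searched, and edited.

Network management: Admin -> Network -> Networks opens the network management page, where networks can be added, deleted, searched, and edited.

Virtual machine management: Admin -> Compute -> Flavors opens the flavor (instance type) management page, where flavors can be created, deleted, searched, and have their access modified. Under Instances, instances can be created, deleted, and started; once an instance is running, you can log in to the virtual machine and work in it.

Container management: Project -> Object Store -> Containers lists the user's containers and the information they hold.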

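3.3 Non-functional Design

Performance requirements:

- The virtual machines install and run normally in VMware.

- The three virtual machines can ping one another, and all of them can reach the external network.

- The basic functions of the virtual machines work.

Environment requirements:

- VMware 15 or later.

- CentOS 7 on the virtual machines.

- A Windows 10 host.

- VMware networking configured and working.

Service requirements:

- The controller node requires yum, Keystone, Glance, and so on.

- The compute node requires yum, RabbitMQ, Nova, and so on.

- The storage node requires yum, RabbitMQ, Swift, and so on.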

IV. System Design

Detailed design of the system function modules:

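Router management: after the required software is installed, open the router management page and click Create Router. Enter a router name, select the external network configured earlier, and click Create Router to finish. The page offers further operations; choose whichever ones you need.

Network management: after the required software is installed, click a router's name in router management to add an interface. Only the subnet needs to be selected; the IP address can be left blank. This connects the network to the external network. The network management page then shows the network topology, including network information and status.

Virtual machine management: after the required software is installed, open the management page to create an instance. The Details, Source, Flavor, and Networks tabs are required; the others are optional. Once the instance is created, click its name and open the Console to reach the virtual machine's console, where you can log in and use it normally.

Container management: after the required software is installed, run the relevant upload commands, then open the container management page. It lists the container names; clicking a container shows its information, with its contents (the uploaded files) listed alongside.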

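V. System Implementation

5.1 Logging in to the Dashboard

Open a browser, go to http://9.1.1.11/dashboard, and log in with user name admin and password 123456 to enter the management system.

5.2 Tenant and User Management

After logging in, open the user view, which lists all users and allows operations on them.

5.3 Creating a Network

After the network is created from the command line, its information can be viewed in the management system. Creating a router and adding a router interface connects the selected network to the external network, and the Network Topology view shows the corresponding topology diagram.

5.4 Viewing Images

Open the management system to view the available images.

5.5 Launching an Instance

Go to Instances, launch an instance, and open its console to log in and use it normally.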

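VI. Conclusion

Cloud computing is a commercial computing model and service paradigm that emerged after grid computing and distributed computing. Cloud data center resources are heterogeneous and diverse, and deployment architectures are complex. A key research problem is therefore how to manage and allocate the virtual shared resources of a cloud data center dynamically according to user demand, and how to improve resource utilization so as to support the broad adoption of cloud computing.

This paper carried out a basic installation and deployment of OpenStack, currently the most popular open-source cloud computing platform. It completed the installation of Keystone, Glance, Nova, Neutron, and other components, preparing the cloud platform for further development. Many problems came up during installation; all were eventually solved by searching online (Baidu) or by asking classmates and teachers. I learned a great deal and also discovered many of my own shortcomings, which I will work to correct in future study.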
