Hadoop Installation

0. Deployment plan (the host names below are the ones configured in /etc/hosts):

    # hadoop nodes
    192.168.75.128 master
    192.168.75.130 slave1
    192.168.75.131 slave2
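The mapping above has to exist on every node. A minimal sketch of adding the entries without creating duplicates is shown here; the function name `add_host_entry` and the `HOSTS_FILE` override are illustrative assumptions, not part of the original setup.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already mapped.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try this safely.
add_host_entry() {
    local ip="$1"
    local name="$2"
    local file="${HOSTS_FILE:-/etc/hosts}"
    # Look for NAME as a whole field (not a substring) on any non-comment line.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added $name to $file"
    fi
}

# Example (run as root on every node):
#   add_host_entry 192.168.75.128 master
#   add_host_entry 192.168.75.130 slave1
#   add_host_entry 192.168.75.131 slave2
```

Matching on whole fields keeps a name such as `slave1` from being confused with `slave10`.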

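1. Create the hadoop user and grant it sudo rights:

    sudo adduser hadoop
    # you may first need to make the file writable: chmod +w /etc/sudoers
    sudo vim /etc/sudoers
    # line to add in /etc/sudoers:
    hadoop ALL=(ALL:ALL) ALL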

2. Install Java and set the environment variables:

Download the JDK and extract it to /usr/local, then edit /etc/profile as follows:

    # set jdk classpath
    export JAVA_HOME=/usr/local/jdk1.8.0_111
    export JRE_HOME=$JAVA_HOME/jre
    export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH
    export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

Run source /etc/profile to apply the environment variables.
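To confirm that the variables took effect, a small check like the following may help. It is only a sketch: the function name `check_java_home` is made up for illustration, and it verifies no more than that an executable `java` exists under `$JAVA_HOME/bin`.

```shell
# Report whether JAVA_HOME is set and points at a directory containing bin/java.
check_java_home() {
    if [ -z "${JAVA_HOME:-}" ]; then
        echo "JAVA_HOME is not set"
        return 1
    fi
    if [ ! -x "$JAVA_HOME/bin/java" ]; then
        echo "no executable java under $JAVA_HOME/bin"
        return 1
    fi
    echo "JAVA_HOME looks valid: $JAVA_HOME"
}

# Example:
#   . /etc/profile && check_java_home && java -version
```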

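3. Install openssh and generate a key.

    sudo apt-get install openssh-server

Switch to the hadoop user and run:

    ssh-keygen -t rsa
    cat .ssh/id_rsa.pub >> .ssh/authorized_keys

Copy the generated authorized_keys file to the .ssh directory on slave1 and slave2 (other nodes are handled the same way). The ~/.ssh directory must already exist on each slave, otherwise scp creates a regular file named .ssh instead: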

scp .ssh/authorized_keys hadoop@slave1:~/.ssh

scp .ssh/authorized_keys hadoop@slave2:~/.ssh

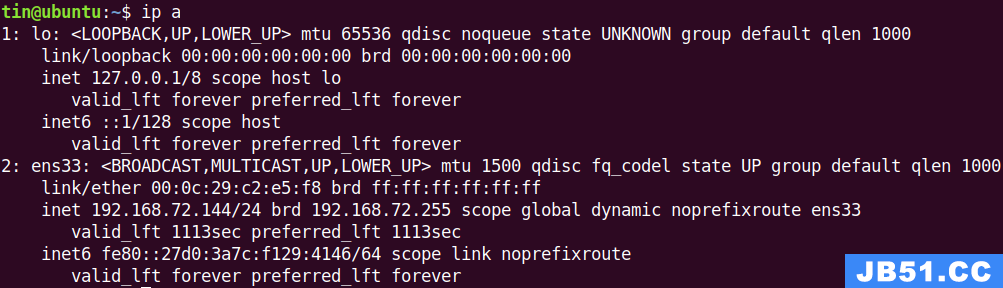

Note: the shell prompt has been configured to display the IP address.

Run ssh slave1 to verify that passwordless login works.
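The same test can be repeated for every node in one pass. The sketch below assumes it is run as the hadoop user on master; the function name `check_passwordless_ssh` is hypothetical. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a missing key shows up as a failure rather than a hang.

```shell
# Try a non-interactive login to each host and report the result.
# Returns non-zero if any host could not be reached without a password.
check_passwordless_ssh() {
    local failed=0
    local host
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}

# Example:
#   check_passwordless_ssh slave1 slave2
# If a host fails although the key was copied, overly open permissions are a
# common cause; on that host try:
#   chmod 700 ~/.ssh && chmod 600 ~/.ssh/authorized_keys
```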

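4. Download and configure Hadoop (perform the following steps on all three machines, or configure one machine and copy the result to the others with scp):

Download the release with wget, for example: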

wget http://apache.fayea.com/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz
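The paths used later (/home/hadoop/hadoop-2.7.3) imply that the archive is unpacked into the hadoop user's home directory. A minimal sketch of that step follows; the helper name `unpack_hadoop` is an assumption for illustration.

```shell
# Unpack a gzip-compressed release tarball into a destination directory,
# creating the directory first if necessary.
unpack_hadoop() {
    local archive="$1"
    local dest="$2"
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}

# Example (as the hadoop user, in the directory containing the download):
#   unpack_hadoop hadoop-2.7.3.tar.gz /home/hadoop
#   ls /home/hadoop/hadoop-2.7.3
```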

Configure the Hadoop environment variables:

    # set hadoop classpath
    export HADOOP_HOME=/home/hadoop/hadoop-2.7.3/
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export HADOOP_PREFIX=$HADOOP_HOME

Configure ./etc/hadoop/core-site.xml:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <!-- master: the host name configured in /etc/hosts -->
        <value>hdfs://master:9000/</value>
      </property>
    </configuration>

Configure ./etc/hadoop/hdfs-site.xml:

    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/home/hadoop/hadoop-2.7.3/dfs/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/home/hadoop/hadoop-2.7.3/dfs/datanode</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>master:9001</value>
      </property>
    </configuration>
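Once core-site.xml and hdfs-site.xml are in place, `hdfs getconf -confKey` can show whether Hadoop actually picks the values up. The wrapper below is only a sketch: `expect_conf` is a hypothetical helper, and it assumes the `hdfs` command is on PATH (for example via `PATH=$HADOOP_HOME/bin:$PATH`, which the environment settings above do not add).

```shell
# Compare the value Hadoop reports for a configuration key with an expected value.
expect_conf() {
    local key="$1"
    local expected="$2"
    local actual
    # An unknown key or a missing hdfs command leaves the value empty.
    actual=$(hdfs getconf -confKey "$key" 2>/dev/null) || actual=""
    if [ "$actual" = "$expected" ]; then
        echo "ok   $key=$actual"
    else
        echo "FAIL $key: expected '$expected', got '$actual'"
        return 1
    fi
}

# Example:
#   expect_conf fs.defaultFS hdfs://master:9000/
#   expect_conf dfs.replication 1
```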

Configure ./etc/hadoop/mapred-site.xml:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>master:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>master:19888</value>
      </property>
    </configuration>

Configure ./etc/hadoop/yarn-site.xml (the class property name must use the same mapreduce_shuffle service name as yarn.nodemanager.aux-services):

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
      </property>
    </configuration>

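Configure the ./etc/hadoop/slaves file (localhost is listed, so master also runs a DataNode and a NodeManager):

    [hadoop@192.168.75.128 hadoop]$ cat slaves
    localhost
    slave1
    slave2

Edit the environment scripts hadoop-env.sh, mapred-env.sh and yarn-env.sh, and add JAVA_HOME to each (use the same JDK path as in /etc/profile):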

# The java implementation to use.

export JAVA_HOME=/usr/local/jdk1.8.0_111
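If only master was configured, the finished directory can be copied to the slaves with scp, as mentioned at the start of this step. The sketch below is one way to do that; `sync_hadoop` and the `DRY_RUN` switch are illustrative assumptions. It is best run before the NameNode is formatted, so that no dfs data directories are copied along.

```shell
# Copy the configured Hadoop directory from this machine to each listed host,
# placing it under the same parent directory. With DRY_RUN=1 the scp commands
# are printed instead of executed.
sync_hadoop() {
    local src="${HADOOP_HOME:-/home/hadoop/hadoop-2.7.3}"
    local host
    src="${src%/}"    # drop a trailing slash so dirname yields the parent directory
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r $src hadoop@$host:$(dirname "$src")/"
        else
            scp -r "$src" "hadoop@$host:$(dirname "$src")/" || return 1
        fi
    done
}

# Example:
#   DRY_RUN=1 sync_hadoop slave1 slave2   # preview the commands
#   sync_hadoop slave1 slave2             # perform the copy
```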

5. Start Hadoop (run these commands on the master node).

A. Format the file system:

/home/hadoop/hadoop-2.7.3/bin/hdfs namenode -format

A message like the following indicates that formatting succeeded:

Storage directory /home/hadoop/hadoop-2.7.3/dfs/namenode has been successfully formatted.

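B. Start the Hadoop cluster (in Hadoop 2.x, start-all.sh still works but is marked deprecated in favor of running start-dfs.sh and then start-yarn.sh):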

/home/hadoop/hadoop-2.7.3/sbin/start-all.sh

Check the running daemons with jps:

    ### master
    [hadoop@192.168.75.128 hadoop]$ jps
    3584 NameNode
    4147 NodeManager
    4036 ResourceManager
    3865 SecondaryNameNode
    3721 DataNode
    ### slave1 and slave2 show only:
    DataNode
    NodeManager
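Comparing the jps listing against the expected daemons by eye is error-prone, since NameNode is a substring of SecondaryNameNode. The sketch below does an exact match on the class-name column; `missing_daemons` is a hypothetical helper that takes the jps output as its first argument.

```shell
# Print every expected daemon name that does not appear in the given jps output.
# jps prints "<pid> <MainClass>", so the second field is compared exactly.
missing_daemons() {
    local jps_output="$1"
    local daemon
    shift
    for daemon in "$@"; do
        if ! printf '%s\n' "$jps_output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}

# Example on master:
#   missing_daemons "$(jps)" NameNode SecondaryNameNode DataNode ResourceManager NodeManager
# Example on slave1 / slave2:
#   missing_daemons "$(jps)" DataNode NodeManager
```

No output means that every listed daemon is running.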

View HDFS (the NameNode web UI) in a browser:

http://192.168.75.128:50070

View MapReduce jobs (the YARN ResourceManager web UI) in a browser:

http://192.168.75.128:8088

C. Stop Hadoop:

/home/hadoop/hadoop-2.7.3/sbin/stop-all.sh