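I want to write a Naive Bayes text classifier.

Because sklearn does not accept features in text form, I am converting them with TfidfVectorizer.

I managed to build such a classifier using only the transformed data as features. The code looks like this: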

    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.feature_selection import SelectPercentile, f_classif
    from sklearn.naive_bayes import GaussianNB

    ### text vectorization--go from strings to lists of numbers
    vectorizer = TfidfVectorizer(sublinear_tf=True, max_df=0.5,
                                 stop_words='english')
    X_train_transformed = vectorizer.fit_transform(X_train_raw['url'])
    X_test_transformed = vectorizer.transform(X_test_raw['url'])

    ### feature selection, because text is super high dimensional and
    ### can be really computationally chewy as a result
    selector = SelectPercentile(f_classif, percentile=1)
    selector.fit(X_train_transformed, y_train_raw)
    X_train = selector.transform(X_train_transformed).toarray()
    X_test = selector.transform(X_test_transformed).toarray()

    # GaussianNB needs dense input, hence the .toarray() calls above
    clf = GaussianNB()
    clf.fit(X_train, y_train_raw)

.....

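Everything works as expected, but I ran into a problem when I wanted to add another feature, e.g. a flag indicating whether the given text contains a specific keyword.

I tried several ways of transforming the 'url' feature and then combining the transformed feature with another boolean feature, but without success.

Assuming I have a pandas DataFrame with two features, 'url' (which I want to transform) and a 'contains_keyword' flag, any hints on how this should be done?

The failing solution looks like this: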

    from sklearn.feature_extraction.text import CountVectorizer

    vectorizer = CountVectorizer(min_df=1)
    X_train_transformed = vectorizer.fit_transform(X_train_raw['url'])
    X_test_transformed = vectorizer.transform(X_test_raw['url'])

    selector = SelectPercentile(f_classif, percentile=1)
    selector.fit(X_train_transformed, y_train_raw)
    X_train_selected = selector.transform(X_train_transformed)
    X_test_selected = selector.transform(X_test_transformed)

    # each row's selected features end up as one Python list in a single column
    X_train_raw['transformed_url'] = X_train_selected.toarray().tolist()
    X_train_without = X_train_raw.drop(['url'], axis=1)
    X_train = X_train_without.values

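This produces rows that contain a boolean flag and a list, which is invalid input for sklearn models. I don't know how to transform it properly. Any help is appreciated.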
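To make the shape problem concrete, here is a minimal sketch of one possible way to get a flat numeric matrix instead: stack the vectorized text and the flag side by side with `scipy.sparse.hstack`. The sample rows and the variable names `X_text` and `flag` are made up for illustration.

```python
import pandas as pd
from scipy.sparse import csr_matrix, hstack
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical sample rows, shaped like the frame described above.
X_train_raw = pd.DataFrame({
    "url": ["googleadapis l google com", "clients1 google com", "translate google pl"],
    "contains_keyword": [True, False, False],
})

vectorizer = CountVectorizer(min_df=1)
X_text = vectorizer.fit_transform(X_train_raw["url"])  # sparse, shape (3, n_terms)

# Turn the boolean flag into a numeric sparse column of shape (n_samples, 1).
flag = csr_matrix(X_train_raw["contains_keyword"].astype(float).values.reshape(-1, 1))

# Stack column-wise: every row is now one flat numeric feature vector.
X_train = hstack([X_text, flag]).tocsr()
print(X_train.shape)
```

The result has one more column than the text matrix alone, and it stays sparse, so it suits estimators that accept sparse input.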

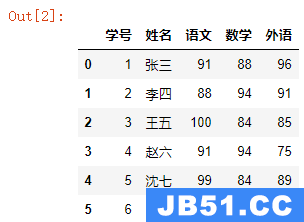

Here is the test data:

    url,target,ads_keyword
    googleadapis l google com,1,True
    googleadapis l google com,1,True
    clients1 google com,1,False
    c go-mpulse net,1,False
    translate google pl,1,False

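target: the target class for classification

ads_keyword: a flag indicating whether the 'url' contains the word 'ads'.

I want to transform 'url' using TfidfVectorizer and use the transformed data together with 'ads_keyword' (and possibly more features in the future) as features for training a Naive Bayes model.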

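Solution:

Here is a demo showing how to combine features and how to tune hyperparameters using GridSearchCV.

Unfortunately, your sample data set is too small to train a real model...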

    try:
        from pathlib import Path
    except ImportError:             # Python 2
        from pathlib2 import Path
    import os
    import re
    from pprint import pprint
    import pandas as pd
    import numpy as np
    import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
    from sklearn.base import BaseEstimator, TransformerMixin
    from sklearn.preprocessing import FunctionTransformer, LabelEncoder, LabelBinarizer, StandardScaler
    from sklearn.model_selection import train_test_split
    from sklearn.feature_selection import SelectPercentile
    from sklearn.feature_extraction import DictVectorizer
    from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
    from sklearn.model_selection import GridSearchCV
    from sklearn.linear_model import SGDClassifier
    from sklearn.naive_bayes import MultinomialNB, GaussianNB
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC
    from sklearn.pipeline import Pipeline, FeatureUnion
    from scipy.sparse import csr_matrix, hstack

    class ColumnSelector(BaseEstimator, TransformerMixin):
        """Select a single DataFrame column, by name or by position."""

        def __init__(self, name=None, position=None,
                     as_cat_codes=False, sparse=False):
            self.name = name
            self.position = position
            self.as_cat_codes = as_cat_codes
            self.sparse = sparse

        def fit(self, X, y=None):
            # stateless: nothing to learn
            return self

        def transform(self, X, **kwargs):
            if self.name is not None:
                col_pos = X.columns.get_loc(self.name)
            elif self.position is not None:
                col_pos = self.position
            else:
                raise Exception('either [name] or [position] parameter must be not-None')
            # categorical columns can be returned as their integer codes
            if self.as_cat_codes and X.dtypes.iloc[col_pos] == 'category':
                ret = X.iloc[:, col_pos].cat.codes
            else:
                ret = X.iloc[:, col_pos]
            # return an (n_samples, 1) sparse column so FeatureUnion can stack it
            if self.sparse:
                ret = csr_matrix(ret.values.reshape(-1,1))
            return ret
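As a rough illustration of the `sparse=True` branch: FeatureUnion concatenates the outputs of its transformers column-wise, so a single column has to be handed over as a 2-D `(n_samples, 1)` matrix rather than a 1-D Series. The snippet below uses a made-up flag column (the values are not from the question's data) to show the shape before and after the reshape.

```python
import pandas as pd
from scipy.sparse import csr_matrix

# Made-up flag values, standing in for the 'ads_keyword' column.
flags = pd.Series([True, True, False, False, False], name="ads_keyword")

print(flags.values.shape)   # 1-D: (5,)

# Same conversion as in ColumnSelector.transform when sparse=True.
col = csr_matrix(flags.values.reshape(-1, 1))
print(col.shape)            # 2-D: (5, 1)
```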

    # build the two feature branches and stack them column-wise
    union = FeatureUnion([
                ('text',
                 Pipeline([
                    ('select', ColumnSelector('url')),
                    #('pct', SelectPercentile(percentile=1)),
                    ('vect', TfidfVectorizer(sublinear_tf=True, max_df=0.5,
                                             stop_words='english')),
                 ]) ),
                ('ads',
                 Pipeline([
                    ('select', ColumnSelector('ads_keyword', sparse=True,
                                              as_cat_codes=True)),
                    #('scale', StandardScaler(with_mean=False)),
                 ]) )
            ])

    pipe = Pipeline([
        ('union', union),
        ('clf', MultinomialNB())
    ])

    # one dict per classifier; keys follow the <step>__<parameter> convention
    param_grid = [
        {
            'union__text__vect': [TfidfVectorizer(sublinear_tf=True,
                                                  max_df=0.5,
                                                  stop_words='english')],
            'clf': [SGDClassifier(max_iter=500)],
            'union__text__vect__ngram_range': [(1,1), (2,5)],
            'union__text__vect__analyzer': ['word','char_wb'],
            'clf__alpha': np.logspace(-5, 0, 6),
            #'clf__max_iter': [500],
        },
        {
            'union__text__vect': [TfidfVectorizer(sublinear_tf=True,
                                                  max_df=0.5,
                                                  stop_words='english')],
            'clf': [MultinomialNB()],
            'union__text__vect__ngram_range': [(1,1), (2,5)],
            'union__text__vect__analyzer': ['word','char_wb'],
            'clf__alpha': np.logspace(-4, 2, 7),
        },
        #{        # NOTE: does NOT support sparse matrices!
        #    'union__text__vect': [TfidfVectorizer(sublinear_tf=True,
        #                                          max_df=0.5,
        #                                          stop_words='english')],
        #    'clf': [GaussianNB()],
        #    'union__text__vect__ngram_range': [(1,1), (2,5)],
        #    'union__text__vect__analyzer': ['word','char_wb'],
        #},
    ]
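The keys in `param_grid` follow scikit-learn's `<step>__<parameter>` naming, nested once per pipeline level. A minimal sketch, assuming a stripped-down stand-in with the same step names but without the ColumnSelector steps (`demo_union` and `demo_pipe` are names invented here), shows how to check which names are valid:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import FeatureUnion, Pipeline

# Simplified stand-in for the pipeline above (no ColumnSelector steps).
demo_union = FeatureUnion([
    ("text", Pipeline([("vect", TfidfVectorizer())])),
])
demo_pipe = Pipeline([("union", demo_union), ("clf", MultinomialNB())])

# get_params() lists every tunable name, including the nested ones.
param_names = sorted(demo_pipe.get_params().keys())
print([name for name in param_names if name.endswith(("ngram_range", "alpha"))])

# The same names are accepted by set_params, which is how a grid search
# applies each candidate combination.
demo_pipe.set_params(union__text__vect__ngram_range=(1, 2), clf__alpha=0.1)
```

A misspelled key fails at fit time, so printing the names first can save a long search from aborting.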

    gs_kwargs = dict(scoring='roc_auc', cv=3, n_jobs=1, verbose=2)

    # df is the DataFrame holding the 'url', 'ads_keyword' and 'target' columns
    X_train, X_test, y_train, y_test = \
        train_test_split(df[['url','ads_keyword']], df['target'], test_size=0.33)

    grid = GridSearchCV(pipe, param_grid=param_grid, **gs_kwargs)
    grid.fit(X_train, y_train)

    # prediction
    predicted = grid.predict(X_test)
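For a quick end-to-end check, here is a self-contained sketch on made-up data. It is a variant resting on several assumptions, not the code above: it swaps the custom ColumnSelector and FeatureUnion for scikit-learn's `ColumnTransformer` (available since version 0.20), uses a toy frame invented for illustration, and scores by the default accuracy so that it runs on a handful of rows.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Toy data invented for illustration; a real data set would be much larger.
toy = pd.DataFrame({
    "url": [
        "googleadapis l google com", "ads tracker example com",
        "pagead doubleclick net", "ads banner example net",
        "clients1 google com", "translate google pl",
        "docs python org", "news example org",
    ],
    "ads_keyword": [True, True, False, True, False, False, False, False],
    "target": [1, 1, 1, 1, 0, 0, 0, 0],
})
toy["ads_keyword"] = toy["ads_keyword"].astype(float)  # numeric flag for the classifier

features = ColumnTransformer([
    ("text", TfidfVectorizer(), "url"),        # 1-D column of strings -> TF-IDF matrix
    ("ads", "passthrough", ["ads_keyword"]),   # 2-D numeric column, kept as-is
])
toy_pipe = Pipeline([("features", features), ("clf", MultinomialNB())])

toy_grid = GridSearchCV(
    toy_pipe,
    param_grid={
        "clf__alpha": [0.1, 1.0],
        "features__text__ngram_range": [(1, 1), (1, 2)],
    },
    cv=2,
)
toy_grid.fit(toy[["url", "ads_keyword"]], toy["target"])

print(toy_grid.best_params_)
toy_predicted = toy_grid.predict(toy[["url", "ads_keyword"]])
print(toy_predicted)
```

With so few rows the scores mean little; the point is only that text and flag features reach the classifier as one matrix, and that further columns can be added as extra entries in the transformer list.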