I want to read from a SQL file and use CountVectorizer to get word occurrence counts.

import re

import pandas as pd

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer

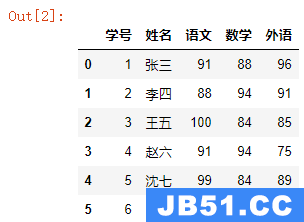

df = pd.read_sql(q, dlconn)

print(df)

count_vect = CountVectorizer()

X_train_counts= count_vect.fit_transform(df)

print(X_train_counts.shape)

print(count_vect.vocabulary_)

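This gives the output 'cat': 1, 'dog': 0.

It seems to take only the name of the column, animal, and count from there.

How can I get it to access the entire column and produce a chart showing every word in that column along with its frequency?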

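Solution:

According to the CountVectorizer docs, the fit_transform() method expects an iterable of strings.

It cannot handle a DataFrame directly.

Iterating over a DataFrame returns the column labels, not the values. I suggest you use df.itertuples() instead.

Try something like this: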
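To make the difference concrete, here is a minimal sketch. The DataFrame is a hypothetical stand-in for the query result, and the column name animal is assumed from the question; it is not the asker's real data.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical stand-in for the query result: one text column named "animal".
df = pd.DataFrame({"animal": ["my big dog", "my lazy cat"]})

# Iterating over a DataFrame walks its column labels, not its rows,
# so the vectorizer only ever sees the string "animal".
labels = list(df)
cv_wrong = CountVectorizer()
cv_wrong.fit_transform(df)
print(labels)                # ['animal']
print(cv_wrong.vocabulary_)  # {'animal': 0}

# itertuples(index=False, name=None) yields one plain tuple per row,
# so row[0] is the text stored in the first column.
rows = list(df.itertuples(index=False, name=None))
cv_right = CountVectorizer()
cv_right.fit_transform([row[0] for row in rows])
print(rows)                          # [('my big dog',), ('my lazy cat',)]
print(sorted(cv_right.vocabulary_))  # ['big', 'cat', 'dog', 'lazy', 'my']
```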

value_list = [row[0] for row in df.itertuples(index=False, name=None)]

print(value_list)

print(type(value_list))

print(type(value_list[0]))

X_train_counts = count_vect.fit_transform(value_list)

Each value in value_list should be of type str.

Let us know if that helps.

Here is a small example:
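If the column may contain missing values or non-string entries, one possible way to guarantee str values is to select the column by name and convert it. This is only a sketch: the column name animal and the sample data are assumptions, and dropping missing rows is one choice among several.

```python
import pandas as pd

# Hypothetical data with a missing value in the assumed "animal" column.
df = pd.DataFrame({"animal": ["my big dog", None, "my lazy cat"]})

# Drop missing rows, force every remaining value to str, return a plain list.
value_list = df["animal"].dropna().astype(str).tolist()

print(value_list)  # ['my big dog', 'my lazy cat']
```

The resulting list can be passed to fit_transform() in the same way as the itertuples() version.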

>>> import pandas as pd

>>> df = pd.DataFrame(['my big dog', 'my lazy cat'])

>>> df

             0

0   my big dog

1  my lazy cat

>>> value_list = [row[0] for row in df.itertuples(index=False, name=None)]

>>> value_list

['my big dog', 'my lazy cat']

>>> from sklearn.feature_extraction.text import CountVectorizer

>>> cv = CountVectorizer()

>>> x_train = cv.fit_transform(value_list)

>>> x_train

<2x5 sparse matrix of type '<class 'numpy.int64'>'

with 6 stored elements in Compressed Sparse Row format>

>>> x_train.toarray()

array([[1, 0, 1, 0, 1],

[0, 1, 0, 1, 1]], dtype=int64)

>>> cv.vocabulary_

{'my': 4, 'big': 0, 'dog': 2, 'lazy': 3, 'cat': 1}

Now you can display the word counts for each row (that is, for each input string separately):

>>> for word, col in cv.vocabulary_.items():

...     for row in range(x_train.shape[0]):

...         print('word:{:10s} | row:{:2d} | count:{:2d}'.format(word, row, x_train[row,col]))

word:my         | row: 0 | count: 1

word:my         | row: 1 | count: 1

word:big        | row: 0 | count: 1

word:big        | row: 1 | count: 0

word:dog        | row: 0 | count: 1

word:dog        | row: 1 | count: 0

word:lazy       | row: 0 | count: 0

word:lazy       | row: 1 | count: 1

word:cat        | row: 0 | count: 0

word:cat        | row: 1 | count: 1

You can also display the total count for each word (summed over all rows):

>>> x_train_sum = x_train.sum(axis=0)

>>> x_train_sum

matrix([[1, 1, 1, 1, 2]], dtype=int64)

>>> for word, col in cv.vocabulary_.items():

...     print('word:{:10s} | count:{:2d}'.format(word, x_train_sum[0, col]))

word:my         | count: 2

word:big        | count: 1

word:dog        | count: 1

word:lazy       | count: 1

word:cat        | count: 1

>>> with open('my-file.csv', 'w') as f:

...     for word, col in cv.vocabulary_.items():

...         f.write('{};{}\n'.format(word, x_train_sum[0, col]))

This should clarify how to use the existing tools.
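Since the question asked for a chart of each word and its frequency, here is one possible way to collect the totals into a sorted table. It reuses the two example strings from above; the names docs, totals and freq are introduced only for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer

# Same two example strings as in the session above.
docs = ["my big dog", "my lazy cat"]

cv = CountVectorizer()
counts = cv.fit_transform(docs)

# Sum each column of the sparse matrix: one total per vocabulary entry.
totals = np.asarray(counts.sum(axis=0)).ravel()

# vocabulary_ maps word -> column index, so look up each word's total.
freq = pd.Series({word: int(totals[col]) for word, col in cv.vocabulary_.items()})

# Most frequent words first.
freq = freq.sort_values(ascending=False)
print(freq)
```

If matplotlib is installed, calling freq.plot.bar() on this Series should draw a simple bar chart of the frequencies.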