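Oracle 12cR1 RAC cluster installation series:

Oracle 12cR1 RAC Cluster Installation (1): Environment Preparation

Oracle 12cR1 RAC Cluster Installation (2): Installation with the Graphical Interface

Oracle 12cR1 RAC Cluster Installation (3): Silent Installation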

------------------------------------------------------------------------------------------------------------

Basic environment

| Item | Value |
| --- | --- |
| Operating system version | RedHat 6.7 |
| Database version | 12.1.0.2 |
| Database name | testdb |
| Database instances | testdb1, testdb2 |

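(I) Server hardware requirements

| Item | Requirement |
| --- | --- |
| Network adapters | Each server needs at least two NICs. Public NIC: at least 1 GbE. Private NIC: at least 1 GbE, 10 GbE recommended; it carries the internal communication between cluster nodes. Note: the interface names must be identical on all nodes. For example, if node 1 uses eth0 as its public NIC, node 2 must also use eth0 as its public NIC. |
| Memory | Depends on whether GI is installed: at least 1 GB for a single-instance database only; at least 4 GB when GI is installed. |
| Temporary disk space | At least 1 GB of /tmp space |
| Local disk space | At least 8 GB for the Grid home (Oracle recommends 100 GB to leave room for later patching); at least 12 GB for the Grid base, which mainly holds the Oracle Clusterware and Oracle ASM log files; an additional 10 GB under the Grid base for TFA data; a further 6.4 GB if the Oracle database software is installed. Recommendation: if disk space allows, give GI and Oracle 100 GB each. |
| Swap space | The swap space requirements are as follows: |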

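(II) Server IP address plan

| Server name | Public IP | Virtual IP (VIP) | SCAN IP | Private IP |
| --- | --- | --- | --- | --- |
| node1 | eth0: 192.168.10.11 | 192.168.10.13 | 192.168.10.10 | eth1: 10.10.10.11 |
| node2 | eth0: 192.168.10.12 | 192.168.10.14 | 192.168.10.10 (same) | eth1: 10.10.10.12 |

Notes:

1. Two IP subnets are used in total. The public, virtual, and SCAN addresses must be on the same subnet (192.168.10.0/24); the private addresses are on a separate subnet (10.10.10.0/24).

2. Host names must not contain an underscore ("_"); for example, host_node1 is invalid. A hyphen ("-") is allowed.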
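The two notes above can be checked mechanically before going further. The following is a minimal sketch; the function names `valid_hostname` and `same_subnet24` are hypothetical helpers written for this guide, and the subnet test assumes a /24 mask as in the plan above.

```shell
# Hypothetical helpers; not part of any Oracle tooling.

# Succeeds when the host name is non-empty and contains no underscore.
valid_hostname() {
    case "$1" in
        ""|*_*) return 1 ;;
        *)      return 0 ;;
    esac
}

# Succeeds when two IPv4 addresses share the same /24 network,
# i.e. their first three octets are identical.
same_subnet24() {
    [ "${1%.*}" = "${2%.*}" ]
}

# Example with the addresses planned for node1.
valid_hostname "node1" && echo "node1: host name ok"
same_subnet24 192.168.10.11 192.168.10.13 && echo "public IP and VIP share a /24"
same_subnet24 192.168.10.11 10.10.10.11 || echo "private IP is on a different /24"
```

The string comparison on the first three octets only works for /24 networks; a different mask would need real bit arithmetic.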

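(III) Configure the host network

(1) Change the host name

Node 1 is used as the example. The change takes effect after the host is rebooted.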

[root@template ~]# vim /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=node1

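(2) Change the IP addresses

The public and private IP addresses must be set on each server. The public IP of node 1 is used as the example: its public NIC is eth0, and the eth0 configuration is changed as follows.

    # Enter the NIC configuration directory
    cd /etc/sysconfig/network-scripts/
    # Edit the eth0 configuration
    vim ifcfg-eth0
    DEVICE=eth0
    HWADDR=00:0c:29:f8:80:bb
    TYPE=Ethernet
    ONBOOT=yes
    IPADDR=192.168.10.11
    NETMASK=255.255.255.0

The other NICs are configured the same way. Restart the network service when the changes are complete.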

[root@node1 ~]# service network restart

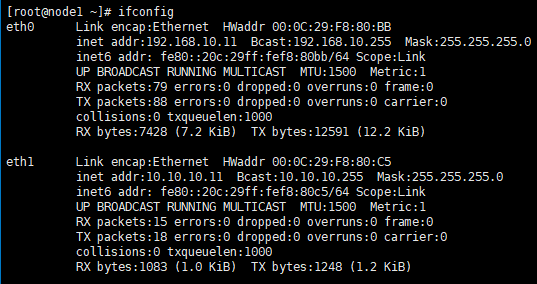

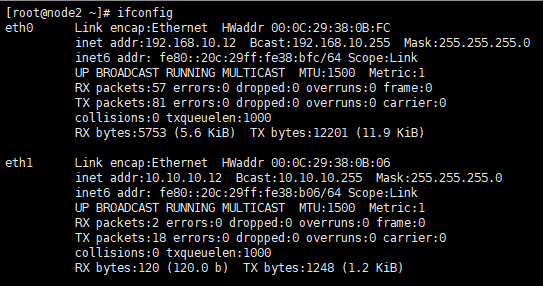

The final NIC configuration of the two servers is shown below.

node1:

node2:

(3) Edit /etc/hosts; make the same change on both nodes

    [root@node1 ~]# vim /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.10.11 node1
    192.168.10.12 node2
    192.168.10.13 node1-vip
    192.168.10.14 node2-vip
    192.168.10.10 node-scan
    10.10.10.11 node1-priv
    10.10.10.12 node2-priv
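Because both nodes must carry the same entries, it can help to confirm that every planned name is present in the file. This is a small sketch; `check_hosts` is a hypothetical helper, and it only checks that a name appears as a field after the address column, not that the address is correct.

```shell
# check_hosts FILE NAME...: report names that are missing from a hosts-format file.
# Returns non-zero when at least one name is missing.
check_hosts() {
    file=$1
    shift
    missing=0
    for name in "$@"; do
        # Skip comment lines, stop at inline comments, compare whole fields only
        # so that "node1" does not match "node1-vip".
        if ! awk -v n="$name" '
                /^[ \t]*#/ { next }
                { for (i = 2; i <= NF; i++) { if ($i ~ /^#/) break; if ($i == n) found = 1 } }
                END { exit found ? 0 : 1 }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return $missing
}
```

On each node it could be called as `check_hosts /etc/hosts node1 node2 node1-vip node2-vip node-scan node1-priv node2-priv`.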

(4) Disable the firewall

(5) Disable SELinux

Change the parameter SELINUX=enforcing to SELINUX=disabled.

    [root@node1 ~]# vim /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of these two values:
    #     targeted - Targeted processes are protected,
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted

Reboot the server for the change to take effect.
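The SELinux edit can also be scripted instead of made in an editor. The sketch below uses a hypothetical helper, `set_selinux_mode`, that rewrites only the `SELINUX=` line of a given file. The firewall step lists no commands in this guide; on RHEL 6 the usual ones are `service iptables stop` followed by `chkconfig iptables off`, mentioned here as an assumption about what was intended.

```shell
# set_selinux_mode FILE MODE: rewrite the SELINUX= line of an SELinux config file.
set_selinux_mode() {
    file=$1
    mode=$2
    tmp=$(mktemp) || return 1
    # ^SELINUX= does not match SELINUXTYPE=, so that line is left untouched.
    # Writing back with cat keeps the original file's owner and permissions.
    sed "s/^SELINUX=.*/SELINUX=$mode/" "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Typical call as root on each node (a reboot is still required afterwards):
#   set_selinux_mode /etc/selinux/config disabled
```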

(IV) Create users and groups, create the software installation directories, and configure the user environment variables

(1) Create the oracle and grid users and the related groups

    /usr/sbin/groupadd -g 1000 oinstall
    /usr/sbin/groupadd -g 1020 asmadmin
    /usr/sbin/groupadd -g 1021 asmdba
    /usr/sbin/groupadd -g 1022 asmoper
    /usr/sbin/groupadd -g 1031 dba
    /usr/sbin/groupadd -g 1032 oper
    useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid
    useradd -u 1101 -g oinstall -G dba,oper oracle

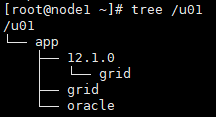

(2) Create the installation directories for GI and the Oracle software, and set their ownership

The installation directory structure is as follows:

(3) Configure the environment variables for grid

    [grid@node1 ~]$ vi .bash_profile
    # Append the following to the end of the file
    export TMP=/tmp
    export TMPDIR=$TMP
    export ORACLE_SID=+ASM1
    export ORACLE_BASE=/u01/app/grid
    export ORACLE_HOME=/u01/app/grid
    export PATH=/usr/sbin:$PATH
    export PATH=$ORACLE_HOME/bin:$PATH
    export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
    export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
    umask 022

Run "source .bash_profile" to apply the environment variables.

Note: on node 2, set ORACLE_SID=+ASM2 instead.

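(4) Configure the environment variables for oracle

    [oracle@node1 ~]$ vim .bash_profile
    # Append the following to the end of the file
    export TMP=/tmp
    export TMPDIR=$TMP
    export ORACLE_SID=testdb1
    export ORACLE_BASE=/u01/app/oracle
    export ORACLE_HOME=$ORACLE_BASE/product/db_1
    export TNS_ADMIN=$ORACLE_HOME/network/admin
    export PATH=/usr/sbin:$PATH
    export PATH=$ORACLE_HOME/bin:$PATH
    export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
    export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
    umask 022

Run "source .bash_profile" to apply the environment variables.

Note: on node 2, set ORACLE_SID=testdb2 instead.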
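Since the only per-node difference in the two profiles is the instance number, the SID can be derived from the host name rather than edited by hand. A minimal sketch, assuming hosts are named `node<N>` as in this guide; `node_number`, `asm_sid`, and `db_sid` are hypothetical helper names.

```shell
# node_number HOST: print N for a host named node<N>, or fail for any other name.
node_number() {
    case "$1" in
        node[0-9]*) ;;
        *) echo "unexpected host name: $1" >&2; return 1 ;;
    esac
    n=${1#node}
    case "$n" in
        *[!0-9]*) echo "unexpected host name: $1" >&2; return 1 ;;
    esac
    echo "$n"
}

# ORACLE_SID for the grid user (+ASM1, +ASM2, ...).
asm_sid() {
    n=$(node_number "$1") || return 1
    echo "+ASM$n"
}

# ORACLE_SID for the oracle user (testdb1, testdb2, ...).
db_sid() {
    n=$(node_number "$1") || return 1
    echo "testdb$n"
}

# In .bash_profile this could replace the fixed value, for example:
#   export ORACLE_SID=$(asm_sid "$(hostname -s)")
```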

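(V) Configure kernel parameters and resource limits

(1) Configure the operating system kernel parameters

Append the following parameters to the end of /etc/sysctl.conf:

    kernel.msgmnb = 65536
    kernel.msgmax = 65536
    kernel.shmmax = 68719476736
    kernel.shmall = 4294967296
    fs.aio-max-nr = 1048576
    fs.file-max = 6815744
    # The next two entries override the kernel.shmall and kernel.shmmax values set above
    kernel.shmall = 2097152
    kernel.shmmax = 2002012160
    kernel.shmmni = 4096
    kernel.sem = 250 32000 100 128
    net.ipv4.ip_local_port_range = 9000 65500
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048586
    net.ipv4.tcp_wmem = 262144 262144 262144
    net.ipv4.tcp_rmem = 4194304 4194304 4194304
    kernel.panic_on_oops = 1

Run sysctl -p to apply the changes.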
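Before running the installer's own checks, the file can be sanity-checked against the minimum values. The sketch below reads a sysctl.conf-style file directly; `conf_value` and `check_min` are hypothetical helpers. When a key is assigned more than once, the last assignment is reported, which mirrors how sysctl -p applies a file from top to bottom.

```shell
# conf_value FILE KEY: print the last value assigned to KEY in a sysctl.conf-style file.
conf_value() {
    awk -F= -v k="$2" '
        /^[ \t]*#/ { next }
        {
            key = $1
            gsub(/[ \t]/, "", key)
            if (key == k) {
                val = $2
                gsub(/[ \t]+/, " ", val)
                sub(/^ /, "", val)
                sub(/ $/, "", val)
            }
        }
        END { if (val != "") print val }' "$1"
}

# check_min FILE KEY MIN: succeed when the first number assigned to KEY is at least MIN.
check_min() {
    value=$(conf_value "$1" "$2")
    first=${value%% *}
    [ -n "$first" ] && [ "$first" -ge "$3" ]
}

# Example:
#   check_min /etc/sysctl.conf fs.file-max 6815744 || echo "fs.file-max too low"
```

Only the first field of multi-value parameters such as kernel.sem is compared; checking the remaining fields would need a small extension.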

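(2) Configure resource limits for the oracle and grid users

Append the following parameters to the end of /etc/security/limits.conf:

    grid soft nproc 2047
    grid hard nproc 16384
    grid soft nofile 1024
    grid hard nofile 65536
    oracle soft nproc 2047
    oracle hard nproc 16384
    oracle soft nofile 1024
    oracle hard nofile 65536

(3) Edit /etc/pam.d/login and append the following line to the end: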

session required pam_limits.so
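When these files are edited by script and the script may run more than once, appending blindly creates duplicate lines. A minimal sketch of an idempotent append; `append_once` is a hypothetical helper, not a system utility.

```shell
# append_once FILE LINE: add LINE to FILE unless an identical line is already present.
append_once() {
    # -x matches whole lines, -F treats the text literally, -q suppresses output.
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example (as root):
#   append_once /etc/security/limits.conf "grid soft nproc 2047"
#   append_once /etc/pam.d/login "session required pam_limits.so"
```

The comparison is exact, so a line that differs only in spacing is treated as a different line.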

(VI) Install software packages

Use yum to install any missing packages. The required packages are:

    binutils-2.20.51.0.2-5.11.el6 (x86_64)
    compat-libcap1-1.10-1 (x86_64)
    compat-libstdc++-33-3.2.3-69.el6 (x86_64)
    compat-libstdc++-33-3.2.3-69.el6 (i686)
    gcc-4.4.4-13.el6 (x86_64)
    gcc-c++-4.4.4-13.el6 (x86_64)
    glibc-2.12-1.7.el6 (i686)
    glibc-2.12-1.7.el6 (x86_64)
    glibc-devel-2.12-1.7.el6 (i686)
    ksh
    libgcc-4.4.4-13.el6 (i686)
    libgcc-4.4.4-13.el6 (x86_64)
    libstdc++-4.4.4-13.el6 (i686)
    libstdc++-devel-4.4.4-13.el6 (x86_64)
    libstdc++-devel-4.4.4-13.el6 (i686)
    libaio-0.3.107-10.el6 (x86_64)
    libaio-0.3.107-10.el6 (i686)
    libaio-devel-0.3.107-10.el6 (x86_64)
    libaio-devel-0.3.107-10.el6 (i686)
    libXext-1.1 (x86_64)
    libXext-1.1 (i686)
    libXtst-1.0.99.2 (x86_64)
    libXtst-1.0.99.2 (i686)
    libX11-1.3 (x86_64)
    libX11-1.3 (i686)
    libXau-1.0.5 (x86_64)
    libXau-1.0.5 (i686)
    libxcb-1.5 (x86_64)
    libxcb-1.5 (i686)
    libXi-1.3 (x86_64)
    libXi-1.3 (i686)
    make-3.81-19.el6
    sysstat-9.0.4-11.el6 (x86_64)

Here x86_64 denotes the 64-bit packages and i686 the 32-bit packages; install only the ones that match your operating system.

Install them with yum.
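The install command itself is not shown in this guide. As a sketch of one way to build it, the helper below prints a yum command that requests each package for a given architecture using yum's `name.arch` form. `yum_install_cmd` and the `PACKAGES` variable are hypothetical names, and the package names are taken from the list above with versions left for yum to resolve.

```shell
# Package names from the requirement list, without versions.
PACKAGES="binutils compat-libcap1 compat-libstdc++-33 gcc gcc-c++ glibc glibc-devel ksh libgcc libstdc++ libstdc++-devel libaio libaio-devel libXext libXtst libX11 libXau libxcb libXi make sysstat"

# yum_install_cmd ARCH PKG...: print a yum command requesting each package for ARCH.
yum_install_cmd() {
    arch=$1
    shift
    cmd="yum install -y"
    for pkg in "$@"; do
        cmd="$cmd $pkg.$arch"
    done
    echo "$cmd"
}

# Print the command for a 64-bit system; review it, then run it as root.
yum_install_cmd x86_64 $PACKAGES
```

The function only prints the command so that it can be reviewed first; nothing is installed by the sketch itself.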

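(VII) Configure shared disks

Oracle's size requirements for the disk group that holds the OCR are as follows:

| File types stored | Number of volumes (disks) | Volume size |
| --- | --- | --- |
| Voting files with external redundancy | 1 | At least 300 MB for each voting file volume |
| Oracle Cluster Registry (OCR) with external redundancy and the Grid Infrastructure Management Repository | 1 | At least 5.9 GB for the OCR volume that contains the Grid Infrastructure Management Repository (5.2 GB + 300 MB voting files + 400 MB OCR), plus 500 MB per node for clusters of more than four nodes. For example, a six-node cluster should be allocated 6.9 GB. |
| Oracle Clusterware files (OCR and voting files) and the Grid Infrastructure Management Repository, with redundancy provided by Oracle software | 3 | At least 400 MB for each OCR volume. For example, for a 6-node cluster the size is 14.1 GB. |

The disk plan for this installation is:

| Disk group name | Number of disks | Size per disk | Purpose |
| --- | --- | --- | --- |
| OCR | 3 | 10GB | Holds the OCR and the GI management repository |
| DATA | 2 | 10GB | Holds the database data |
| ARCH | 1 | 10GB | Holds the archived logs |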

(1) Ways to configure the shared disks

There are two ways to configure the disks with udev. The first partitions each disk with fdisk to obtain /dev/sd*1 and then binds the partition to a raw device through udev. The second binds the device by its WWID. Production systems usually use the second method.

(2) Method 1: use raw devices directly

(2.1) Partition the disks; run on one node only

    # Partition on node 1, using /dev/sdb as the example:
    [root@node1 ~]# fdisk /dev/sdb
    The number of cylinders for this disk is set to 3824.
    There is nothing wrong with that, but this is larger than 1024,
    and could in certain setups cause problems with:
    1) software that runs at boot time (e.g., old versions of LILO)
    2) booting and partitioning software from other OSs
       (e.g., DOS FDISK, OS/2 FDISK)
    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-3824, default 1):
    Using default value 1
    Last cylinder or +size or +sizeM or +sizeK (1-3824, default 3824):
    Using default value 3824
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    Syncing disks.

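(2.2) Configure the raw devices on both nodes

    [root@node1 ~]# vi /etc/udev/rules.d/60-raw.rules
    # Append the following
    ACTION=="add", KERNEL=="sdb1", RUN+="/bin/raw /dev/raw/raw1 %N"
    ACTION=="add", KERNEL=="sdc1", RUN+="/bin/raw /dev/raw/raw2 %N"
    ACTION=="add", KERNEL=="sdd1", RUN+="/bin/raw /dev/raw/raw3 %N"
    ACTION=="add", KERNEL=="sde1", RUN+="/bin/raw /dev/raw/raw4 %N"
    ACTION=="add", KERNEL=="sdf1", RUN+="/bin/raw /dev/raw/raw5 %N"
    ACTION=="add", KERNEL=="sdg1", RUN+="/bin/raw /dev/raw/raw6 %N"
    KERNEL=="raw[1]", MODE="0660", OWNER="grid", GROUP="asmadmin"
    KERNEL=="raw[2]", MODE="0660", OWNER="grid", GROUP="asmadmin"
    KERNEL=="raw[3]", MODE="0660", OWNER="grid", GROUP="asmadmin"
    KERNEL=="raw[4]", MODE="0660", OWNER="grid", GROUP="asmadmin"
    KERNEL=="raw[5]", MODE="0660", OWNER="grid", GROUP="asmadmin"
    KERNEL=="raw[6]", MODE="0660", OWNER="grid", GROUP="asmadmin"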
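The rules follow a fixed pattern, one binding line and one ownership line per partition, so they can be generated rather than typed. A minimal sketch; `raw_rules` is a hypothetical helper, and the owner, group, and mode are the ones this guide uses for the grid user.

```shell
# raw_rules PARTITION...: print udev rules that bind each partition to /dev/raw/rawN,
# followed by one ownership rule per raw device.
raw_rules() {
    n=0
    for part in "$@"; do
        n=$((n + 1))
        # %%N prints a literal %N, which udev expands to the device node.
        printf 'ACTION=="add", KERNEL=="%s", RUN+="/bin/raw /dev/raw/raw%d %%N"\n' "$part" "$n"
    done
    i=1
    while [ "$i" -le "$n" ]; do
        printf 'KERNEL=="raw%d", MODE="0660", OWNER="grid", GROUP="asmadmin"\n' "$i"
        i=$((i + 1))
    done
}

# Example: review the output, then append it to /etc/udev/rules.d/60-raw.rules as root.
raw_rules sdb1 sdc1 sdd1 sde1 sdf1 sdg1
```

The ownership rules use the plain device name (`raw1`) rather than a bracket pattern so that the output stays correct if more than nine devices are listed.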

(2.3) Start the raw devices; run on both nodes

    [root@node1 ~]# start_udev

(2.4) Check the raw devices on both nodes. If a node does not show the raw device information below, reboot that node.

    [root@node1 ~]# raw -qa
    /dev/raw/raw1: bound to major 8, minor 17
    /dev/raw/raw2: bound to major 8, minor 33
    /dev/raw/raw3: bound to major 8, minor 49
    /dev/raw/raw4: bound to major 8, minor 65
    /dev/raw/raw5: bound to major 8, minor 81
    /dev/raw/raw6: bound to major 8, minor 97

(3) Method 2: bind the devices by WWID

(3.1) Edit the /etc/scsi_id.config file; do this on both nodes

(3.2) Write the disk WWID information to the 99-oracle-asmdevices.rules file; do this on both nodes
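The commands for this step are not shown in the guide. The sketch below prints one rule per disk from a device letter and a WWID; `asm_rule` is a hypothetical helper, and the rule layout (`BUS=="scsi"`, owner grid, group asmadmin, mode 0660) is an assumption based on the device listing later in this section, not a confirmed copy of the author's file.

```shell
# asm_rule LETTER WWID: print one udev rule that names the matching disk /dev/asm-disk<LETTER>.
asm_rule() {
    # $name stays literal here; udev substitutes it when the rule is evaluated.
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", NAME="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$2" "$1"
}

# Example loop (as root), reading each WWID with the same scsi_id call the rule uses:
#   for i in b c d e f g; do
#       asm_rule "$i" "$(/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i)"
#   done >> /etc/udev/rules.d/99-oracle-asmdevices.rules
```

Because the WWID identifies the disk itself, the resulting names stay stable even if the kernel assigns the /dev/sd* letters in a different order on the other node.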

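(3.3) Check the 99-oracle-asmdevices.rules file; do this on both nodes

    [root@node1 ~]# cd /etc/udev/rules.d/
    [root@node1 rules.d]# more 99-oracle-asmdevices.rules
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c293f718a0dcf1f7b410fb9fd1d9", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c296f46877bf6cff9febd7700fb9", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c2902c030ca8a0b0a4a32ab547c7", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c2982ad4757618bd0d06d54d04b8", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29872b79e70266a992e788836b6", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29b1260d00b8faeb3786092143a", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

(3.4) Start the devices; run on both nodes

(3.5) Confirm that the disks were added successfully

    [root@node1 rules.d]# cd /dev
    [root@node1 dev]# ls -l asm*
    brw-rw---- 1 grid asmadmin 8, 16 Aug 13 05:31 asm-diskb
    brw-rw---- 1 grid asmadmin 8, 32 Aug 13 05:31 asm-diskc
    brw-rw---- 1 grid asmadmin 8, 48 Aug 13 05:31 asm-diskd
    brw-rw---- 1 grid asmadmin 8, 64 Aug 13 05:31 asm-diske
    brw-rw---- 1 grid asmadmin 8, 80 Aug 13 05:31 asm-diskf
    brw-rw---- 1 grid asmadmin 8, 96 Aug 13 05:31 asm-diskg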

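(VIII) Configure user equivalence

The Oracle installation media already include a tool for configuring SSH trust between the nodes for the grid and oracle users, which makes this step straightforward.

(1) Configure user equivalence for grid

(1.1) Unpack the grid installation package; run on node 1

(1.2) Configure trust between the nodes

    [grid@node1 sshsetup]$ pwd
    /home/grid/grid/sshsetup
    [grid@node1 sshsetup]$ ls
    sshUserSetup.sh
    [grid@node1 sshsetup]$ ./sshUserSetup.sh -hosts "node1 node2" -user grid -advanced

The configuration log is as follows: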

1 The output of this script is also logged into /tmp/sshUserSetup_2019-08-13-06-12-30.log 2 Hosts are node1 node2 3 user is grid 4 Platform:- Linux 5 Checking if the remote hosts are reachable 6 PING node1 (10.11) 56(84) bytes of data. 7 64 bytes from node1 (10.11): icmp_seq=1 ttl=64 time=0.014 ms 8 2 ttl=0.043 9 3 ttl=0.032 10 4 ttl=0.040 11 5 ttl=0.042 12 13 --- node1 ping statistics --- 14 5 packets transmitted,1)">5 received,1)">0% packet loss, 3999ms 15 rtt min/avg/max/mdev = 0.014/0.034/0.043/0.011 16 PING node2 (10.12) 17 64 bytes from node2 (10.12): icmp_seq=3.05 18 0.716 19 0.807 20 1.37 21 0.704 22 23 --- node2 24 4007ms 25 rtt min/avg/max/mdev = 0.704/1.331/3.053/0.896 26 Remote host reachability check succeeded. 27 The following hosts are reachable: node1 node2. 28 The following hosts are not reachable: . 29 All hosts are reachable. Proceeding further... 30 firsthost node1 31 numhosts 2 32 The script will setup SSH connectivity from the host node1 to all 33 the remote hosts. After the script is executed,the user can use SSH to run 34 commands on the remote hosts or copy files between this host node1 35 and the remote hosts without being prompted passwords or confirmations. 36 37 NOTE : 38 As part of the setup procedure,this script will use ssh and scp to copy 39 files between the local host and the remote hosts. Since the script does not 40 store passwords,you may be prompted the passwords during the execution of 41 the script whenever ssh or is invoked. 42 43 NOTE 44 AS PER SSH REQUIREMENTS,THIS SCRIPT WILL SECURE THE USER HOME DIRECTORY 45 AND THE .ssh DIRECTORY BY REVOKING GROUP AND WORLD WRITE PRIVILEDGES TO THESE 46 directories. 47 48 Do you want to continue and let the script make the above mentioned changes (yes/no)? 49 yes 50 51 The user chose yes 52 Please specify if you want to specify a passphrase for the private key this script will create for the local host. Passphrase is used to encrypt the private key and makes SSH much more secure. 
Type 'yes' or no' and then press enter. In case you press ',you would need to enter the passphrase whenever the script executes . 53 The estimated number of times the user would be prompted for a passphrase is 4. In addition,1)">if the private-public files are also newly created,the user would have to specify the passphrase on one additional occasion. 54 Enter '. 55 56 57 58 Creating .ssh directory on local host,1)"> not present already 59 Creating authorized_keys on local host 60 Changing permissions on authorized_keys to 644 61 Creating known_hosts 62 Changing permissions on known_hosts to 63 Creating config 64 If a config file exists already at /home/grid/.ssh/config,it would be backed up to /home/grid/.ssh/config.backup. 65 Removing old private/public keys on local host 66 Running SSH keygen on local host 67 Enter passphrase (empty no passphrase): 备注:输入回车 68 Enter same passphrase again: 备注:输入回车 69 Generating public/private rsa key pair. 70 Your identification has been saved in /home/grid/.id_rsa. 71 Your public key has been saved id_rsa.pub. 72 The key fingerprint is: 73 a0:48:eb:ab:7d:39:0d:cf:d2:29:49:cd:f0:a0:85:9d grid@node1 74 The keys randomart image is: 75 +--[ RSA 1024]----+ 76 | | 77 | | 78 | .o .. | 79 | ..oE. . | 80 | oo.* S | 81 | .. o + | 82 | .. O . | 83 | . .B * | 84 |..o. + | 85 +-----------------+ 86 Creating . directory and setting permissions on remote host node1 87 THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME DIRECTORY FOR grid. THIS IS AN SSH REQUIREMENT. 88 The script would create ~grid/.ssh/config file on remote host node1. If a config file exists already at ~grid/. 89 The user may be prompted a password here since the script would be running SSH on host node1. 90 Warning: Permanently added node1,192.168.10.11 (RSA) to the list of known hosts. 91 grid@node1s password: 备注:输入节点1 grid的密码 92 Done with creating . directory and setting permissions on remote host node1. 93 Creating . 
directory and setting permissions on remote host node2 94 95 The script would create ~grid/.file on remote host node2. If a config 96 The user may be prompted a password here since the script would be running SSH on host node2. 97 Warning: Permanently added node2,192.168.10.12 98 grid@node2s password: 备注:输入节点2 grid的密码 99 Done with creating . directory and setting permissions on remote host node2. 100 Copying local host public key to the remote host node1 101 The user may be prompted for a password or passphrase here since the script would be using SCP host node1. 102 grid@node1s password: 备注:输入节点1 grid的密码 103 Done copying local host public key to the remote host node1 104 Copying local host public key to the remote host node2 105 The user may be prompted host node2. 106 grid@node2s password: 备注:输入节点2 grid的密码 107 Done copying local host public key to the remote host node2 108 Creating keys on remote host node1 if they do not exist already. This is required to setup SSH on host node1. 109 110 Creating keys on remote host node2 not exist already. This is required to setup SSH on host node2. 111 Generating public/112 Your identification has been saved in .113 Your public key has been saved 114 115 3e:55:4b:9f:63:a3:6c:1f:4c:ca:aa:d2:d1:93:97:46 grid@node2 116 The key117 +--[ RSA 118 | | 119 | | 120 | o | 121 | oEo . | 122 | S..o..B | 123 | ...+o+* o | 124 | .o. +* o | 125 | . .. o . . | 126 | .... . | 127 +-----------------+ 128 Updating authorized_keys on remote host node1 129 Updating known_hosts 130 The script will run SSH on the remote machine node1. The user may be prompted for a passphrase here in case the private key has been encrypted with a passphrase. 131 Updating authorized_keys on remote host node2 132 Updating known_hosts 133 The script will run SSH on the remote machine node2. The user may be prompted 134 cat: /home/grid/.ssh/known_hosts.tmp: No such or directory 135 ssh/authorized_keys.tmp: No such 136 SSH setup is complete. 
137 138 ------------------------------------------------------------------------ 139 Verifying SSH setup 140 =================== 141 The script will now run the date command on the remote nodes using ssh 142 to verify if is setup correctly. IF THE SETUP IS CORRECTLY SETUP,143 THERE SHOULD BE NO OUTPUT OTHER THAN THE DATE AND SSH SHOULD NOT ASK FOR 144 PASSWORDS. If you see any output other than date or are prompted the 145 password,1)"> is not setup correctly and you will need to resolve the 146 issue and set up again. 147 The possible causes failure could be: 148 1. The server settings in /etc/ssh/sshd_config file do not allow 149 user grid. 150 . The server may have disabled public key based authentication. 151 3. The client public key on the server may be outdated. 152 4. ~grid or ~grid/. on the remote host may not be owned by grid. 153 5. User may not have passed -shared option shared remote users or 154 may be passing the -shared option for non-shared remote users. 155 6. If there is output in addition to the date156 it may be a security alert shown as part of company policy. Append the 157 additional text to the <OMS HOME>/sysman/prov/resources/ignoreMessages.txt 158 ------------------------------------------------------------------------ 159 --node1:-- 160 Running /usr/bin/ssh -x -l grid node1 to verify SSH connectivity has been setup from local host to node1. 161 IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE,IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for a passphrase may be OK but being prompted a password is ERROR. 162 The script will run SSH on the remote machine node1. The user may be prompted 163 Tue Aug 06:12:55 HKT 2019 164 ------------------------------------------------------------------------ 165 --node2:-- 166 Running /usr/bin/ssh -x -l grid node2 to verify SSH connectivity has been setup from local host to node2. 
167 IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE,1)">168 The script will run SSH on the remote machine node2. The user may be prompted 169 Tue Aug 58 HKT 170 ------------------------------------------------------------------------ 171 ------------------------------------------------------------------------ 172 Verifying SSH connectivity has been setup from node1 to node1 173 IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE,IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. 174 Tue Aug 175 ------------------------------------------------------------------------ 176 ------------------------------------------------------------------------ 177 Verifying SSH connectivity has been setup from node1 to node2 178 179 Tue Aug 59 HKT 180 ------------------------------------------------------------------------ 181 -Verification from complete- 182 SSH verification complete.

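(2) Configure user equivalence for oracle

Node trust for the oracle user is set up in the same way as for grid. The sshUserSetup.sh tool is located in the Oracle installation package.

    [oracle@node1 sshsetup]$ ./sshUserSetup.sh -hosts "node1 node2" -user oracle -advanced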
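After the tool has run for a user, equivalence can be re-checked at any time by running `date` on every node without allowing a password prompt, which is the same test the tool performs at the end of its log. A minimal sketch; `check_equivalence` and the `SSH_CMD` override are hypothetical names, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
# SSH_CMD can be overridden, for example with a stub function, when testing.
SSH_CMD=${SSH_CMD:-ssh}

# check_equivalence HOST...: run `date` on each host with password prompts disabled.
# Returns non-zero when at least one host cannot be reached without a password.
check_equivalence() {
    failed=0
    for host in "$@"; do
        if $SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return $failed
}

# Run as grid and again as oracle, on each node:
#   check_equivalence node1 node2
```

With `BatchMode=yes` the client fails immediately instead of asking for a password, so a missing key shows up as FAILED rather than as a hanging prompt.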

(IX) Pre-installation checks

(1) Install the cvuqdisk package; do this on both nodes

    [root@node1 grid]# cd grid/rpm/
    [root@node1 rpm]# ls
    cvuqdisk-1.0.9-1.rpm
    [root@node1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm
    Preparing...                ########################################### [100%]
    Using default group oinstall to install package
       1:cvuqdisk               ########################################### [100%]

Copy the cvuqdisk package from node 1 to node 2 with scp and install it there as well.

(2) Run the pre-installation check; running it on node 1 is sufficient

    [root@node1 grid]# su - grid
    [grid@node1 ~]$ cd grid/
    [grid@node1 grid]$ ls
    install  response  rpm  runcluvfy.sh  runInstaller  sshsetup  stage  welcome.html
    [grid@node1 grid]$ ./runcluvfy.sh stage -pre crsinst -n node1,node2 -fixup -verbose>check.log
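The verbose report is long, so it is easier to search check.log for failures than to read it end to end. A minimal sketch; `failed_checks` is a hypothetical helper that relies only on the word "failed" appearing in result lines, as it does in the cluvfy output.

```shell
# failed_checks FILE: print, with line numbers, every line of a cluvfy log that reports a failure.
# Returns non-zero when no such line exists.
failed_checks() {
    grep -n -i 'failed' "$1"
}

# Example, run in the directory that holds check.log:
#   if failed_checks check.log; then
#       echo "review the items above before installing"
#   else
#       echo "no failed checks found"
#   fi
```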

Make sure every item passes. The check results are as follows.

1 Performing pre-checks cluster services setup 2 Checking node reachability... 4 5 Check: Node reachability from node node1" 6 Destination Node Reachable? 7 ------------------------------------ ------------------------ 8 node1 yes 9 node2 yes 10 Result: Node reachability check passed from node 11 13 Checking user equivalence... 14 15 Check: User equivalence for user 16 Node Name Status 17 ------------------------------------ ------------------------ 18 node2 passed 19 node1 passed 20 Result: User equivalence check passed 21 22 Checking node connectivity... 23 24 Checking hosts config ... 25 26 ------------------------------------ ------------------------ 29 30 Verification of the hosts config successful 31 32 33 Interface information for node Name IP Address Subnet Gateway Def. Gateway HW Address MTU 35 ------ --------------- --------------- --------------- --------------- ----------------- ------ 36 eth0 10.11 10.0 0.0.0.0 0.1 00:0C:29:F8:80:BB 1500 37 eth1 10.11 10.0 80:C5 38 eth2 0.109 0.0 80:CF 39 40 41 Interface information node2 42 43 ------ --------------- --------------- --------------- --------------- ----------------- ------ 44 eth0 10.12 38:0B:FC 45 eth1 10.12 38:0B:06 46 eth2 0.108 10 48 49 Check: Node connectivity of subnet 192.168.10.0 50 Source Destination Connected? 51 ------------------------------ ------------------------------ ---------------- 52 node1[10.11] node2[] yes 53 Result: Node connectivity passed for subnet with node(s) node1,node2 54 55 56 Check: TCP connectivity of subnet 57 Source Destination Connected? 58 ------------------------------ ------------------------------ ---------------- 59 node1 : 10.11 node1 : passed 60 node2 : 10.12 node1 : 61 node1 : 10.11 node2 : 62 node2 : 10.12 node2 : 63 Result: TCP connectivity check passed 64 65 66 Check: Node connectivity of subnet 10.10.10.0 67 Source Destination Connected? 
68 ------------------------------ ------------------------------ ---------------- 69 node1[10.11] node2[] yes 70 Result: Node connectivity passed 71 72 73 Check: TCP connectivity of subnet 74 Source Destination Connected? 75 ------------------------------ ------------------------------ ---------------- 76 node1 : 10.11 node1 : passed 77 node2 : 10.12 node1 : 78 node1 : 10.11 node2 : 79 node2 : 10.12 node2 : 80 Result: TCP connectivity check passed 81 82 83 Check: Node connectivity of subnet 192.168.0.0 84 Source Destination Connected? 85 ------------------------------ ------------------------------ ---------------- 86 node1[0.109] node2[0.108 87 Result: Node connectivity passed 88 89 90 Check: TCP connectivity of subnet 91 Source Destination Connected? 92 ------------------------------ ------------------------------ ---------------- 93 node1 : 0.109 node1 : 0.109 94 node2 : 0.108 node1 : 95 node1 : 0.109 node2 : 96 node2 : 0.108 node2 : 97 Result: TCP connectivity check passed 98 99 100 Interfaces found on subnet " that are likely candidates VIP are: 101 node1 eth2:0.109 102 node2 eth2:0.108 103 104 Interfaces found on subnet a private interconnect are: 105 node1 eth0:10.11 106 node2 eth0:10.12 107 108 Interfaces found on subnet 109 node1 eth1:110 node2 eth1:111 Checking subnet mask consistency... 112 Subnet mask consistency check passed 113 Subnet mask consistency check passed 114 Subnet mask consistency check passed 115 Subnet mask consistency check passed. 116 117 Result: Node connectivity check passed 118 119 Checking multicast communication... 120 121 Checking subnet " for multicast communication with multicast group 224.0.0.251122 Check of subnet passed. 123 124 Check of multicast communication passed. 
125 126 Check: Total memory 127 Node Name Available Required Status 128 ------------ ------------------------ ------------------------ ---------- 129 node2 3.729GB (3910180.0KB) 4GB (.0KB) failed 130 node1 131 Result: Total memory check failed 132 133 Check: Available memory 135 ------------ ------------------------ ------------------------ ---------- 136 node2 3.4397GB (3606804.0KB) 50MB (51200.0KB) passed 137 node1 3.1282GB (3280116.0KB) 50MB (138 Result: Available memory check passed 139 140 Check: Swap space 141 142 ------------ ------------------------ ------------------------ ---------- 143 node2 3.8594GB (4046844.0KB) 3910180.0KB) passed 144 node1 145 Result: Swap space check passed 146 147 Check: Free disk space for node2:/usr,node2:/var,node2:/etc,node2:/sbin" 148 Path Node Name Mount point Available Required Status 149 ---------------- ------------ ------------ ------------ ------------ ------------ 150 /usr node2 / 44.1611GB 65MB passed 151 /var node2 / 152 /etc node2 / 153 /sbin node2 / 154 Result: Free disk space check passed 155 156 Check: Free disk space node1:/usr,node1:/var,node1:/etc,node1:/sbin157 158 ---------------- ------------ ------------ ------------ ------------ ------------ 159 /usr node1 / 43.4079GB 65MB passed 160 /var node1 / 161 /etc node1 / 162 /sbin node1 / 163 Result: Free disk space check passed 164 165 Check: Free disk space node2:/tmp166 167 ---------------- ------------ ------------ ------------ ------------ ------------ 168 /tmp node2 /tmp .1611GB 1GB passed 169 Result: Free disk space check passed 170 171 Check: Free disk space node1:/tmp173 ---------------- ------------ ------------ ------------ ------------ ------------ 174 /tmp node1 /tmp .4062GB 1GB passed 175 Result: Free disk space check passed 176 177 Check: User existence Node Name Status Comment 179 ------------ ------------------------ ------------------------ 180 node2 passed exists(1100) 181 node1 passed exists(182 183 Checking for multiple users with UID value 
1100 184 Result: Check passed 185 Result: User existence check passed 186 187 Check: Group existence oinstall188 189 ------------ ------------------------ ------------------------ 190 node2 passed exists 191 node1 passed exists 192 Result: Group existence check passed 193 194 Check: Group existence dba195 196 ------------ ------------------------ ------------------------ 197 198 199 Result: Group existence check passed 200 201 Check: Membership of user in group [as Primary] 202 Node Name User Exists Group Exists User Group Primary Status 203 ---------------- ------------ ------------ ------------ ------------ ------------ 204 node2 yes yes yes yes passed 205 node1 yes yes yes yes passed 206 Result: Membership check [as Primary] passed 207 208 Check: Membership of user 209 Node Name User Exists Group Exists User Group Status 210 ---------------- ------------ ------------ ------------ ---------------- 211 node2 yes yes yes passed 212 node1 yes yes yes passed 213 Result: Membership check passed 214 215 Check: Run level 216 Node Name run level Required Status 217 ------------ ------------------------ ------------------------ ---------- 218 node2 5 3,1)"> passed 219 node1 220 Result: Run level check passed 221 222 Check: Hard limits maximum open file descriptors223 Node Name Type Available Required Status 224 ---------------- ------------ ------------ ------------ ---------------- 225 node2 hard 65536 passed 226 node1 hard 227 Result: Hard limits check passed 228 229 Check: Soft limits 230 231 ---------------- ------------ ------------ ------------ ---------------- 232 node2 soft 1024 passed 233 node1 soft 234 Result: Soft limits check passed 235 236 Check: Hard limits maximum user processes237 238 ---------------- ------------ ------------ ------------ ---------------- 239 node2 hard 16384 240 node1 hard 241 Result: Hard limits check passed 242 243 Check: Soft limits 244 245 ---------------- ------------ ------------ ------------ ---------------- 246 node2 soft 2047 
247 node1 soft 248 Result: Soft limits check passed 249 250 Check: System architecture 251 252 ------------ ------------------------ ------------------------ ---------- 253 node2 x86_64 x86_64 passed 254 node1 x86_64 x86_64 passed 255 Result: System architecture check passed 256 257 Check: Kernel version 258 259 ------------ ------------------------ ------------------------ ---------- 260 node2 2.6.32-573.el6.x86_64 32 passed 261 node1 262 Result: Kernel version check passed 263 264 Check: Kernel parameter semmsl265 Node Name Current Configured Required Status Comment 266 ---------------- ------------ ------------ ------------ ------------ ------------ 267 node1 250 250268 node2 269 Result: Kernel parameter check passed 270 271 Check: Kernel parameter semmns272 273 ---------------- ------------ ------------ ------------ ------------ ------------ 274 node1 32000 32000275 node2 276 Result: Kernel parameter check passed 277 278 Check: Kernel parameter semopm279 280 ---------------- ------------ ------------ ------------ ------------ ------------ 281 node1 100 100282 node2 283 Result: Kernel parameter check passed 284 285 Check: Kernel parameter semmni286 287 ---------------- ------------ ------------ ------------ ------------ ------------ 288 node1 128 289 node2 290 Result: Kernel parameter check passed 291 292 Check: Kernel parameter shmmax293 294 ---------------- ------------ ------------ ------------ ------------ ------------ 295 node1 2002012160 passed 296 node2 297 Result: Kernel parameter check passed 298 299 Check: Kernel parameter shmmni300 301 ---------------- ------------ ------------ ------------ ------------ ------------ 302 node1 4096 303 node2 304 Result: Kernel parameter check passed 305 306 Check: Kernel parameter shmall307 308 ---------------- ------------ ------------ ------------ ------------ ------------ 309 node1 2097152 391018 passed 310 node2 311 Result: Kernel parameter check passed 312 313 Check: Kernel parameter file-max314 315 
---------------- ------------ ------------ ------------ ------------ ------------
node1 6815744 passed
node2
Result: Kernel parameter check passed

Check: Kernel parameter ip_local_port_range

---------------- ------------ ------------ ------------ ------------ ------------
node1 between 9000 & 65500 between 65535 passed
node2 between
Result: Kernel parameter check passed

Check: Kernel parameter rmem_default

---------------- ------------ ------------ ------------ ------------ ------------
node1 262144
node2
Result: Kernel parameter check passed

Check: Kernel parameter rmem_max

---------------- ------------ ------------ ------------ ------------ ------------
node1 4194304
node2
Result: Kernel parameter check passed

Check: Kernel parameter wmem_default

---------------- ------------ ------------ ------------ ------------ ------------
node1
node2
Result: Kernel parameter check passed

Check: Kernel parameter wmem_max

---------------- ------------ ------------ ------------ ------------ ------------
node1 1048586
node2
Result: Kernel parameter check passed

Check: Kernel parameter aio-max-nr

---------------- ------------ ------------ ------------ ------------ ------------
node1 1048576
node2
Result: Kernel parameter check passed

Check: Kernel parameter panic_on_oops

---------------- ------------ ------------ ------------ ------------ ------------
node1 1
node2
Result: Kernel parameter check passed

Check: Package existence binutils

------------ ------------------------ ------------------------ ----------
node2 binutils-5.43.el6 binutils- passed
node1 binutils-
Result: Package existence check passed

Check: Package existence compat-libcap1

------------ ------------------------ ------------------------ ----------
node2 compat-libcap1-1 compat-libcap1-1.10 passed
node1 compat-libcap1-
Result: Package existence check passed

Check: Package existence compat-libstdc++-33(x86_64)

------------ ------------------------ ------------------------ ----------
node2 compat-libstdc++-33(x86_64)-69.el6 compat-libstdc++- passed
node1 compat-libstdc++-
Result: Package existence check passed

Check: Package existence libgcc(x86_64)

------------ ------------------------ ------------------------ ----------
node2 libgcc(x86_64)-7-23.el6 libgcc(x86_64)-
node1 libgcc(x86_64)-
Result: Package existence check passed

Check: Package existence libstdc++(x86_64)

------------ ------------------------ ------------------------ ----------
node2 libstdc++(x86_64)-23.el6 libstdc++(x86_64)- passed
node1 libstdc++(x86_64)-
Result: Package existence check passed

Check: Package existence libstdc++-devel(x86_64)

------------ ------------------------ ------------------------ ----------
node2 libstdc++-devel(x86_64)-23.el6 libstdc++-devel(x86_64)-
node1 libstdc++-devel(x86_64)-
Result: Package existence check passed

Check: Package existence sysstat

------------ ------------------------ ------------------------ ----------
node2 sysstat-27.el6 sysstat- passed
node1 sysstat-
Result: Package existence check passed

Check: Package existence gcc

------------ ------------------------ ------------------------ ----------
node2 23.el6 passed
node1
Result: Package existence check passed

Check: Package existence gcc-c++

------------ ------------------------ ------------------------ ----------
node2 23.el6
node1
Result: Package existence check passed

Check: Package existence ksh

------------ ------------------------ ------------------------ ----------
node2 ksh ksh passed
node1 ksh ksh passed
Result: Package existence check passed

Check: Package existence make

------------ ------------------------ ------------------------ ----------
node2 20.el6 3.81
node1
Result: Package existence check passed

Check: Package existence glibc(x86_64)

------------ ------------------------ ------------------------ ----------
node2 glibc(x86_64)-1.212.el6_10.3 glibc(x86_64)-2.12 passed
node1 glibc(x86_64)-
Result: Package existence check passed

Check: Package existence glibc-devel(x86_64)

------------ ------------------------ ------------------------ ----------
node2 glibc-devel(x86_64)-3 glibc-devel(x86_64)-
node1 glibc-devel(x86_64)-
Result: Package existence check passed

Check: Package existence libaio(x86_64)

------------ ------------------------ ------------------------ ----------
node2 libaio(x86_64)-10.el6 libaio(x86_64)-107 passed
node1 libaio(x86_64)-
Result: Package existence check passed

Check: Package existence libaio-devel(x86_64)

------------ ------------------------ ------------------------ ----------
node2 libaio-devel(x86_64)-10.el6 libaio-devel(x86_64)-
node1 libaio-devel(x86_64)-
Result: Package existence check passed

Check: Package existence nfs-utils

------------ ------------------------ ------------------------ ----------
node2 nfs-utils-1.2.64.el6 nfs-utils-15
node1 nfs-utils-
Result: Package existence check passed

Checking availability of ports 6200,6100" required for component Oracle Notification Service (ONS)
Node Name Port Number Protocol Available Status
---------------- ------------ ------------ ------------ ----------------
node2 6200 TCP yes successful
node1
node2 6100
node1
Result: Port availability check passed for ports

Checking availability of ports 42424Oracle Cluster Synchronization Services (CSSD)

---------------- ------------ ------------ ------------ ----------------
node2 42424 TCP yes successful
node1
Result: Port availability check passed

Checking 0
Result: Check 0

Check: Current group ID
Result: Current group ID check passed

Starting check consistency of primary group of root user

------------------------------------ ------------------------

Check for consistency of root users primary group passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

Checking existence of NTP configuration file /etc/ntp.conf across nodes
Node Name File exists?
------------------------------------ ------------------------

The NTP configuration is available on all nodes
NTP configuration existence check passed
No NTP Daemons or Services were found to be running
PRVF-5507 : NTP daemon or service is not running on any node but NTP configuration exists on the following node(s):

Result: Clock synchronization check using Network Time Protocol(NTP) failed

Checking Core name pattern consistency...
Core name pattern consistency check passed.

Checking to make sure user " is not in root group

------------ ------------------------ ------------------------
node2 passed does not exist
node1 passed does not exist
Result: User " is not part of group. Check passed

Check default user creation mask
Node Name Available Required Comment
------------ ------------------------ ------------------------ ----------
node2 0022 0022 passed
node1
Result: Default user creation mask check passed
Checking integrity of /etc/resolv.conf

Checking the " to make sure only one of domain' and search entries is defined

WARNING:
PRVF-5640 : Both ' entries are present on the following nodes: node1,1)">

Check for integrity of

Check: Time zone consistency
Result: Time zone consistency check passed

Checking integrity of name service switch configuration /etc/nsswitch.conf ...
Checking if hosts" entry is consistent across nodes...
Checking make sure that only one entry is defined
More than one " entry does not exist in any file
All nodes have same " entry defined
Check for integrity of name service switch configuration

Checking daemon avahi-daemon is not configured and running

Check: Daemon not configured
Node Name Configured Status
------------ ------------------------ ------------------------
node2 no passed
node1 no passed
Daemon not configured check passed for process

Check: Daemon not running
Node Name Running? Status
------------ ------------------------ ------------------------

Daemon not running check passed

Starting check for /dev/shm mounted as temporary system ...

Check system passed

Starting check for /boot mount

Check

Starting check zeroconf check ...

ERROR:

PRVE-10077 : NOZEROCONF parameter was not specified or was not set to ' /etc/sysconfig/network" on node
PRVE-

Check zeroconf check failed

Pre-check cluster services setup was unsuccessful on all the nodes.

******************************************************************************************
Following is the list of fixable prerequisites selected to fix this session
******************************************************************************************

-------------- --------------- ----------------
Check failed. Failed on nodes Reboot required?
-------------- --------------- ----------------
zeroconf check node2,node1 no

Execute /tmp/CVU_12.1.0.2.0_grid/runfixup.sh" as root user on nodes to perform the fix up operations manually

Press ENTER key to continue after execution of " has completed on nodes

Fix: zeroconf check

------------------------------------ ------------------------
node2 failed
node1 failed

PRVG-9023 : Manual fix up command " was not issued by root user on node

PRVG-

Result: zeroconf check" could not be fixed on nodes
Fix up operations for selected fixable prerequisites were unsuccessful on nodes "
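
The zeroconf check in the output above fails with PRVE-10077 because NOZEROCONF is not set in /etc/sysconfig/network, and the automatic fix-up fails with PRVG-9023 because runfixup.sh was not run as root. As an alternative to the fix-up script, the parameter can be set by hand on each node. The following is only a minimal sketch: the helper name `ensure_nozeroconf` is hypothetical (it is not part of cluvfy or runfixup.sh), and it assumes GNU sed, as shipped with RedHat 6.7.

```shell
# Hypothetical helper: make sure a network config file contains
# NOZEROCONF=yes, without ever duplicating the line.
ensure_nozeroconf() {
    file="$1"
    if grep -q '^NOZEROCONF=' "$file"; then
        # Parameter already present: force its value to yes.
        sed -i 's/^NOZEROCONF=.*/NOZEROCONF=yes/' "$file"
    else
        # If the last line lacks a trailing newline, add one first
        # so the new parameter starts on its own line.
        if [ -n "$(tail -c1 "$file")" ]; then
            echo >> "$file"
        fi
        # Parameter missing: append it.
        echo 'NOZEROCONF=yes' >> "$file"
    fi
}

# Usage, as root on every node (node1 and node2):
#   cp /etc/sysconfig/network /etc/sysconfig/network.bak
#   ensure_nozeroconf /etc/sysconfig/network
```

After changing the file on both nodes, restart the network service (`service network restart`, as in the network configuration step) and rerun the pre-installation check to confirm that the zeroconf check passes.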

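Next steps: installing Grid Infrastructure and the RDBMS software and creating the database are covered in parts (2) and (3) of this series, listed at the top of this document (installation with the graphical interface, and silent installation).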

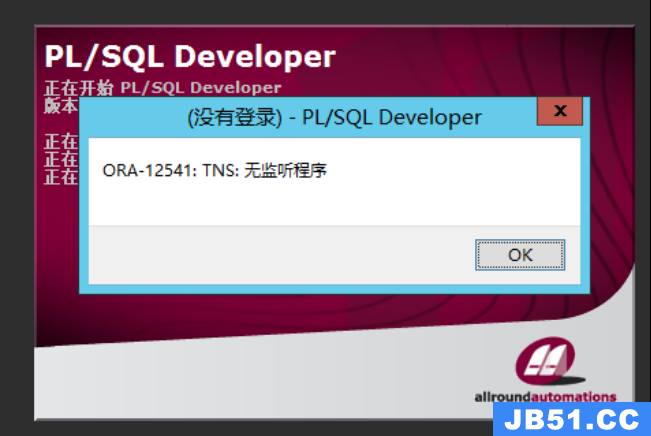

文章浏览阅读8.7k次,点赞2次,收藏17次。此现象一般定位到远...

文章浏览阅读8.7k次,点赞2次,收藏17次。此现象一般定位到远... 文章浏览阅读2.8k次。mysql脚本转化为oracle脚本_mysql建表语...

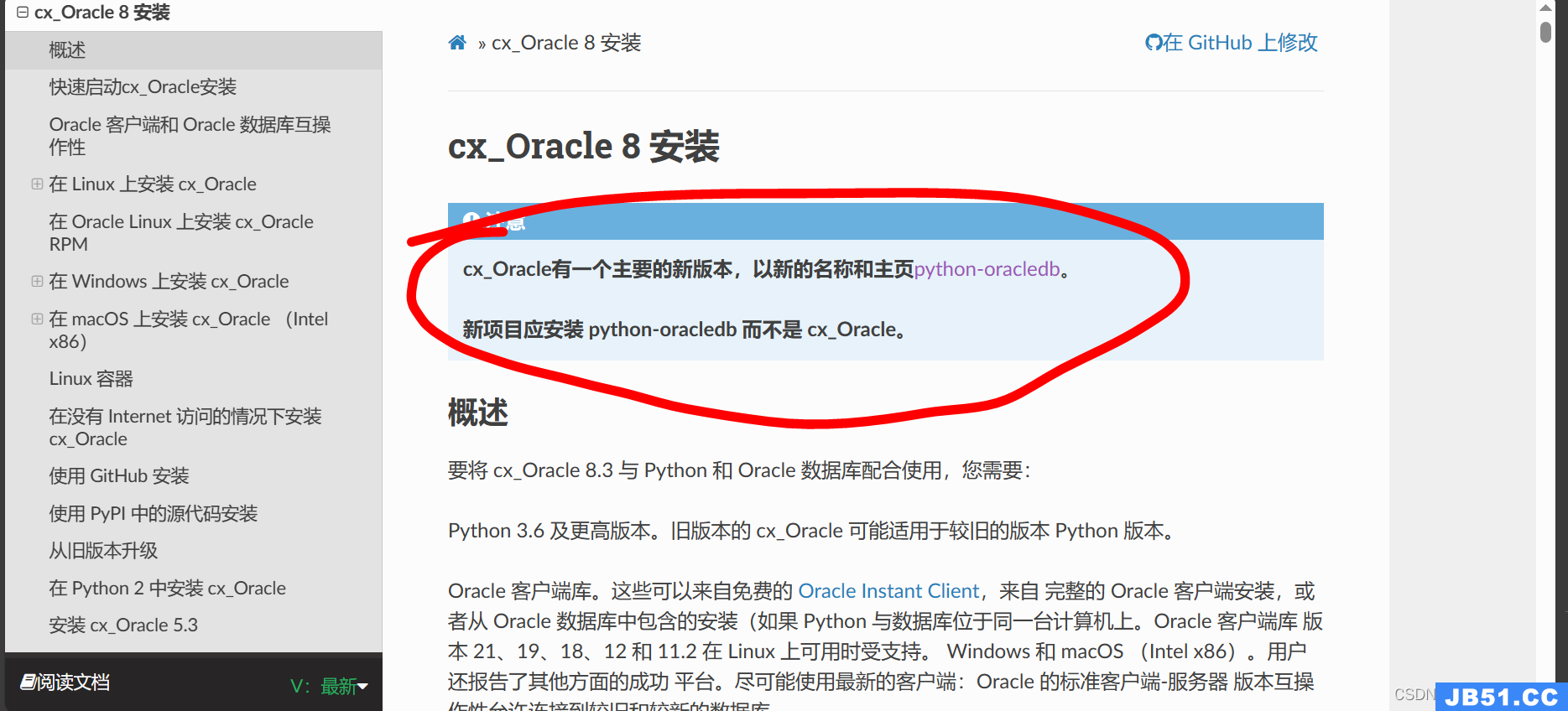

文章浏览阅读2.8k次。mysql脚本转化为oracle脚本_mysql建表语... 文章浏览阅读2.2k次。cx_Oracle报错:cx_Oracle DatabaseErr...

文章浏览阅读2.2k次。cx_Oracle报错:cx_Oracle DatabaseErr... 文章浏览阅读1.1k次,点赞38次,收藏35次。本文深入探讨了Or...

文章浏览阅读1.1k次,点赞38次,收藏35次。本文深入探讨了Or... 文章浏览阅读1.5k次。默认自动收集统计信息的时间为晚上10点...

文章浏览阅读1.5k次。默认自动收集统计信息的时间为晚上10点...