I. Pull the images

docker pull elasticsearch:7.17.5

docker pull kibana:7.17.5

docker pull logstash:7.17.5

II. Install Elasticsearch

Create a user-defined network (useful for connecting to other services attached to the same network, e.g. Kibana):

$ docker network create somenetwork

Run Elasticsearch:

docker run -d --name elasticsearch \
  --restart always \
  -p 9200:9200 \
  -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms512m -Xmx1024m" \
  -e TZ="Asia/Shanghai" \
  elasticsearch:7.17.5

Verify:

$ curl http://192.168.79.21:9200
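Elasticsearch can take a while to start answering after the container comes up, so a single curl may fail at first. The following is a minimal sketch of the same check as a retry loop. The function names and the retry parameters are assumptions made for illustration; only the URL comes from the check above.

```python
import json
import time
import urllib.request

ES_URL = "http://192.168.79.21:9200"  # host and port from the curl check


def fetch_json(url, timeout=5):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def wait_for_elasticsearch(url=ES_URL, attempts=30, delay=2.0, fetch=fetch_json):
    """Poll the root endpoint until it answers; return the reported version.

    `fetch` is injectable so the loop can be exercised without a live node.
    """
    last_error = None
    for _ in range(attempts):
        try:
            info = fetch(url)
            # The root endpoint reports the node version under version.number.
            return info["version"]["number"]
        except (OSError, ValueError, KeyError) as exc:
            # Connection refused, bad JSON or an unexpected body: retry later.
            last_error = exc
            time.sleep(delay)
    raise RuntimeError(f"Elasticsearch not reachable at {url}: {last_error}")
```

Calling wait_for_elasticsearch() against the node started above should return the version string once the node is ready.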

III. Install Kibana

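Run Kibana. The generic form, attached to the user-defined network, is:

$ docker run -d --name kibana --net somenetwork -p 5601:5601 kibana:7.17.5

In this setup Kibana reaches Elasticsearch through the host IP and mounts its config directory from the host:

docker run -d --name kibana \
  -e ELASTICSEARCH_HOSTS=http://192.168.79.21:9200 \
  -v /opt/elk/config:/usr/share/kibana/config \
  -p 5601:5601 \
  kibana:7.17.5

The mount hides the image's own config directory, so /opt/elk/config must already contain kibana.yml. To get that file, first start Kibana without the mount using the command below, then copy the configuration file to the host directory: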

docker run -d --name kibana \
  -e ELASTICSEARCH_HOSTS=http://192.168.79.21:9200 \
  -p 5601:5601 \
  kibana:7.17.5

mkdir -p /opt/elk/config
cd /opt/elk/config
docker cp kibana:/usr/share/kibana/config/kibana.yml ./

Configure Kibana

Add the line i18n.locale: "zh-CN" to the copied file:

vim kibana.yml

#
# ** THIS IS AN AUTO-GENERATED FILE **
#
# Default Kibana configuration for docker target
server.host: "0.0.0.0"
server.shutdownTimeout: "5s"
elasticsearch.hosts: [ "http://192.168.79.21:9200" ]
monitoring.ui.container.elasticsearch.enabled: true
i18n.locale: "zh-CN"

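Then recreate the container with the config directory mounted (docker rm -f kibana, followed by the docker run command with -v shown earlier). Kibana then comes up with a Chinese UI.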
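The edit to kibana.yml can also be scripted instead of done by hand in vim. The following is a rough sketch that only handles simple top-level `key: value` lines, which is all the file above contains. The helper name set_yaml_scalar is hypothetical, not part of Kibana or any library.

```python
def set_yaml_scalar(text, key, value):
    """Set a top-level `key: "value"` line, replacing an existing one or appending.

    Line-based on purpose: it does not parse YAML and ignores nested keys.
    """
    new_line = f'{key}: "{value}"'
    lines = text.splitlines()
    for i, line in enumerate(lines):
        is_comment = line.lstrip().startswith("#")
        if not is_comment and line.split(":", 1)[0].strip() == key:
            lines[i] = new_line  # key already present: overwrite its value
            break
    else:
        lines.append(new_line)  # key absent: append at the end
    return "\n".join(lines) + "\n"
```

Applied to the contents of /opt/elk/config/kibana.yml with key "i18n.locale" and value "zh-CN", this returns the text to write back. Running it a second time leaves the file unchanged.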

IV. Install Logstash

1. Start Logstash

docker run -d --name=logstash logstash:7.17.5

2. Copy the data out and grant permissions

docker cp logstash:/usr/share/logstash /opt/elk
chmod -R 777 /opt/elk/logstash

3. Configuration file

vim /opt/elk/logstash/config/logstash.yml

# Change the contents to the following
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "http://192.168.79.21:9200" ]
path.config: /usr/share/logstash/config/conf.d/*.conf
path.logs: /usr/share/logstash/logs

4. Configure the sync task

mkdir -p /opt/elk/logstash/config/conf.d
cd /opt/elk/logstash/config/conf.d
touch syslog.conf
vim syslog.conf

input {
  file {
    # Tag
    type => "systemlog-localhost"
    # File to collect
    path => "/var/log/messages"
    # Where to start reading
    start_position => "beginning"
    # Scan interval; the default is 1s, 5s is recommended
    stat_interval => "5"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.79.21:9200"]
    index => "logstash-system-localhost-%{+YYYY.MM.dd}"
  }
}
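The index setting uses a date pattern, so each day's events go to their own index. Logstash fills in %{+YYYY.MM.dd} from the event's @timestamp, which is held in UTC. As a small sketch of that expansion (the function name daily_index_name is made up for illustration):

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(prefix, event_time):
    """Mimic index => "<prefix>-%{+YYYY.MM.dd}" for one event.

    `event_time` must be timezone-aware; it is converted to UTC first,
    because the pattern is evaluated against the UTC @timestamp.
    """
    utc = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


# An event logged at 01:00 on 25 August in Asia/Shanghai (UTC+8) ...
shanghai = timezone(timedelta(hours=8))
event = datetime(2022, 8, 25, 1, 0, tzinfo=shanghai)
# ... is still 24 August in UTC, so it lands in the 2022.08.24 index.
print(daily_index_name("logstash-system-localhost", event))
```

This is why events from shortly after local midnight can show up in the previous day's index.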

Without a sync task configured, the Logstash service keeps restarting.

5. Restart Logstash

docker rm -f logstash

docker run -d \
  --name=logstash \
  --restart=always \
  -p 5044:5044 \
  -v /opt/elk/logstash:/usr/share/logstash \
  -v /opt/elk/logstash/config/conf.d:/usr/share/logstash/config/conf.d \
  -v /opt/elk/logstash/logs:/usr/share/logstash/logs \
  -v /opt/elk/logstash/pipeline:/usr/share/logstash/pipeline \
  -v /opt/elk/logstash/lib:/usr/share/logstash/lib \
  -v /opt/elk/logstash/onstore2:/usr/share/logstash/onstore2 \
  -v /var/log/messages:/var/log/messages \
  logstash:7.17.5

6. Check that the sync task is working

http://192.168.79.21:9200/_cat/indices?v

health status index                                uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   .geoip_databases                     seKWsAp8R0WmGw2BZ4KAEg   1   0         41            0     38.8mb         38.8mb
green  open   .kibana_7.17.5_001                   PxzFrrFmR7W06P2FKNM0dQ   1   0         60           52      4.8mb          4.8mb
green  open   .apm-custom-link                     vtong4BlT3WVyfehlUNrNg   1   0          0            0       226b           226b
green  open   .apm-agent-configuration             sR7FsFRaTXScJKtuh7nmXg   1   0          0            0       226b           226b
green  open   .kibana_task_manager_7.17.5_001      2cowHwbwTWazFa44xTIjBQ   1   0         17        10440      1.4mb          1.4mb
yellow open   logstash-system-localhost-2022.08.24 _i9Vzaj1SMqnteGUeW0AoA   1   1       7959            0      794kb          794kb
green  open   .tasks                               Uel1QayGQJW1kQpVJqetvA   1   0         12            0     39.5kb         39.5kb

VI. Synchronize data with Logstash

1. Create the configuration file

cd /opt/elk/logstash/config/conf.d

# Sample Logstash configuration for a simple
# MySQL (JDBC) -> Logstash -> Elasticsearch pipeline.
input {
  stdin {
  }
  jdbc {
    jdbc_connection_string => "jdbc:mysql://x.x.x.x:3306/onstore?useUnicode=true&characterEncoding=utf-8&useSSL=false&serverTimezone=Asia/Shanghai"
    jdbc_user => "username"
    jdbc_password => "U&fcN5234a"
    # Connector/J 8.x driver class (com.mysql.jdbc.Driver is the deprecated name)
    jdbc_driver_class => "com.mysql.cj.jdbc.Driver"
    jdbc_driver_library => "/usr/share/logstash/lib/mysql-connector-java-8.0.20.jar"
    jdbc_fetch_size => 1000
    # Cron-style schedule. With six fields the first one is seconds, so this
    # runs every 2 seconds; use "* * * * *" to run once per minute.
    schedule => "*/2 * * * * *"
    # Whether to record the last run. If true, the last value of the
    # tracking_column field is saved to the file given by last_run_metadata_path.
    record_last_run => true
    # Whether to track a column's value. With record_last_run true, set this to
    # true to track a custom column; otherwise the time of the last run is tracked.
    use_column_value => true
    # Column to track (must be lowercase here)
    tracking_column => "unix_ts_in_secs"
    # Path and name of the file that stores the tracked value
    last_run_metadata_path => "/usr/share/logstash/onstore2/test.txt"
    # Whether to clear the last_run_metadata_path record. If true, every run
    # queries all database records from scratch.
    clean_run => false
    # Paging is optional
    jdbc_paging_enabled => false
    #jdbc_page_size => "1000"
    # Location of the SQL file to execute
    statement_filepath => "/usr/share/logstash/onstore2/test.sql"
    jdbc_default_timezone => "Asia/Shanghai"
  }
}

filter {
  date {
    match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
    target => "@timestamp"
  }
  mutate {
    remove_field => ["@version","@timestamp","unix_ts_in_secs"]
  }
}

output {
  elasticsearch {
    hosts => ["http://192.168.79.21:9200"]
    index => "pre_order"
    manage_template => false
    template_overwrite => false
    action => "update"
    doc_as_upsert => true
    document_id => "%{id}"
    #user => "elastic"
    #password => "changeme"
  }
  stdout {
    codec => json_lines
  }
}

2. Write the SQL statement

[root@localhost onstore2]# cat test.sql
select *,id as unix_ts_in_secs
from store_order_up
where id > :sql_last_value

3. Write the last_run file
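The last_run file holds the tracked value that Logstash substitutes for :sql_last_value on the next scheduled run. The sketch below imitates that loop together with the upsert-by-id output, using an in-memory SQLite table in place of MySQL and a plain dict in place of the pre_order index. The `status` column, the helper names and the use of max() for the tracked value are assumptions made for illustration, not the plugin's actual implementation.

```python
import sqlite3

# Statement from test.sql; :sql_last_value is bound as a named parameter.
STATEMENT = """
select *, id as unix_ts_in_secs
from store_order_up
where id > :sql_last_value
"""


def make_demo_db():
    """In-memory stand-in for the MySQL table (the status column is hypothetical)."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    conn.execute("create table store_order_up (id integer primary key, status text)")
    conn.executemany(
        "insert into store_order_up (id, status) values (?, ?)",
        [(1, "paid"), (2, "paid"), (3, "shipped")],
    )
    return conn


def run_once(conn, index, last_value):
    """One scheduled run: fetch rows past last_value, upsert them, return the new value."""
    rows = conn.execute(STATEMENT, {"sql_last_value": last_value}).fetchall()
    for row in rows:
        doc = dict(row)
        # The tracking column is remembered, then dropped from the document
        # (the mutate filter removes unix_ts_in_secs).
        last_value = max(last_value, doc.pop("unix_ts_in_secs"))
        # action => "update" with doc_as_upsert: merge into the document
        # whose _id is %{id}, creating it if it does not exist yet.
        index.setdefault(str(doc["id"]), {}).update(doc)
    return last_value


conn = make_demo_db()
index = {}
last = run_once(conn, index, 0)     # first run: all three rows are indexed
last = run_once(conn, index, last)  # second run: nothing new, nothing fetched
```

Because the tracked column is id, only newly inserted rows are picked up; a change to an existing row is not re-synced by this statement.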

[root@localhost onstore2]# cat test.txt

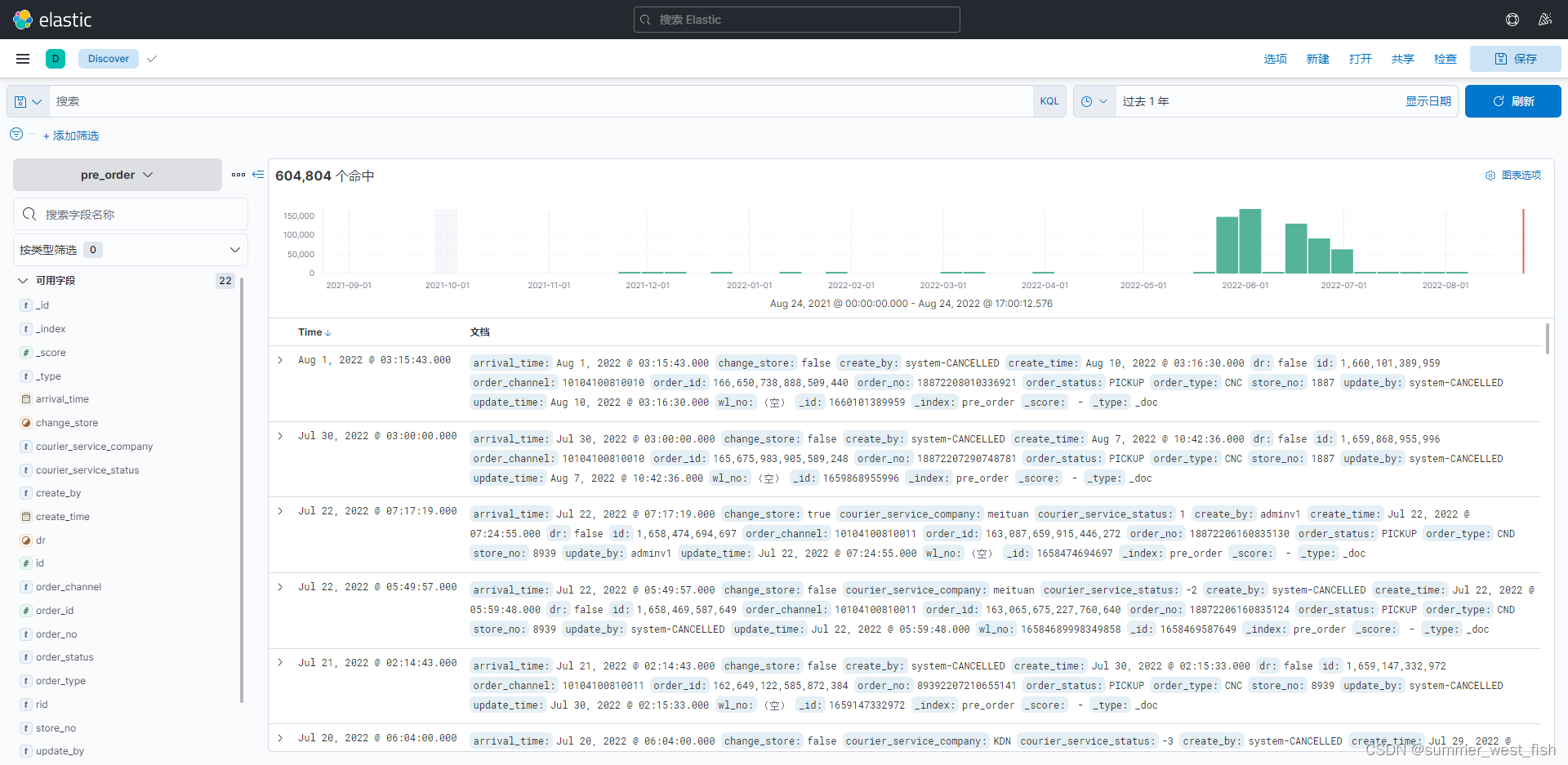

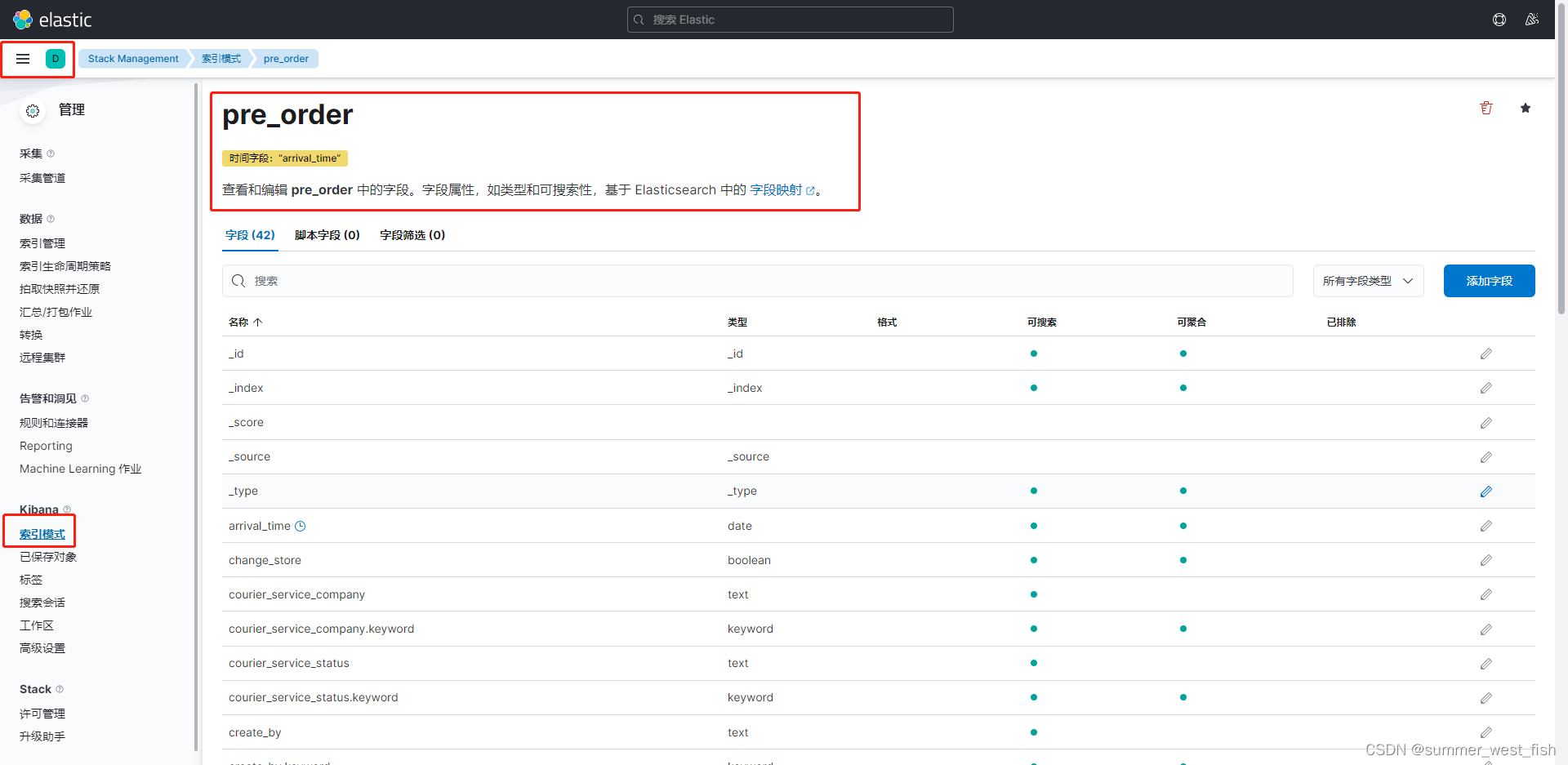

--- 0

4. Create an index pattern in Kibana

Result: