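I have a corpus and I have a word. For each occurrence of the word in the corpus, I want a list containing the k words before it and the k words after it. I am doing fine algorithmically (see below), but I wonder whether NLTK provides some functionality for this that I have missed?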

    def sized_context(word_index, window_radius, corpus):
        """Return up to window_radius words on each side of word_index.

        The word at word_index itself is not included.
        """
        max_length = len(corpus)
        # Clamp both borders so the slices stay inside the corpus.
        left_border = max(0, word_index - window_radius)
        right_border = min(max_length, word_index + 1 + window_radius)
        return corpus[left_border:word_index] + corpus[word_index + 1:right_border]
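Since the goal is the context of every occurrence of a word, the same slicing logic can be wrapped in a generator. This is only a minimal sketch: the name `contexts_for_word` and the case-insensitive match are assumptions made for illustration, and it assumes the corpus is a plain Python list.

```python
def contexts_for_word(target, window_radius, corpus):
    """Yield (index, context) for every occurrence of target in corpus.

    context holds up to window_radius tokens from each side of the match,
    excluding the match itself. Matching is case-insensitive (an assumption).
    """
    target = target.lower()
    for i, token in enumerate(corpus):
        if token.lower() == target:
            # Clamp the left edge; slicing past the end is already safe.
            left = corpus[max(0, i - window_radius):i]
            right = corpus[i + 1:i + 1 + window_radius]
            yield i, left + right


tokens = "they will settle here and we will settle there".split()
for index, context in contexts_for_word("settle", 2, tokens):
    print(index, context)
```

The second match sits one token from the end of the list, so its context is shorter than 2 * window_radius; nothing is padded here.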

Solution:

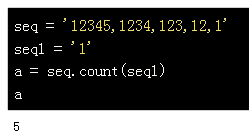

The simplest NLTK-ish way to do this is with nltk.ngrams().

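    import nltk

    words = nltk.corpus.brown.words()
    k = 5
    # NLTK 3 names the pad arguments left_pad_symbol and right_pad_symbol
    # (older releases took a single pad_symbol).
    for ngram in nltk.ngrams(words, 2*k+1, pad_left=True, pad_right=True,
                             left_pad_symbol=" ", right_pad_symbol=" "):
        if ngram[k].lower() == "settle":  # index k is the centre of 2*k+1 tokens
            print(" ".join(ngram))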

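pad_left and pad_right ensure that every word gets looked at, including words fewer than k tokens from the start or end of the sequence. This matters if you do not let your concordances span sentence boundaries, since that produces many boundary cases.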
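To illustrate why padding matters when windows stay inside one sentence, here is a small pure-Python sketch that builds padded windows without calling NLTK. The function name `sentence_contexts`, the `pad` default, and the sample sentences are all hypothetical; it stands in for a padded n-gram pass over each sentence and is not NLTK's implementation.

```python
def sentence_contexts(sentences, target, k, pad=""):
    """Yield a (2*k + 1)-token window for each occurrence of target.

    Each sentence is padded with k copies of pad on both sides, so a match
    near a sentence boundary still sits at the centre of a full window.
    """
    target = target.lower()
    for sentence in sentences:
        padded = [pad] * k + list(sentence) + [pad] * k
        # The token at original index i sits at index i + k after padding,
        # so the window centred on it starts at padded index i.
        for i, token in enumerate(sentence):
            if token.lower() == target:
                yield tuple(padded[i:i + 2 * k + 1])


sentences = [
    ["Settle", "down", "now"],
    ["They", "never", "settle"],
]
for window in sentence_contexts(sentences, "settle", 2):
    print(window)
```

Without the padding, neither match would ever appear at the centre of a five-token window, because both sentences are only three tokens long.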

To skip punctuation tokens, you can filter them out first:

    import re

    words = (w for w in nltk.corpus.brown.words() if re.search(r"\w", w))
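As a quick check of what the `re.search(r"\w", w)` test keeps, the sketch below applies it to a short hand-written token list (the tokens are made up for illustration). Tokens containing at least one letter, digit, or underscore survive; pure punctuation is dropped.

```python
import re

tokens = ["We", "will", ",", "of", "course", ",", "settle", "--", "won't", "we", "?"]

# Keep only tokens that contain at least one word character.
words = [w for w in tokens if re.search(r"\w", w)]
print(words)
```

A list is used here so the result can be printed and reused. A generator expression streams the tokens only once, which suits a single pass over a large corpus.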