A Docker container fails to access HDFS with an UnknownHostException. The error output is as follows:

java.lang.RuntimeException: java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "namenode1":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost

at org.apache.gobblin.configuration.SourceState.materializeWorkUnitAndDatasetStates(SourceState.java:252)

at org.apache.gobblin.configuration.SourceState.getPreviousWorkUnitStatesByDatasetUrns(SourceState.java:224)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource.getAllPreviousOffsetState(KafkaSource.java:664)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource.getPreviousOffsetForPartition(KafkaSource.java:617)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource.getWorkUnitForTopicPartition(KafkaSource.java:438)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource.getWorkUnitsForTopic(KafkaSource.java:389)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource.access$600(KafkaSource.java:82)

at org.apache.gobblin.source.extractor.extract.kafka.KafkaSource$WorkUnitCreator.run(KafkaSource.java:901)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.UnknownHostException: Invalid host name: local host is: (unknown); destination host is: "namenode1":8020; java.net.UnknownHostException; For more details see: http://wiki.apache.org/hadoop/UnknownHost

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:744)

at org.apache.hadoop.ipc.Client$Connection.<init>(Client.java:409)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1518)

at org.apache.hadoop.ipc.Client.call(Client.java:1451)

at org.apache.hadoop.ipc.Client.call(Client.java:1412)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy13.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:771)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy14.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2108)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1305)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1301)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1317)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1426)

at org.apache.gobblin.runtime.FsDatasetStateStore.getLatestDatasetStatesByUrns(FsDatasetStateStore.java:296)

at org.apache.gobblin.runtime.CombinedWorkUnitAndDatasetStateGenerator.getCombinedWorkUnitAndDatasetState(CombinedWorkUnitAndDatasetStateGenerator.java:59)

at org.apache.gobblin.configuration.SourceState.materializeWorkUnitAndDatasetStates(SourceState.java:246)

... 12 more

Caused by: java.net.UnknownHostException

at org.apache.hadoop.ipc.Client$Connection.<init>(Client.java:410)

I first tried the following:

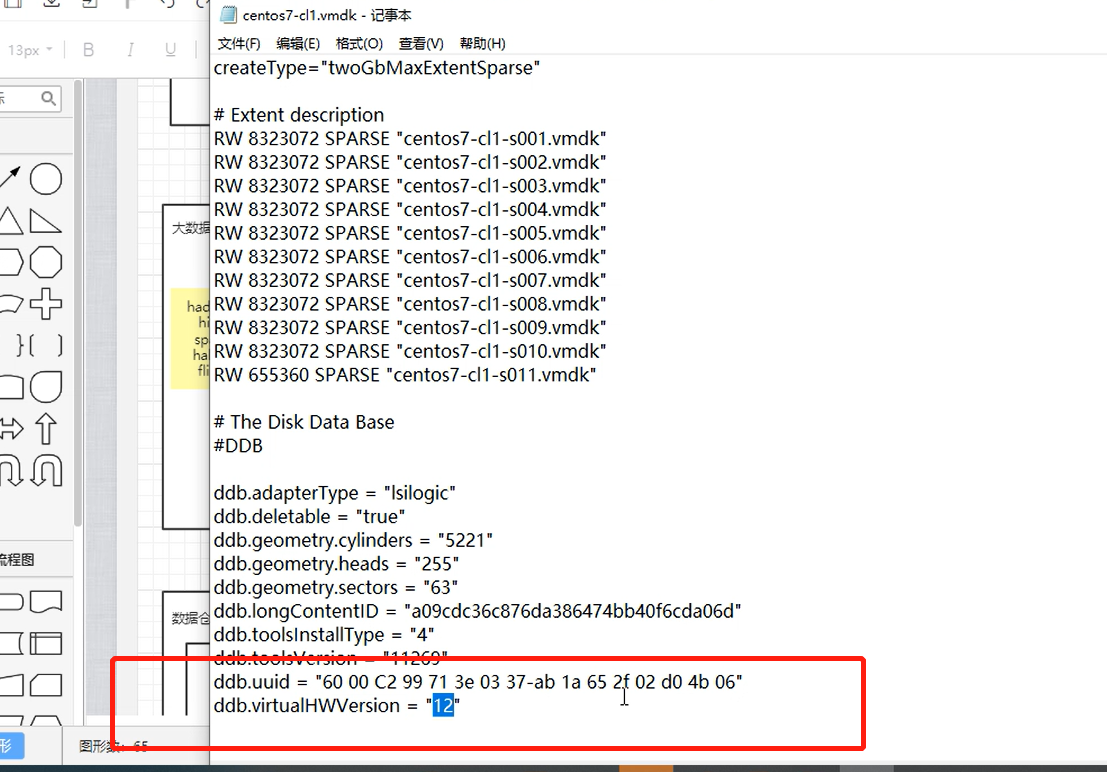

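The problem persisted. Following the hint in the official documentation, I edited the /etc/hosts file and changed the entry

192.168.0.1 namenode1

to

192.168.0.1 namenode1 namenode1.

(the trailing dot is part of the second alias, not sentence punctuation). This fixed the problem.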
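
To confirm that a container's hosts file carries both forms of the name, a small check along the following lines may help. This is only a minimal sketch, assuming the container resolves `namenode1` from `/etc/hosts`; the helper names `parse_hosts` and `missing_names` are hypothetical and not part of Hadoop, Gobblin, or Docker.

```python
from typing import Dict, List


def parse_hosts(text: str) -> Dict[str, List[str]]:
    """Map each host name or alias found in hosts-file text to its addresses."""
    table: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        address, *names = line.split()  # first field is the address, the rest are names
        for name in names:
            table.setdefault(name, []).append(address)
    return table


def missing_names(text: str, host: str) -> List[str]:
    """Return which of `host` and `host.` (trailing dot) have no hosts entry."""
    table = parse_hosts(text)
    rooted = host if host.endswith(".") else host + "."
    wanted = list(dict.fromkeys([host, rooted]))  # de-duplicate, keep order
    return [name for name in wanted if name not in table]
```

Inside the container, passing the contents of `/etc/hosts` and the name `namenode1` to `missing_names` should return an empty list once the entry has been changed as described above; with the original single-name entry it reports `namenode1.` as missing.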