Download the package and upload it to the server

Download URL: https://archive.apache.org/dist/flume/1.6.0/
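If the server has direct internet access, the tarball can be fetched there. Otherwise, download it on another machine and copy it over. The sketch below only builds the archive URL. The helper name `flume_tarball_url` is hypothetical, and it assumes the `apache-flume-<version>-bin.tar.gz` naming used by the 1.6.0 release.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the archive.apache.org URL of a Flume binary release.
# Assumes the release file is named apache-flume-<version>-bin.tar.gz.
flume_tarball_url() {
  local version="$1"
  printf 'https://archive.apache.org/dist/flume/%s/apache-flume-%s-bin.tar.gz\n' \
    "$version" "$version"
}

# Usage (download, then copy to root's home directory on master):
#   wget "$(flume_tarball_url 1.6.0)"
#   scp apache-flume-1.6.0-bin.tar.gz root@master:~/
```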

Deploy the Flume component

#Extract the package

[root@master ~]# tar xf apache-flume-1.6.0-bin.tar.gz -C /usr/local/src/

#Enter the directory

[root@master ~]# cd /usr/local/src/

#Rename the directory to flume

[root@master src]# mv apache-flume-1.6.0-bin/ flume
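As an alternative to extracting and then renaming, the two steps above can be collapsed into one with tar's `--strip-components=1`, which drops the top-level `apache-flume-1.6.0-bin/` folder while extracting. This is a sketch: the function name `install_flume` is hypothetical, and it should be run as root like the commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a Flume tarball directly into the target directory.
#   $1 = path to apache-flume-*-bin.tar.gz
#   $2 = destination (defaults to /usr/local/src/flume)
install_flume() {
  local tarball="$1"
  local dest="${2:-/usr/local/src/flume}"
  mkdir -p "$dest"
  # --strip-components=1 removes the leading apache-flume-<version>-bin/ path element
  tar -xzf "$tarball" -C "$dest" --strip-components=1
}

# Usage:
#   install_flume ~/apache-flume-1.6.0-bin.tar.gz /usr/local/src/flume
```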

#Give the hadoop user ownership of the installation

[root@master src]# chown -R hadoop:hadoop /usr/local/src/

#Create the environment variables

[root@master src]# vi /etc/profile.d/flume.sh

export FLUME_HOME=/usr/local/src/flume

export PATH=${FLUME_HOME}/bin:$PATH
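The same profile script can be written without opening an editor. This sketch uses a heredoc; the function name `write_flume_profile` is hypothetical, and the target path is a parameter so it can be tried on a scratch file first. Writing the real `/etc/profile.d/flume.sh` requires root, and the variables take effect in new login shells (for example after `su - hadoop`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Flume profile script non-interactively.
#   $1 = profile file (defaults to /etc/profile.d/flume.sh, needs root)
#   $2 = Flume install directory (defaults to /usr/local/src/flume)
write_flume_profile() {
  local profile_file="${1:-/etc/profile.d/flume.sh}"
  local flume_home="${2:-/usr/local/src/flume}"
  # Unquoted EOF: ${flume_home} is expanded now; the escaped \${FLUME_HOME}
  # and \$PATH are written literally and expanded when the profile is sourced.
  cat > "$profile_file" <<EOF
export FLUME_HOME=${flume_home}
export PATH=\${FLUME_HOME}/bin:\$PATH
EOF
}
```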

Check that the path has been added

[root@master src]# su - hadoop

Last login: Fri Apr 29 16:36:50 CST 2022 on pts/1

[hadoop@master ~]$ echo $PATH

/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/zookeeper/bin:/usr/local/src/sqoop/bin:/usr/local/src/hive/bin:/usr/local/src/hbase/bin:/usr/local/src/jdk/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/src/flume/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin

#If the Flume path (/usr/local/src/flume/bin) appears in the output, the environment variables are set correctly
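Instead of scanning the `echo $PATH` output by eye, the check can be scripted. This is a sketch with a hypothetical function name `path_contains`; it matches whole `:`-separated entries, so `/usr/local/src/flume/bin2` does not count as a match for `/usr/local/src/flume/bin`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if directory $1 is an exact entry of the
# colon-separated list $2 (defaults to the current $PATH).
path_contains() {
  local dir="$1" path="${2:-$PATH}"
  # Wrap both sides in colons so only complete entries match.
  case ":$path:" in
    *":$dir:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage, as the hadoop user:
#   path_contains /usr/local/src/flume/bin && echo "Flume is on PATH"
#   command -v flume-ng
```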

Configure Flume

#Edit HBase's configuration file hbase-env.sh

[hadoop@master ~]$ vi /usr/local/src/hbase/conf/hbase-env.sh

#export HBASE_CLASSPATH=/usr/local/src/hadoop/etc/hadoop/   (comment out this line)
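The hbase-env.sh edit can also be made with `sed`. This is a sketch that assumes GNU sed (for in-place `-i` without a backup suffix) and uses a hypothetical function name `comment_out_hbase_classpath`. It only touches active `export HBASE_CLASSPATH=` lines, so running it a second time changes nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the HBASE_CLASSPATH export in hbase-env.sh.
#   $1 = path to hbase-env.sh (defaults to /usr/local/src/hbase/conf/hbase-env.sh)
comment_out_hbase_classpath() {
  local env_file="${1:-/usr/local/src/hbase/conf/hbase-env.sh}"
  # '&' is the matched text; prefixing '#' comments the line out.
  # Lines that already start with '#' do not match, so this is idempotent.
  sed -i 's/^[[:space:]]*export HBASE_CLASSPATH=/#&/' "$env_file"
}
```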

#Enter the Flume configuration directory

[hadoop@master ~]$ cd /usr/local/src/flume/conf/

#Copy the template and name the copy flume-env.sh

[hadoop@master conf]$ cp flume-env.sh.template flume-env.sh

#Edit the configuration file

[hadoop@master conf]$ vi flume-env.sh

export JAVA_HOME=/usr/local/src/jdk
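The copy-and-edit of flume-env.sh can be scripted as well. This sketch appends the `JAVA_HOME` line to the copied template rather than editing a specific template line, so it does not depend on what the template contains. The function name `setup_flume_env` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create flume-env.sh from its template and set JAVA_HOME.
#   $1 = Flume conf directory (defaults to /usr/local/src/flume/conf)
#   $2 = JDK directory (defaults to /usr/local/src/jdk)
setup_flume_env() {
  local conf_dir="${1:-/usr/local/src/flume/conf}"
  local java_home="${2:-/usr/local/src/jdk}"
  cp "$conf_dir/flume-env.sh.template" "$conf_dir/flume-env.sh"
  # Appending means this export overrides anything set earlier in the file.
  printf 'export JAVA_HOME=%s\n' "$java_home" >> "$conf_dir/flume-env.sh"
  # Warn (without failing) if there is no java binary under JAVA_HOME.
  if [ ! -x "$java_home/bin/java" ]; then
    echo "warning: $java_home/bin/java not found" >&2
  fi
}
```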

#Start all Hadoop daemons (HDFS and YARN)

[hadoop@master conf]$ start-all.sh
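Because the HDFS sink configured below writes to `hdfs://master:9000`, the NameNode must be running before the agent starts. The sketch below checks `jps` output for a list of expected daemon names. The function name `check_daemons` is hypothetical, and which daemons run on master depends on the cluster layout; the usage line assumes a master that hosts NameNode, SecondaryNameNode and ResourceManager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that each daemon name in "$@" appears in jps output.
#   $1 = output of `jps` (lines of "<pid> <MainClass>")
# Compares the second column exactly, so "SecondaryNameNode" does not satisfy "NameNode".
check_daemons() {
  local jps_output="$1"; shift
  local daemon missing=0
  for daemon in "$@"; do
    if ! printf '%s\n' "$jps_output" |
        awk -v d="$daemon" '$2 == d { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "missing: $daemon" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on master:
#   check_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager
```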

#Check the version

[hadoop@master conf]$ flume-ng version

Flume 1.6.0

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: 2561a23240a71ba20bf288c7c2cda88f443c2080

Compiled by hshreedharan on Mon May 11 11:15:44 PDT 2015

From source with checksum b29e416802ce9ece3269d34233baf43f
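For a scripted check, the version number can be pulled from the `Flume 1.6.0` line shown above. This is a sketch with a hypothetical function name `flume_version`; matching on the first field skips any informational lines the launcher may print before the version line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `flume-ng version` output on stdin and print the
# version number from the first line whose first field is "Flume".
flume_version() {
  awk '$1 == "Flume" { print $2; exit }'
}

# Usage:
#   [ "$(flume-ng version | flume_version)" = 1.6.0 ] && echo "Flume 1.6.0 OK"
```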

Use Flume to send and receive data

#Enter the Flume directory

[hadoop@master conf]$ cd /usr/local/src/flume/

#Create the agent configuration file

[hadoop@master flume]$ vi /usr/local/src/flume/simple-hdfs-flume.conf

a1.sources=r1

a1.sinks=k1

a1.channels=c1

a1.sources.r1.type=spooldir

a1.sources.r1.spoolDir=/usr/local/src/hadoop/logs/

a1.sources.r1.fileHeader=true

a1.sinks.k1.type=hdfs

a1.sinks.k1.hdfs.path=hdfs://master:9000/tmp/flume

a1.sinks.k1.hdfs.rollSize=1048760

a1.sinks.k1.hdfs.rollCount=0

a1.sinks.k1.hdfs.rollInterval=900

a1.sinks.k1.hdfs.useLocalTimeStamp=true

a1.channels.c1.type=file

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1
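The same agent definition can be written with a heredoc, with comments describing what each group of properties does. This is a sketch: the function name `write_agent_conf` is hypothetical, and the comments summarize standard Flume behavior (spooldir marks ingested files with a `.COMPLETED` suffix by default; `rollSize` is in bytes and `rollInterval` in seconds). Property names are case-sensitive, so the sink setting must be spelled `rollSize`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write simple-hdfs-flume.conf non-interactively.
#   $1 = output file (defaults to /usr/local/src/flume/simple-hdfs-flume.conf)
write_agent_conf() {
  local conf_file="${1:-/usr/local/src/flume/simple-hdfs-flume.conf}"
  # Quoted 'EOF' writes the text literally, with no shell expansion.
  cat > "$conf_file" <<'EOF'
# Components of agent a1: one source, one sink, one channel
a1.sources=r1
a1.sinks=k1
a1.channels=c1

# Spooling-directory source: ingests files placed in spoolDir and, by
# default, renames each fully ingested file with a .COMPLETED suffix
a1.sources.r1.type=spooldir
a1.sources.r1.spoolDir=/usr/local/src/hadoop/logs/
a1.sources.r1.fileHeader=true

# HDFS sink: start a new file after 1048760 bytes or 900 seconds;
# rollCount=0 disables rolling by event count
a1.sinks.k1.type=hdfs
a1.sinks.k1.hdfs.path=hdfs://master:9000/tmp/flume
a1.sinks.k1.hdfs.rollSize=1048760
a1.sinks.k1.hdfs.rollCount=0
a1.sinks.k1.hdfs.rollInterval=900
a1.sinks.k1.hdfs.useLocalTimeStamp=true

# File channel: buffers events on local disk between source and sink
a1.channels.c1.type=file
a1.channels.c1.capacity=1000
a1.channels.c1.transactionCapacity=100

# Wiring: a source lists its channels (plural), a sink drains one channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF
}
```

Note that the spooling-directory source expects files to be complete and unchanging once they are in the directory; a Hadoop log that is still being written there can make the source report an error and stop.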

#Run the agent

[hadoop@master flume]$ flume-ng agent --conf-file simple-hdfs-flume.conf --name a1

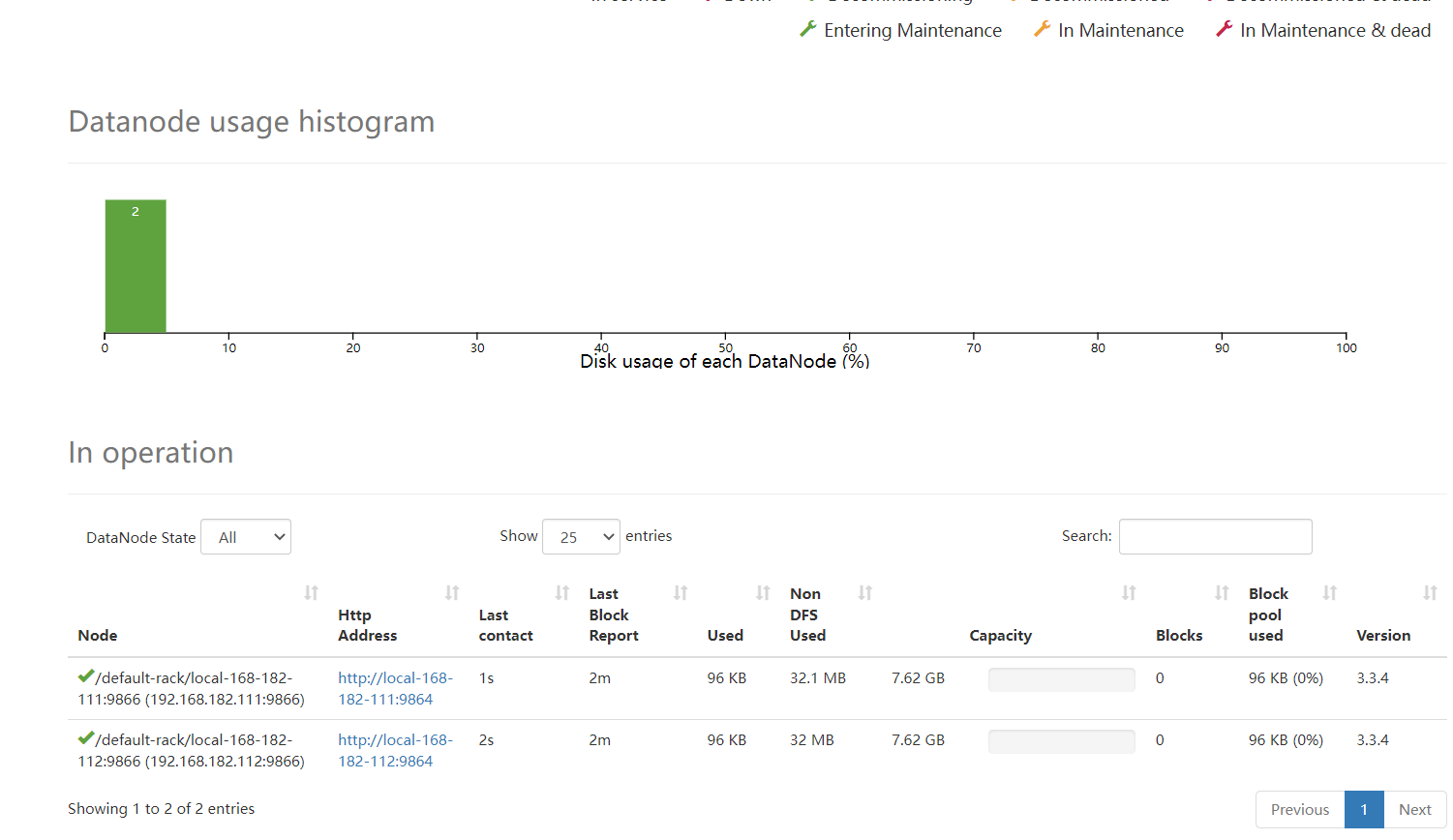
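A variant of the run command that also passes `--conf` (the directory holding flume-env.sh and the logging configuration) and `-Dflume.root.logger=INFO,console` (log to the terminal) is sketched below. The wrapper name `start_flume_agent` and the `DRY_RUN` switch are hypothetical; with `DRY_RUN=1` it only prints the command it would run.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start agent a1 with absolute paths and console logging.
# Uses $FLUME_HOME if set, else /usr/local/src/flume. DRY_RUN=1 prints the command.
start_flume_agent() {
  local flume_home="${FLUME_HOME:-/usr/local/src/flume}"
  local cmd=(flume-ng agent
    --conf "$flume_home/conf"
    --conf-file "$flume_home/simple-hdfs-flume.conf"
    --name a1
    -Dflume.root.logger=INFO,console)
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Usage:
#   start_flume_agent             # runs in the foreground; stop with Ctrl+C
#   DRY_RUN=1 start_flume_agent   # only prints the command line
```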

View the files Flume transferred to HDFS

[hadoop@master flume]$ hdfs dfs -ls /tmp/flume
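The HDFS sink names its output `FlumeData.<timestamp>` by default and keeps a `.tmp` suffix on the file it is still writing until that file rolls. The sketch below counts finished and in-progress files in the `hdfs dfs -ls` listing; it assumes those default `hdfs.filePrefix` and `hdfs.inUseSuffix` values, and the function name `summarize_flume_listing` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `hdfs dfs -ls` output on stdin and count Flume
# output files, split into completed ones and in-progress (.tmp) ones.
summarize_flume_listing() {
  awk '$NF ~ /\/FlumeData\./ {
         if ($NF ~ /\.tmp$/) { inprog++ } else { finished++ }
       }
       END { printf "completed=%d in_progress=%d\n", finished, inprog }'
}

# Usage:
#   hdfs dfs -ls /tmp/flume | summarize_flume_listing
```

To inspect the contents, `hdfs dfs -text` decodes SequenceFiles, which is the HDFS sink's default `hdfs.fileType`.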