Problem description

- Check which Python version Spark uses by default

[root@master day27]# pyspark

/home/software/spark-2.3.4-bin-hadoop2.7/conf/spark-env.sh: line 2: /usr/local/hadoop/bin/hadoop: No such file or directory

Python 2.7.5 (default, Nov 16 2020, 22:23:17)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

2027-11-29 18:45:59 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.3.4
      /_/

Using Python version 2.7.5 (default, Nov 16 2020 22:23:17)

SparkSession available as 'spark'.
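The same information can be read from Python instead of from the startup banner. The following is a minimal sketch using only the standard library. The helper name `python_summary` is hypothetical and is not part of Spark. It reports the interpreter that is running the code and whether `PYSPARK_PYTHON` is set in the environment.

```python
import os
import platform
import sys


def python_summary(environ=None):
    """Return details of the running interpreter plus the PYSPARK_PYTHON setting.

    `environ` defaults to the real process environment; a plain dict can be
    passed instead, which makes the helper easy to try out.
    """
    environ = os.environ if environ is None else environ
    return {
        "version": platform.python_version(),  # version of this interpreter
        "executable": sys.executable,  # absolute path of this interpreter
        "PYSPARK_PYTHON": environ.get("PYSPARK_PYTHON"),  # None when unset
    }


if __name__ == "__main__":
    for key, value in python_summary().items():
        print("{}: {}".format(key, value))
```

Pasted into the pyspark shell, this describes the driver's interpreter only. It does not say which interpreter the executors will launch.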

- Switch to Python 3

# Locate the pyspark launcher

[root@master ~]# whereis pyspark

pyspark: /home/software/spark-2.3.4-bin-hadoop2.7/bin/pyspark2.cmd /home/software/spark-2.3.4-bin-hadoop2.7/bin/pyspark.cmd /home/software/spark-2.3.4-bin-hadoop2.7/bin/pyspark

# Change to that directory

cd /home/software/spark-2.3.4-bin-hadoop2.7/bin

# Edit the launcher script

vim pyspark

# Add the path to python3

export PYSPARK_HOME=/home/software/anaconda3/bin/python3

export PYSPARK_PYTHON=/home/software/anaconda3/bin/python3

- Running a script then fails with the following error

ssh://root@192.168.128.78:22/home/software/anaconda3/bin/python3 -u /tmp/pycharm_project_115/day27/test1.py

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

27/11/29 18:41:40 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

/home/software/anaconda3/lib/python3.6/site-packages/pyspark/context.py:238: FutureWarning: Python 3.6 support is deprecated in Spark 3.2.

FutureWarning

27/11/29 18:41:49 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

java.io.IOException: Cannot run program "python3": error=2, No such file or directory

at java.lang.ProcessBuilder.start(ProcessBuilder.java:1048)

at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:215)
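The key line is `Cannot run program "python3"`: the executor tried to start a program named literally `python3` and the operating system could not find it. One plausible reading, which is an assumption and is not stated in the log, is that `PYSPARK_PYTHON` was not set in the environment of the process that launched the script, and that `python3` is not on that process's `PATH`. The sketch below checks both conditions with the standard library. The helper name `resolve_worker_python` is hypothetical, and the fallback name `python3` is taken from the error message above.

```python
import os
import shutil


def resolve_worker_python(environ=None):
    """Return (name, path) for the interpreter a worker would try to launch.

    `name` is PYSPARK_PYTHON when it is set, otherwise the bare name
    "python3" (assumed from the error message). `path` is where that name
    resolves on PATH, or None when it cannot be found.
    """
    environ = os.environ if environ is None else environ
    name = environ.get("PYSPARK_PYTHON") or "python3"
    # shutil.which returns None when the program cannot be located
    path = shutil.which(name, path=environ.get("PATH", os.defpath))
    return name, path


if __name__ == "__main__":
    name, path = resolve_worker_python()
    if path is None:
        print("'{}' cannot be found; set PYSPARK_PYTHON to a full path".format(name))
    else:
        print("'{}' resolves to {}".format(name, path))
```

Run with the same interpreter and the same run configuration as the failing script, so that it sees the same environment.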

- Solution

[root@master ~]# cd ~

[root@master ~]# vim .bashrc

# Set the path to python3

export PYSPARK_HOME=/home/software/anaconda3/bin/python3

export PYSPARK_PYTHON=/home/software/anaconda3/bin/python3

# Apply the changes

[root@master ~]# source .bashrc
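A script started from an IDE over SSH may not pick up variables exported in `.bashrc`. This is an assumption about the remote run configuration, not something the log confirms. If the error persists, an alternative is to set `PYSPARK_PYTHON` inside the script itself, before the `SparkContext` or `SparkSession` is created. A minimal sketch follows. The helper name `set_worker_python` is hypothetical, and it defaults to the interpreter that is running the script.

```python
import os
import sys


def set_worker_python(environ=None, interpreter=None):
    """Point PYSPARK_PYTHON at an interpreter unless it is already set.

    Returns the value that is in effect afterwards.
    """
    environ = os.environ if environ is None else environ
    interpreter = interpreter or sys.executable
    # setdefault keeps any value that was exported before the script started
    environ.setdefault("PYSPARK_PYTHON", interpreter)
    return environ["PYSPARK_PYTHON"]


if __name__ == "__main__":
    print("PYSPARK_PYTHON =", set_worker_python())
    # Only after this point would the script import pyspark and create
    # its SparkContext or SparkSession.
```

Using `setdefault` means a value exported in `.bashrc` or in the IDE's run configuration still takes precedence, so the two approaches do not conflict.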

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

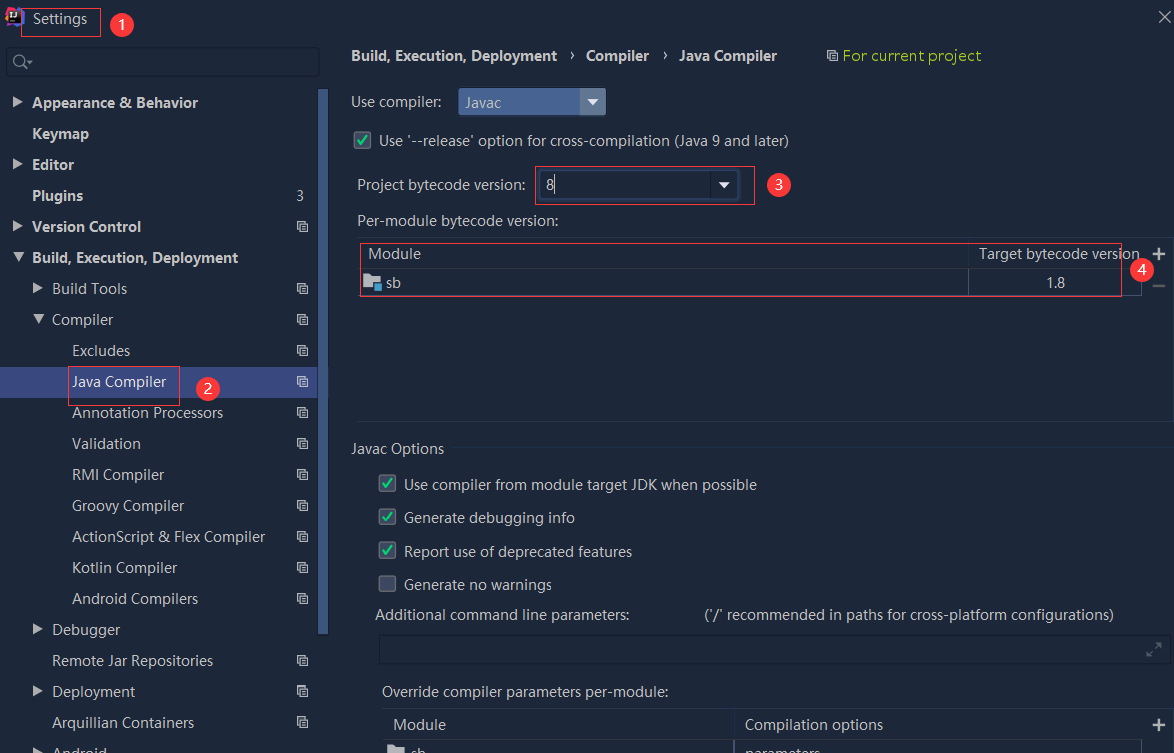

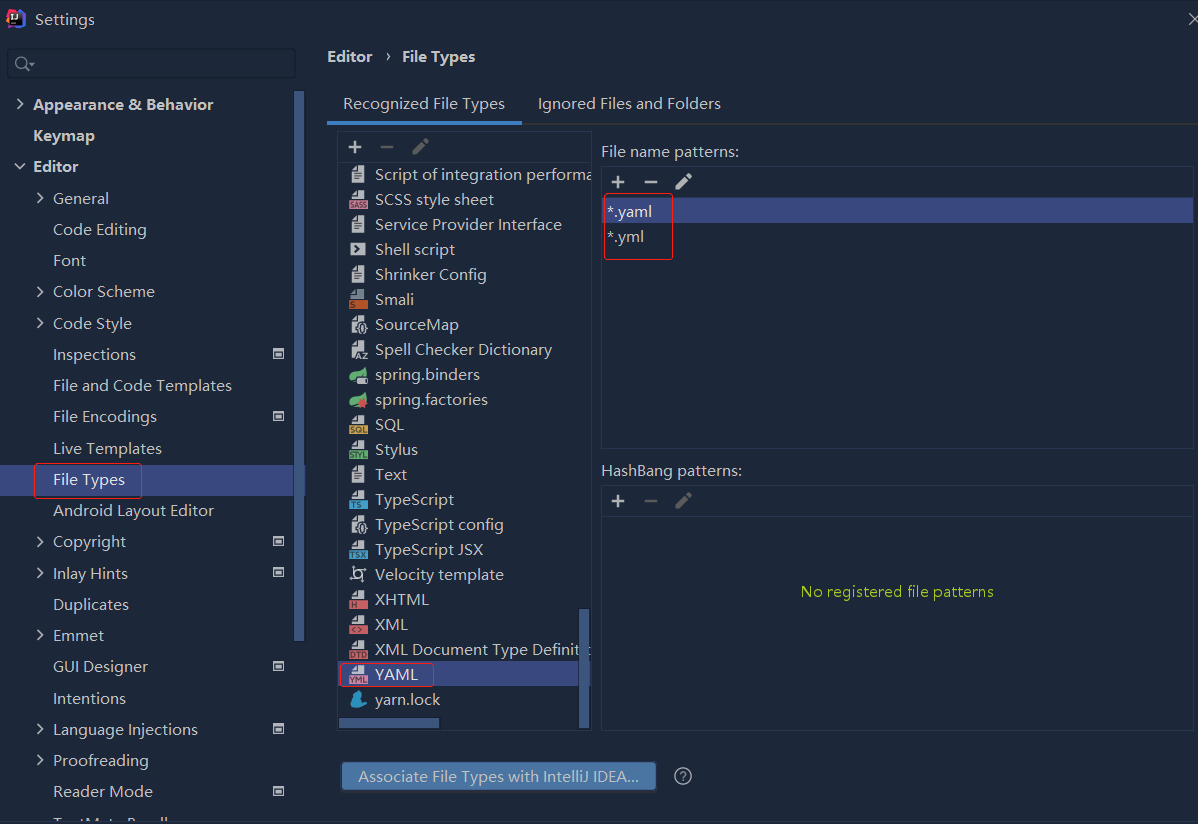

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

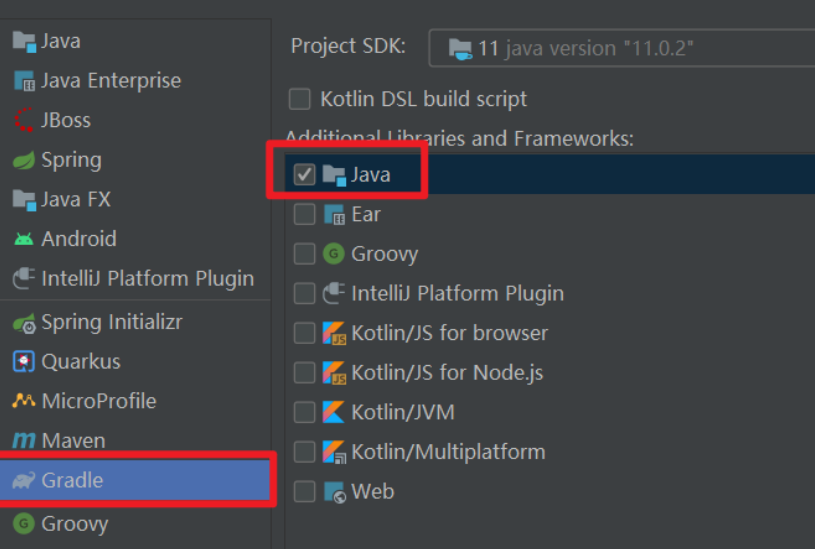

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...