问题描述

- 错误1

# 重写数据

hive (edu)> insert overwrite table dwd_trade_cart_add_inc

> select data.id,

> data.user_id,

> data.course_id,

> date_format(data.create_time, 'yyyy-MM-dd') date_id,

> data.session_id,

> data.create_time,

> data.cart_price,

> date_format(data.create_time, 'yyyy-MM-dd') dt

> from ods_cart_info_inc

> where dt = '2023-12-21' and type = 'bootstrap-insert';

FAILED: SemanticException 1:23 Need to specify partition columns because the destination table is partitioned. Error encountered near token 'dwd_trade_cart_add_inc'

# 查看日志

# cat /tmp/root/hive.log

2023-12-28T21:43:39,379 ERROR [bdac01c5-b6e3-4cd3-8a9c-186366b66791 main] ql.Driver: FAILED: SemanticException 1:23 Need to specify partition columns because the destination table is partitioned. Error encountered near token 'dwd_trade_cart_add_inc'

org.apache.hadoop.hive.ql.parse.SemanticException: 1:23 Need to specify partition columns because the destination table is partitioned. Error encountered near token 'dwd_trade_cart_add_inc'

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genFileSinkPlan(SemanticAnalyzer.java:6817)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genPostGroupByBodyPlan(SemanticAnalyzer.java:9772)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genBodyPlan(SemanticAnalyzer.java:9644)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genPlan(SemanticAnalyzer.java:10549)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genPlan(SemanticAnalyzer.java:10427)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.genOPTree(SemanticAnalyzer.java:11125)

at org.apache.hadoop.hive.ql.parse.CalcitePlanner.genOPTree(CalcitePlanner.java:481)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.analyzeInternal(SemanticAnalyzer.java:11138)

at org.apache.hadoop.hive.ql.parse.CalcitePlanner.analyzeInternal(CalcitePlanner.java:286)

at org.apache.hadoop.hive.ql.parse.BaseSemanticAnalyzer.analyze(BaseSemanticAnalyzer.java:258)

at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:512)

at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1317)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1457)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1227)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:233)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:184)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:403)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:821)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:244)

at org.apache.hadoop.util.RunJar.main(RunJar.java:158)

# 插入1条数据

hive (edu)> insert into table ods_cart_info_full values('1','9549','1','java基础课程A',100,'https://csdnimg.cn/release/blogv2/dist/pc/img/reprint.png','be10c8b7-854a-4731-b331-12fbac5a192','2021-09-24 22:17:37','2023-07-01 22:17:37','0','0');

FAILED: SemanticException 1:18 Need to specify partition columns because the destination table is partitioned. Error encountered near token 'ods_cart_info_full'

- 解决方案

# 制定分区

insert overwrite table dwd_trade_cart_add_inc partition (dt = '2023-12-21')

select data.id,

data.user_id,

data.course_id,

date_format(data.create_time, 'yyyy-MM-dd') date_id,

data.session_id,

data.create_time,

data.cart_price

from ods_cart_info_inc

where dt = '2023-12-21' and type = 'bootstrap-insert';

# 制定分区

insert into table ods_cart_info_full partition(dt = '2023-07-01') values('1','9549','1','java基础课程A',100,'https://csdnimg.cn/release/blogv2/dist/pc/img/reprint.png','be10c8b7-854a-4731-b331bac5a192','2021-09-24 22:17:37','2023-07-01 22:17:37','0','0');

Query ID = root_20231228235007_31bc7194-1ac6-4338-ab76-265584ebaff2

- 报错2

hive (edu)> insert overwrite table dwd_trade_cart_add_inc partition (dt = '2023-12-21')

> select data.id,

> data.user_id,

> data.course_id,

> date_format(data.create_time, 'yyyy-MM-dd') date_id,

> data.session_id,

> data.create_time,

> data.cart_price,

> date_format(data.create_time, 'yyyy-MM-dd') dt

> from ods_cart_info_inc

> where dt = '2023-12-21' and type = 'bootstrap-insert';

FAILED: SemanticException [Error 10044]: Line 1:23 Cannot insert into target table because column number/types are different ''2023-12-21'': Table insclause-0 has 7 columns, but query has 8 columns.

- 解决方案,参考

# 字段数量或类型与目标表不相同

- 错误3

hive (edu)> insert overwrite table dwd_trade_cart_add_inc partition (dt = '2023-12-21')

> select data.id,

> data.user_id,

> data.course_id,

> date_format(data.create_time, 'yyyy-MM-dd') date_id,

> data.session_id,

> data.create_time,

> data.cart_price,

> from ods_cart_info_inc

> where dt = '2023-12-21' and type = 'bootstrap-insert';

NoViableAltException(130@[80:1: selectItem : ( ( tableAllColumns )=> tableAllColumns -> ^( TOK_SELEXPR tableAllColumns ) | ( expression ( ( ( KW_AS )? identifier ) | ( KW_AS LPAREN identifier ( COMMA identifier )* RPAREN ) )? ) -> ^( TOK_SELEXPR expression ( identifier )* ) );])

at org.apache.hadoop.hive.ql.parse.HiveParser_SelectClauseParser$DFA13.specialStateTransition(HiveParser_SelectClauseParser.java:4624)

at org.antlr.runtime.DFA.predict(DFA.java:80)

at org.apache.hadoop.hive.ql.parse.HiveParser_SelectClauseParser.selectItem(HiveParser_SelectClauseParser.java:1615)

at org.apache.hadoop.hive.ql.parse.HiveParser_SelectClauseParser.selectList(HiveParser_SelectClauseParser.java:1176)

at org.apache.hadoop.hive.ql.parse.HiveParser_SelectClauseParser.selectClause(HiveParser_SelectClauseParser.java:950)

at org.apache.hadoop.hive.ql.parse.HiveParser.selectClause(HiveParser.java:42064)

at org.apache.hadoop.hive.ql.parse.HiveParser.atomSelectStatement(HiveParser.java:36720)

at org.apache.hadoop.hive.ql.parse.HiveParser.selectStatement(HiveParser.java:36987)

at org.apache.hadoop.hive.ql.parse.HiveParser.regularBody(HiveParser.java:36504)

at org.apache.hadoop.hive.ql.parse.HiveParser.queryStatementExpressionBody(HiveParser.java:35822)

at org.apache.hadoop.hive.ql.parse.HiveParser.queryStatementExpression(HiveParser.java:35710)

at org.apache.hadoop.hive.ql.parse.HiveParser.execStatement(HiveParser.java:2284)

at org.apache.hadoop.hive.ql.parse.HiveParser.statement(HiveParser.java:1333)

at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:208)

at org.apache.hadoop.hive.ql.parse.ParseUtils.parse(ParseUtils.java:77)

at org.apache.hadoop.hive.ql.parse.ParseUtils.parse(ParseUtils.java:70)

at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:468)

at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1317)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1457)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1227)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:233)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:184)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:403)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:821)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:244)

at org.apache.hadoop.util.RunJar.main(RunJar.java:158)

FAILED: ParseException line 9:0 cannot recognize input near 'from' 'ods_cart_info_inc' 'where' in selection target

-

解决方案:语法错误,

from前面多了逗号 -

报错4,参考

hive (edu)> select * from ods_cart_info_full limit 1;

FAILED: SemanticException Queries against partitioned tables without a partition filter are disabled for safety reasons. If you know what you are doing, please sethive.strict.checks.large.query to false and that hive.mapred.mode is not set to 'strict' to proceed. Note that if you may get errors or incorrect results if you make a mistake while using some of the unsafe features. No partition predicate for Alias "ods_cart_info_full" Table "ods_cart_info_full"

- 解决方案

hive (edu)> set hive.strict.checks.cartesian.product=flase;

hive (edu)> set hive.mapred.mode=nonstrict;

- 报错5:装载数据时报错

FAILED: SemanticException Failed to get a spark session: org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create spark client.

- 报错6:装载数据时报错

Job failed with java.lang.ClassNotFoundException: Class org.apache.hive.hcatalog.data.JsonSerDe not found

FAILED: Execution Error, return code 3 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. java.util.concurrent.ExecutionException: Exception thrown by job

at org.apache.spark.JavaFutureActionWrapper.getImpl(FutureAction.scala:272)

at org.apache.spark.JavaFutureActionWrapper.get(FutureAction.scala:277)

at org.apache.hive.spark.client.RemoteDriver$JobWrapper.call(RemoteDriver.java:362)

at org.apache.hive.spark.client.RemoteDriver$JobWrapper.call(RemoteDriver.java:323)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 3, slave3, executor 1): java.lang.RuntimeException: Map operator initialization failed: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassNotFoundException: Class org.apache.hive.hcatalog.data.JsonSerDe not found

at org.apache.hadoop.hive.ql.exec.spark.SparkMapRecordHandler.init(SparkMapRecordHandler.java:122)

at org.apache.hadoop.hive.ql.exec.spark.HiveMapFunction.call(HiveMapFunction.java:55)

at org.apache.hadoop.hive.ql.exec.spark.HiveMapFunction.call(HiveMapFunction.java:30)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$7$1.apply(JavaRDDLike.scala:186)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$7$1.apply(JavaRDDLike.scala:186)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:797)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$23.apply(RDD.scala:797)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:323)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:287)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:96)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:53)

at org.apache.spark.scheduler.Task.run(Task.scala:108)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:335)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.ClassNotFoundException: Class org.apache.hive.hcatalog.data.JsonSerDe not found

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:328)

at org.apache.hadoop.hive.ql.exec.MapOperator.setChildren(MapOperator.java:420)

at org.apache.hadoop.hive.ql.exec.spark.SparkMapRecordHandler.init(SparkMapRecordHandler.java:87)

... 16 more

Caused by: java.lang.ClassNotFoundException: Class org.apache.hive.hcatalog.data.JsonSerDe not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2101)

at org.apache.hadoop.hive.ql.plan.PartitionDesc.getDeserializer(PartitionDesc.java:177)

at org.apache.hadoop.hive.ql.exec.MapOperator.getConvertedOI(MapOperator.java:295)

... 18 more

- 解决方案

[root@slave1 bin]# cd /usr/local/software/hive-2.3.9/lib/

[root@slave1 lib]# ll hive-hcatalog*

-rw-r--r--. 1 root root 265922 6月 2 2021 hive-hcatalog-core-2.3.9.jar

# 进入hive执行如下

hive (edu)> add jar /usr/local/software/hive-2.3.9/lib/hive-hcatalog-core-2.3.9.jar;

Added [/usr/local/software/hive-2.3.9/lib/hive-hcatalog-core-2.3.9.jar] to class path

Added resources: [/usr/local/software/hive-2.3.9/lib/hive-hcatalog-core-2.3.9.jar]

- 报错7:装载数据时报错

Job failed with java.lang.IllegalArgumentException: Timestamp format must be yyyy-mm-dd hh:mm:ss[.fffffffff]

FAILED: Execution Error, return code 3 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. java.util.concurrent.ExecutionException: Exception thrown by job

at org.apache.spark.JavaFutureActionWrapper.getImpl(FutureAction.scala:272)

at org.apache.spark.JavaFutureActionWrapper.get(FutureAction.scala:277)

at org.apache.hive.spark.client.RemoteDriver$JobWrapper.call(RemoteDriver.java:362)

at org.apache.hive.spark.client.RemoteDriver$JobWrapper.call(RemoteDriver.java:323)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

- 解决方案

# 装载语句如下

date_format(from_utc_timestamp(action.ts * 1000, 'GMT+8'), 'yyyy-MM-dd HH:mm:ss') date_id,

# 修改为如下

date_format(from_utc_timestamp(ts, 'GMT+8'), 'yyyy-MM-dd HH:mm:ss'),

- 解决方案

# 修改VMware和hadoop内存

vim /hadoop/etc/hadoop/yarn-site.xml

# 之前已经将VMware和hadoop内存设置为4GB了,重启服务器再次尝试

查看详情

# 设置非严格模式

hive (default)> SET hive.exec.dynamic.partition.mode=nonstrict;

hive (default)> SET hive.exec.dynamic.partition.mode;

hive.exec.dynamic.partition.mode=nonstrict

# strict为开启严格模式,nostrict关闭严格模式

set hive.mapred.mode=strict

set hive.mapred.mode=nostrict

# 表示未开启严格模式

hive (default)> set hive.mapred.mode;

hive.mapred.mode is undefined

# 设置严格模式

set hive.mapred.mode=strict;

set hive.strict.checks.no.partition.filter=true;

set hive.strict.checks.orderby.no.limit=true;

set hive.strict.checks.cartesian.product=true;

# 非严格模式,限制笛卡尔积运算

set hive.mapred.mode=nonstrict;

set hive.strict.checks.cartesian.product=true;

# 严格模式,不限制笛卡尔积运算

set hive.mapred.mode=strict;

set hive.strict.checks.cartesian.product=false;

# 显示当前库

set hive.cli.print.current.db=true;

# 查询结果时显示字段名称

set hive.cli.print.header=true;

# 不会执行mapreduce程序

set hive.fetch.task.conversion=more;

# 都会执行mapreduce程序

set hive.fetch.task.conversion=none;

# 指定队列

set mapreduce.job.queuename=hive;

# 建表格式改为orc

# hive.default.fileformat.managed=none时,可以不改

set hive.default.fileformat=ORC;

set hive.default.fileformat.managed=ORC;

# 当一个sql中有多个job时候,且这多个job之间没有依赖,则可以让顺序执行变为并行执行

set hive.exec.parallel=true;

# 同一个sql允许并行任务的最大线程数

set hive.exec.parallel.thread.number=16;

# 设置单机还是集群执行

set hive.exec.mode.local.auto=true;

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

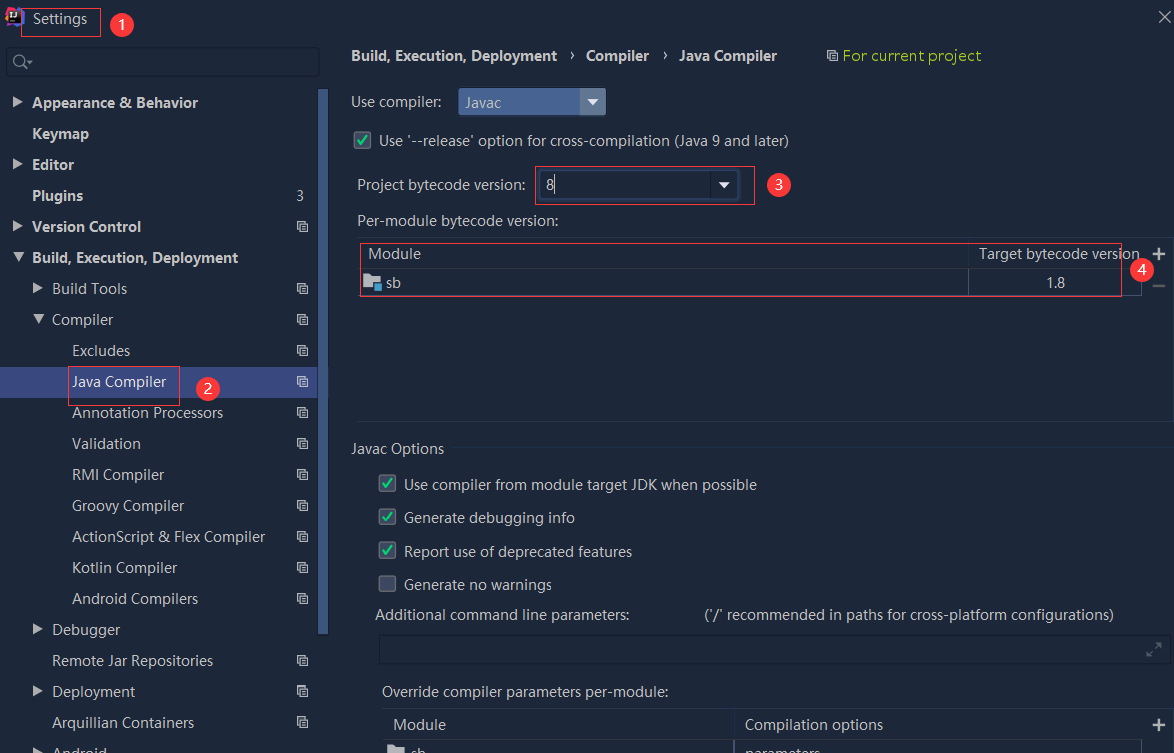

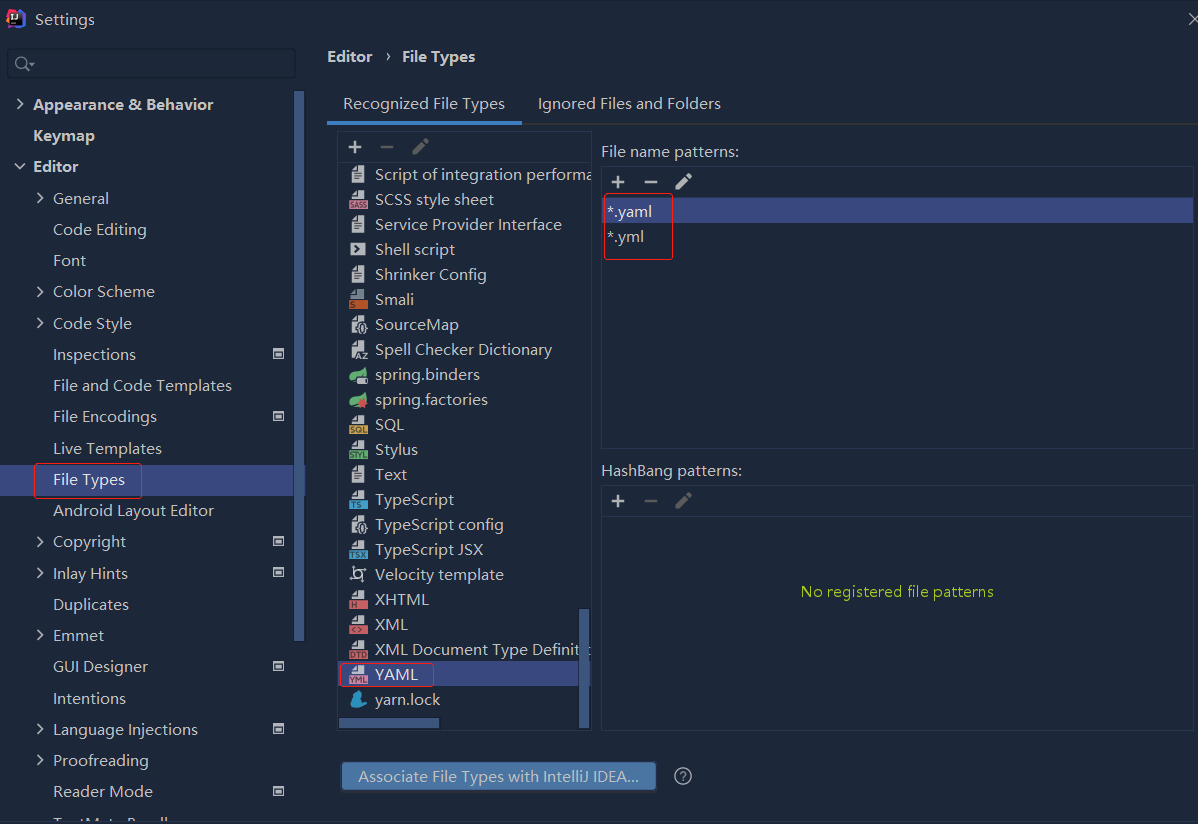

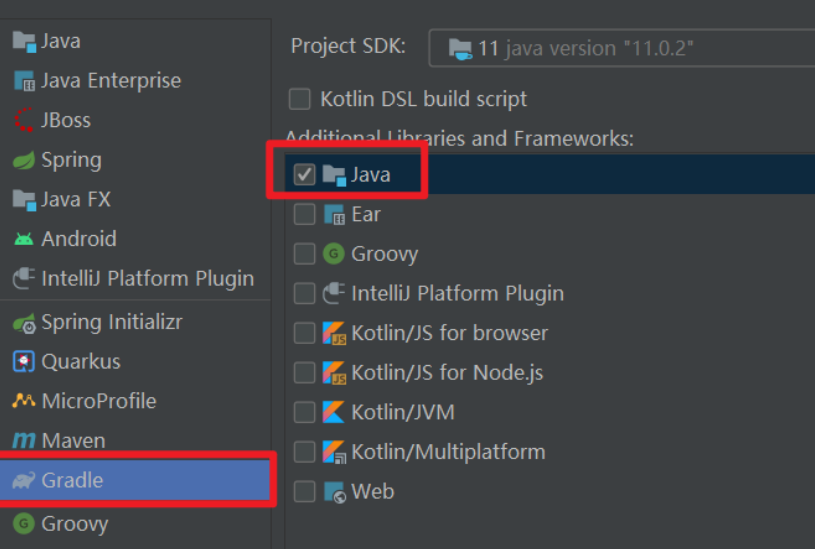

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...