Problem description

- Error 1

hive (edu)> insert into huanhuan values(1,'haoge');

Query ID = root_20240110071417_fe1517ad-3607-41f4-bdcf-d00b98ac443e

Total jobs = 1

Launching Job 1 out of 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create spark client.)'

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create spark client.

- Check the Hive log

2024-01-10T07:23:31,428 ERROR [1bd7b427-5c5f-4fea-83ad-88af3631a9a1 main] client.SparkClientImpl: Timed out waiting for client to connect.

Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Timed out waiting for client connection.

at io.netty.util.concurrent.AbstractFuture.get(AbstractFuture.java:41) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at org.apache.hive.spark.client.SparkClientImpl.<init>(SparkClientImpl.java:109) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hive.spark.client.SparkClientFactory.createClient(SparkClientFactory.java:80) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.createRemoteClient(RemoteHiveSparkClient.java:101) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.RemoteHiveSparkClient.<init>(RemoteHiveSparkClient.java:97) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.HiveSparkClientFactory.createHiveSparkClient(HiveSparkClientFactory.java:73) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionImpl.open(SparkSessionImpl.java:62) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.session.SparkSessionManagerImpl.getSession(SparkSessionManagerImpl.java:115) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.SparkUtilities.getSparkSession(SparkUtilities.java:126) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:103) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2183) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1839) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1526) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1227) ~[hive-exec-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:233) ~[hive-cli-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:184) ~[hive-cli-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:403) ~[hive-cli-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:821) ~[hive-cli-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759) ~[hive-cli-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686) ~[hive-cli-2.3.9.jar:2.3.9]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_181]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_181]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_181]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_181]

at org.apache.hadoop.util.RunJar.run(RunJar.java:244) ~[hadoop-common-2.9.2.jar:?]

at org.apache.hadoop.util.RunJar.main(RunJar.java:158) ~[hadoop-common-2.9.2.jar:?]

Caused by: java.util.concurrent.TimeoutException: Timed out waiting for client connection.

at org.apache.hive.spark.client.rpc.RpcServer$2.run(RpcServer.java:173) ~[hive-exec-2.3.9.jar:2.3.9]

at io.netty.util.concurrent.PromiseTask$RunnableAdapter.call(PromiseTask.java:38) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at io.netty.util.concurrent.ScheduledFutureTask.run(ScheduledFutureTask.java:120) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:399) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:464) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:131) ~[netty-all-4.0.52.Final.jar:4.0.52.Final]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_181]

- Check the Hadoop log

org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1651090254-192.168.128.101-1702919765365:blk_1073742858_2034 file=/spark/hive-jars/mesos-1.0.0-shaded-protobuf.jar

at org.apache.hadoop.hdfs.DFSInputStream.refetchLocations(DFSInputStream.java:1084)

at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:1068)

at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:1047)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:655)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:949)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:1004)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:93)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:67)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:128)

at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:392)

at org.apache.hadoop.yarn.util.FSDownload.copy(FSDownload.java:267)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:363)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:62)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)
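
The exception above names the HDFS file whose block could not be read. A minimal sketch of how one might pull that path out of the log line and ask HDFS about its block health follows. The helper name `missing_block_path` is hypothetical, and the `hdfs fsck` call only runs when the `hdfs` CLI is on the PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): read a log line on stdin and
# print the path that follows "file=" in a BlockMissingException message.
missing_block_path() {
  sed -n 's/.*BlockMissingException:.* file=\([^[:space:]]*\).*/\1/p'
}

# The exception line copied from the Hadoop log above.
log_line='org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1651090254-192.168.128.101-1702919765365:blk_1073742858_2034 file=/spark/hive-jars/mesos-1.0.0-shaded-protobuf.jar'

path=$(printf '%s\n' "$log_line" | missing_block_path)
echo "affected file: $path"

# Only query the cluster when the hdfs CLI is actually available.
if command -v hdfs >/dev/null 2>&1; then
  # fsck lists the file's blocks and the DataNodes holding their replicas.
  hdfs fsck "$path" -files -blocks -locations || true
fi
```

If fsck reports the block as missing or shows no live replica locations, the DataNodes that held it are probably down or not registered with the NameNode, which would fit the connection error that follows.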

- Error 2

[root@slave1 ~]# hadoop fs -ls /

ls: Call From slave1/192.168.128.101 to slave1:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
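
A refused connection to `slave1:9000` usually means nothing is listening on that address, most likely because the NameNode is not running. The sketch below extracts the target from the error text and probes the port. It is an illustration only: `refused_target` and `port_open` are hypothetical helper names, the probe relies on bash's `/dev/tcp` feature and the coreutils `timeout` command, and it assumes `slave1:9000` is the NameNode RPC address configured in `fs.defaultFS`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "host:port" target from a Hadoop
# "Call From ... to ... failed on connection exception" message on stdin.
refused_target() {
  sed -n 's/.*Call From [^ ]* to \([^ ]*\) failed on connection exception.*/\1/p'
}

# Hypothetical helper: succeed if a TCP connection to host ($1) and
# port ($2) can be opened within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Error text from the failed "hadoop fs -ls /" call, shortened.
err='ls: Call From slave1/192.168.128.101 to slave1:9000 failed on connection exception: java.net.ConnectException: Connection refused'

target=$(printf '%s\n' "$err" | refused_target)
host=${target%%:*}   # part before the colon
port=${target##*:}   # part after the colon
echo "endpoint: host=$host port=$port"

if port_open "$host" "$port"; then
  echo "something is listening on $target"
else
  echo "nothing reachable on $target; run jps on $host to see whether the NameNode is up"
fi
```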

- Solution

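# Stop the services first

# Run on slave1

stop-dfs.sh

# Run on slave3

stop-yarn.sh

# Then start them again

# Start HDFS on slave1

start-dfs.sh

# Start YARN on slave3

start-yarn.sh

# Use jps to check that the expected processes are running on all 3 machines

# Format the NameNode on slave1 (this erases the existing HDFS metadata)

hdfs namenode -format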
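
The jps check in the steps above can be scripted. The following is a rough sketch, assuming the usual daemon names (`NameNode`, `DataNode`, `NodeManager`) and a hypothetical helper called `missing_daemons`. Which daemons belong on which host depends on the cluster layout, which the original post does not spell out, so the lists passed in are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read jps output on stdin and print every expected
# daemon (passed as arguments) that does not appear in it.
missing_daemons() {
  local out d
  out=$(cat)
  for d in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the second field exactly.
    printf '%s\n' "$out" \
      | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}

# Made-up jps output for illustration; the PIDs are arbitrary.
sample='2101 DataNode
2345 NodeManager
2410 Jps'

missing=$(printf '%s\n' "$sample" | missing_daemons NameNode DataNode NodeManager)
echo "missing: $missing"

# On a real node, feed live jps output instead (assumed list for slave1).
if command -v jps >/dev/null 2>&1; then
  jps | missing_daemons NameNode DataNode
fi
```

Once every expected daemon is present, `hdfs dfsadmin -report` shows how many DataNodes have registered with the NameNode, and `hadoop fs -ls /` should no longer fail with a refused connection.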


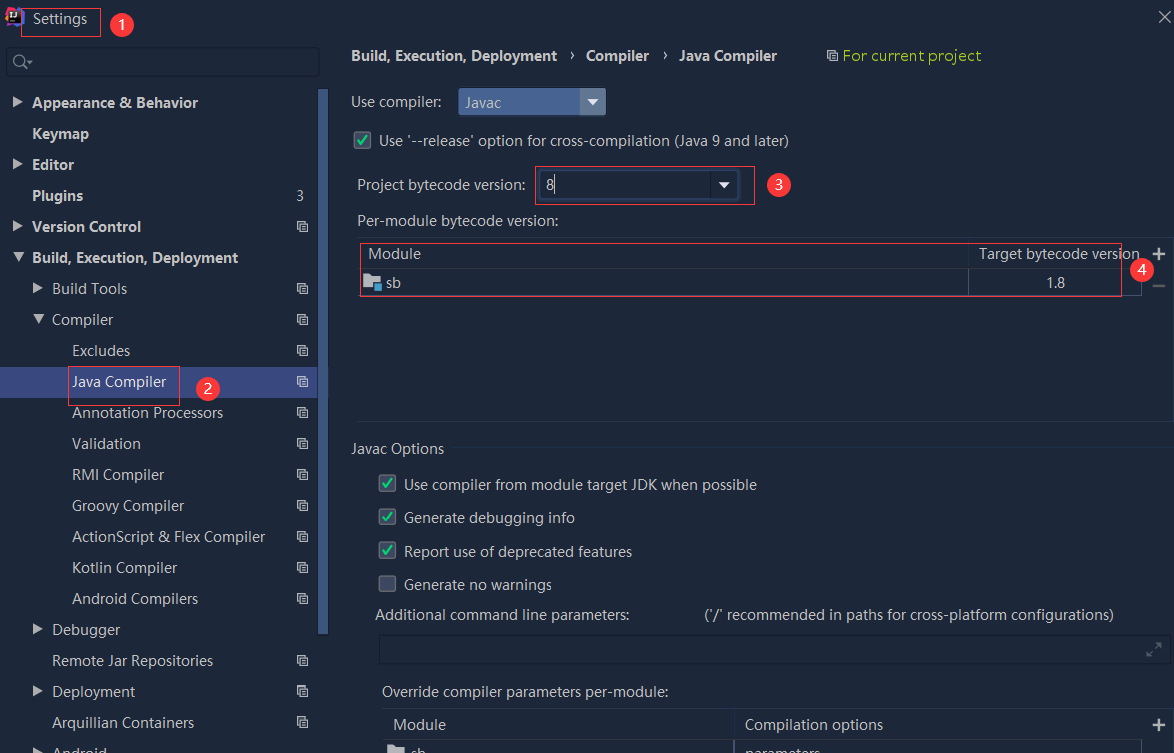

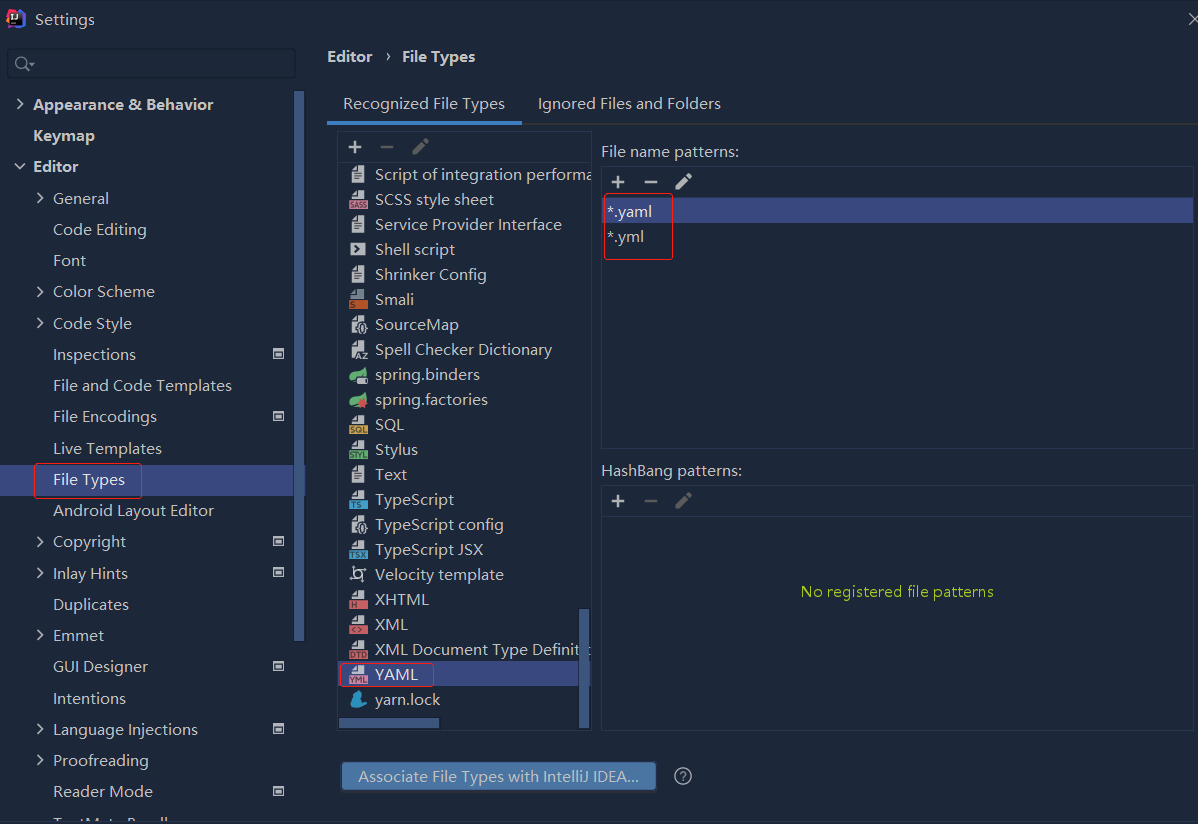

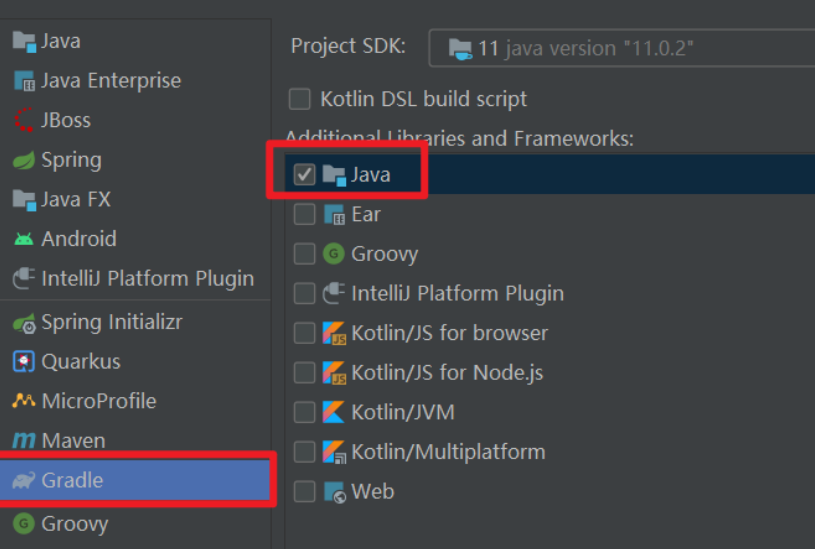
