问题描述

- 远程新建表

CREATE TABLE `UserBehavior` (

`id` int NOT NULL AUTO_INCREMENT,

`user_id` varchar(45) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`item_id` varchar(45) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`category_id` varchar(45) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`behavior_id` varchar(45) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`timestamp` varchar(45) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 10660001 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = DYNAMIC;

-

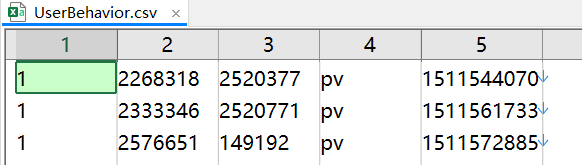

准备csv文件

-

将csv文件导出指定行数,文件会小点,如果直接导入超大文件,加载时间过长,会导入失败

import os

import pandas as pd

# 每份csv文件截取100万行,保存到当前路径

chunksize = 1000000

# csv路径

filename = r"C:\work\mysql\UserBehavior.csv"

data1 = pd.read_csv(filename, chunksize=chunksize, header=None, sep=',')

num = 0

for chunk in data1:

num += len(chunk)

head, tail = os.path.split(filename)

data2 = pd.read_csv(filename, chunksize=chunksize, header=None, sep=',')

# 保存了多少份csv文件

i = 0

for chunk in data2:

chunk.to_csv('{0}\{1}{2}'.format(head, i, tail), header=True, index=False)

print('保存第{0}个数据'.format(i))

i += 1

- 测试1

# 本地进入UserBehavior.csv所在文件夹,右键打开Git Bash

# 执行如下代码,导入成功,导入速度极快,100w条数据(36MB)导入花费6秒

ychen@DESKTOP-49HGBQ1 MINGW64 /c/work/mysql

$ mysqlimport -h192.168.128.80 -P3306 -uroot -p123456 db_test --fields-terminated-by=',' UserBehavior.csv --columns='user_id,item_id,category_id,behavior_id,timestamp' --local

mysqlimport: [Warning] Using a password on the command line interface can be insecure.

db_test.UserBehavior: Records: 1000001 Deleted: 0 Skipped: 0 Warnings: 0

- 测试2

# 打开cmd,登录mysql

C:\Users\ychen>mysql -h 192.168.128.80 -P 3306 -u root -p --local-infile

Enter password: **********

# 切换库

mysql> use db_test

Database changed

# 执行如下代码,只导入成功9739条数据

mysql> LOAD DATA LOCAL INFILE 'C:\\work\\mysql\\UserBehavior.csv'

-> INTO TABLE UserBehavior

-> FIELDS TERMINATED BY ','

-> ENCLOSED BY '"'

-> LINES TERMINATED BY '\n'

-> IGNORE 1 ROWS;

Query OK, 9739 rows affected, 65535 warnings (3.76 sec)

Records: 1000000 Deleted: 0 Skipped: 990261 Warnings: 1990261

# 复制如下代码

LOAD DATA LOCAL INFILE 'C:\\work\\mysql\\UserBehavior.csv'

INTO TABLE UserBehavior

FIELDS TERMINATED BY ','

ENCLOSED BY '"'

LINES TERMINATED BY '\n'

IGNORE 1 ROWS;

- 连接失败,防火墙开放端口

firewall-cmd --zone=public --add-port=3306/tcp --permanent

firewall-cmd --reload

- 报错1114

[root@hadoop01 ~]# whereis my.cnf

my: /etc/my.cnf

[root@hadoop01 ~]# cd /etc

[root@hadoop01 etc]# vim my.cnf

# 添加或修改如下

tmp_table_size=256M

max_heap_table_size=256M

# 重启

[root@hadoop01 etc]# systemctl restart mysqld.service

# 查看

show variables like '%max_heap_table_size%';

show variables like '%tmp_table_size%';

- 报错1130,修改为允许任意ip连接

mysql -u root -p

use mysql;

update user set host = '%' where user ='root';

flush privileges;

select host, user from user

- 报错

> 2 - File 'C:xxx' not found (Errcode: 2 "No such file or directory")

# 将单斜杠改为双斜杠

- 报错

ychen@DESKTOP-49HGBQ1 MINGW64 /c/work/mysql

$ mysqlimport -h192.168.128.80 -P3306 -uroot -pvmc79m8035 db_test --fields-terminated-by=',' UserBehavior.csv --columns='user_id,item_id,category_id,behavior_id,timestamp' --local

mysqlimport: [Warning] Using a password on the command line interface can be insecure.

mysqlimport: Error: 3948, Loading local data is disabled; this must be enabled on both the client and server sides, when using table: UserBehavior

# 修改权限

show variables like 'local_infile';

set global local_infile = 1;

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

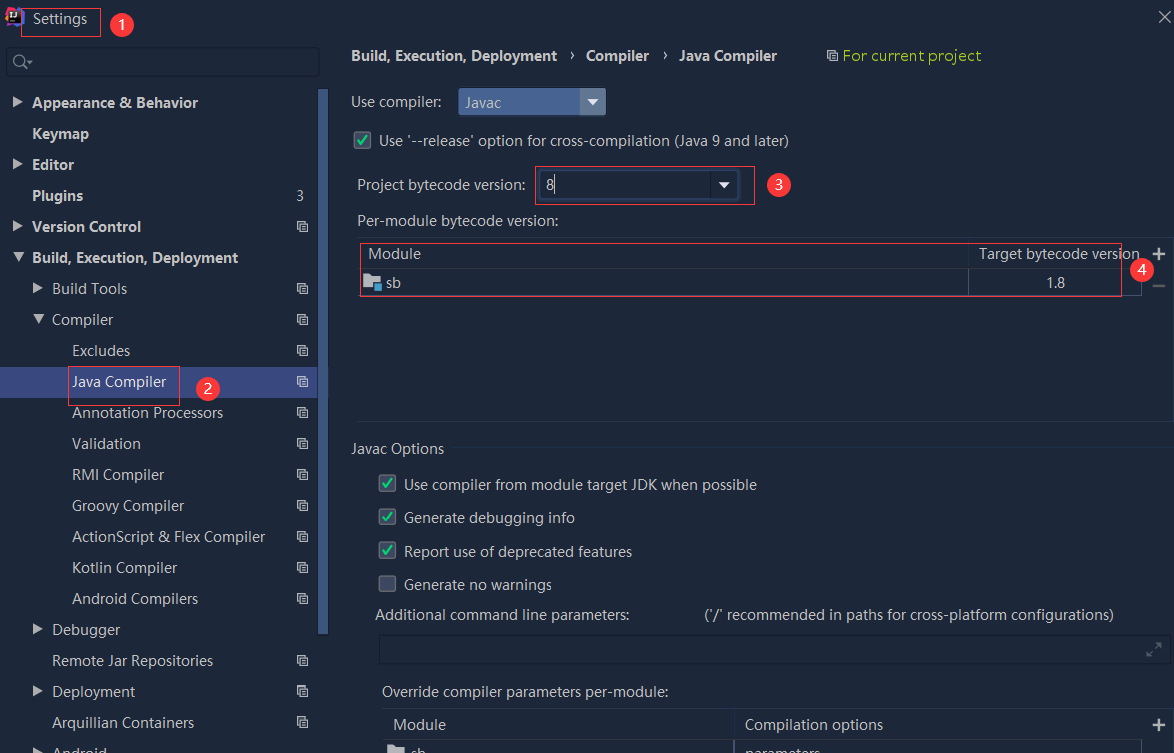

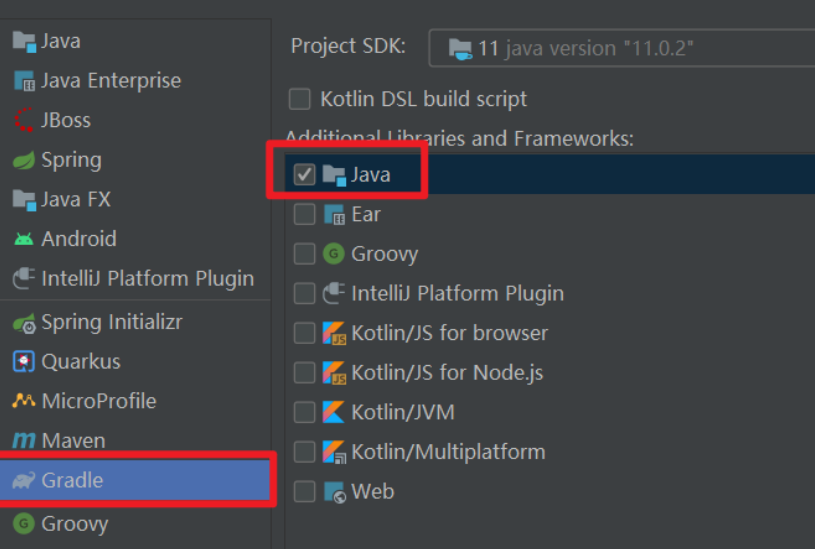

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

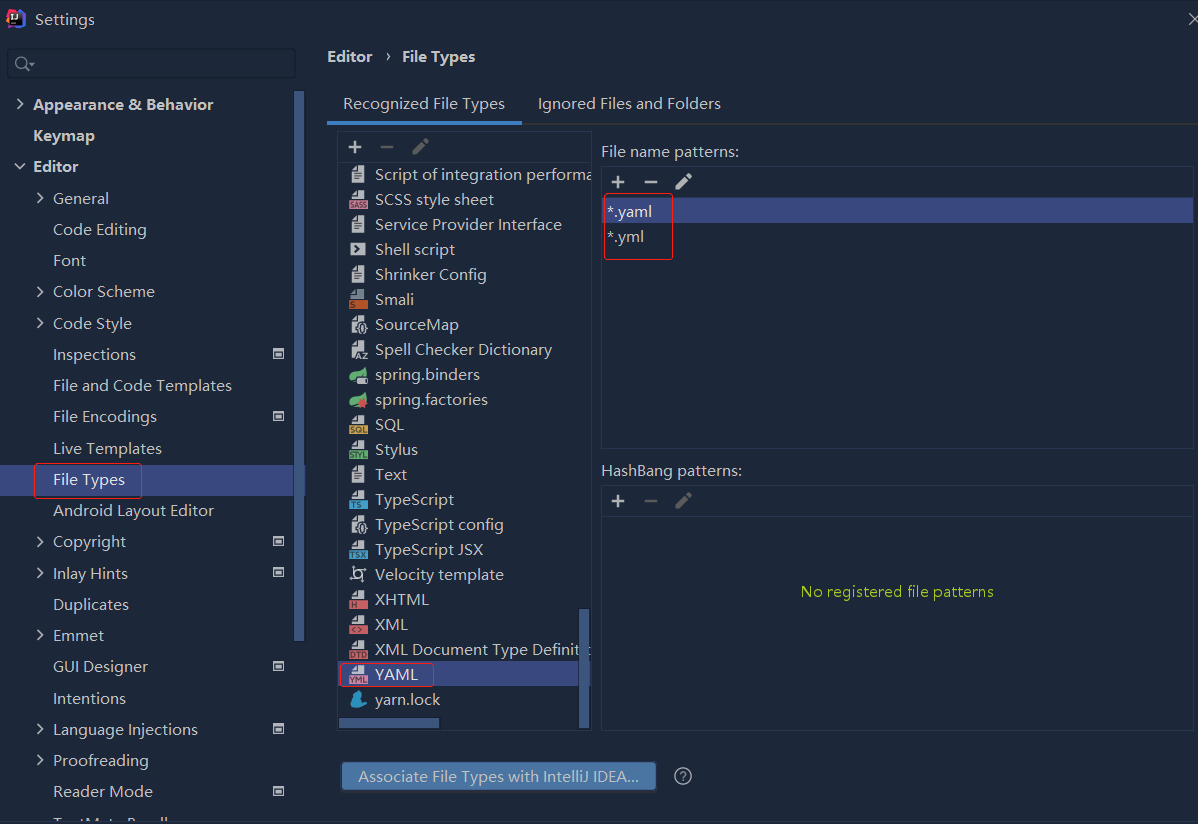

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...