Problem description

- Error 1:

The MySQL username, password, database name, IP, and port are all correct, but the connection still fails:

2023-12-18 23:49:57.550 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-18 23:49:57.551 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-18 23:49:57.551 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-18 23:49:57.553 [main] INFO JobContainer - Set jobId = 0

2023-12-18 23:49:57.927 [job-0] WARN DBUtil - test connection of [jdbc:mysql://slave1:3306/edu2077] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

2023-12-18 23:49:57.930 [job-0] ERROR RetryUtil - Exception when calling callable, 异常Msg:DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。

java.lang.Exception: DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。

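- Solution

# Go to the directory below and check that it contains a MySQL driver JAR matching your MySQL server version; if it does not, copy one into it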

[root@slave1 libs]# pwd

/opt/software/datax/plugin/reader/mysqlreader/libs

[root@slave1 libs]# ls

commons-collections-3.0.jar datax-common-0.0.1-SNAPSHOT.jar hamcrest-core-1.3.jar mysql-connector-java-8.0.20.jar

commons-io-2.4.jar druid-1.0.15.jar logback-classic-1.0.13.jar plugin-rdbms-util-0.0.1-SNAPSHOT.jar

commons-lang3-3.3.2.jar fastjson-1.1.46.sec01.jar logback-core-1.0.13.jar slf4j-api-1.7.10.jar

commons-math3-3.1.1.jar guava-r05.jar mysql-connector-java-5.1.34.jar
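The directory check above can also be scripted. The following is a minimal sketch, assuming driver JARs follow the `mysql-connector-java-<version>.jar` naming seen in the listing. The helper name `find_mysql_drivers` is hypothetical and not part of DataX.

```python
from pathlib import Path


def find_mysql_drivers(libs_dir):
    """Return the sorted file names of MySQL driver JARs found in libs_dir."""
    # Assumption: driver JARs are named mysql-connector-java-<version>.jar,
    # as in the directory listing above.
    pattern = "mysql-connector-java-*.jar"
    return sorted(p.name for p in Path(libs_dir).glob(pattern))


if __name__ == "__main__":
    # Path taken from the listing above; adjust it to your own installation.
    libs = "/opt/software/datax/plugin/reader/mysqlreader/libs"
    drivers = find_mysql_drivers(libs)
    if drivers:
        print("Found drivers:", ", ".join(drivers))
    else:
        print("No MySQL driver JAR found in", libs)
```

If the list is empty, or contains only a driver older than your server expects, add the appropriate JAR to that directory and rerun the job.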

- Error 2:

ERROR JobContainer - Exception when job run

2023-12-18 23:54:06.192 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-18 23:54:06.196 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-18 23:54:06.197 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-18 23:54:06.199 [main] INFO JobContainer - Set jobId = 0

2023-12-18 23:54:06.220 [job-0] ERROR JobContainer - Exception when job run

java.lang.ClassCastException: java.lang.String cannot be cast to java.util.List

at com.alibaba.datax.common.util.Configuration.getList(Configuration.java:426) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.dealJdbcAndTable(OriginalConfPretreatmentUtil.java:84) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.simplifyConf(OriginalConfPretreatmentUtil.java:59) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.doPretreatment(OriginalConfPretreatmentUtil.java:33) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Job.init(CommonRdbmsReader.java:55) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.reader.mysqlreader.MysqlReader$Job.init(MysqlReader.java:37) ~[mysqlreader-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.initJobReader(JobContainer.java:673) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:303) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.start(Engine.java:92) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.entry(Engine.java:171) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.main(Engine.java:204) [datax-core-0.0.1-SNAPSHOT.jar:na]

2023-12-18 23:54:06.232 [job-0] INFO StandAloneJobContainerCommunicator - Total 0 records, 0 bytes | Speed 0B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2023-12-18 23:54:06.234 [job-0] ERROR Engine -

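- Solution: check that each field in the job configuration has the type the plugin expects. The trace fails in Configuration.getList, called from dealJdbcAndTable, so a reader field that must be a JSON array (most likely jdbcUrl or table) was probably written as a plain string.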
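A pre-flight check can catch this kind of type mismatch before DataX runs. The following is a minimal sketch, assuming the usual mysqlreader layout (`job.content[].reader.parameter.connection[]`) and that `jdbcUrl`, `table`, and `querySql` are the keys expected to hold arrays. The function name `find_non_list_fields` and the sample job are hypothetical.

```python
import json

# Assumption: these reader connection keys must be JSON arrays.
LIST_KEYS = ("jdbcUrl", "table", "querySql")


def find_non_list_fields(job_conf):
    """Return (content index, connection index, key) for every list-typed
    key that is present but does not hold a list."""
    problems = []
    for ci, content in enumerate(job_conf["job"]["content"]):
        connections = content["reader"]["parameter"].get("connection", [])
        for ni, conn in enumerate(connections):
            for key in LIST_KEYS:
                if key in conn and not isinstance(conn[key], list):
                    problems.append((ci, ni, key))
    return problems


# Hypothetical job fragment: jdbcUrl is a plain string, so it gets flagged.
SAMPLE_JOB = json.loads("""
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "connection": [
              {
                "jdbcUrl": "jdbc:mysql://slave1:3306/edu2077",
                "table": ["base_province"]
              }
            ]
          }
        }
      }
    ]
  }
}
""")

if __name__ == "__main__":
    for ci, ni, key in find_non_list_fields(SAMPLE_JOB):
        print(f"content[{ci}].reader connection[{ni}]: {key} should be an array")
```

Wrapping the flagged value in square brackets, for example `"jdbcUrl": ["jdbc:mysql://slave1:3306/edu2077"]`, makes the check pass.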

- Error 3:

Description:[您配置凌乱了. 不能同时既配置table又配置querySql] (your configuration is mixed up: table and querySql cannot both be configured)

2023-12-19 00:04:44.350 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-19 00:04:44.351 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-19 00:04:44.351 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-19 00:04:44.353 [main] INFO JobContainer - Set jobId = 0

2023-12-19 00:04:44.379 [job-0] ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[DBUtilErrorCode-08], Description:[您配置凌乱了. 不能同时既配置table又配置querySql]. - 您的配置凌乱了. 因为datax不能同时既配置table又配置querySql.请检查您的配置并作出修改.

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.recognizeTableOrQuerySqlMode(OriginalConfPretreatmentUtil.java:257) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.simplifyConf(OriginalConfPretreatmentUtil.java:56) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.util.OriginalConfPretreatmentUtil.doPretreatment(OriginalConfPretreatmentUtil.java:33) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader$Job.init(CommonRdbmsReader.java:55) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.reader.mysqlreader.MysqlReader$Job.init(MysqlReader.java:37) ~[mysqlreader-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.initJobReader(JobContainer.java:673) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:303) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.start(Engine.java:92) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.entry(Engine.java:171) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.main(Engine.java:204) [datax-core-0.0.1-SNAPSHOT.jar:na]

2023-12-19 00:04:44.383 [job-0] INFO StandAloneJobContainerCommunicator - Total 0 records, 0 bytes | Speed 0B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2023-12-19 00:04:44.384 [job-0] ERROR Engine -

- Solution: configure either querySql or table, and delete the other.
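The same rule can be checked before submitting the job. The following is a minimal sketch, assuming `table` and `querySql` both live inside each entry of the reader's `connection` array. The function name `find_mixed_connections` and the sample job are hypothetical.

```python
import json


def find_mixed_connections(job_conf):
    """Return (content index, connection index) for every reader connection
    that sets both table and querySql."""
    mixed = []
    for ci, content in enumerate(job_conf["job"]["content"]):
        connections = content["reader"]["parameter"].get("connection", [])
        for ni, conn in enumerate(connections):
            if conn.get("table") and conn.get("querySql"):
                mixed.append((ci, ni))
    return mixed


# Hypothetical job fragment that sets both keys on the same connection.
SAMPLE_JOB = json.loads("""
{
  "job": {
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "connection": [
              {
                "jdbcUrl": ["jdbc:mysql://slave1:3306/edu2077"],
                "table": ["base_province"],
                "querySql": ["select id, name from base_province"]
              }
            ]
          }
        }
      }
    ]
  }
}
""")

if __name__ == "__main__":
    for ci, ni in find_mixed_connections(SAMPLE_JOB):
        print(f"content[{ci}].reader connection[{ni}] sets both table and querySql")
```

Removing either key from the flagged connection leaves a configuration the check accepts.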

- Error 4:

ERROR HdfsWriter$Job - 请检查参数path:[${targetdir}],需要配置为绝对路径 (check the path parameter [${targetdir}]; it must be an absolute path)

2023-12-19 02:09:06.005 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-19 02:09:06.007 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-19 02:09:06.007 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-19 02:09:06.009 [main] INFO JobContainer - Set jobId = 0

2023-12-19 02:09:07.138 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://localhost:3306/edu2077?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2023-12-19 02:09:07.192 [job-0] INFO OriginalConfPretreatmentUtil - table:[base_province] has columns:[id,name,region_id,area_code,iso_code,iso_3166_2].

2023-12-19 02:09:07.226 [job-0] ERROR HdfsWriter$Job - 请检查参数path:[${targetdir}],需要配置为绝对路径

2023-12-19 02:09:07.231 [job-0] ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-02], Description:[您填写的参数值不合法.]. - 请检查参数path:[${targetdir}],需要配置为绝对路径

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.writer.hdfswriter.HdfsWriter$Job.validateParameter(HdfsWriter.java:63) ~[hdfswriter-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.writer.hdfswriter.HdfsWriter$Job.init(HdfsWriter.java:42) ~[hdfswriter-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.initJobWriter(JobContainer.java:704) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:304) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.start(Engine.java:92) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.entry(Engine.java:171) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.main(Engine.java:204) [datax-core-0.0.1-SNAPSHOT.jar:na]

2023-12-19 02:09:07.234 [job-0] INFO StandAloneJobContainerCommunicator - Total 0 records, 0 bytes | Speed 0B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2023-12-19 02:09:07.246 [job-0] ERROR Engine -

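- Solution

# Create the target directory in HDFS

hdfs dfs -mkdir /base_province

# Verify that it was created

hdfs dfs -ls -R /

# Set path to that literal absolute path in place of the unresolved ${targetdir} placeholder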

"path":"/base_province",

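The log for this error shows path still holding the literal text `${targetdir}`, which means the placeholder was never substituted. The following is a minimal sketch of a check for both failure modes, an unresolved placeholder and a relative path. The function name `check_hdfs_path` is hypothetical.

```python
import re

# Matches ${name} style placeholders that were left unsubstituted.
PLACEHOLDER = re.compile(r"\$\{[^}]+\}")


def check_hdfs_path(path):
    """Return a short description of the problem with path, or None if
    the value looks like a usable absolute path."""
    match = PLACEHOLDER.search(path)
    if match:
        return "unresolved placeholder " + match.group(0)
    if not path.startswith("/"):
        return "path is not absolute"
    return None


if __name__ == "__main__":
    for candidate in ("${targetdir}", "base_province", "/base_province"):
        print(candidate, "->", check_hdfs_path(candidate) or "ok")
```

If you would rather keep the placeholder than hard-code the path, `datax.py` accepts parameters on the command line, for example `-p "-Dtargetdir=/base_province"`. Treat that as an alternative to verify against your DataX version, not as part of the fix described here.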
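- Error 5:

您配置的path: [/base_province/base_province.gz] 不是一个合法的目录, 请您注意文件重名, 不合法目录名等情况. (the configured path [/base_province/base_province.gz] is not a valid directory; watch for file-name clashes, invalid directory names, and similar problems)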

2023-12-19 02:42:02.102 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-19 02:42:02.104 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-19 02:42:02.104 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-19 02:42:02.106 [main] INFO JobContainer - Set jobId = 0

2023-12-19 02:42:02.983 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://slave1:3306/edu2077?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2023-12-19 02:42:03.039 [job-0] INFO OriginalConfPretreatmentUtil - table:[base_province] has columns:[id,name,region_id,area_code,iso_code,iso_3166_2].

十二月 19, 2023 2:42:03 上午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-12-19 02:42:04.387 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2023-12-19 02:42:04.387 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

2023-12-19 02:42:04.387 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do prepare work .

2023-12-19 02:42:04.481 [job-0] ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-02], Description:[您填写的参数值不合法.]. - 您配置的path: [/base_province/base_province.gz] 不是一个合法的目录, 请您注意文件重名, 不合法目录名等情况.

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26) ~[datax-common-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.writer.hdfswriter.HdfsWriter$Job.prepare(HdfsWriter.java:153) ~[hdfswriter-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.prepareJobWriter(JobContainer.java:724) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.prepare(JobContainer.java:309) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:115) ~[datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.start(Engine.java:92) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.entry(Engine.java:171) [datax-core-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.Engine.main(Engine.java:204) [datax-core-0.0.1-SNAPSHOT.jar:na]

2023-12-19 02:42:04.486 [job-0] INFO StandAloneJobContainerCommunicator - Total 0 records, 0 bytes | Speed 0B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%

2023-12-19 02:42:04.591 [job-0] ERROR Engine -

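- Solution: set path to a directory that already exists in HDFS. path must name a directory; here it pointed at /base_province/base_province.gz, which is not one.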
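Whether a path is an existing HDFS directory can be checked with `hdfs dfs -test -d`, which exits with status 0 when it is. The following is a minimal sketch that wraps that command, assuming the `hdfs` CLI is on the PATH. The function name `hdfs_is_directory` is hypothetical, and the `run` parameter exists only so the command runner can be swapped out when testing.

```python
import subprocess


def hdfs_is_directory(path, run=subprocess.run):
    """Return True if `hdfs dfs -test -d <path>` exits with status 0,
    meaning the path exists in HDFS and is a directory."""
    result = run(["hdfs", "dfs", "-test", "-d", path])
    return result.returncode == 0


# Example use on a machine with the Hadoop client installed:
#   hdfs_is_directory("/base_province")
#   hdfs_is_directory("/base_province/base_province.gz")
```

Run this against the value you plan to put in path. A result of False means hdfswriter would most likely reject it in the same way.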

- Error 6:

Call From slave1/192.168.128.101 to slave1:8020 failed on connection exception

2023-12-19 02:14:41.588 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2023-12-19 02:14:41.590 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2023-12-19 02:14:41.590 [main] INFO JobContainer - DataX jobContainer starts job.

2023-12-19 02:14:41.592 [main] INFO JobContainer - Set jobId = 0

2023-12-19 02:14:42.626 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://localhost:3306/edu2077?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2023-12-19 02:14:42.680 [job-0] INFO OriginalConfPretreatmentUtil - table:[base_province] has columns:[id,name,region_id,area_code,iso_code,iso_3166_2].

十二月 19, 2023 2:14:43 上午 org.apache.hadoop.util.NativeCodeLoader <clinit>

警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-12-19 02:14:44.326 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2023-12-19 02:14:44.327 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

2023-12-19 02:14:44.327 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do prepare work .

2023-12-19 02:14:44.372 [job-0] ERROR HdfsWriter$Job - 判断文件路径[message:filePath =/base_province]是否存在时发生网络IO异常,请检查您的网络是否正常!

2023-12-19 02:14:44.396 [job-0] ERROR JobContainer - Exception when job run

com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-06], Description:[与HDFS建立连接时出现IO异常.]. - java.net.ConnectException: Call From slave1/192.168.128.101 to slave1:8020 failed on connection exception: java.net.ConnectException: 拒绝连接; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:792)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:732)

at org.apache.hadoop.ipc.Client.call(Client.java:1480)

at org.apache.hadoop.ipc.Client.call(Client.java:1407)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:771)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy11.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2116)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1305)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1301)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1317)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1424)

at com.alibaba.datax.plugin.writer.hdfswriter.HdfsHelper.isPathexists(HdfsHelper.java:156)

at com.alibaba.datax.plugin.writer.hdfswriter.HdfsWriter$Job.prepare(HdfsWriter.java:151)

at com.alibaba.datax.core.job.JobContainer.prepareJobWriter(JobContainer.java:724)

at com.alibaba.datax.core.job.JobContainer.prepare(JobContainer.java:309)

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:115)

at com.alibaba.datax.core.Engine.start(Engine.java:92)

at com.alibaba.datax.core.Engine.entry(Engine.java:171)

at com.alibaba.datax.core.Engine.main(Engine.java:204)

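- Solution

# Check the core-site.xml written when the Hadoop cluster was set up

<configuration>

<!-- Address of the HDFS NameNode -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://slave1:9000</value>

</property>

</configuration>

# In hdfswriter, change the defaultFS port to 9000 so that it matches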

"defaultFS":"hdfs://slave1:9000",

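The comparison between core-site.xml and the job file can be automated. The following is a minimal sketch, assuming the writer's settings are available as a dictionary with a `defaultFS` key. The function names `read_default_fs` and `default_fs_matches` are hypothetical, and the XML sample mirrors the excerpt above.

```python
import xml.etree.ElementTree as ET


def read_default_fs(core_site_xml):
    """Return the fs.defaultFS value from core-site.xml text, or None."""
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == "fs.defaultFS":
            return prop.findtext("value", "").strip()
    return None


def default_fs_matches(core_site_xml, writer_parameter):
    """Return True if the hdfswriter defaultFS equals the cluster's value."""
    return read_default_fs(core_site_xml) == writer_parameter.get("defaultFS")


# Sample core-site.xml content mirroring the excerpt above.
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://slave1:9000</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    writer = {"defaultFS": "hdfs://slave1:8020"}  # value that caused Error 6
    print("cluster:", read_default_fs(CORE_SITE))
    print("matches:", default_fs_matches(CORE_SITE, writer))
```

A mismatch such as port 8020 in the job against 9000 in the cluster is exactly the situation that produced the connection refusal in this error.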

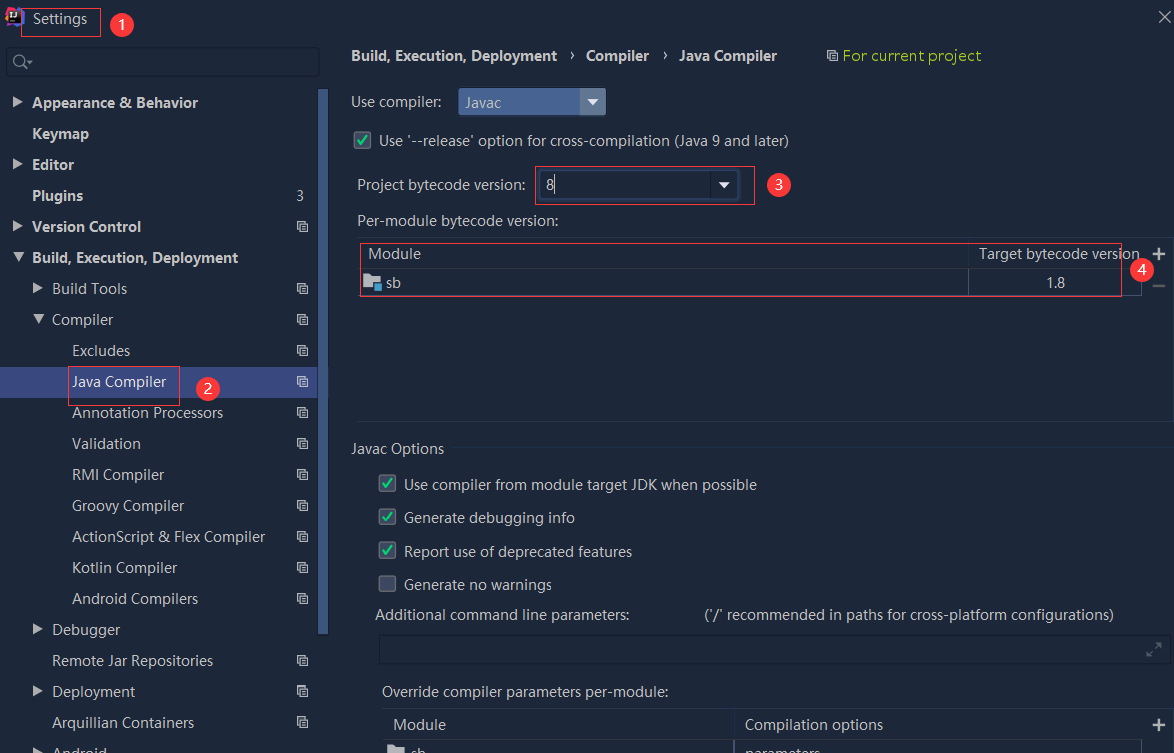

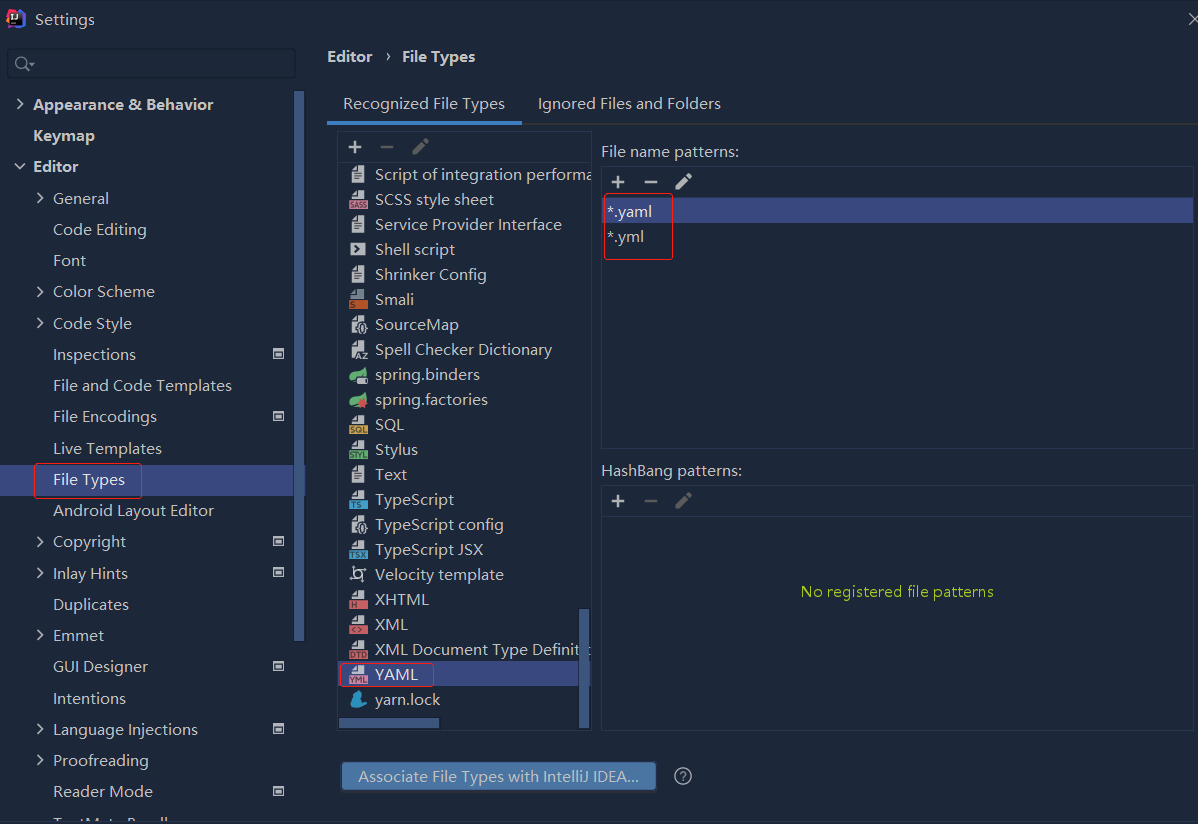

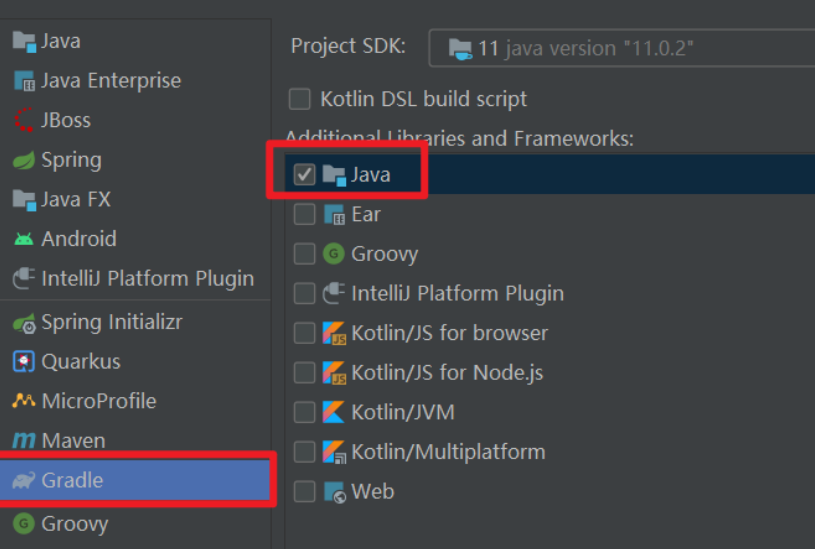
