Problem description

I'm trying to build an Evaluator for my model. So far all the other components work fine, but when I try this configuration:

import tensorflow_model_analysis as tfma

eval_config = tfma.EvalConfig(
    model_specs=[tfma.ModelSpec(label_key='Category')],
    metrics_specs=tfma.metrics.default_multi_class_classification_specs(),
    slicing_specs=[
        tfma.SlicingSpec(),  # overall (unsliced) metrics
        tfma.SlicingSpec(feature_keys=['Category']),  # metrics per category
    ])

to create this Evaluator:

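from tfx.components import Evaluator, ResolverNode
from tfx.dsl.experimental import latest_blessed_model_resolver
from tfx.types import Channel
from tfx.types.standard_artifacts import Model, ModelBlessing

# Resolve the latest blessed model to use as the baseline.
model_resolver = ResolverNode(
    instance_name='latest_blessed_model_resolver',
    resolver_class=latest_blessed_model_resolver.LatestBlessedModelResolver,
    model=Channel(type=Model),
    model_blessing=Channel(type=ModelBlessing))
context.run(model_resolver)

evaluator = Evaluator(
    examples=example_gen.outputs['examples'],
    model=trainer.outputs['model'],
    baseline_model=model_resolver.outputs['model'],
    eval_config=eval_config)
context.run(evaluator)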

I get:

[...]

IndexError Traceback (most recent call last)

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/common.cpython-37m-darwin.so in apache_beam.runners.common.DoFnRunner.process()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/common.cpython-37m-darwin.so in apache_beam.runners.common.PerWindowInvoker.invoke_process()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/common.cpython-37m-darwin.so in apache_beam.runners.common.PerWindowInvoker._invoke_process_per_window()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/common.cpython-37m-darwin.so in apache_beam.runners.common._OutputProcessor.process_outputs()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/worker/operations.cpython-37m-darwin.so in apache_beam.runners.worker.operations.SingletonConsumerSet.receive()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/worker/operations.cpython-37m-darwin.so in apache_beam.runners.worker.operations.PGBKCVOperation.process()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/apache_beam/runners/worker/operations.cpython-37m-darwin.so in apache_beam.runners.worker.operations.PGBKCVOperation.process()

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/tensorflow_model_analysis/evaluators/metrics_and_plots_evaluator_v2.py in add_input(self,accumulator,element)

355 for i,(c,a) in enumerate(zip(self._combiners,accumulator)):

--> 356 result = c.add_input(a,get_combiner_input(elements[0],i))

357 for e in elements[1:]:

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/tensorflow_model_analysis/metrics/calibration_histogram.py in add_input(self,element)

141 flatten=True,

--> 142 class_weights=self._class_weights)):

143 example_weight = float(example_weight)

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/tensorflow_model_analysis/metrics/metric_util.py in to_label_prediction_example_weight(inputs,eval_config,model_name,output_name,sub_key,class_weights,flatten,squeeze,allow_none)

283 elif sub_key.top_k is not None:

--> 284 label,prediction = select_top_k(sub_key.top_k,label,prediction)

285

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/tensorflow_model_analysis/metrics/metric_util.py in select_top_k(top_k,labels,predictions,scores)

621 if not labels.shape or labels.shape[-1] == 1:

--> 622 labels = one_hot(labels,predictions)

623

/opt/miniconda3/envs/archiving/lib/python3.7/site-packages/tensorflow_model_analysis/metrics/metric_util.py in one_hot(tensor,target)

671 # indexing the -1 and then removing it after.

--> 672 tensor = np.delete(np.eye(target.shape[-1] + 1)[tensor],-1,axis=-1)

673 return tensor.reshape(target.shape)

IndexError: arrays used as indices must be of integer (or boolean) type

During handling of the above exception, another exception occurred:

[...]

IndexError: arrays used as indices must be of integer (or boolean) type [while running 'ExtractEvaluateAndWriteResults/ExtractAndEvaluate/EvaluateMetricsAndPlots/ComputeMetricsAndPlots()/ComputePerSlice/ComputeUnsampledMetrics/CombinePerSliceKey/WindowIntoDiscarding']

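I assumed the problem was in my config, but I can't see what is wrong with it.

I'm using this dataset: TF Github. I followed this notebook: Kaggle - BBC News Classification, so that I could serve my model with TensorFlow Serving.

Note: the model I'm using is the following: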

def _build_keras_model(vectorize_layer: TextVectorization) -> tf.keras.Model:
    # A single raw string per example; the vectorization layer tokenizes it.
    input_layer = tf.keras.layers.Input(shape=(1,), dtype=tf.string)
    deep = vectorize_layer(input_layer)
    deep = layers.Embedding(_max_features + 1, _embedding_dim)(deep)
    deep = layers.Dropout(0.5)(deep)
    deep = layers.GlobalAveragePooling1D()(deep)
    deep = layers.Dropout(0.5)(deep)
    # Five output classes, one per document category.
    output = layers.Dense(5, activation=tf.nn.softmax)(deep)

    model = tf.keras.Model(input_layer, output)
    # Note: the output layer already applies softmax, so from_logits=True is
    # inconsistent with it; from_logits=False would match this output layer.
    model.compile(
        loss=losses.SparseCategoricalCrossentropy(from_logits=True),
        optimizer='adam',
        metrics=['accuracy'])
    model.summary(print_fn=absl.logging.info)
    return model

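Solution

I figured it out. The problem was that the labels (document categories) in the dataset are strings (e.g. "sport", "business", etc.). To encode them as integers, I preprocessed them with the Transform component.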
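
To make the encoding step concrete, here is a minimal pure-Python sketch of mapping string labels to integer ids. It is not the actual preprocessing code, which is not shown in this post: the category names, the sorted ordering of the vocabulary, and the helper names `build_vocabulary` and `encode_labels` are all assumptions made for illustration.

```python
# Illustrative category names (an assumption); the real vocabulary is
# whatever the Transform component computes from the dataset.
CATEGORIES = ['business', 'entertainment', 'politics', 'sport', 'tech']


def build_vocabulary(labels):
    """Map each distinct label string to an integer id, in sorted order."""
    return {label: index for index, label in enumerate(sorted(set(labels)))}


def encode_labels(labels, vocabulary):
    """Replace every string label with its integer id."""
    return [vocabulary[label] for label in labels]


raw_labels = ['sport', 'business', 'tech', 'sport']
vocabulary = build_vocabulary(CATEGORIES)
encoded = encode_labels(raw_labels, vocabulary)
print(encoded)  # [3, 0, 4, 3]
```

The point is only that the model is trained against integer ids like these, while the raw examples still carry the original strings.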

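However, when building the Evaluator component, I passed it the output of the ExampleGen component, where the data has not been processed at all. The Evaluator was therefore trying to match the raw string labels from ExampleGen against the integer class indices the model was trained on. That is what the traceback shows: one_hot uses the label array as an index into np.eye(...), and NumPy rejects a non-integer index array with the IndexError above.

So, to fix it, I simply did this: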
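
The failure can be reproduced outside the pipeline with a small NumPy sketch. The `one_hot` function below copies the two lines shown in the traceback (metric_util.py, lines 672-673); the sample prediction vector and label values are made up for illustration.

```python
import numpy as np


def one_hot(tensor, target):
    # Same expression as in the traceback: the label array is used as an
    # index into an identity matrix, then the extra last column is dropped.
    tensor = np.delete(np.eye(target.shape[-1] + 1)[tensor], -1, axis=-1)
    return tensor.reshape(target.shape)


# Made-up softmax output over 5 classes.
predictions = np.array([0.1, 0.6, 0.1, 0.1, 0.1])

# Integer label (what Transform produces): indexing works.
encoded = one_hot(np.array([1]), predictions)

# String label (what raw ExampleGen output contains): indexing fails.
error_message = None
try:
    one_hot(np.array(['sport']), predictions)
except IndexError as err:
    error_message = str(err)

print(encoded)
print(error_message)
```

With an integer label the call returns a one-hot row; with a string label NumPy raises the same kind of IndexError as in the Evaluator run.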

model_resolver = ResolverNode(
    instance_name='latest_blessed_model_resolver',
    resolver_class=latest_blessed_model_resolver.LatestBlessedModelResolver,
    model=Channel(type=Model),
    model_blessing=Channel(type=ModelBlessing))
context.run(model_resolver)

evaluator = Evaluator(
    examples=transform.outputs['transformed_examples'],
    model=trainer.outputs['model'],
    baseline_model=model_resolver.outputs['model'],
    eval_config=eval_config)
context.run(evaluator)

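I used the examples from the Transform component. Of course, I also changed the label key in the config to match the label name produced by the Transform component.

I don't know whether there is a "cleaner" way to do this (and if I'm doing it wrong, please correct me!)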
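
For reference, the adjusted config might look roughly like the fragment below. The transformed label name is not given in this post, so 'Category_xf' is a hypothetical placeholder. Pointing the slicing spec at the same transformed key is also an assumption, since the raw 'Category' feature may no longer be present in the transformed examples.

```python
import tensorflow_model_analysis as tfma

# Hypothetical name: use whatever key your preprocessing_fn actually emits.
TRANSFORMED_LABEL_KEY = 'Category_xf'

eval_config = tfma.EvalConfig(
    model_specs=[tfma.ModelSpec(label_key=TRANSFORMED_LABEL_KEY)],
    metrics_specs=tfma.metrics.default_multi_class_classification_specs(),
    slicing_specs=[
        tfma.SlicingSpec(),
        # Assumption: slice on the transformed feature as well.
        tfma.SlicingSpec(feature_keys=[TRANSFORMED_LABEL_KEY]),
    ])
```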

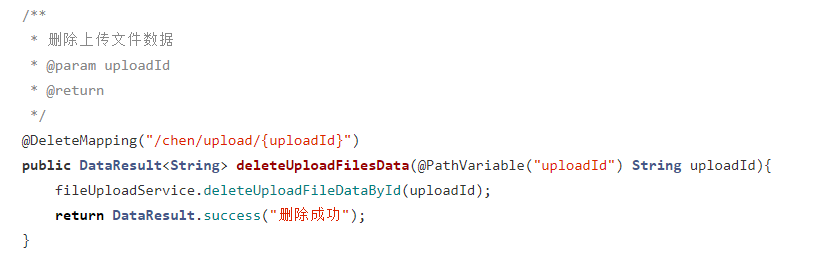

设置时间 控制面板

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...