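Problem Description

I have saved a bunch of custom model instances that inherit from tf.keras.layers.Layer. I want to serve them through TFX Serving, which requires me to have a model_config file.

I would like to know how to create it by the book. Right now I have the following code, which I think is more my own bricolage than what I am supposed to do...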

from tensorflow_serving.config import model_server_config_pb2

model_server_config = model_server_config_pb2.ModelServerConfig()

# Create a config list to hold the served models.
config_list = model_server_config_pb2.ModelConfigList()

# Add the models one by one to the model config.
for i in range(len(trainable_unit_name)):
    model_name = name[i]
    base_path = "/models/{}".format(name[i])
    one_config = config_list.config.add()
    one_config.name = model_name
    one_config.base_path = base_path
    one_config.model_platform = "tensorflow"

model_server_config.model_config_list.MergeFrom(config_list)

with open(C.CONF_FILEPATH, 'w+') as f:
    # Manually wrap the list in "model_config_list { ... }" because
    # this is the format required by TFX Serving.
    f.write("model_config_list {" + str(config_list) + "}")

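Solution

The page https://www.tensorflow.org/tfx/serving/serving_config contains complete information about TensorFlow Serving configuration.

The answer to the question of how to create the model_config_file for Serving can be found in this TF Serving GitHub repository.

For details about the PB file, see this Stack Overflow question.

The code from that GitHub repository is reproduced below in case the link above stops working: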
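As a rough illustration of the file format covered in the serving configuration guide, the sketch below renders a model_config_list in protobuf text format using only the Python standard library. The function name render_model_config, the model names, and the /models base directory are assumptions made for illustration and are not part of TensorFlow Serving. With the generated model_server_config_pb2 module, serializing the top-level ModelServerConfig message (rather than the ModelConfigList) should produce the same model_config_list { ... } wrapper without manual string concatenation.

```python
def render_model_config(model_names, base_dir="/models"):
    """Render a TensorFlow Serving model config file in protobuf text format.

    model_names and base_dir are illustrative inputs (assumptions).
    """
    lines = ["model_config_list {"]
    for model_name in model_names:
        # One "config { ... }" block per served model.
        lines += [
            "  config {",
            f'    name: "{model_name}"',
            f'    base_path: "{base_dir}/{model_name}"',
            '    model_platform: "tensorflow"',
            "  }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


# Hypothetical model names, used only to show the output shape.
config_text = render_model_config(["model_a", "model_b"])
print(config_text)
```

The resulting text can be written to a file and passed to the model server through its --model_config_file flag.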

syntax = "proto3";

package tensorflow.serving;
option cc_enable_arenas = true;

import "google/protobuf/any.proto";
import "tensorflow_serving/config/logging_config.proto";
import "tensorflow_serving/sources/storage_path/file_system_storage_path_source.proto";

// The type of model.
enum ModelType {
  MODEL_TYPE_UNSPECIFIED = 0 [deprecated = true];
  TENSORFLOW = 1 [deprecated = true];
  OTHER = 2 [deprecated = true];
};

// Common configuration for loading a model being served.
message ModelConfig {
  // Name of the model.
  string name = 1;

  repeated string alias = 9;

  string base_path = 2;

  // Type of model.
  // TODO(b/31336131): DEPRECATED. Please use 'model_platform' instead.
  ModelType model_type = 3 [deprecated = true];

  // Type of model (e.g. "tensorflow").
  //
  // (This cannot be changed once a model is in serving.)
  string model_platform = 4;

  reserved 5;

  // Version policy for the model indicating which version(s) of the model to
  // load and make available for serving simultaneously.
  // The default option is to serve only the latest version of the model.
  //
  // (This can be changed once a model is in serving.)
  FileSystemStoragePathSourceConfig.ServableVersionPolicy model_version_policy =
      7;

  // String labels to associate with versions of the model, allowing inference
  // queries to refer to versions by label instead of number. Multiple labels
  // can map to the same version, but not vice-versa.
  map<string, int64> version_labels = 8;

  // Configures logging requests and responses, to the model.
  //
  // (This can be changed once a model is in serving.)
  LoggingConfig logging_config = 6;
}

// Static list of models to be loaded for serving.
message ModelConfigList {
  repeated ModelConfig config = 1;
}

// ModelServer config.
message ModelServerConfig {
  // ModelServer takes either a static file-based model config list or an Any
  // proto representing custom model config that is fetched dynamically at
  // runtime (through network RPC, custom service, etc.).
  oneof config {
    ModelConfigList model_config_list = 1;
    google.protobuf.Any custom_model_config = 2;
  }
}
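To make the model_version_policy and version_labels fields above more concrete, here is a minimal sketch that renders a single config entry which pins specific versions and attaches labels to them. The helper name render_versioned_config, the model name, the version numbers, and the labels are all hypothetical. The layout follows the protobuf text format, where each map entry is written as its own key/value block.

```python
def render_versioned_config(name, base_path, versions, labels):
    """Render one ModelConfig entry in protobuf text format.

    versions: version numbers to serve via the 'specific' version policy.
    labels: mapping of label -> version number (the version_labels field).
    All argument values used below are illustrative assumptions.
    """
    lines = [
        "model_config_list {",
        "  config {",
        f'    name: "{name}"',
        f'    base_path: "{base_path}"',
        '    model_platform: "tensorflow"',
        "    model_version_policy {",
        "      specific {",
    ]
    # Repeated field: one "versions:" line per version to load.
    lines += [f"        versions: {v}" for v in versions]
    lines += ["      }", "    }"]
    # Map field: one "version_labels { key/value }" block per label.
    for label, version in labels.items():
        lines += [
            "    version_labels {",
            f'      key: "{label}"',
            f"      value: {version}",
            "    }",
        ]
    lines += ["  }", "}"]
    return "\n".join(lines) + "\n"


versioned_text = render_versioned_config(
    "my_model", "/models/my_model",
    versions=[1, 2],
    labels={"stable": 1, "canary": 2},
)
print(versioned_text)
```

Several labels may point at the same version number, but each label maps to exactly one version, which matches the comment on version_labels in the proto definition.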

设置时间 控制面板

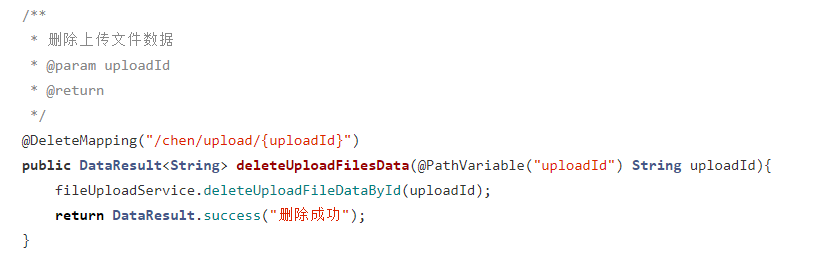

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...