Problem description

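When creating a custom loss for a model that uses an intermediate TensorFlow Probability DistributionLambda layer, things are easy without eager execution: the layer can be stored in a variable and then used from inside the loss. With eager execution enabled it fails, because it reports that the loss is not evaluated against the network variables. If I instead use the input to the DistributionLambda layer (the previous layer) in the loss and create the distribution inside the loss, it fails because that input layer is a symbolic tensor. Any help?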

Here is a complete example (Colab), and the sample code is below:

import numpy as np
from tensorflow.keras import layers
from tensorflow.keras import models
from tensorflow import math
from tensorflow import version
from tensorflow_probability import distributions
from tensorflow_probability import layers as tfp_layers

print(version.GIT_VERSION)
print(version.VERSION)

x = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([[6.0, 7.0], [7.0, 8.0], [8.0, 9.0], [9.0, 10.0], [10.0, 11.0]])


def normal_distribution(param):
    """Normal distribution parameterization from input."""
    return distributions.Normal(param[:, 0], math.sqrt(math.exp(param[:, 1])))


class DistribNN(object):
    """Neural net with a distributional output."""

    def __init__(self):
        """Constructor of DistribNN."""
        self._distribution_layer = None
        self._predist = None
        self._the_model = None

    def _loss(self):
        """Correct final loss for this model."""
        def likelihood_loss(y_true, y_pred):
            """Adding negative log likelihood loss."""
            # return -distributions.Normal(
            #     self._predist[:, 0],
            #     math.sqrt(math.exp(self._predist[:, 1]))).log_prob(
            #         y_true + y_pred - y_pred + 0.001)
            # return -normal_distribution(self._predist).log_prob(
            #     y_true + y_pred - y_pred + 0.001)
            return -self._distribution_layer.log_prob(y_true + 0.001)
        return likelihood_loss

    def create_net(self):
        """Create neural net model."""
        input_layer = layers.Input((1,))
        dense_layer = layers.Dense(10)(input_layer)
        params_dense_layer = layers.Dense(4)(dense_layer)
        self._predist = layers.Reshape((2, 2))(params_dense_layer)
        self._distribution_layer = tfp_layers.DistributionLambda(
            make_distribution_fn=normal_distribution)(self._predist)
        last_layer = layers.Lambda(lambda param: param - 0.001)(self._distribution_layer)
        self._the_model = models.Model(inputs=input_layer, outputs=last_layer)
        self._the_model.compile(loss=self._loss(), optimizer='Adam')

    def fit(self, x, y):
        self._the_model.fit(x=x, y=y, epochs=2)


one_model = DistribNN()
one_model.create_net()
one_model.fit(x, y)

This gives the error:

v2.3.0-0-gb36436b087
2.3.0
Epoch 1/2
---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
<ipython-input-3-6b2d34187a56> in <module>()
     40 one_model = DistribNN()
     41 one_model.create_net()
---> 42 one_model.fit(x,y)

11 frames
/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/func_graph.py in wrapper(*args,**kwargs)
    971           except Exception as e:  # pylint:disable=broad-except
    972             if hasattr(e,"ag_error_metadata"):
--> 973               raise e.ag_error_metadata.to_exception(e)
    974             else:
    975               raise

ValueError: in user code:

    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:806 train_function  *
        return step_function(self,iterator)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:796 step_function  **
        outputs = model.distribute_strategy.run(run_step,args=(data,))
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:1211 run
        return self._extended.call_for_each_replica(fn,args=args,kwargs=kwargs)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:2585 call_for_each_replica
        return self._call_for_each_replica(fn,args,kwargs)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/distribute/distribute_lib.py:2945 _call_for_each_replica
        return fn(*args,**kwargs)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:789 run_step  **
        outputs = model.train_step(data)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:757 train_step
        self.trainable_variables)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/engine/training.py:2737 _minimize
        trainable_variables))
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:562 _aggregate_gradients
        filtered_grads_and_vars = _filter_grads(grads_and_vars)
    /usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/optimizer_v2/optimizer_v2.py:1271 _filter_grads
        ([v.name for _,v in grads_and_vars],))

    ValueError: No gradients provided for any variable: ['dense/kernel:0','dense/bias:0','dense_1/kernel:0','dense_1/bias:0'].
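
For reference, the quantity that likelihood_loss is meant to return can be written out by hand. The sketch below is plain Python rather than TensorFlow code. It assumes the parameterization used in normal_distribution, where the first parameter is the mean and the second is the log-variance, so the scale is sqrt(exp(log_var)). The helper name normal_nll and the parameter values are hypothetical and only serve as an illustration.

```python
import math


def normal_nll(y, mean, log_var):
    """Negative log-likelihood of y under Normal(mean, sqrt(exp(log_var)))."""
    var = math.exp(log_var)
    return 0.5 * (math.log(2.0 * math.pi) + log_var + (y - mean) ** 2 / var)


# One target row from the example data (y[0] in the post).
y_row = [6.0, 7.0]

# Hypothetical network outputs for that row: means equal to the targets
# and log-variance 0 (variance 1). These values are NOT from the post.
means = [6.0, 7.0]
log_vars = [0.0, 0.0]

# Per-output negative log-likelihood; with a perfect mean and unit
# variance each entry reduces to 0.5 * log(2 * pi).
nll = [normal_nll(t, m, lv) for t, m, lv in zip(y_row, means, log_vars)]
print(nll)
```

Note that likelihood_loss as posted takes the distribution from self._distribution_layer and never reads y_pred. That is consistent with the "No gradients provided" message, although the post itself does not confirm it as the cause.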


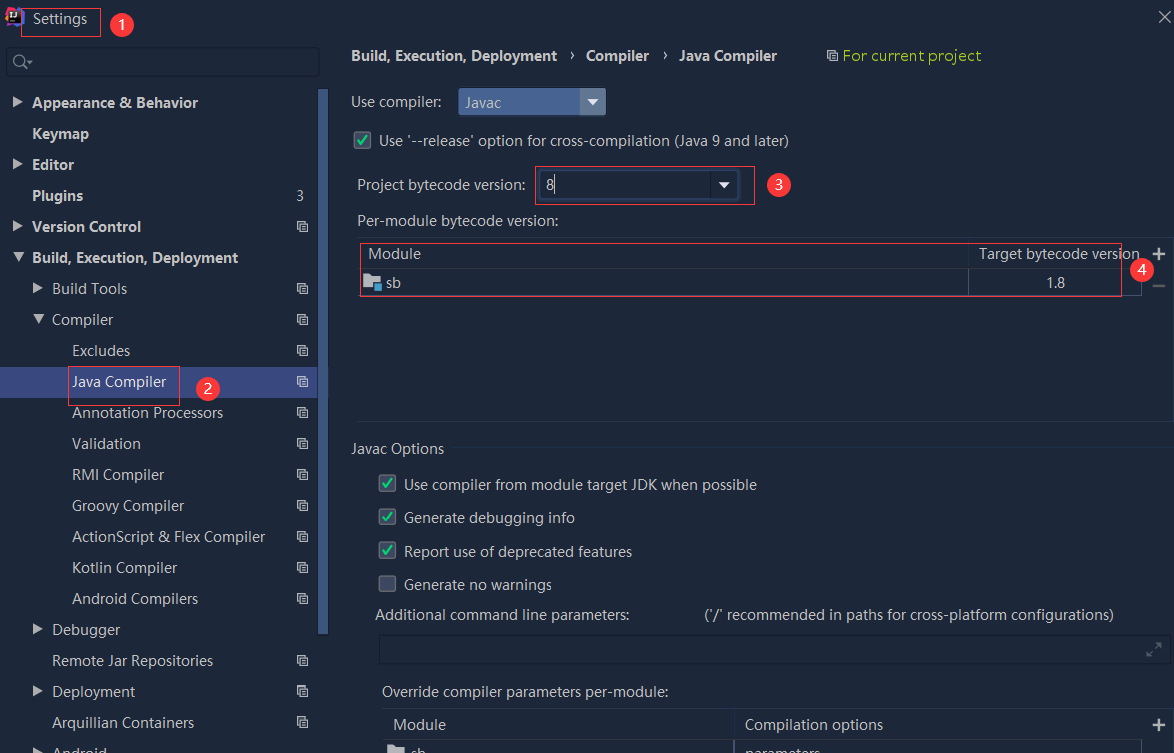

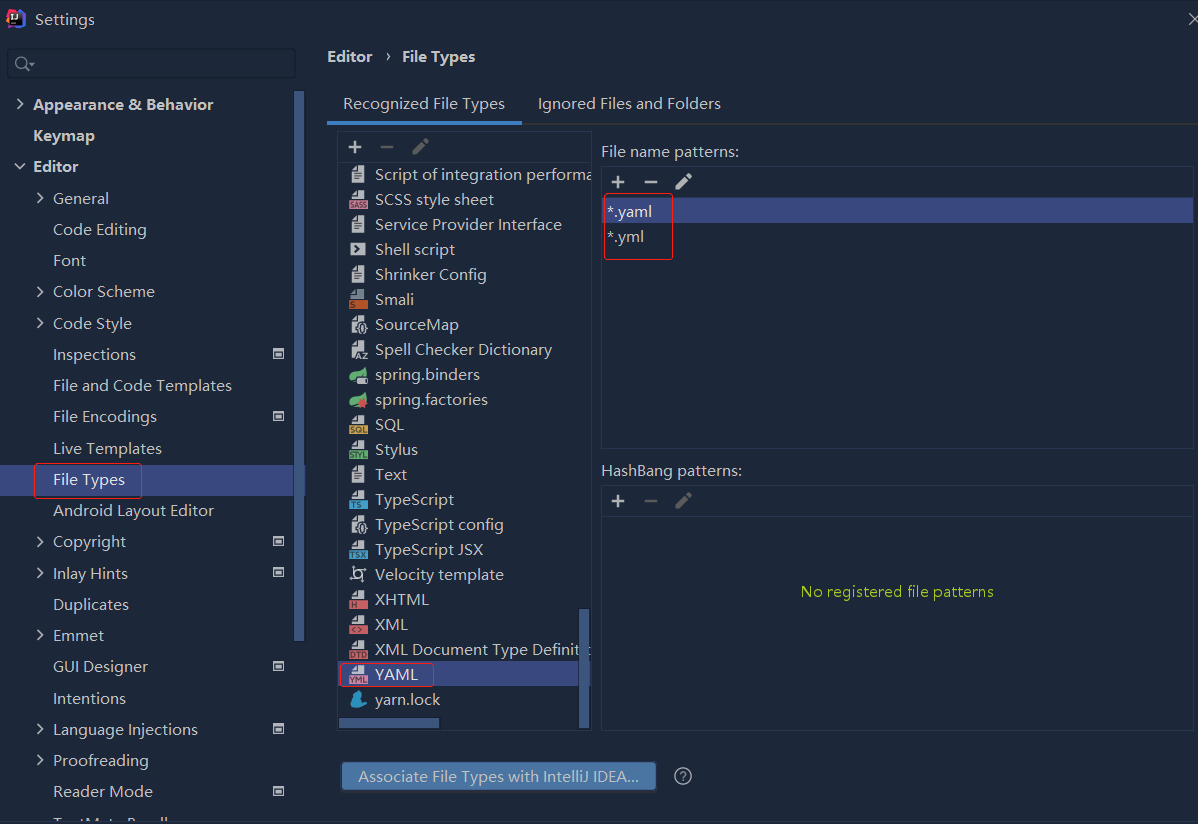

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

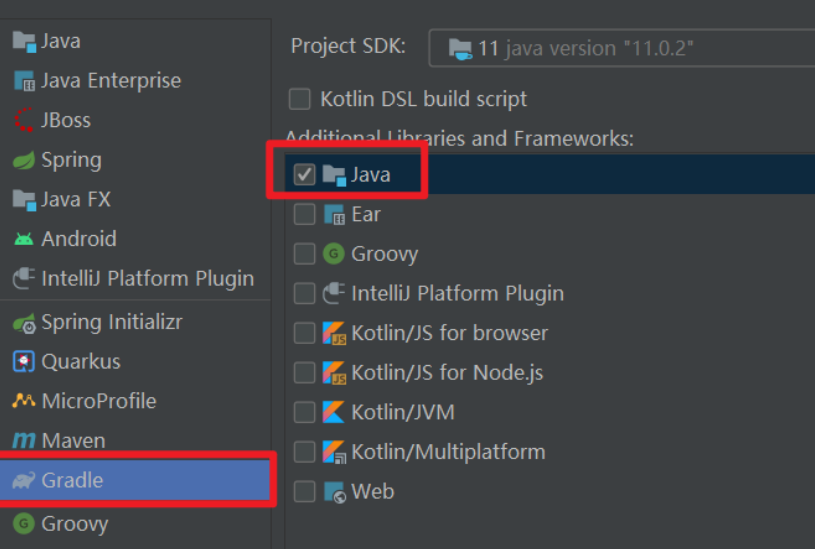

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...