Problem description

Versions: Apache Hudi 0.6.1, Spark 2.4.6

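Below is a standard spark-submit command for the Hudi DeltaStreamer. It fails with a JCommander ParameterException saying that '--master' was passed as a main parameter but no main parameter is defined, even though, as far as I can see, all the required parameters are supplied. Any help with this error is appreciated.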

[hadoop@ip-00-00-00-00 target]$ spark-submit --class org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer \
`ls /mnt/hudi/packaging/hudi-utilities-bundle/target/hudi-utilities-bundle_2.11-0.6.1-SNAPSHOT.jar` \
--master yarn --deploy-mode client \
--storage-type COPY_ON_WRITE \
--source-class org.apache.hudi.utilities.sources.JsonKafkaSource \
--source-ordering-field ts \
--target-base-path /user/hive/warehouse/stock_ticks_cow \
--target-table stock_ticks_cow \
--props /var/demo/config/kafka-source.properties \
--schemaprovider-class org.apache.hudi.utilities.schema.FilebasedSchemaProvider

20/09/08 05:14:46 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Exception in thread "main" org.apache.hudi.com.beust.jcommander.ParameterException: Was passed main parameter '--master' but no main parameter was defined in your arg class
    at org.apache.hudi.com.beust.jcommander.JCommander.initMainParameterValue(JCommander.java:936)
    at org.apache.hudi.com.beust.jcommander.JCommander.parseValues(JCommander.java:752)
    at org.apache.hudi.com.beust.jcommander.JCommander.parse(JCommander.java:340)
    at org.apache.hudi.com.beust.jcommander.JCommander.parse(JCommander.java:319)
    at org.apache.hudi.com.beust.jcommander.JCommander.<init>(JCommander.java:240)
    at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.getConfig(HoodieDeltaStreamer.java:445)
    at org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer.main(HoodieDeltaStreamer.java:454)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:845)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:920)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:929)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

20/09/08 05:14:46 INFO util.ShutdownHookManager: Shutdown hook called
20/09/08 05:14:46 INFO util.ShutdownHookManager: Deleting directory /mnt/tmp/spark-3ad6af85-94be-4117-a479-53423a91fd75
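To see why JCommander complains about '--master', it helps to model how spark-submit divides its command line. The bash function below is a deliberately simplified sketch, not Spark's real parser: it assumes every spark-submit option takes exactly one value, treats the first bare word as the application JAR, and prints what would be forwarded to the main class. The names split_submit_args and hudi-utilities-bundle.jar are hypothetical, used only for this illustration.

```shell
#!/usr/bin/env bash
# Simplified model of spark-submit's argument handling (an illustration, NOT
# Spark's actual parser): leading "--option value" pairs go to spark-submit,
# the first bare word is taken as the application JAR, and everything after
# the JAR is forwarded untouched to the main class (HoodieDeltaStreamer).
# Assumption: each spark-submit option takes exactly one value. This holds
# for --class, --master, --deploy-mode and --jars, not for flags such as --verbose.
split_submit_args() {
  local submit_opts=()
  # Consume "--option value" pairs until the first non-option word.
  while [[ $# -ge 2 && $1 == --* ]]; do
    submit_opts+=("$1" "$2")
    shift 2
  done
  # The first bare word is the application JAR (hypothetical name below).
  local app_jar=${1-}
  if [[ $# -gt 0 ]]; then shift; fi
  echo "spark-submit options: ${submit_opts[*]}"
  echo "application JAR: ${app_jar}"
  # Whatever remains is what the main class receives as its arguments.
  echo "main-class arguments: $*"
}

# Same ordering as the failing command: --master comes after the JAR.
split_submit_args \
  --class org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer \
  hudi-utilities-bundle.jar \
  --master yarn --deploy-mode client --storage-type COPY_ON_WRITE
```

The last line it prints is "main-class arguments: --master yarn --deploy-mode client --storage-type COPY_ON_WRITE", i.e. '--master' ends up as an argument to HoodieDeltaStreamer, which is what the ParameterException in the trace reports.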

Solution

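I suspected that the spark-submit options and the main-class arguments were conflicting, so I reordered them as shown below. spark-submit only parses the options that appear before the application JAR and passes everything after the JAR to the main class. In the original command '--master yarn' and '--deploy-mode client' came after the JAR, so they were handed to HoodieDeltaStreamer, whose argument parser (JCommander) does not define '--master'. In the corrected command every spark-submit option precedes the JAR, and '--table-type' is used instead of '--storage-type'.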

spark-submit \
--jars "/mnt/hudi/packaging/hudi-utilities-bundle/target/hudi-utilities-bundle_2.11-0.6.1-SNAPSHOT.jar" \
--deploy-mode "client" \
--class "org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer" \
/mnt/hudi/packaging/hudi-utilities-bundle/target/hudi-utilities-bundle_2.11-0.6.1-SNAPSHOT.jar \
--props /var/demo/config/kafka-source.properties \
--table-type COPY_ON_WRITE \
--source-class org.apache.hudi.utilities.sources.JsonKafkaSource \
--source-ordering-field ts \
--target-base-path /user/hive/warehouse/stock_ticks_cow \
--target-table stock_ticks_cow \
--schemaprovider-class org.apache.hudi.utilities.schema.FilebasedSchemaProvider
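One way to keep the two kinds of arguments from mixing again is to hold them in separate bash arrays and assemble the command in a fixed order. This is a sketch under stated assumptions: the names HUDI_JAR, SPARK_OPTS, HUDI_ARGS, DRY_RUN and run_deltastreamer are hypothetical, the paths and flags are copied from the commands in this post, and '--master yarn' is taken from the original failing command. By default it only prints the assembled command.

```shell
#!/usr/bin/env bash
# Hypothetical launcher sketch: HUDI_JAR, SPARK_OPTS, HUDI_ARGS, DRY_RUN and
# run_deltastreamer are illustrative names, not part of Hudi or Spark.
HUDI_JAR=/mnt/hudi/packaging/hudi-utilities-bundle/target/hudi-utilities-bundle_2.11-0.6.1-SNAPSHOT.jar

# Options parsed by spark-submit itself; they must come before the JAR.
SPARK_OPTS=(
  --master yarn
  --deploy-mode client
  --class org.apache.hudi.utilities.deltastreamer.HoodieDeltaStreamer
)

# Arguments forwarded to HoodieDeltaStreamer; they must come after the JAR.
HUDI_ARGS=(
  --props /var/demo/config/kafka-source.properties
  --table-type COPY_ON_WRITE
  --source-class org.apache.hudi.utilities.sources.JsonKafkaSource
  --source-ordering-field ts
  --target-base-path /user/hive/warehouse/stock_ticks_cow
  --target-table stock_ticks_cow
  --schemaprovider-class org.apache.hudi.utilities.schema.FilebasedSchemaProvider
)

run_deltastreamer() {
  # Fixed order: spark-submit, its options, the JAR, then the application arguments.
  local cmd=(spark-submit "${SPARK_OPTS[@]}" "$HUDI_JAR" "${HUDI_ARGS[@]}")
  if [[ ${DRY_RUN:-1} == 1 ]]; then
    # Dry run (the default in this sketch): print the command instead of running it.
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

run_deltastreamer
```

Run the script as is to inspect the command, or with DRY_RUN=0 in the environment to actually submit it. Because the ordering lives inside run_deltastreamer, a spark-submit option added to SPARK_OPTS cannot end up after the JAR.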

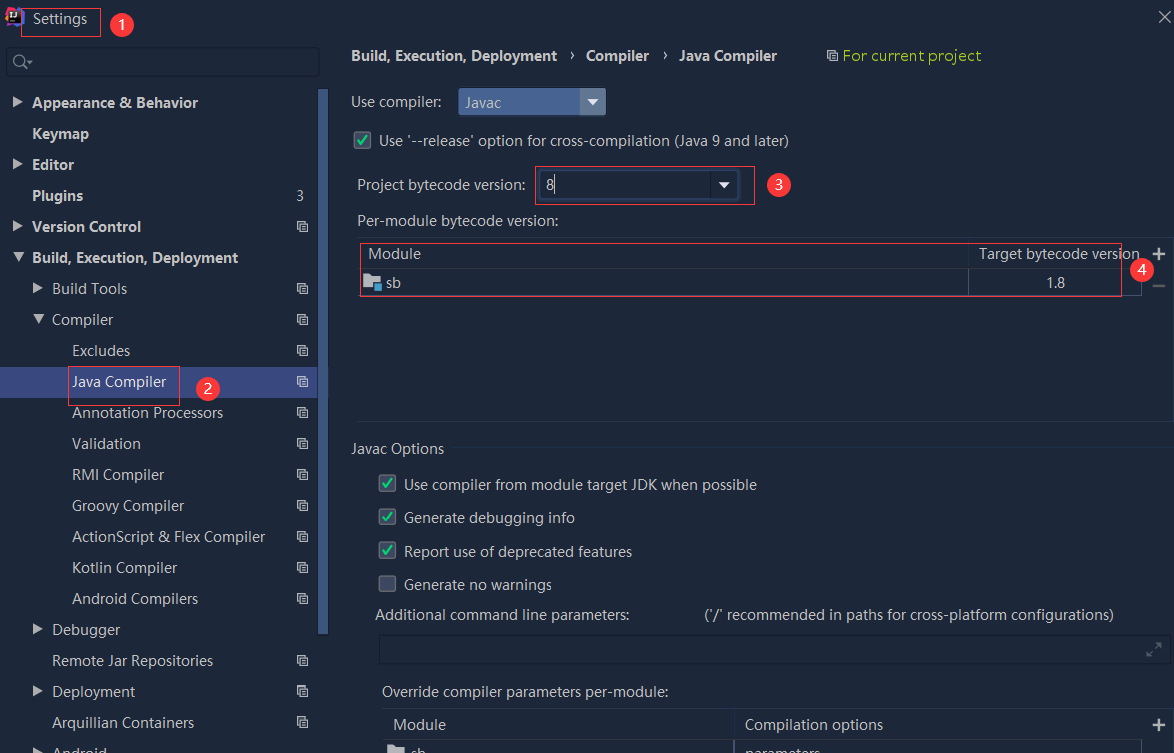

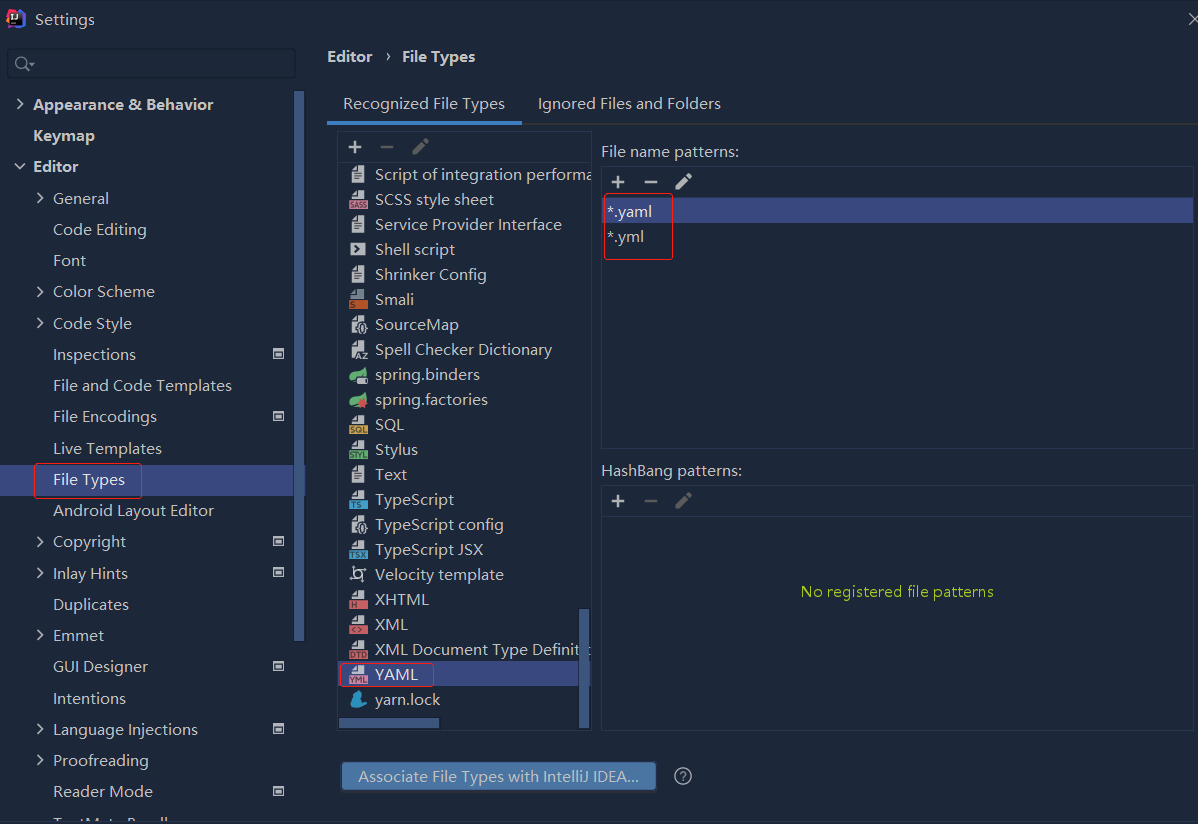


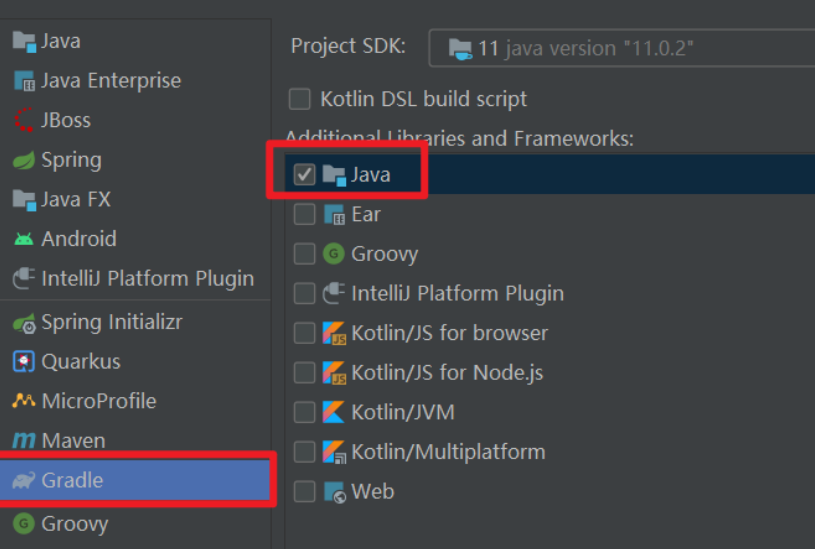
