Problem description

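I'm following this Apple sample code and want to know how to clear the input buffer (in this case `result`) so that dictation starts over as soon as a word has been spoken.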

For example:

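As the user speaks, the words are appended to `result.bestTranscription.formattedString`. So if I say "white", "purple", "yellow", `result.bestTranscription.formattedString` reads "white purple yellow", and words keep being added until the buffer stops (apparently after about 1 minute). I perform an action when a particular word is spoken: for example, if you say "blue", I check whether the buffer contains "blue" (or "Blue"), and if it does, I move on to the next activity and reset the buffer.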
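A possible alternative to resetting, sketched under assumptions: there does not appear to be a call that clears the text of a running `SFSpeechRecognitionTask`, so instead of restarting, the app could remember how many words it has already handled and only search the words that arrived afterwards. `KeywordSpotter` is a hypothetical helper written for this illustration, not part of the Speech framework. It splits `formattedString` on whitespace; `result.bestTranscription.segments` (each `SFTranscriptionSegment` has a `substring`) would be another source of words. This does not remove the roughly one-minute limit mentioned above, so a restart is still needed eventually.

```swift
import Foundation

/// Hypothetical helper (not part of the Speech framework): looks for a keyword
/// in a transcription that keeps growing, but only among the words that arrived
/// after the previous match, so an already-handled "blue" does not fire again.
struct KeywordSpotter {
    let keyword: String
    /// How many leading words of the transcription have already been handled.
    private(set) var consumedWordCount = 0

    init(keyword: String) {
        self.keyword = keyword
    }

    /// Returns true when `keyword` occurs (case-insensitively, as a substring,
    /// like the original range(of:) check) in a word that arrived after the
    /// last match. On a match, every word received so far is marked as consumed.
    mutating func process(_ transcription: String) -> Bool {
        let words = transcription.split(whereSeparator: { $0.isWhitespace })
        // Nothing new since the last match (partial results may also shrink).
        guard words.count > consumedWordCount else { return false }
        let target = keyword
        let matched = words[consumedWordCount...].contains { word in
            word.range(of: target, options: .caseInsensitive) != nil
        }
        if matched {
            consumedWordCount = words.count
        }
        return matched
    }
}
```

In the handler, this would replace the two `range(of:)` checks with something like `if let result = result, self.spotter.process(result.bestTranscription.formattedString) { ... }`, where `spotter` is a hypothetical `var` property on the view controller.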

However, when I do this, I get this error:

2020-09-09 18:25:44.247575-0400 Test App[28460:1337362] [Utility] +[AFAggregator logDictationFailedWithError:] Error Domain=kAFAssistantErrorDomain Code=209 "(null)"

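Stopping audio detection when "blue" is heard works fine, but as soon as I try to reinitialize the speech recognition code, it chokes. Here is my recognition task: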

```swift
// Create a recognition task for the speech recognition session.
// Keep a reference to the task so that it can be canceled.
recognitionTask = speechRecognizer.recognitionTask(with: recognitionRequest) { result, error in
    var isFinal = false

    if let result = result {
        // Update the text view with the results.
        self.speechInput = result.bestTranscription.formattedString
        isFinal = result.isFinal
        print("Text \(result.bestTranscription.formattedString)")
    }

    if (result?.bestTranscription.formattedString.range(of: "blue") != nil) || (result?.bestTranscription.formattedString.range(of: "Blue") != nil) {
        self.ColorView.backgroundColor = .random()
        isFinal = true
    }

    if error != nil || isFinal {
        // Stop recognizing speech if there is a problem.
        self.audioEngine.stop()
        self.recognitionRequest?.endAudio() // Necessary
        inputNode.removeTap(onBus: 0)
        self.recognitionRequest = nil
        self.recognitionTask = nil
    }
}
```

Here is the full code:

```swift
import UIKit
import Speech

private let audioEngine = AVAudioEngine()

extension CGFloat {
    static func random() -> CGFloat {
        return CGFloat(arc4random()) / CGFloat(UInt32.max)
    }
}

extension UIColor {
    static func random() -> UIColor {
        return UIColor(
            red: .random(), green: .random(), blue: .random(), alpha: 1.0
        )
    }
}

class ViewController: UIViewController, SFSpeechRecognizerDelegate {
    @IBOutlet var ColorView: UIView!
    @IBOutlet weak var StartButton: UIButton!

    private let speechRecognizer = SFSpeechRecognizer()!
    private var recognitionRequest: SFSpeechAudioBufferRecognitionRequest?
    private var recognitionTask: SFSpeechRecognitionTask?
    private let audioEngine = AVAudioEngine()
    private var speechInput: String = ""

    override func viewDidLoad() {
        super.viewDidLoad()
        // Create and configure the speech recognition request.
        recognitionRequest = SFSpeechAudioBufferRecognitionRequest()
        guard let recognitionRequest = recognitionRequest else { fatalError("Unable to create a SFSpeechAudioBufferRecognitionRequest object") }
        recognitionRequest.shouldReportPartialResults = true
        // Do any additional setup after loading the view.
    }

    func start() {
        // Cancel the previous task if it's running.
        recognitionTask?.cancel()
        self.recognitionTask = nil

        // Configure the audio session for the app.
        let audioSession = AVAudioSession.sharedInstance()
        do {
            try audioSession.setCategory(.record, mode: .measurement, options: .duckOthers)
        } catch {}
        do {
            try audioSession.setActive(true, options: .notifyOthersOnDeactivation)
        } catch {}
        let inputNode = audioEngine.inputNode

        // Create and configure the speech recognition request.
        recognitionRequest = SFSpeechAudioBufferRecognitionRequest()
        guard let recognitionRequest = recognitionRequest else { fatalError("Unable to create a SFSpeechAudioBufferRecognitionRequest object") }
        recognitionRequest.shouldReportPartialResults = true

        // true keeps speech recognition data on the device; false (as here) also allows server-based recognition.
        if #available(iOS 13, *) {
            recognitionRequest.requiresOnDeviceRecognition = false
        }

        // Create a recognition task for the speech recognition session.
        // Keep a reference to the task so that it can be canceled.
        recognitionTask = speechRecognizer.recognitionTask(with: recognitionRequest) { result, error in
            var isFinal = false

            if let result = result {
                // Update the text view with the results.
                self.speechInput = result.bestTranscription.formattedString
                isFinal = result.isFinal
                print("Text \(result.bestTranscription.formattedString)")
            }

            if (result?.bestTranscription.formattedString.range(of: "blue") != nil) || (result?.bestTranscription.formattedString.range(of: "Blue") != nil) {
                self.ColorView.backgroundColor = .random()
                isFinal = true
            }

            if error != nil || isFinal {
                // Stop recognizing speech if there is a problem.
                self.audioEngine.stop()
                self.recognitionRequest?.endAudio() // Necessary
                inputNode.removeTap(onBus: 0)
                self.recognitionRequest = nil
                self.recognitionTask = nil
                self.start() // This chokes it
            }
        }

        // Configure the microphone input.
        let recordingFormat = inputNode.outputFormat(forBus: 0)
        inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { (buffer: AVAudioPCMBuffer, when: AVAudioTime) in
            self.recognitionRequest?.append(buffer)
        }

        audioEngine.prepare()
        do {
            try audioEngine.start()
        } catch {}

        // Let the user know to start talking.
        //textView.text = "(Go ahead, I'm listening)"
    }

    override func viewDidAppear(_ animated: Bool) {
        super.viewDidAppear(animated)
        // Configure the SFSpeechRecognizer object already
        // stored in a local member variable.
        speechRecognizer.delegate = self

        // Asynchronously make the authorization request.
        SFSpeechRecognizer.requestAuthorization { authStatus in
            // Divert to the app's main thread so that the UI
            // can be updated.
            OperationQueue.main.addOperation {
                switch authStatus {
                case .authorized:
                    self.ColorView.backgroundColor = .green
                case .denied:
                    self.ColorView.backgroundColor = .red
                case .restricted:
                    self.ColorView.backgroundColor = .orange
                case .notDetermined:
                    self.ColorView.backgroundColor = .gray
                default:
                    self.ColorView.backgroundColor = .random()
                }
            }
        }
    }

    @IBAction func start(_ sender: Any) {
        start()
    }

    public func speechRecognizer(_ speechRecognizer: SFSpeechRecognizer, availabilityDidChange available: Bool) {
        if available {
            //recordButton.isEnabled = true
            //recordButton.setTitle("Start Recording", for: [])
        } else {
            //recordButton.isEnabled = false
            //recordButton.setTitle("Recognition Not Available", for: .disabled)
        }
    }
}
```

I'm sure I'm missing something simple. Any suggestions?
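One plausible explanation for the choke, offered as a hypothesis rather than a confirmed diagnosis: calling `endAudio()` does not silence the old task; it typically still delivers a final result (or, after cancellation, an error) to the same handler. In the full code, that late callback arrives after `self.start()` has already set up a new session, and because its final transcription still contains "blue", it stops `audioEngine`, removes the tap from the shared `inputNode`, sets the new `recognitionRequest` to nil, and calls `start()` yet again. A minimal sketch of one guard against this gives each session a token and lets only the current session's first qualifying callback tear things down. `RecognitionSessionGate` is a hypothetical name; nothing like it exists in the Speech framework.

```swift
/// Hypothetical helper (not part of the Speech framework): issues a token per
/// recognition session so that late callbacks from an older task can be
/// recognized and ignored instead of tearing down the new session.
final class RecognitionSessionGate {
    private var currentToken = 0
    private var tornDownToken = 0

    /// Call at the top of start() and capture the returned token in the
    /// recognitionTask(with:resultHandler:) closure.
    func beginSession() -> Int {
        currentToken += 1
        return currentToken
    }

    /// True only for callbacks that belong to the most recently started session.
    func isCurrent(_ token: Int) -> Bool {
        return token == currentToken
    }

    /// Returns true at most once per session, and only for the current one.
    /// Guard audioEngine.stop(), removeTap(onBus:) and the restart with it.
    func claimTeardown(_ token: Int) -> Bool {
        guard isCurrent(token), token != tornDownToken else { return false }
        tornDownToken = token
        return true
    }
}
```

In `start()`, this would mean calling `let token = gate.beginSession()` before creating the task and putting `guard self.gate.claimTeardown(token) else { return }` at the top of the `if error != nil || isFinal` branch, with `gate` a hypothetical stored property. Two related details may also be worth checking: because the handler sets `self.recognitionTask = nil` before calling `start()`, the `recognitionTask?.cancel()` at the top of `start()` never reaches the old task; and the restart could be moved out of the old handler, for example with `DispatchQueue.main.async { self.start() }`. These are untested suggestions, not a verified fix for the `Code=209` log.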

设置时间 控制面板

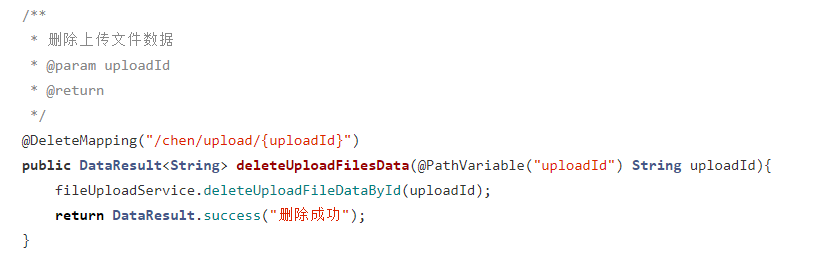

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...