Problem description

I am trying to submit a Spark job to a Spark cluster set up on AWS EKS:

NAME                           READY   STATUS    RESTARTS   AGE
spark-master-5f98d5-5kdfd      1/1     Running   0          22h
spark-worker-878598b54-jmdcv   1/1     Running   2          3d11h
spark-worker-878598b54-sz6z6   1/1     Running   2          3d11h

I am using the following manifest:

apiVersion: batch/v1
kind: Job
metadata:
  name: spark-on-eks
spec:
  template:
    spec:
      containers:
        - name: spark
          image: repo:spark-appv6
          command: [
            "/bin/sh","-c","/opt/spark/bin/spark-submit \
            --master spark://192.XXX.XXX.XXX:7077 \
            --deploy-mode cluster \
            --name spark-app \
            --class com.xx.migration.convert.TestCase \
            --conf spark.kubernetes.container.image=repo:spark-appv6
            --conf spark.kubernetes.namespace=spark-pi \
            --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark-pi \
            --conf spark.executor.instances=2 \
            local:///opt/spark/examples/jars/testing-jar-with-dependencies.jar"
          ]
      serviceAccountName: spark-pi
      restartPolicy: Never
  backoffLimit: 4

and I get the following error log:

20/12/25 10:06:41 INFO Utils: Successfully started service 'driverClient' on port 34511.

20/12/25 10:06:41 INFO TransportClientFactory: Successfully created connection to /192.XXX.XXX.XXX:7077 after 37 ms (0 ms spent in bootstraps)

20/12/25 10:06:41 INFO ClientEndpoint: Driver successfully submitted as driver-20201225100641-0011

20/12/25 10:06:41 INFO ClientEndpoint: ... waiting before polling master for driver state

20/12/25 10:06:46 INFO ClientEndpoint: ... polling master for driver state

20/12/25 10:06:46 INFO ClientEndpoint: State of driver-2020134340641-0011 is ERROR

20/12/25 10:06:46 ERROR ClientEndpoint: Exception from cluster was: java.io.IOException: No FileSystem for scheme: local

java.io.IOException: No FileSystem for scheme: local

at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2660)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2667)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:94)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2703)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2685)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:373)

at org.apache.spark.util.Utils$.getHadoopFileSystem(Utils.scala:1853)

at org.apache.spark.util.Utils$.doFetchFile(Utils.scala:737)

at org.apache.spark.util.Utils$.fetchFile(Utils.scala:535)

at org.apache.spark.deploy.worker.DriverRunner.downloadUserJar(DriverRunner.scala:166)

at org.apache.spark.deploy.worker.DriverRunner.prepareAndRunDriver(DriverRunner.scala:177)

at org.apache.spark.deploy.worker.DriverRunner$$anon$2.run(DriverRunner.scala:96)

20/12/25 10:06:46 INFO ShutdownHookManager: Shutdown hook called

20/12/25 10:06:46 INFO ShutdownHookManager: Deleting directory /tmp/spark-d568b819-fe8e-486f-9b6f-741rerf87cf1

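Also, when I submit the job in client mode without the container parameters, the submission succeeds, but the job keeps running and spins up multiple executors on the worker nodes.

Spark version: 3.0.0

When I use k8s://http://Spark-Master-ip:7077 as the master instead, I get the following error: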

20/12/28 06:59:12 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

20/12/28 06:59:12 INFO SparkKubernetesClientFactory: Auto-configuring K8S client using current context from users K8S config file

20/12/28 06:59:12 INFO KerberosConfDriverFeatureStep: You have not specified a krb5.conf file locally or via a ConfigMap. Make sure that you have the krb5.conf locally on the driver image.

20/12/28 06:59:13 WARN WatchConnectionManager: Exec Failure

java.net.SocketException: Connection reset

at java.net.SocketInputStream.read(SocketInputStream.java:209)

at java.net.SocketInputStream.read(SocketInputStream.java:141)

at okio.Okio$2.read(Okio.java:140)

at okio.AsyncTimeout$2.read(AsyncTimeout.java:237)

at okio.RealBufferedSource.indexOf(RealBufferedSource.java:354)

at okio.RealBufferedSource.readUtf8LineStrict(RealBufferedSource.java:226)

at okhttp3.internal.http1.Http1Codec.readHeaderLine(Http1Codec.java:215)

at okhttp3.internal.http1.Http1Codec.readResponseHeaders(Http1Codec.java:189)

at okhttp3.internal.http.CallServerInterceptor.intercept(CallServerInterceptor.java:88)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.connection.ConnectInterceptor.intercept(ConnectInterceptor.java:45)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.internal.cache.CacheInterceptor.intercept(CacheInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.internal.http.BridgeInterceptor.intercept(BridgeInterceptor.java:93)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RetryAndFollowUpInterceptor.intercept(RetryAndFollowUpInterceptor.java:127)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.BackwardsCompatibilityInterceptor.intercept(BackwardsCompatibilityInterceptor.java:134)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.ImpersonatorInterceptor.intercept(ImpersonatorInterceptor.java:68)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at io.fabric8.kubernetes.client.utils.HttpClientUtils.lambda$createHttpClient$3(HttpClientUtils.java:109)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:147)

at okhttp3.internal.http.RealInterceptorChain.proceed(RealInterceptorChain.java:121)

at okhttp3.RealCall.getResponseWithInterceptorChain(RealCall.java:257)

at okhttp3.RealCall$AsyncCall.execute(RealCall.java:201)

at okhttp3.internal.NamedRunnable.run(NamedRunnable.java:32)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Please help me resolve this. Thanks.

Solution

Assuming you are not using the Spark on K8s operator, the master should be:

k8s://https://kubernetes.default.svc.cluster.local

If that does not work, you can find your master address by running:

$ kubectl cluster-info

Kubernetes master is running at https://kubernetes.docker.internal:6443
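As a rough sketch, the address reported by kubectl can be turned into a Spark master URL with a small helper. The function name k8s_master_url is hypothetical (it is not part of Spark or kubectl), and appending port 443 when no port is given is an assumption that only fits an HTTPS API server on its default port.

```shell
#!/bin/sh
# Hypothetical helper: prefix an API server URL with k8s:// and make sure
# a port is present, since Spark on Kubernetes expects one in the master URL.
k8s_master_url() {
  api="${1%/}"                   # drop a trailing slash, if any
  case "${api#*://}" in
    *:*) ;;                      # host:port already given, keep as is
    *)   api="${api}:443" ;;     # assumption: HTTPS API server on the default port
  esac
  printf 'k8s://%s\n' "$api"
}
```

For example, k8s_master_url https://kubernetes.docker.internal:6443 prints k8s://https://kubernetes.docker.internal:6443. The API server URL itself can also be read with kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}' instead of parsing the cluster-info output.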

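Edit:

In spark-on-k8s cluster mode you should provide k8s://<api_server_host>:<k8s-apiserver-port> (note that adding the port is a must!).

In spark-on-k8s, the role of the "master" (in Spark terms) is played by Kubernetes itself: it is responsible for allocating resources to run the driver and the workers.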
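A minimal sketch of what the submit command could look like once the master points at the Kubernetes API server. The spark_submit_k8s wrapper and the SPARK_SUBMIT override are hypothetical and exist only for illustration. The master URL you pass in must be your own API server address. All other flags are copied from the manifest in the question.

```shell
#!/bin/sh
# Hypothetical wrapper around spark-submit for Spark on Kubernetes.
# $1 is the master URL, e.g. k8s://https://<api_server_host>:<k8s-apiserver-port>.
# Setting SPARK_SUBMIT=echo prints the arguments instead of submitting (dry run).
spark_submit_k8s() {
  "${SPARK_SUBMIT:-/opt/spark/bin/spark-submit}" \
    --master "$1" \
    --deploy-mode cluster \
    --name spark-app \
    --class com.xx.migration.convert.TestCase \
    --conf spark.kubernetes.container.image=repo:spark-appv6 \
    --conf spark.kubernetes.namespace=spark-pi \
    --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark-pi \
    --conf spark.executor.instances=2 \
    local:///opt/spark/examples/jars/testing-jar-with-dependencies.jar
}
```

Running SPARK_SUBMIT=echo spark_submit_k8s k8s://https://kubernetes.default.svc.cluster.local:443 shows the full command line without contacting the cluster, which makes it easy to check the master URL before wiring the command into the Job manifest.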

The real cause of the exception:

java.io.IOException: No FileSystem for scheme: local

is that a Worker of the Spark Standalone cluster tries to downloadUserJar but does not recognize the local URI scheme.

Spark Standalone does not understand this scheme. Unless I am mistaken, the only cluster environments that support the local URI scheme are Spark on YARN and Spark on Kubernetes.

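That is why changing the master URL makes this exception go away. The OP wants to deploy the Spark application to Kubernetes (and follows the rules of Spark on Kubernetes), yet the master URL is spark://192.XXX.XXX.XXX:7077, which is for Spark Standalone.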
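The mismatch described above can be caught before submitting with a simple pre-flight check. This is only a sketch: check_submit is a hypothetical helper, and the rule it encodes (local:// jars are accepted only for k8s:// and yarn masters) mirrors the hedged claim in this answer rather than anything confirmed against the Spark source.

```shell
#!/bin/sh
# Hypothetical pre-flight check: reject a local:// application jar when the
# master URL is neither Kubernetes nor YARN (e.g. a spark:// Standalone master).
check_submit() {
  master="$1"
  jar="$2"
  case "$jar" in
    local://*)
      case "$master" in
        k8s://*|yarn) return 0 ;;   # assumed to understand local:// URIs
        *)
          echo "master '$master' may not support local:// jars" >&2
          return 1
          ;;
      esac
      ;;
  esac
  return 0                          # other jar schemes are not checked here
}
```

With the values from the question, check_submit spark://192.XXX.XXX.XXX:7077 local:///opt/spark/examples/jars/testing-jar-with-dependencies.jar returns a non-zero status, while the same jar with a k8s:// master passes.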

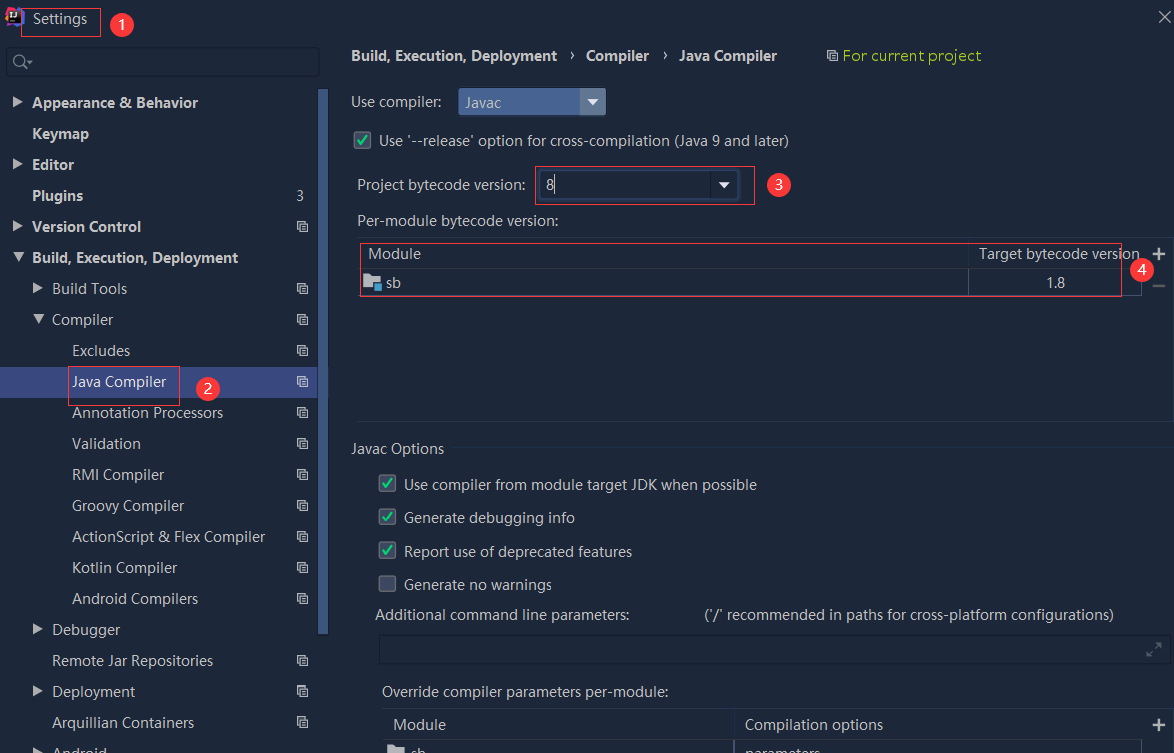

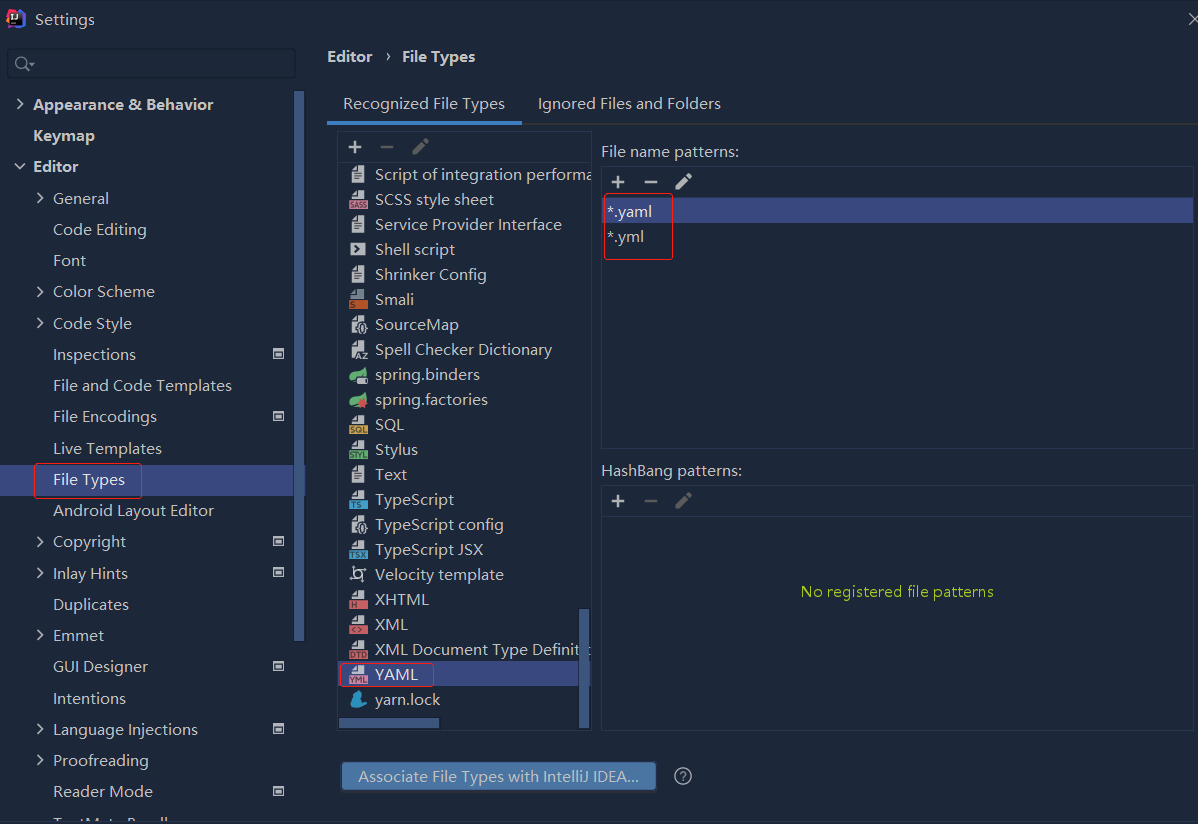

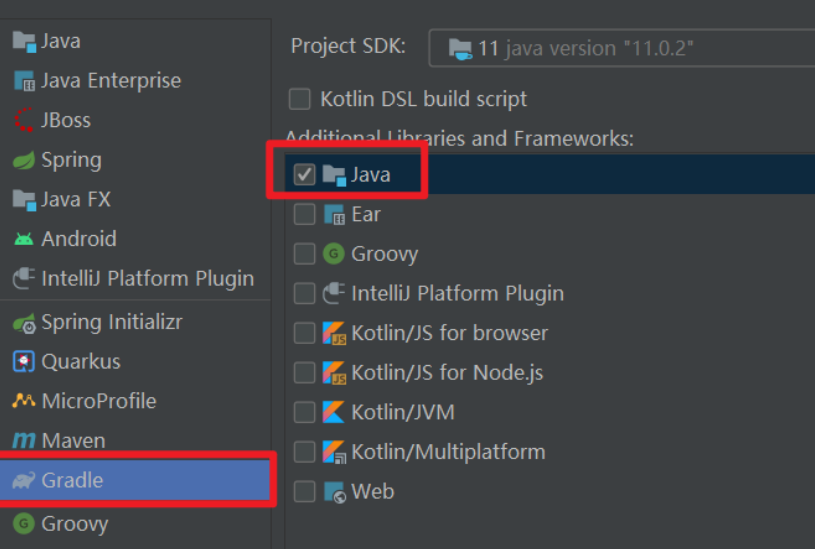
