问题描述

我正在尝试使用 spark-submit 运行 spark 应用程序。 我创建了以下 udf:

mainPage然后我是这样使用的:

from pyspark.sql.functions import udf

from pyspark.sql.types import StringType

from tldextract import tldextract

@udf(StringType())

def get_domain(url):

ext = tldextract.extract(url)

return ext.domain

并得到以下错误:

df = df.withColumn('domain',col=get_domain(df['url']))

我压缩了依赖项并使用了一个egg文件,但它仍然不起作用。

我的 spark cluser 由同一台服务器上的一个 master 和一个 worker 组成。

我怀疑它是 udf,因为当我在常规函数中而不是在 udf 中使用 Driver stacktrace:

21/01/03 16:53:41 INFO DAGScheduler: Job 1 failed: showString at NativeMethodAccessorImpl.java:0,took 2.842401 s

Traceback (most recent call last):

File "/home/michal/dv-etl/main.py",line 54,in <module>

main()

File "/home/michal/dv-etl/main.py",line 48,in main

df.show(truncate=False)

File "/opt/spark/python/lib/pyspark.zip/pyspark/sql/dataframe.py",line 442,in show

File "/opt/spark/python/lib/py4j-0.10.9-src.zip/py4j/java_gateway.py",line 1304,in __call__

File "/opt/spark/python/lib/pyspark.zip/pyspark/sql/utils.py",line 134,in deco

File "<string>",line 3,in raise_from

pyspark.sql.utils.PythonException:

An exception was thrown from the Python worker. Please see the stack trace below.

Traceback (most recent call last):

File "/opt/spark/python/lib/pyspark.zip/pyspark/worker.py",line 589,in main

func,profiler,deserializer,serializer = read_udfs(pickleSer,infile,eval_type)

File "/opt/spark/python/lib/pyspark.zip/pyspark/worker.py",line 447,in read_udfs

udfs.append(read_single_udf(pickleSer,eval_type,runner_conf,udf_index=i))

File "/opt/spark/python/lib/pyspark.zip/pyspark/worker.py",line 254,in read_single_udf

f,return_type = read_command(pickleSer,infile)

File "/opt/spark/python/lib/pyspark.zip/pyspark/worker.py",line 74,in read_command

command = serializer._read_with_length(file)

File "/opt/spark/python/lib/pyspark.zip/pyspark/serializers.py",line 172,in _read_with_length

return self.loads(obj)

File "/opt/spark/python/lib/pyspark.zip/pyspark/serializers.py",line 458,in loads

return pickle.loads(obj,encoding=encoding)

File "/opt/spark/python/lib/pyspark.zip/pyspark/cloudpickle.py",line 1110,in subimport

__import__(name)

ModuleNotFoundError: No module named 'tldextract'

时,它会起作用。

这是我的 tldextract 命令:

spark-submit谢谢!

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

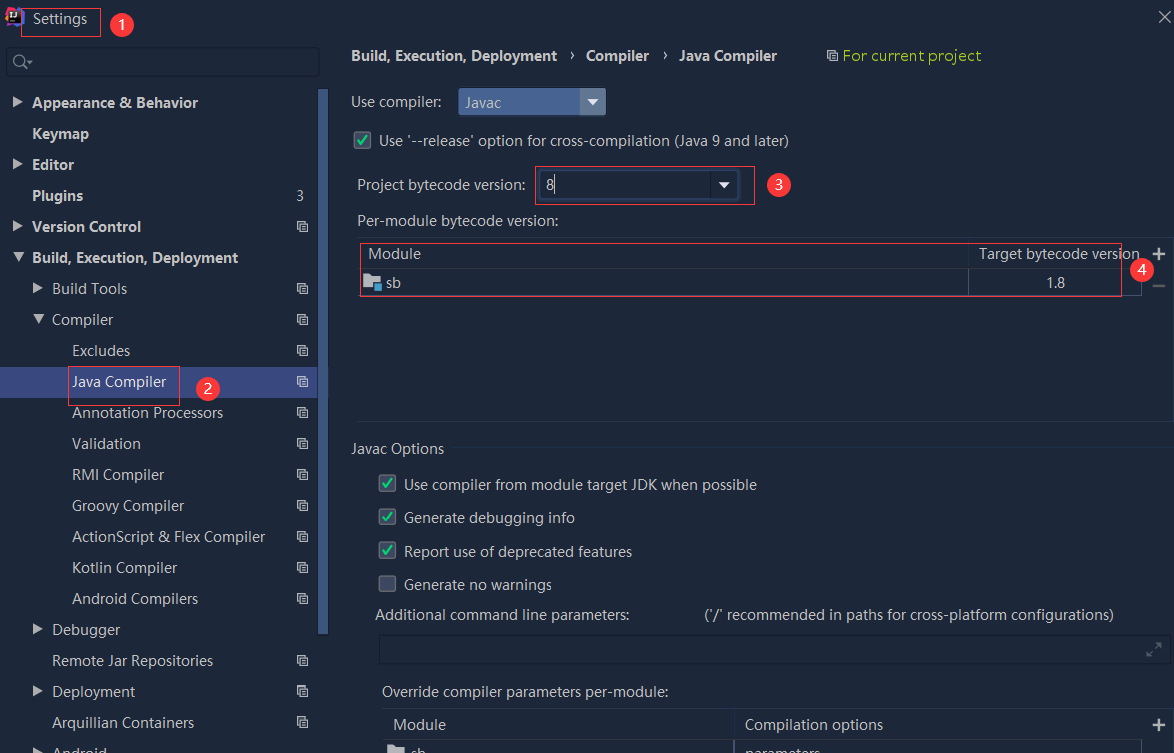

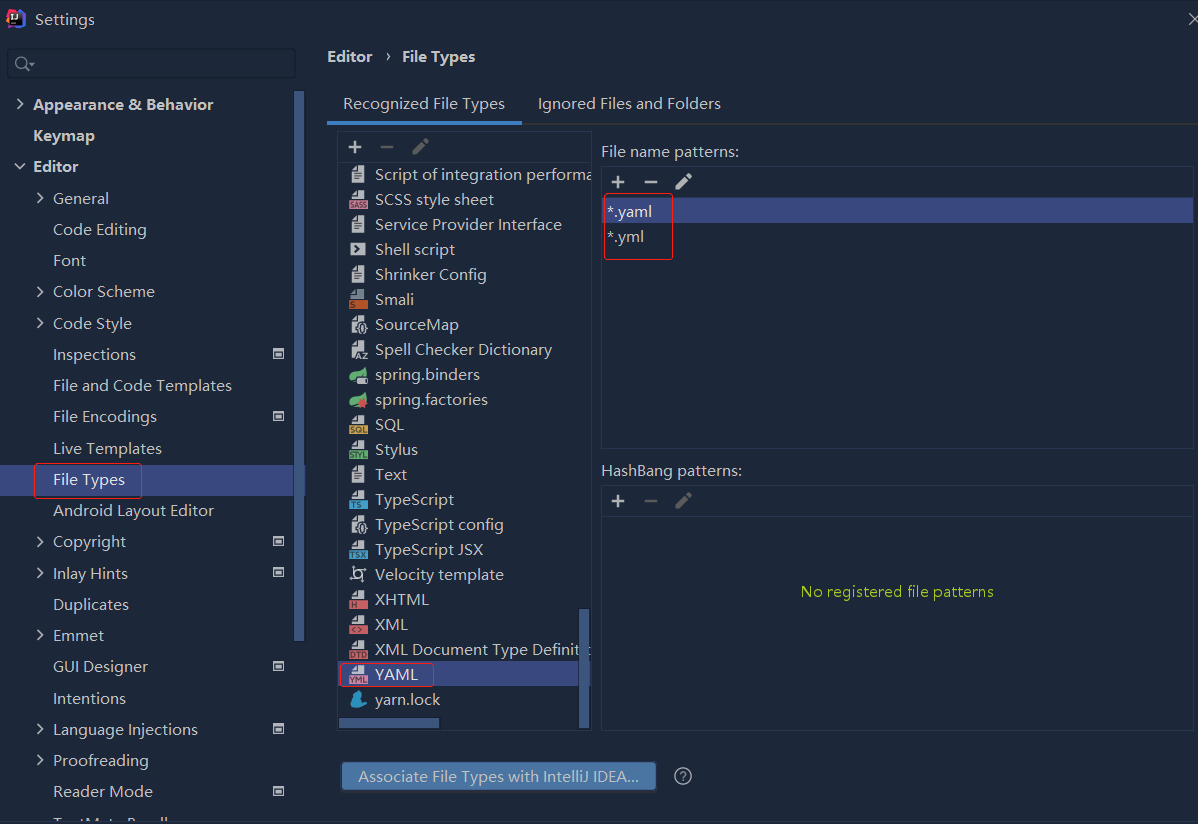

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

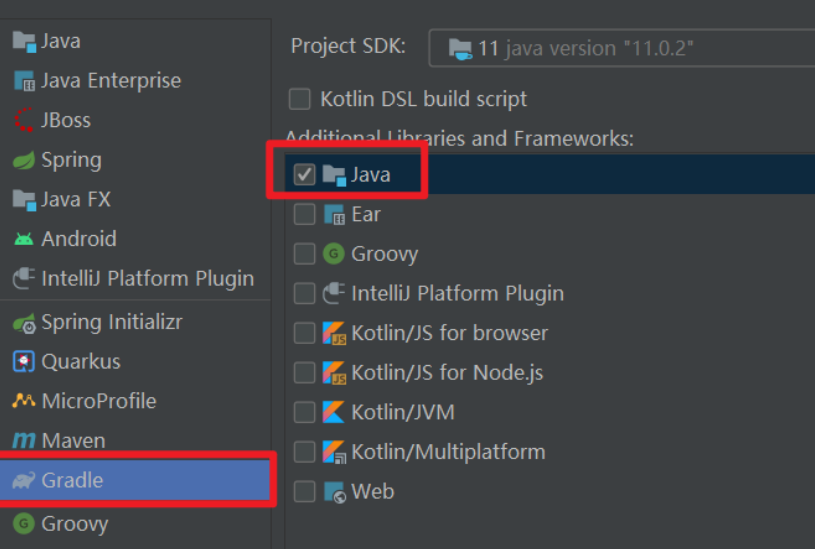

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...