Problem description

Currently, users of the condor cluster have to run:

chmod a+x /home/user/automl-meta-learning/results_plots/main.py

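before they can run their scripts with condor_submit. How can I set up condor so that users no longer need to do this?

Here is an example submit script from one of the users: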

####################
#
# Experiments script
# Simple HTCondor submit description file
#
# chmod a+x test_condor.py
# chmod a+x experiments_meta_model_optimization.py
# chmod a+x meta_learning_experiments_submission.py
# chmod a+x download_miniImagenet.py
# chmod a+x ~/meta-learning-lstm-pytorch/main.py
# chmod a+x /home/user/automl-meta-learning/automl-proj/meta_learning/datasets/rand_fc_nn_vec_mu_ls_gen.py
# chmod a+x /home/user/automl-meta-learning/automl-proj/experiments/meta_learning/supervised_experiments_submission.py
# chmod a+x /home/user/automl-meta-learning/results_plots/main.py
# condor_submit -i
# condor_submit job.sub
#
####################

# Executable = /home/user/automl-meta-learning/automl-proj/experiments/meta_learning/supervised_experiments_submission.py
Executable = /home/user/automl-meta-learning/automl-proj/experiments/meta_learning/meta_learning_experiments_submission.py
# Executable = /home/user/meta-learning-lstm-pytorch/main.py
# Executable = /home/user/automl-meta-learning/automl-proj/meta_learning/datasets/rand_fc_nn_vec_mu_ls_gen.py

## Output Files
Log = experiment_output_job.$(CLUSTER).log.out
Output = experiment_output_job.$(CLUSTER).out.out
Error = experiment_output_job.$(CLUSTER).err.out

# Use this to make sure 1 GPU is available. The keywords are case-insensitive.
Request_gpus = 1
requirements = (CUDADeviceName != "Tesla K40m")
# requirements = (CUDADeviceName == "Quadro RTX 6000")
# requirements = ((CUDADeviceName == "Tesla K40m")) && (TARGET.Arch == "X86_64") && (TARGET.OpSys == "LINUX") && (TARGET.Disk >= RequestDisk) && (TARGET.Memory >= RequestMemory) && (TARGET.Cpus >= RequestCpus) && (TARGET.gpus >= Requestgpus) && ((TARGET.FileSystemDomain == MY.FileSystemDomain) || (TARGET.HasFileTransfer))
# requirements = (CUDADeviceName == "Tesla K40m")
# requirements = (CUDADeviceName == "GeForce GTX TITAN X")

# Note: to use multiple CPUs instead of the default (one CPU), use request_cpus as well
Request_cpus = 4
# Request_cpus = 16

# E-mail option
Notify_user = me@gmail.com
Notification = always
Environment = MY_CONDOR_JOB_ID=$(CLUSTER)

# "Queue" submits the job with all the settings above this line (it needs to be at the end of the script).
Queue

Solution

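A .py file needs its execute bit set if you want to run it directly. This is a Linux/Unix convention and has little to do with HTCondor. That is, on the command line, if you want to run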

$ ./foo.py

then foo.py needs the execute bit set. To avoid that, you can pass foo.py to python as an argument instead and run

$ python foo.py

and then no execute bit is needed. To mimic this in HTCondor, set /usr/bin/python or /usr/bin/python3 as your executable and foo.py as your argument, for example

executable = /usr/bin/python

arguments = foo.py
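Applied to the submit file from the question, a sketch of the changed file could look like the one below. It assumes python3 is installed at /usr/bin/python3 on every execute node and that /home/user is on the shared file system. Only the Executable line changes: the interpreter becomes the executable and the script path moves to Arguments. An absolute script path avoids depending on the job's working directory.

```
# Run the interpreter; the script is its first argument, so the .py file
# itself no longer needs the execute bit (assumes a shared file system
# and python3 at /usr/bin/python3 on every execute node).
Executable = /usr/bin/python3
Arguments = /home/user/automl-meta-learning/automl-proj/experiments/meta_learning/meta_learning_experiments_submission.py
# Switching scripts now means editing Arguments instead of Executable:
# Arguments = /home/user/meta-learning-lstm-pytorch/main.py

## Output Files
Log = experiment_output_job.$(CLUSTER).log.out
Output = experiment_output_job.$(CLUSTER).out.out
Error = experiment_output_job.$(CLUSTER).err.out

Request_gpus = 1
requirements = (CUDADeviceName != "Tesla K40m")
Request_cpus = 4

# The e-mail and Environment lines from the original file can stay as they are.
Queue
```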

All of this assumes a shared file system. If you use HTCondor's file transfer mechanism to send data to the worker nodes, a few more lines are needed.
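A sketch of what those extra lines might be, assuming the execute nodes have their own /usr/bin/python3 and foo.py sits in the submit directory (the exact settings depend on how the pool is configured). transfer_executable = false stops HTCondor from copying the submit machine's interpreter to the worker. transfer_input_files ships the script into the job's scratch directory, which is also the job's working directory, so a bare file name works as the argument. The names foo.log, foo.out and foo.err are placeholders.

```
# Sketch for a pool without a shared file system.
executable = /usr/bin/python3
# Use the interpreter already installed on the execute node
# instead of transferring /usr/bin/python3 from the submit machine.
transfer_executable = false
# Copy the script into the job's scratch directory on the worker.
transfer_input_files = foo.py
arguments = foo.py
should_transfer_files = YES
when_to_transfer_output = ON_EXIT

log = foo.log
output = foo.out
error = foo.err

queue
```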

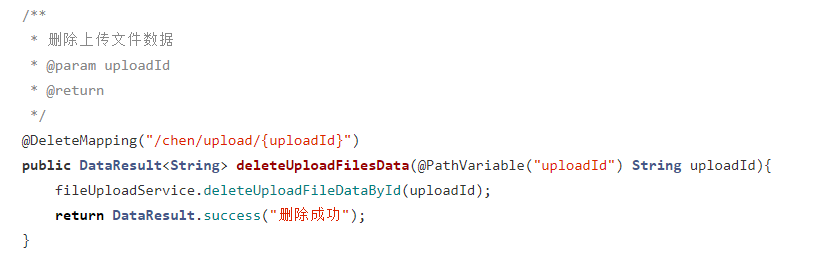

设置时间 控制面板

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...