Problem description

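I'm trying to implement a two-layer MLP from scratch with numpy. The goal is to classify points as 1 or 0. The architecture has 2 inputs (x1, x2), 4 hidden nodes and 2 output nodes, and I use the sigmoid activation function in both layers. When I run the code, both output nodes keep converging to the same value no matter what the input is. I'm using batch learning, and my data is [8,2]: 8 (x1, x2) coordinates with their corresponding y labels. Here is the data: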

data = np.array([[1,1],[0,1,[-1,-1,[0.5,0.5,0],[-0.5,-0.5,0]])

where each row has the format [x1, x2, y]. These points give 4 boundary regions that together define a plus-shaped region.
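The question doesn't show `forward_propagation`, so here is a minimal sketch of what a bias-free 2-4-2 sigmoid forward pass could look like. It assumes one sample per row (which is what `X.T.dot(self.g1_delta)` in the backpropagation code implies) and that `sigmoid_derivative` takes an already-activated value, since the code calls it on `output`. `W1`, `W2` and the random inputs are hypothetical stand-ins for `self.weights_1`, `self.weights_2` and the real data, whose rows are not fully recoverable here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_derivative(s):
    # assumes s = sigmoid(z) has already been computed; returns d sigmoid / dz
    return s * (1.0 - s)

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(8, 2))  # hypothetical stand-in for the 8 (x1, x2) points
W1 = rng.normal(size=(2, 4))             # hypothetical input -> hidden weights
W2 = rng.normal(size=(4, 2))             # hypothetical hidden -> output weights

g1 = sigmoid(X @ W1)     # (8, 2) @ (2, 4) -> (8, 4) hidden activations
out = sigmoid(g1 @ W2)   # (8, 4) @ (4, 2) -> (8, 2), one row per sample
print(g1.shape, out.shape)  # (8, 4) (8, 2)
```

Keeping one sample per row means every weight gradient has the form `activations.T.dot(delta)`, which is the pattern the MSE version of `backpropagation` already follows.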

Here is my code:

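def backpropagation(self, X, expected, output):
    # error at the output layer (expected is (8, 1), output is (8, 2), so this broadcasts)
    self.output_error = expected - output
    # error times the sigmoid derivative, evaluated on the already-activated output
    self.output_delta = self.output_error * self.sigmoid_derivative(output)
    # error propagated back to the hidden layer through weights_2
    self.g1_error = self.output_delta.dot(self.weights_2.T)
    self.g1_delta = self.g1_error * self.sigmoid_derivative(self.g1)
    # weight updates
    self.weights_1 += X.T.dot(self.g1_delta) * self.learning_rate
    # "=" replaces weights_2 with this epoch's step instead of adding the step to it
    self.weights_2 = self.g1.T.dot(self.output_delta) * self.learning_rate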
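One way to check the MSE version is a finite-difference gradient check. The sketch below uses hypothetical random inputs, targets and weights, and assumes the loss is half the sum of squared errors (the printed loss is the mean squared error, which only differs by a constant factor that the learning rate absorbs). For that loss, the delta-rule terms written as in `backpropagation` are exactly the *negative* gradient, so both weight matrices have to be updated with `+=`. With `=`, `weights_2` is replaced by a single small step each epoch and never accumulates what it has learned.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, W1, W2):
    g1 = sigmoid(X @ W1)
    return g1, sigmoid(g1 @ W2)

def loss(X, y, W1, W2):
    # half sum-of-squares error: its gradient matches the delta rule exactly
    _, out = forward(X, W1, W2)
    return 0.5 * np.sum((y - out) ** 2)

rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(8, 2))                 # hypothetical inputs
y = rng.integers(0, 2, size=(8, 2)).astype(float)   # hypothetical targets
W1 = rng.normal(size=(2, 4))
W2 = rng.normal(size=(4, 2))

# delta-rule terms, written the same way as in the question's backpropagation()
g1, out = forward(X, W1, W2)
output_delta = (y - out) * out * (1 - out)
g1_delta = output_delta.dot(W2.T) * g1 * (1 - g1)
step_W1 = X.T.dot(g1_delta)        # should equal -dLoss/dW1
step_W2 = g1.T.dot(output_delta)   # should equal -dLoss/dW2

def numerical_grad(f, W, eps=1e-6):
    # central finite differences, perturbing one weight at a time in place
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        old = W[idx]
        W[idx] = old + eps
        plus = f()
        W[idx] = old - eps
        minus = f()
        W[idx] = old
        grad[idx] = (plus - minus) / (2 * eps)
    return grad

num_W1 = numerical_grad(lambda: loss(X, y, W1, W2), W1)
num_W2 = numerical_grad(lambda: loss(X, y, W1, W2), W2)
print(np.allclose(step_W1, -num_W1, atol=1e-6), np.allclose(step_W2, -num_W2, atol=1e-6))
```

Because each step already points downhill, adding it (`+=`) is gradient descent; the same check can be pointed at the real `MLP` class once its weights are exposed.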

X_data = data[:, 0:2]  # columns 0 and 1: the slice 0:2 stops before index 2
y_data = data[:, 2][np.newaxis].T  # turn the 1D label array into an (8, 1) column
network = MLP()
network_output = np.zeros((8, 2), dtype=float)
epochs = 10000
for i in range(epochs):
    #print("Input: \n" + str(X_data))
    #print("Expected output: \n" + str(y_data))
    network_output = network.forward_propagation(X_data)
    print("Network output: \n" + str(network_output))
    print("Loss: \n" + str(np.mean(np.square(y_data - network_output))))
    network.train_MLP(X_data, y_data)
    print("---------------------------------------")
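Note the shapes in this loop: `y_data` is an (8, 1) column, but the network has 2 output nodes, so `y_data - output` broadcasts the single label across both output columns. Both outputs are therefore trained toward the same target, which is consistent with the two identical columns in the output below. The sketch uses hypothetical labels to show the broadcast and one possible fix (one-hot encoding the label so each output node gets its own target); the other option is a single output node, i.e. `weights_2` of shape (4, 1).

```python
import numpy as np

labels = np.array([1, 1, 0, 0, 1, 0, 1, 0], dtype=float)  # hypothetical labels
y_data = labels[np.newaxis].T        # same conversion as the question: shape (8, 1)
fake_output = np.full((8, 2), 0.5)   # any (8, 2) network output

error = y_data - fake_output         # broadcasts (8, 1) against (8, 2)
print(y_data.shape, error.shape)     # (8, 1) (8, 2)
print(np.array_equal(error[:, 0], error[:, 1]))  # True: both columns see the same target

# one-hot targets: class 0 -> [1, 0], class 1 -> [0, 1]
y_onehot = np.eye(2)[labels.astype(int)]
print(y_onehot.shape)                # (8, 2)
```

With one-hot targets the predicted class is `output.argmax(axis=1)`; with a single output node it is `output > 0.5`.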

After 10000 epochs with a learning rate of 0.1, I get this output:

Network output:
[[0.27755633 0.27755633]
 [0.27682435 0.27682435]
 [0.27696575 0.27696575]
 [0.27768152 0.27768152]
 [0.27721829 0.27721829]
 [0.2769205  0.2769205 ]
 [0.27764048 0.27764048]
 [0.2773513  0.2773513 ]]
Loss:
0.29962166309127225

I also tried using a cross-entropy loss, but then the matrix dot product fails because of mismatched dimensions. Here is that code:

def backpropagation(self, X, expected, output):
    """
    # output error calculation
    self.output_error = expected - output
    # derivative of activation at output value times error at output
    self.output_delta = self.output_error * self.sigmoid_derivative(output)
    # output error contribution by hidden layer weights
    self.g1_error = self.output_delta.dot(self.weights_2.T)
    # derivative of activation at hidden layer output times error contribution by hidden layer
    self.g1_delta = self.g1_error * self.sigmoid_derivative(self.g1)
    # updating weights
    self.weights_1 += X.T.dot(self.g1_delta) * self.learning_rate
    #self.weights_2 += self.weights_2.T.dot(self.output_delta) * self.learning_rate
    self.weights_2 = self.g1.T.dot(self.output_delta) * self.learning_rate
    ## weight_2 update looks correct
    ## weight_1
    """
    # derivative of the binary cross-entropy with respect to the output, shape (8, 2)
    self.delta_loss_output = - (np.divide(expected, output) - np.divide(1 - expected, 1 - output))
    # times the sigmoid derivative at the output layer, shape (8, 2)
    self.delta_loss_g2 = self.delta_loss_output * self.sigmoid_derivative(output)
    # weights_2.T is (2, 4) and delta_loss_g2 is (8, 2): this dot product raises the ValueError below
    self.delta_loss_a_g2 = np.dot(self.weights_2.T, self.delta_loss_g2)
    # g1.shape[1] is the number of hidden units (4), not the number of samples
    self.delta_loss_W2 = 1./self.g1.shape[1] * np.dot(self.delta_loss_g2, self.g1.T)
    self.delta_loss_g1 = self.delta_loss_a_g2 * self.sigmoid_derivative(self.g1)
    # X.shape[1] is the number of input features (2), not the number of samples
    self.delta_loss_W1 = 1./X.shape[1] * np.dot(self.delta_loss_g1, X.T)
    # gradient descent step
    self.weights_1 = self.weights_1 - (self.learning_rate * self.delta_loss_W1)
    self.weights_2 = self.weights_2 - (self.learning_rate * self.delta_loss_W2)

X_data = data[:,y_data)

print("---------------------------------------")
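With binary cross-entropy and a sigmoid output, the first two lines of this function collapse algebraically: multiplying `-(y/s - (1-y)/(1-s))` by `s*(1-s)` gives `s - y`. The sketch checks that numerically on hypothetical targets and outputs. Using `output - expected` directly avoids dividing by outputs that are close to 0 or 1. Note the sign: this is the gradient itself, so it is *subtracted*, whereas the MSE code's `output_delta` is the negative gradient and is *added*.

```python
import numpy as np

rng = np.random.default_rng(2)
y = rng.integers(0, 2, size=(8, 2)).astype(float)  # hypothetical 0/1 targets
s = rng.uniform(0.05, 0.95, size=(8, 2))           # hypothetical sigmoid outputs

# the two lines from the cross-entropy backpropagation(), with sigmoid_derivative(s) = s * (1 - s)
delta_loss_output = -(np.divide(y, s) - np.divide(1 - y, 1 - s))
delta_loss_g2 = delta_loss_output * s * (1 - s)

print(np.allclose(delta_loss_g2, s - y))  # True
```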

Here is the error:

ValueError                                Traceback (most recent call last)
<ipython-input-56-cf9471ff57e1> in <module>
     12 print("Network output: \n" + str(network.forward_propagation(X_data)))
     13 print("Loss: \n" + str(np.mean(np.square(y_data - network.forward_propagation(X_data)))))
---> 14 network.train_MLP(X_data,y_data)
     15
     16 print("---------------------------------------")

<ipython-input-55-4f31d8641246> in train_MLP(self,y)
     75 def train_MLP(self,y):
     76 output = self.forward_propagation(X)
---> 77 self.backpropagation(X,y,output)
     78
     79

<ipython-input-55-4f31d8641246> in backpropagation(self,output)
     62 self.delta_loss_output = - (np.divide(expected,1 - output))
     63 self.delta_loss_g2 = self.delta_loss_output * self.sigmoid_derivative(output)
---> 64 self.delta_loss_a_g2 = np.dot(self.weights_2.T,self.delta_loss_g2)
     65 self.delta_loss_W2 = 1./self.g1.shape[1] * np.dot(self.delta_loss_g2,self.g1.T)
     66

ValueError: shapes (2,4) and (8,2) not aligned: 4 (dim 1) != 8 (dim 0)
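The shapes in the error point to a layout mismatch: the cross-entropy code multiplies as `W · A`, the convention where each *column* is a sample, while the forward pass stores one sample per *row* (`X` is (8, 2)). In row layout, the weight matrix goes on the right (transposed), and the 1/m averaging should use the number of rows, `X.shape[0]`, rather than `g1.shape[1]` (4 hidden units) or `X.shape[1]` (2 features). The sketch below keeps the question's variable names but uses hypothetical random data and a bias-free network; it is one way to write the step, not the only one.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(3)
X = rng.uniform(-1, 1, size=(8, 2))                       # hypothetical inputs, one row per sample
expected = rng.integers(0, 2, size=(8, 2)).astype(float)  # hypothetical targets
weights_1 = rng.normal(size=(2, 4))
weights_2 = rng.normal(size=(4, 2))
learning_rate = 0.1
m = X.shape[0]                    # batch size = number of rows in this layout

g1 = sigmoid(X @ weights_1)       # (8, 4)
output = sigmoid(g1 @ weights_2)  # (8, 2)

delta_loss_g2 = output - expected                 # (8, 2), cross-entropy + sigmoid simplified
delta_loss_a_g2 = delta_loss_g2.dot(weights_2.T)  # (8, 2) @ (2, 4) -> (8, 4)
delta_loss_W2 = g1.T.dot(delta_loss_g2) / m       # (4, 8) @ (8, 2) -> (4, 2)
delta_loss_g1 = delta_loss_a_g2 * g1 * (1 - g1)   # (8, 4)
delta_loss_W1 = X.T.dot(delta_loss_g1) / m        # (2, 8) @ (8, 4) -> (2, 4)

# these are gradients, so they are subtracted
weights_1 -= learning_rate * delta_loss_W1
weights_2 -= learning_rate * delta_loss_W2
print(delta_loss_W1.shape, delta_loss_W2.shape)   # (2, 4) (4, 2)
```

A quick sanity rule for this layout: each gradient must have the same shape as the weight matrix it updates.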

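I'm not even sure whether either of these implementations is correct. If anyone can spot problems in them and point me in the right direction, I'd really appreciate it. I'm quite lost here. Thanks.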
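For reference, here is a minimal end-to-end sketch of a small sigmoid MLP trained with full-batch gradient descent on binary cross-entropy, with one sample per row and one output unit so an (m, 1) label column needs no broadcasting. Two choices here are assumptions rather than anything from the question: the bias terms (without biases every hidden unit computes `sigmoid(w · x)`, whose 0.5 contour is a line through the origin) and the toy dataset, which is hypothetical because the real rows are not fully recoverable.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class MLP:
    """2-4-1 sigmoid network, full-batch gradient descent on binary cross-entropy.
    Biases and the single output unit are choices made for this sketch."""

    def __init__(self, n_in=2, n_hidden=4, learning_rate=0.5, seed=0):
        rng = np.random.default_rng(seed)
        self.weights_1 = rng.normal(size=(n_in, n_hidden))
        self.bias_1 = np.zeros(n_hidden)
        self.weights_2 = rng.normal(size=(n_hidden, 1))
        self.bias_2 = np.zeros(1)
        self.learning_rate = learning_rate

    def forward_propagation(self, X):
        self.g1 = sigmoid(X @ self.weights_1 + self.bias_1)      # (m, 4)
        return sigmoid(self.g1 @ self.weights_2 + self.bias_2)  # (m, 1)

    def loss(self, X, y):
        # mean binary cross-entropy; clipping keeps log() finite
        out = np.clip(self.forward_propagation(X), 1e-12, 1 - 1e-12)
        return -np.mean(y * np.log(out) + (1 - y) * np.log(1 - out))

    def train_MLP(self, X, y):
        m = X.shape[0]
        output = self.forward_propagation(X)
        delta_2 = (output - y) / m                                       # dLoss/dz2, (m, 1)
        delta_1 = (delta_2 @ self.weights_2.T) * self.g1 * (1 - self.g1)  # dLoss/dz1, (m, 4)
        # gradients are subtracted (gradient descent)
        self.weights_2 -= self.learning_rate * (self.g1.T @ delta_2)
        self.bias_2 -= self.learning_rate * delta_2.sum(axis=0)
        self.weights_1 -= self.learning_rate * (X.T @ delta_1)
        self.bias_1 -= self.learning_rate * delta_1.sum(axis=0)

rng = np.random.default_rng(42)
X = rng.uniform(-1, 1, size=(40, 2))                # hypothetical toy points
y = (X[:, 0] * X[:, 1] > 0).astype(float)[:, None]  # hypothetical labels, shape (40, 1)

net = MLP()
start = net.loss(X, y)
for epoch in range(5000):
    net.train_MLP(X, y)
end = net.loss(X, y)
print(f"loss {start:.3f} -> {end:.3f}")
```

Predictions are `net.forward_propagation(X) > 0.5`. The hidden size, learning rate and epoch count are untuned guesses; a plus-shaped region may need more hidden units than four.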

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

设置时间 控制面板

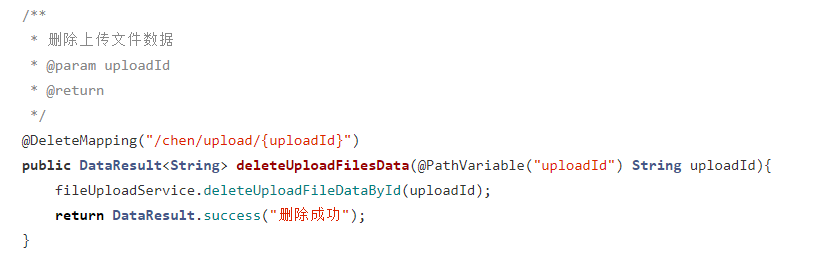

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...