Problem description

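I have a 1GB sample binary file, which I load into memory. When running a benchmark on Python 3.7 on Windows, mmap performs much worse than readinto. The code below runs the benchmark. The first routine uses a plain readinto to read the first N bytes of the file into a buffer, while the second routine uses mmap to map only the first N bytes and copy them into the same buffer.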

    import mmap
    import os
    import time

    import matplotlib.pyplot as plt
    import numpy as np


    def mmap_perf():
        filepath = "test.bin"
        filesize = os.path.getsize(filepath)
        MEGABYTES = 10**6
        batch_size = 10 * MEGABYTES
        # Destination buffer, allocated once and reused by both methods.
        mview = memoryview(bytearray(filesize))
        batch_sizes = []
        load_durations = []
        for i_part in range(1, filesize // batch_size):
            nbytes = i_part * batch_size

            # Method 1: read the first nbytes straight into the buffer.
            # perf_counter() is used because time.time() is coarse on Windows.
            start_time = time.perf_counter()
            with open(filepath, "rb") as fp:
                fp.readinto(mview[0:nbytes])
            duration_readinto = time.perf_counter() - start_time

            # Method 2: map the first nbytes and copy them into the buffer.
            start_time = time.perf_counter()
            with open(filepath, "rb") as fp:
                # Round the mapping length up to the allocation granularity.
                granularity = mmap.ALLOCATIONGRANULARITY
                length = (nbytes // granularity + 1) * granularity
                with mmap.mmap(fp.fileno(), offset=0, length=length,
                               access=mmap.ACCESS_READ) as mp:
                    # Slicing the mmap builds a temporary bytes object first.
                    mview[0:nbytes] = mp[0:nbytes]
            duration_mmap = time.perf_counter() - start_time

            msg = "{2}MB\nreadinto: {0:.4f}\nmmap: {1:.4f}"
            print(msg.format(duration_readinto, duration_mmap, nbytes // MEGABYTES))
            batch_sizes.append(nbytes // MEGABYTES)
            load_durations.append((duration_readinto, duration_mmap))

        # One column per method: readinto, then mmap.
        load_durations = np.asarray(load_durations)
        plt.plot(batch_sizes, load_durations)
        plt.show()


    if __name__ == "__main__":
        mmap_perf()

The resulting plot looks as follows:

I cannot understand why mmap loses, even when loading a small batch of only 10MB from the 1GB file.

Solution

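For a sequential read workload like this one, reading through the read system call can benefit a great deal from operating system prefetching (read-ahead), whereas with mmap you would probably have to set MAP_POPULATE (a Linux mmap flag) to get the same benefit. If you test a random read workload instead, you will see a completely different comparison.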
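To try the random read comparison suggested above, something along the following lines could serve as a starting point. This is only a rough sketch: the helper names make_sample_file and random_read_benchmark, the 4096-byte chunk size, and the number of reads are all assumptions made for illustration and do not come from the original question. The file is opened with buffering=0 so that each readinto goes to the operating system rather than to Python's own buffer.

```python
import mmap
import os
import random
import tempfile
import time


def make_sample_file(size):
    """Create a temporary file of `size` random bytes (hypothetical helper)."""
    fd, path = tempfile.mkstemp(suffix=".bin")
    with os.fdopen(fd, "wb") as fp:
        fp.write(os.urandom(size))
    return path


def random_read_benchmark(path, n_reads=1000, chunk=4096, seed=0):
    """Time `n_reads` random chunk-sized reads via seek+readinto and via mmap.

    Returns (seconds_readinto, seconds_mmap, results_match).
    """
    filesize = os.path.getsize(path)
    rng = random.Random(seed)
    offsets = [rng.randrange(0, filesize - chunk + 1) for _ in range(n_reads)]
    buf = bytearray(chunk)
    view = memoryview(buf)

    # Variant 1: unbuffered file, one seek and one readinto per offset.
    checksum_read = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as fp:
        for off in offsets:
            fp.seek(off)
            fp.readinto(view)
            checksum_read += buf[0] + buf[-1]
    t_read = time.perf_counter() - start

    # Variant 2: map the whole file once, then copy a slice per offset.
    checksum_mmap = 0
    start = time.perf_counter()
    with open(path, "rb") as fp:
        with mmap.mmap(fp.fileno(), length=0, access=mmap.ACCESS_READ) as mp:
            for off in offsets:
                view[:] = mp[off:off + chunk]
                checksum_mmap += buf[0] + buf[-1]
    t_mmap = time.perf_counter() - start

    # Both variants read the same offsets, so the checksums should agree.
    return t_read, t_mmap, checksum_read == checksum_mmap
```

Calling random_read_benchmark("test.bin") on the 1GB file from the question would give the two timings side by side. The numbers depend heavily on the operating system, the storage device, and whether the file is already in the page cache, so it is worth repeating the measurement several times before drawing conclusions.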

设置时间 控制面板

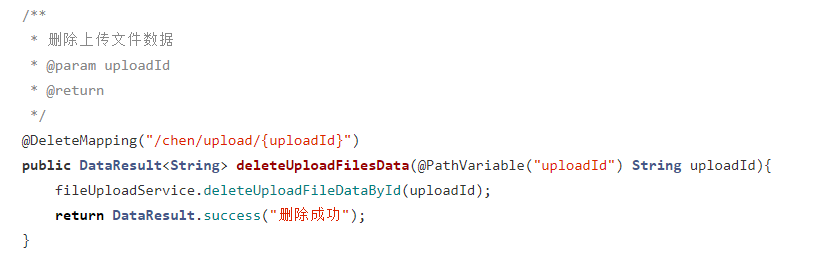

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...