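Problem description

I have spent two days on this and still cannot figure it out.

The whole deployment runs on bare metal.

For simplicity, I reduced the cluster from HA to 1 master node and 2 workers.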

    $ kubectl get nodes
    NAME      STATUS   ROLES    AGE    VERSION
    worker1   Ready    <none>   99m    v1.19.2
    worker2   Ready    <none>   99m    v1.19.2
    master    Ready    master   127m   v1.19.2

I am running Nginx-ingress, but I think that is irrelevant, since the same configuration should also apply to, for example, HAProxy.

    $ kubectl -n ingress-nginx get pod
    NAME                                        READY   STATUS      RESTARTS   AGE
    ingress-nginx-admission-create-g645g        0/1     Completed   0          129m
    ingress-nginx-admission-patch-ftg7p         0/1     Completed   2          129m
    ingress-nginx-controller-587cd59444-cxm7z   1/1     Running     0          129m

I can see that there is no external IP on the cluster:

    $ kubectl get service -A
    NAMESPACE                NAME                                 TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)                      AGE
    cri-o-metrics-exporter   cri-o-metrics-exporter               ClusterIP   192.168.11.163    <none>        80/TCP                       129m
    default                  kubernetes                           ClusterIP   192.168.0.1       <none>        443/TCP                      130m
    ingress-nginx            ingress-nginx-controller             NodePort    192.168.30.224    <none>        80:32647/TCP,443:31706/TCP   130m
    ingress-nginx            ingress-nginx-controller-admission   ClusterIP   192.168.212.9     <none>        443/TCP                      130m
    kube-system              kube-dns                             ClusterIP   192.168.0.10      <none>        53/UDP,53/TCP,9153/TCP       130m
    kube-system              metrics-server                       ClusterIP   192.168.178.171   <none>        443/TCP                      129m
    kubernetes-dashboard     dashboard-metrics-scraper            ClusterIP   192.168.140.142   <none>        8000/TCP                     129m
    kubernetes-dashboard     kubernetes-dashboard                 ClusterIP   192.168.100.126   <none>        443/TCP                      129m

ConfigMap example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: dashboard-ingress-nginx
      namespace: kubernetes-dashboard
    data:
      ssl-certificate: my-cert

Ingress configuration example:

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: dashboard-ingress-ssl
      namespace: kubernetes-dashboard
      annotations:
        nginx.ingress.kubernetes.io/secure-backends: "true"
        nginx.ingress.kubernetes.io/ssl-passthrough: "true"
        nginx.ingress.kubernetes.io/whitelist-source-range: 10.96.0.0/16 #the IP to be allowed
    spec:
      tls:
      - hosts:
        - kube.my.domain.internal
        secretName: my-cert
      rules:
      - host: kube.my.domain.internal
        http:
          paths:
          - path: /
            backend:
              serviceName: kubernetes-dashboard
              servicePort: 443

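If I point my browser at the domain, e.g. https://kube.my.domain.internal, I get 403 Forbidden. Could it be an RBAC rule that prevents me from viewing the dashboard?

I found a related question, ingress configuration for dashboard, but the configuration that seems to work for other users does not work for me. I also tried whitelisting a large range of IPs as described in Restricting Access By IP (Allow/Block Listing) Using NGINX-Ingress Controller in Kubernetes, but the result is still the same.

I also cannot understand why Nginx-ingress starts on only one node when I would expect it to start on both nodes (workers). I have no labels on any of the nodes.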
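On the single-node question: the pod name ingress-nginx-controller-587cd59444-cxm7z has the shape of a pod owned by a Deployment, and a Deployment only schedules as many pods as its replica count, independent of node labels. Assuming the controller is a Deployment named ingress-nginx-controller with the usual app.kubernetes.io labels (both are assumptions to verify against the installed manifest), a minimal patch sketch that requests two replicas on different nodes could look like this. The file name is hypothetical.

```yaml
# controller-replicas-patch.yaml (hypothetical file name)
# Assumption: the controller pods carry the labels listed under matchLabels.
spec:
  replicas: 2
  template:
    spec:
      affinity:
        podAntiAffinity:
          # Refuse to place two controller pods on the same node.
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchLabels:
                  app.kubernetes.io/name: ingress-nginx
                  app.kubernetes.io/component: controller
              topologyKey: kubernetes.io/hostname
```

It could be applied with kubectl -n ingress-nginx patch deployment ingress-nginx-controller --patch "$(cat controller-replicas-patch.yaml)". Running the controller as a DaemonSet is another common way to get one pod per node.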

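I have also read about MetalLB Bare-metal considerations, but in my case I am not trying to reach anything outside the private network; I am only trying to reach the nodes from outside the cluster. I may be wrong, but I do not think it is needed at this point.

Update: I have managed to launch the dashboard through kubectl proxy, as documented on the official page Web UI (Dashboard), but since I want to upgrade the cluster to HA this is not the best solution. If the node running the proxy goes down, the dashboard becomes inaccessible.

Update 2: After following the documentation at metallb/Layer 2 Configuration, I arrived at the following point: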

    $ kubectl get pods -A -o wide
    NAMESPACE                NAME                                        READY   STATUS      RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
    cri-o-metrics-exporter   cri-o-metrics-exporter-77c9cf9746-5xw4d     1/1     Running     0          30m     172.16.9.131    workerNode   <none>           <none>
    ingress-nginx            ingress-nginx-admission-create-cz9h9        0/1     Completed   0          31m     172.16.9.132    workerNode   <none>           <none>
    ingress-nginx            ingress-nginx-admission-patch-8fkhk         0/1     Completed   2          31m     172.16.9.129    workerNode   <none>           <none>
    ingress-nginx            ingress-nginx-controller-8679c5678d-fmc2q   1/1     Running     0          31m     172.16.9.134    workerNode   <none>           <none>
    kube-system              calico-kube-controllers-574d679d8c-7jt87    1/1     Running     0          32m     172.16.25.193   masterNode   <none>           <none>
    kube-system              calico-node-sf2cn                           1/1     Running     0          9m11s   10.96.95.52     workerNode   <none>           <none>
    kube-system              calico-node-zq9vf                           1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              coredns-7588b55795-5pg6m                    1/1     Running     0          32m     172.16.25.195   masterNode   <none>           <none>
    kube-system              coredns-7588b55795-n8z2p                    1/1     Running     0          32m     172.16.25.194   masterNode   <none>           <none>
    kube-system              etcd-masterNode                             1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              kube-apiserver-masterNode                   1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              kube-controller-manager-masterNode          1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              kube-proxy-6d5sj                            1/1     Running     0          9m11s   10.96.95.52     workerNode   <none>           <none>
    kube-system              kube-proxy-9dfbk                            1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              kube-scheduler-masterNode                   1/1     Running     0          32m     10.96.96.98     masterNode   <none>           <none>
    kube-system              metrics-server-76bb4cfc9f-5tzfh             1/1     Running     0          31m     172.16.9.130    workerNode   <none>           <none>
    kubernetes-dashboard     dashboard-metrics-scraper-5f644f6df-8sjsx   1/1     Running     0          31m     172.16.25.197   masterNode   <none>           <none>
    kubernetes-dashboard     kubernetes-dashboard-85b6486959-thhnl       1/1     Running     0          31m     172.16.25.196   masterNode   <none>           <none>
    metallb-system           controller-56f5f66c6f-5vvhf                 1/1     Running     0          31m     172.16.9.133    workerNode   <none>           <none>
    metallb-system           speaker-n5gxx                               1/1     Running     0          31m     10.96.96.98     masterNode   <none>           <none>
    metallb-system           speaker-n9x9v                               1/1     Running     0          8m51s   10.96.95.52     workerNode   <none>           <none>

    $ kubectl get service -A
    NAMESPACE                NAME                                 TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)                      AGE
    cri-o-metrics-exporter   cri-o-metrics-exporter               ClusterIP   192.168.74.27     <none>        80/TCP                       31m
    default                  kubernetes                           ClusterIP   192.168.0.1       <none>        443/TCP                      33m
    ingress-nginx            ingress-nginx-controller             NodePort    192.168.201.230   <none>        80:30509/TCP,443:31554/TCP   32m
    ingress-nginx            ingress-nginx-controller-admission   ClusterIP   192.168.166.218   <none>        443/TCP                      32m
    kube-system              kube-dns                             ClusterIP   192.168.0.10      <none>        53/UDP,9153/TCP              32m
    kube-system              metrics-server                       ClusterIP   192.168.7.75      <none>        443/TCP                      31m
    kubernetes-dashboard     dashboard-metrics-scraper            ClusterIP   192.168.51.178    <none>        8000/TCP                     31m
    kubernetes-dashboard     kubernetes-dashboard                 ClusterIP   192.168.50.70     <none>        443/TCP                      31m

But I still cannot see an external IP that I could use to reach the cluster through NAT.

Solution

The way I handle a bare-metal setup is to install ingress-nginx together with MetalLB and use NAT to forward the traffic received on the host (ports 80 and 443) to ingress-nginx.

0_cluster_setup_metallb_conf.yaml:

    # MetalLB installation
    kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/namespace.yaml
    kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/metallb.yaml
    # On first install only
    kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)"
    kubectl apply -f $YAML_FILES/0_cluster_setup_metallb_conf.yaml
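The contents of 0_cluster_setup_metallb_conf.yaml are not shown here. For orientation only, a Layer 2 configuration for MetalLB v0.9.x is generally a ConfigMap named config in the metallb-system namespace, roughly as sketched below. The pool name and the address range are placeholders chosen for illustration, not values from the answer; the range has to consist of free addresses on the node network.

```yaml
# Hypothetical sketch of a MetalLB v0.9.x Layer 2 configuration.
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default            # assumed pool name
      protocol: layer2
      addresses:
      - 10.96.95.240-10.96.95.250   # placeholder range, replace with unused IPs
```

Once a pool is configured, MetalLB assigns an address from it to any Service of type LoadBalancer, and that address shows up in the EXTERNAL-IP column.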

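Finally, after all this time, I managed to figure it out. I am a beginner with k8s, so this solution may help other beginners.

I decided to create the Ingress in the same namespace where the dashboard runs. You can choose a different namespace; just make sure that namespace can reach the kubernetes-dashboard Service. Documentation can be found at Understanding namespaces and DNS.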
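An Ingress can only name a Service in its own namespace as a backend, so using a different namespace needs some bridge to the dashboard Service. One common pattern, sketched here under assumptions, is an ExternalName Service that points at the dashboard's cluster DNS name. The namespace my-namespace is hypothetical, and cluster.local is assumed to be the cluster domain (it is the default). Whether SSL passthrough behaves the same through an ExternalName Service is not covered by this answer and would need testing.

```yaml
# Hypothetical bridge Service in another namespace.
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: my-namespace        # assumed namespace of the Ingress
spec:
  type: ExternalName
  # DNS form: <service>.<namespace>.svc.<cluster-domain>
  externalName: kubernetes-dashboard.kubernetes-dashboard.svc.cluster.local
  ports:
    - port: 443
```

Keeping the Ingress in the kubernetes-dashboard namespace, as done in this answer, avoids the extra object entirely.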

Complete code of an example that works with the NGINX ingress:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: kubernetes-dashboard-ingress
      namespace: kubernetes-dashboard
      annotations:
        kubernetes.io/ingress.class: "nginx"
        nginx.ingress.kubernetes.io/ssl-passthrough: "true"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    spec:
      tls:
      - hosts:
        - "dashboard.example.com"
        secretName: kubernetes-dashboard-secret
      rules:
      - host: "dashboard.example.com"
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443

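Remember to follow the annotations of your ingress controller (NGINX in this example), as they may change in later releases. For example, since version image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1 the annotation secure-backends has been replaced by backend-protocol.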
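To make the annotation change concrete, here is a small fragment contrasting the older annotation used in the question with its replacement. It is only the metadata part of an Ingress, shown as a sketch, and it assumes the backend (the dashboard) serves HTTPS.

```yaml
metadata:
  annotations:
    # Older form, as used in the question:
    # nginx.ingress.kubernetes.io/secure-backends: "true"
    # Replacement understood by newer controller versions:
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
```

If the controller does not recognise an annotation it ignores it, which can leave the controller talking plain HTTP to an HTTPS-only backend.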

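In the example provided, the external load balancer must also be configured for SSL pass-through.

Update: in case someone else is a beginner with k8s, one point that was not very clear to me is that if you decide to use MetalLB, you need to specify type: LoadBalancer in the ingress controller's manifest. The type field belongs to the controller's Service, not to the Ingress resource.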
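As a rough illustration of that last point, the controller Service that appears as NodePort in the question's output would be declared along these lines. The selector labels and the named target ports are assumptions based on the usual ingress-nginx manifests and should be compared with the Service actually deployed.

```yaml
# Sketch of the ingress-nginx controller Service exposed through MetalLB.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: LoadBalancer             # NodePort in the question's output
  selector:                      # assumed labels
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/component: controller
  ports:
    - name: http
      port: 80
      targetPort: http           # assumed named container port
    - name: https
      port: 443
      targetPort: https          # assumed named container port
```

After applying it, kubectl -n ingress-nginx get service should show an address from the MetalLB pool under EXTERNAL-IP instead of <none>.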

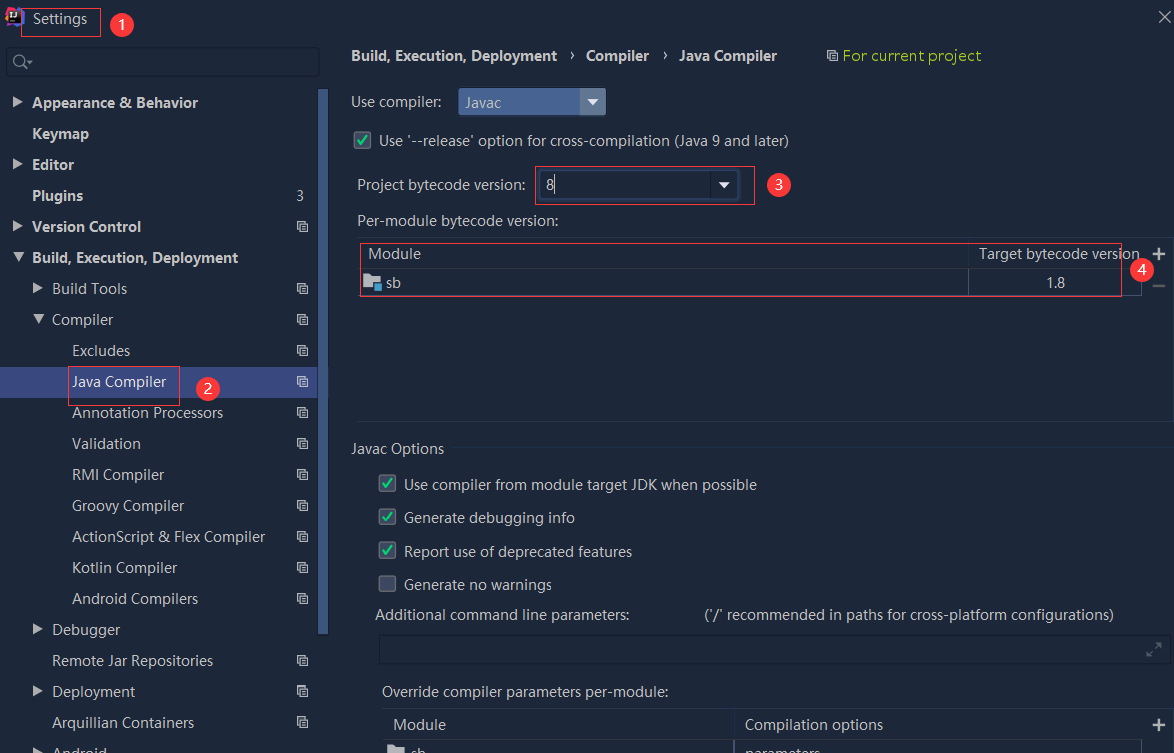

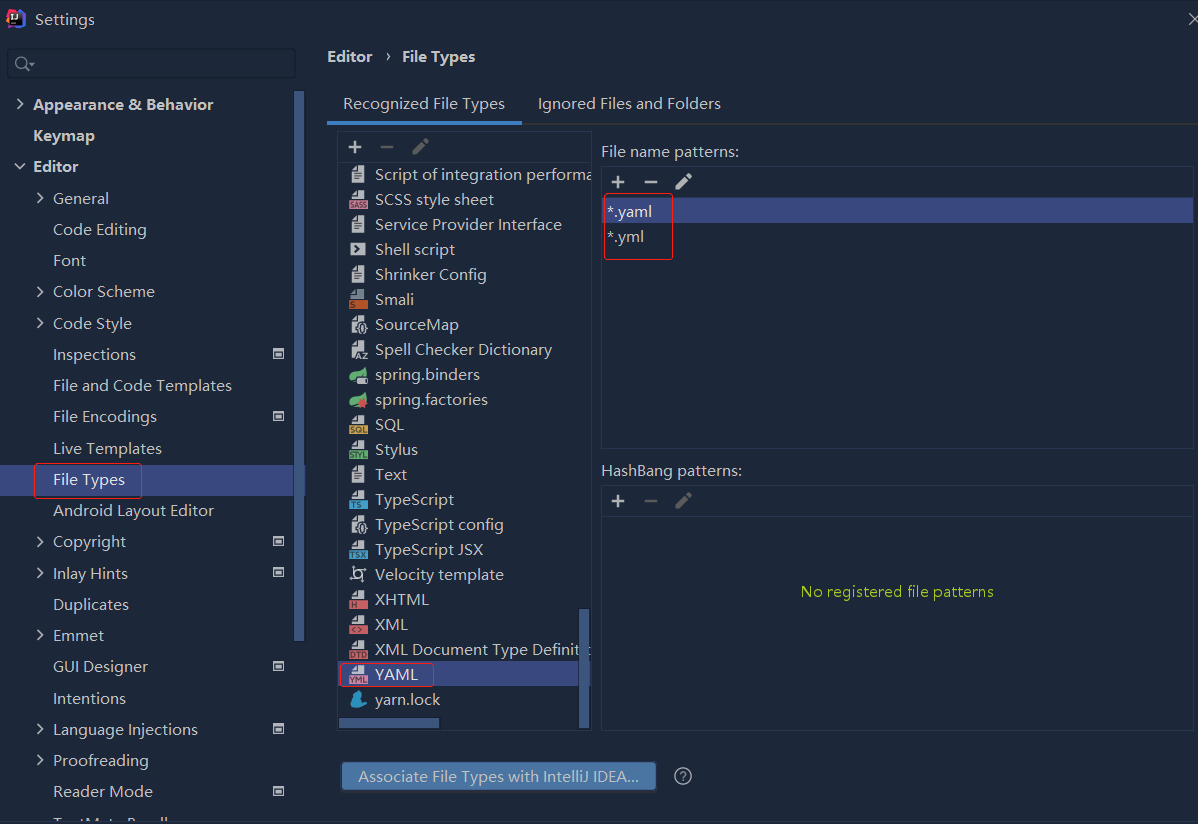

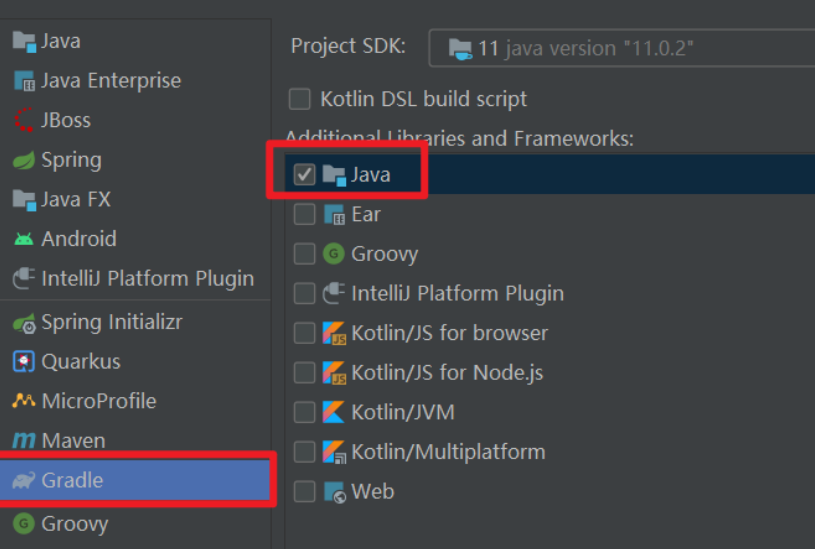
