问题描述

我正在 Fortran 90 中制作一个模块来在给定的矩阵上运行 PARPACK。我有一个现有的 ARPACK 代码,它按预期正常运行。我尝试将其转换为 PARPACK,但它遇到了内存清除错误。我对编码和 Fortran 还很陌生,请原谅我犯的任何错误。

代码:

!ARPACK module

module parpack

implicit none

contains

subroutine parp

! use mpi

include '/usr/lib/x86_64-linux-gnu/openmpi/include/mpif.h'

integer comm,myid,nprocs,rc,nloc,status(MPI_STATUS_SIZE)

integer,parameter :: pres=8

integer nev,ncv,maxn,maxnev,maxncv

parameter (maxn=10**7,maxnev=maxn-1,maxncv=maxn)

! Arrays for SNAUPD

integer iparam(11),ipntr(14)

logical,allocatable :: select(:)

real(kind=pres),allocatable :: workd(:),workl(:),worktmp1(:),worktmp2(:)

! Scalars for SNAUPD

character bmat*1,which*2

integer ido,n,info,ierr,ldv

integer i,j,ishfts,maxitr,mode1,nconv

integer(kind=pres) lworkl

real(kind=pres) tol

! Arrays for SNEUPD

real(kind=pres),allocatable :: d(:,:),resid(:),v(:,workev(:),z(:,:)

! Scalars for SNEUPD

logical rvec,first

real sigmar,sigmai

!==============================================

real(kind=pres),allocatable :: mat(:,:)

open (11,file = 'matrix.dat',status = 'old')

read (11,*) n

!=============================================

! Dimension of the problem

nev = n/10

ncv = nev+2

ldv = n

bmat = 'I'

which = 'LM'

! Additional environment variables

ido = 0

tol = 0.0E+0

info = 0

lworkl = 3*ncv**2+6*ncv

! Algorithm Mode specifications:

ishfts = 1

maxitr = 300

mode1 = 1

iparam(1) = ishfts

iparam(3) = maxitr

iparam(7) = mode1

! Distribution to nodes

!=============================================

! Matrix allocation

allocate (mat(n,n))

! PDNAUPD

allocate (workd(5*n))

allocate (workl(lworkl))

allocate (resid(n))

allocate (worktmp1(n))

allocate (worktmp2(n))

! PDNEUPD

allocate (d(n,3))

allocate (v(ldv,ncv))

allocate (workev(3*n))

allocate (z(ldv,ncv))

allocate (select(ncv))

!===========================================

! Read Matrix from the provided file

mat = 0

read(11,*) mat

mat = transpose(mat)

!===========================================

! MPI Calling

call MPI_INIT(ierr)

comm = MPI_COMM_WORLD

call MPI_COMM_RANK(comm,ierr)

call MPI_COMM_SIZE(comm,ierr)

nloc = n/nprocs

! if ( mod(n,nprocs) .gt. myid ) nloc = nloc + n

!===============================================

20 continue

call pdnaupd(comm,ido,bmat,which,nev,tol,resid,v,ldv,iparam,ipntr,workd,workl,lworkl,info) !Top level solver

call MPI_BARRIER(comm,ierr)

print *,iparam(5) !for testing

!===============================================

if (ido .eq. -1 .or. ido .eq. 1) then

worktmp1 = 0

if (myid .ne. 0) then !It is slave

call MPI_SEND(workd(ipntr(1)),MPI_DOUBLE_PRECISION,comm,ierr)

else !It is host

worktmp1(1:nloc) = workd(ipntr(1):ipntr(1)+nloc-1)

i = nprocs

if (i .gt. 1) then

do i=1,nprocs-1

call MPI_RECV(worktmp1(i*nloc+1),i,status,ierr)

end do

endif

endif

call MPI_BARRIER(comm,ierr)

if (myid .eq. 0) then !It is host

! Matrix multiplication

worktmp2 = 0

call matmultiply(n,mat,worktmp1,worktmp2)

workd(ipntr(2):ipntr(2)+nloc-1) = worktmp2(1:nloc)

i = nprocs

if (i .gt. 1) then

do i=1,nprocs-1

call MPI_SEND(worktmp2(i*nloc+1),100*i,ierr)

end do

endif

else !It is slave

call MPI_RECV(workd(ipntr(2)),100*myid,ierr)

endif

go to 20

! call matmultiply(n,workd(ipntr(1):ipntr(1)+n-1),workd(ipntr(2):ipntr(2)+n-1))

! go to 20

endif

! print *,info !for testing

!===============================================================

! Post-processing for eigenvalues

rvec = .true.

if (myid .eq. 0) then

call pdneupd ( comm,rvec,'A',select,d,d(1,2),z,sigmar,sigmai,&

workev,&

workd,info)

endif

! print *,info !for testing

close(11)

call MPI_FINALIZE(ierr)

return

end subroutine

!==============================================================================================

! Additional Function definitions

subroutine matmultiply(n,w)

integer n,j

integer,parameter :: pres=8

real(kind = pres) mat(n,n),temp(n)

real(kind = pres) v(n),w(n)

temp = 0

do j = 1,n

do i = 1,n

temp(j) = temp(j) + mat(i,j)*v(i)

end do

end do

w = temp

return

end subroutine

end module

对于大量多余的行和评论,我深表歉意,我还没有整理好以进行定稿。

当我使用 ./a.out 在单个线程上运行代码时,我得到以下输出:

Invalid MIT-MAGIC-COOKIE-1 key 1 0 1629760560

1 0 1629760560

1 0 1629760560

1 0 1629760560

.

.

. <A long chain as the code is exhausting all iterations>

.<first of the numbers is ido,which starts with 1 instead of -1 for some reason,second being

.info and third being iparam(5) which is a random number until the final iteration>

.

99 1 1

munmap_chunk(): invalid pointer

Program received signal SIGABRT: Process abort signal.

Backtrace for this error:

#0 0x7f5a863d0d01 in ???

#1 0x7f5a863cfed5 in ???

#2 0x7f5a8620420f in ???

#3 0x7f5a8620418b in ???

#4 0x7f5a861e3858 in ???

#5 0x7f5a8624e3ed in ???

#6 0x7f5a8625647b in ???

#7 0x7f5a862566cb in ???

#8 0x560f05ac1819 in ???

#9 0x560f05abd7bc in checker

at /home/srivatsank/Desktop/fortran/lap_vs_arp/ptest/ptest.f90:45

#10 0x560f05abd8d9 in main

at /home/srivatsank/Desktop/fortran/lap_vs_arp/ptest/ptest.f90:3

Aborted (core dumped)

ptest 中的第 45 行是 call parp

ptest 中的第 3 行是 use parpack(name of the module)

主要代码如下:

program checker

use parpack

use arpack

! use lapack

implicit none

!Program to test LAPACK and ARPACK

! 1. Variable definition

integer a,i

real,allocatable :: mat(:,:)

real t0,t1

a=2

! Loop

! do 20 a = 1,3

! Open File

open(unit=10,status = 'replace')

! 2. Generate Symmetric matrices

n = 10**a

allocate (mat(n,n))

call RANDOM_NUMBER(mat)

! 3. Save symmetric matrices to r.dat

write (10,*) n

do 30 i=1,n

write(10,*) mat(i,:)

30 end do

deallocate(mat)

close(10)

! 4. Test time taken by each of the routines

! call cpu_time(t0)

! call arp

! call cpu_time(t1)

! print *,'n:','ARPACK time taken:',t1-t0

call cpu_time(t0)

call parp

call cpu_time(t1)

print *,'PARPACK time taken:',t1-t0

!20 end do

end program checker

当邮件程序试图退出子程序时,内存错误发生在子程序的最后。我已经通过将语句打印为子例程中的最后一行来验证这一点。

并且在运行 mpirun -np 4 a.out 时,代码只是进入 pdneupd 进程并永久保存在那里。有人可以帮忙吗?

解决方法

暂无找到可以解决该程序问题的有效方法,小编努力寻找整理中!

如果你已经找到好的解决方法,欢迎将解决方案带上本链接一起发送给小编。

小编邮箱:dio#foxmail.com (将#修改为@)

设置时间 控制面板

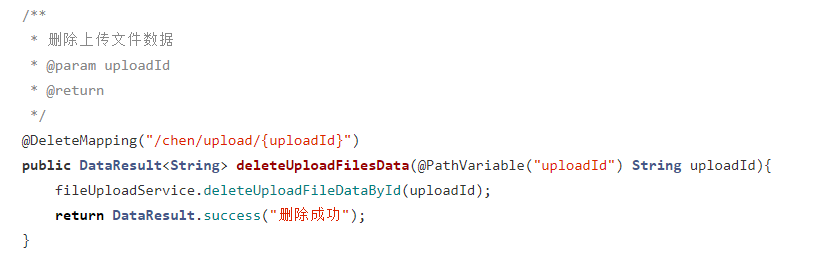

设置时间 控制面板 错误1:Request method ‘DELETE‘ not supported 错误还原:...

错误1:Request method ‘DELETE‘ not supported 错误还原:...