Problem description

I'm building a recording app with Expo. This is my function to start recording, and it works fine.

const [recording, setRecording] = React.useState();

async function startRecording() {
  try {
    console.log('Requesting permissions..');
    await Audio.requestPermissionsAsync();
    await Audio.setAudioModeAsync({
      allowsRecordingIOS: true,
      playsInSilentModeIOS: true,
    });
    console.log('Starting recording..');
    const recording = new Audio.Recording();
    await recording.prepareToRecordAsync(Audio.RECORDING_OPTIONS_PRESET_HIGH_QUALITY);
    await recording.startAsync();
    setRecording(recording);
    console.log('Recording started');
  } catch (err) {
    console.error('Failed to start recording', err);
  }
}

This is my "stop" function; the recording stops and is stored:

async function stopRecording() {
  console.log('Stopping recording..');
  setRecording(undefined);
  await recording.stopAndUnloadAsync();
  const uri = recording.getURI();
  console.log('Recording stopped and stored at', uri);
}

Now I want to play this saved sound with a play button. How can I get at the saved sound?

return (
  <View style={styles.container}>
    <Button
      title={recording ? 'Stop Recording' : 'Start Recording'}
      onPress={recording ? stopRecording : startRecording}
    />
  </View>
);

I'd like to pass this stored URI as the file location for playback. Is that possible?

Here is the Snack link: https://snack.expo.io/ZN9MBtpLd

Solution

A working Snack of the recording implementation is here

Screenshot of the result

First of all, why are you setting the state to undefined after you get the recording?

Do it this way instead.

At the top of the file, write this:

let recording = new Audio.Recording();
// Don't write this inside your function. Write it outside the function.

Then, inside the function:

const [RecordedURI, SetRecordedURI] = useState("");
const [isRecording, SetisRecording] = useState(false);

Here is the full implementation:

import React, { useState, useRef, useEffect } from 'react';
import { View, StyleSheet, Button, Text } from 'react-native';
import { Audio } from 'expo-av';

// Kept at module scope so the instance survives re-renders.
let recording = new Audio.Recording();

export default function App() {
  const [RecordedURI, SetRecordedURI] = useState('');
  const [AudioPerm, SetAudioPerm] = useState(false);
  const [isRecording, SetisRecording] = useState(false);
  const [isPLaying, SetisPLaying] = useState(false);
  const Player = useRef(new Audio.Sound());

  useEffect(() => {
    GetPermission();
  }, []);

  // Ask for microphone permission and remember the result.
  const GetPermission = async () => {
    const getAudioPerm = await Audio.requestPermissionsAsync();
    SetAudioPerm(getAudioPerm.granted);
  };

  const startRecording = async () => {
    if (AudioPerm === true) {
      try {
        await recording.prepareToRecordAsync(
          Audio.RECORDING_OPTIONS_PRESET_HIGH_QUALITY
        );
        await recording.startAsync();
        SetisRecording(true);
      } catch (error) {
        console.log(error);
      }
    } else {
      GetPermission();
    }
  };

  const stopRecording = async () => {
    try {
      await recording.stopAndUnloadAsync();
      const result = recording.getURI();
      SetRecordedURI(result); // Here is the URI
      recording = new Audio.Recording(); // fresh instance for the next recording
      SetisRecording(false);
    } catch (error) {
      console.log(error);
    }
  };

  const playSound = async () => {
    try {
      await Player.current.loadAsync({ uri: RecordedURI }, {}, true);
      const response = await Player.current.getStatusAsync();
      if (response.isLoaded) {
        if (response.isPlaying === false) {
          await Player.current.playAsync();
          SetisPLaying(true);
        }
      }
    } catch (error) {
      console.log(error);
    }
  };

  const stopSound = async () => {
    try {
      const checkLoading = await Player.current.getStatusAsync();
      if (checkLoading.isLoaded === true) {
        await Player.current.stopAsync();
        // Unload so the next loadAsync() in playSound does not fail
        // on a sound that is already loaded.
        await Player.current.unloadAsync();
        SetisPLaying(false);
      }
    } catch (error) {
      console.log(error);
    }
  };

  return (
    <View style={styles.container}>
      <Button
        title={isRecording ? 'Stop Recording' : 'Start Recording'}
        onPress={isRecording ? () => stopRecording() : () => startRecording()}
      />
      <Button
        title="Play Sound"
        onPress={isPLaying ? () => stopSound() : () => playSound()}
      />
      <Text>{RecordedURI}</Text>
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
    justifyContent: 'center',
    backgroundColor: '#ecf0f1',
    padding: 8,
  },
});
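In the implementation above, isPLaying is only cleared when the button is pressed again, so it stays true after a clip finishes on its own. One possible way to handle that is a playback status callback. The sketch below is only an illustration: makeStatusHandler is a hypothetical helper name, and it assumes the sound object behaves like an expo-av Audio.Sound (status objects with isLoaded, didJustFinish and isLooping, plus an unloadAsync method).

```javascript
// Builds a callback suitable for sound.setOnPlaybackStatusUpdate().
// `sound` is assumed to be an expo-av Audio.Sound (or any stand-in that
// has unloadAsync); `setIsPlaying` is the React state setter.
// makeStatusHandler is a hypothetical helper, not part of expo-av.
function makeStatusHandler(sound, setIsPlaying) {
  return function onPlaybackStatusUpdate(status) {
    if (!status.isLoaded) {
      // Nothing is loaded, so nothing can be playing.
      setIsPlaying(false);
      return;
    }
    if (status.didJustFinish && !status.isLooping) {
      // The clip reached its end: reset the UI flag and unload the sound
      // so the same instance can be loaded again later.
      setIsPlaying(false);
      sound.unloadAsync().catch((error) => console.log(error));
    }
  };
}
```

It could be registered once, for example inside the existing useEffect, with Player.current.setOnPlaybackStatusUpdate(makeStatusHandler(Player.current, SetisPLaying)).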

This is an interesting problem, and I ran into the same one: I couldn't play from the recorded URI.

I also went through the official documentation, but it only provides limited examples.

However, I came up with a solution that works for me. Audio.Sound.createAsync() also accepts a URI.

You just pass {uri: recording.getURI() || URIFROMFileSystem} and it works perfectly.

My example:

const { sound } = await Audio.Sound.createAsync({
  uri: "file:///Users/xyz/Library/Developer/CoreSimulator/Devices/1BBRFGFCBC414-6685-4818-B625-01038771B105/data/Containers/Data/Application/18E8D28E-EA03-4733-A0CF-F3E21A23427D/Library/Caches/ExponentExperienceData/%2540anonymous%252Fchat-app-c88a6b2e-ad36-45b8-9e5e-1fb6001826eb/AV/recording-87738E73-A38E-49E8-89D1-9689DC5F316B.caf",
});
setSound(sound);
console.log("Playing Sound");
await sound.playAsync();
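Rather than hard-coding the file path, the URI returned by recording.getURI() can be passed straight through. The following is a minimal sketch under a few assumptions: playRecording is a hypothetical helper name, and the Audio module is passed in as a parameter (normally it would be the Audio export from expo-av) so the function can be tried outside an Expo app with a stand-in object.

```javascript
// Plays a recording from its URI and returns the created sound.
// `Audio` is normally `import { Audio } from 'expo-av'`; it is injected here
// only so the helper can be exercised with a stand-in.
// playRecording is a hypothetical helper, not part of expo-av.
async function playRecording(Audio, uri) {
  if (!uri) {
    // recording.getURI() returns null until the recording has been prepared,
    // so there may be nothing to play yet.
    return null;
  }
  // shouldPlay: true asks the sound to start playing once it has loaded.
  const { sound } = await Audio.Sound.createAsync({ uri }, { shouldPlay: true });
  // Return the sound so the caller can stop or unload it later.
  return sound;
}
```

A call might look like const sound = await playRecording(Audio, recording.getURI()); followed by setSound(sound), with sound.unloadAsync() called once the sound is no longer needed.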

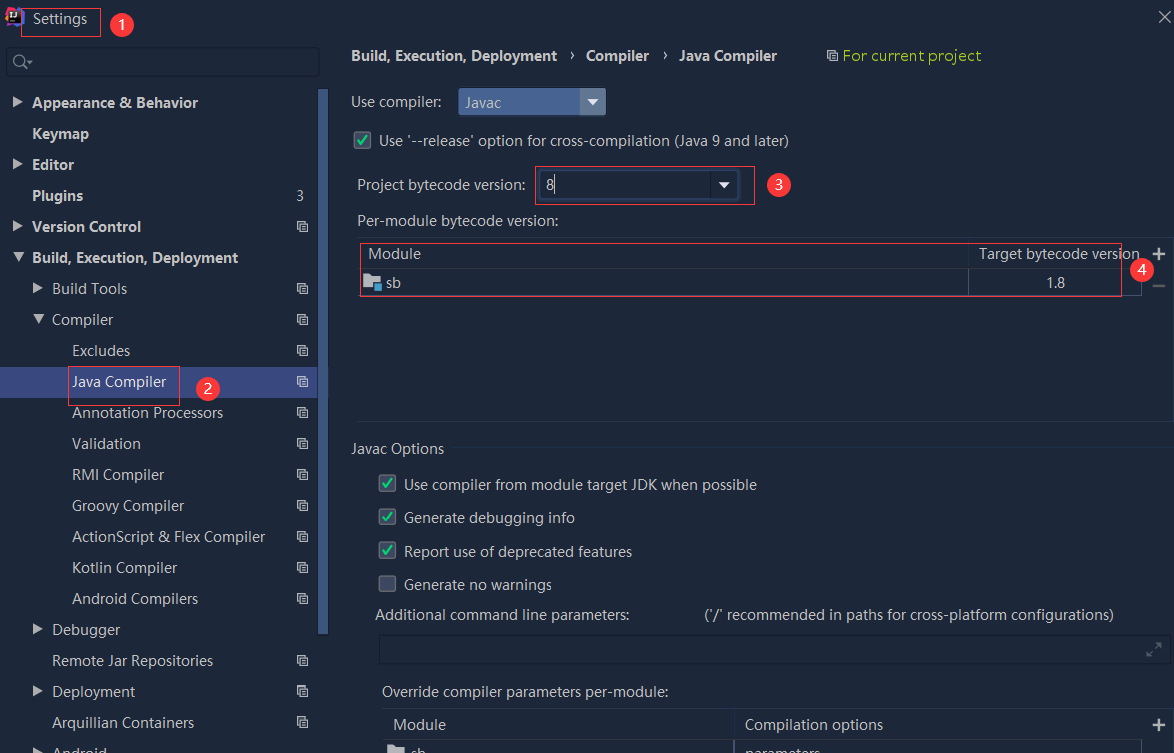

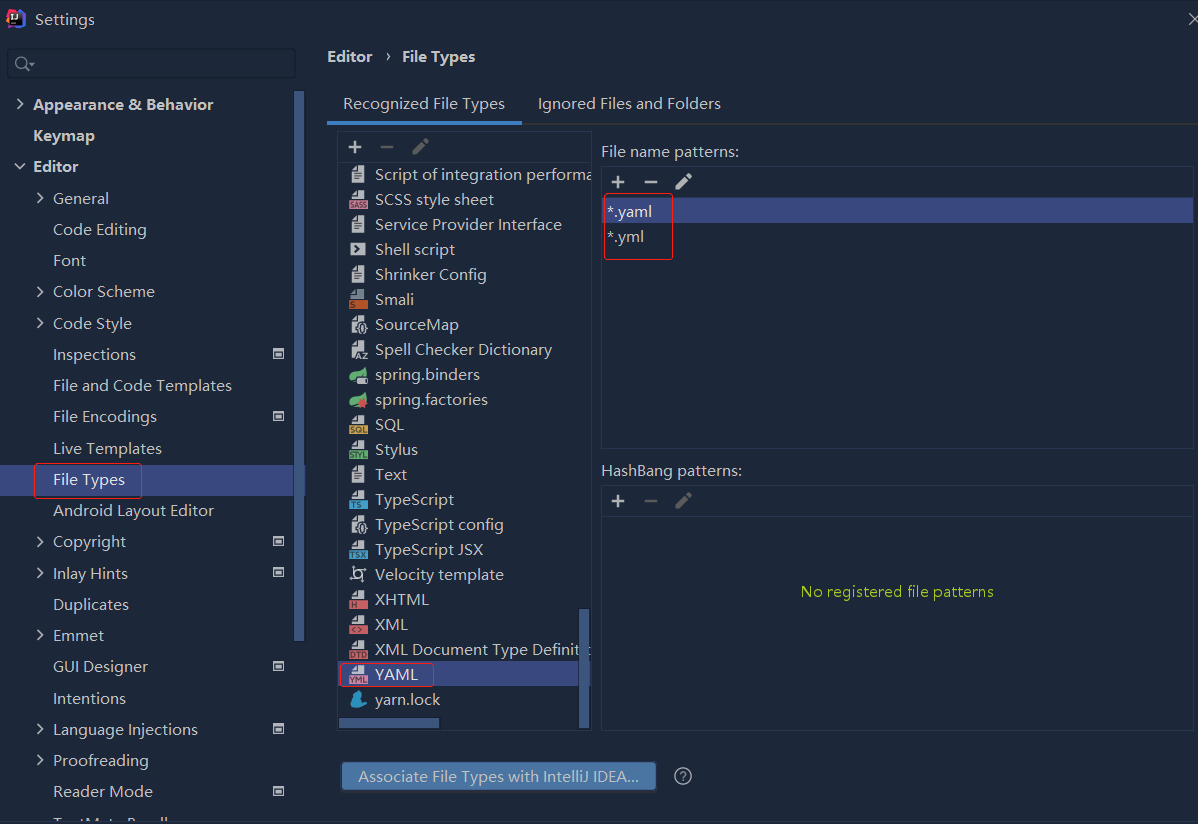

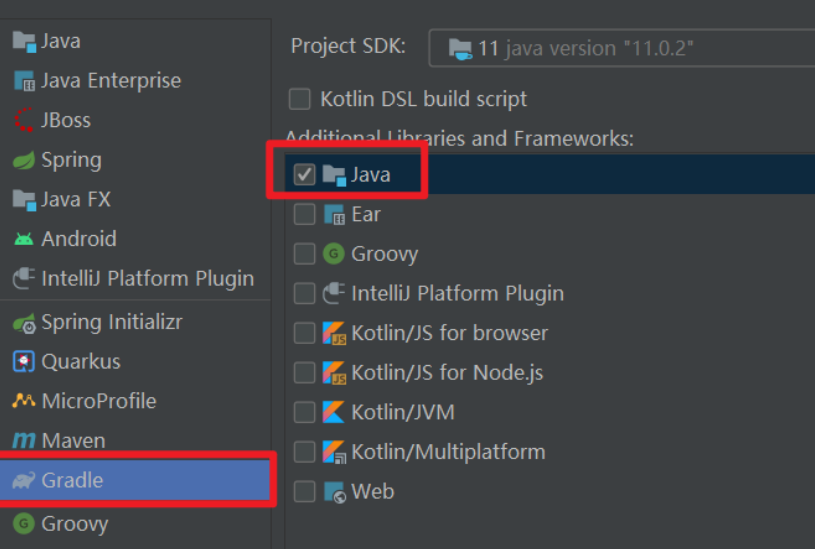
