Problem description

I'm running into a strange problem with the tokenizer's batch_encode_plus method. I recently switched from transformers version 3.3.0 to 4.5.1. (I'm creating a databunch for NER.)

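I have 2 sentences that I need to encode, and I have a case where the sentences are already tokenized. Since the two sentences differ in length, I need to pad the shorter one with [PAD] so that my batch has a uniform length.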

Below is the code I used with transformers version 3.3.0

from transformers import AutoTokenizer

pretrained_model_name = 'distilbert-base-cased'

tokenizer = AutoTokenizer.from_pretrained(pretrained_model_name,add_prefix_space=True)

sentences = ["He is an uninvited guest.","The host of the party didn't sent him the invite."]

# here we have the complete sentences

encodings = tokenizer.batch_encode_plus(sentences,max_length=20,padding=True)

batch_token_ids,attention_masks = encodings["input_ids"],encodings["attention_mask"]

print(batch_token_ids[0])

print(tokenizer.convert_ids_to_tokens(batch_token_ids[0]))

# And the output

# [101,1124,1110,1126,8362,1394,5086,1906,3648,119,102,0]

# ['[CLS]','He','is','an','un','##in','##vi','##ted','guest','.','[SEP]','[PAD]','[PAD]']

# here we have the already tokenized sentences

encodings = tokenizer.batch_encode_plus(batch_token_ids,padding=True,truncation=True,is_split_into_words=True,add_special_tokens=False,return_tensors="pt")

batch_token_ids, attention_masks = encodings["input_ids"], encodings["attention_mask"]

print(batch_token_ids[0])

print(tokenizer.convert_ids_to_tokens(batch_token_ids[0]))

# And the output

# tensor([ 101,0])

# ['[CLS]','[PAD]','[PAD]']

But if I try to mimic the same behavior in transformers version 4.5.1, I get a different output

from transformers import AutoTokenizer

pretrained_model_name = 'distilbert-base-cased'

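tokenizer = AutoTokenizer.from_pretrained(pretrained_model_name, add_prefix_space=True)

sentences = ["He is an uninvited guest.", "The host of the party didn't sent him the invite."]

# here we have the complete sentences

encodings = tokenizer.batch_encode_plus(sentences, max_length=20, padding=True)

batch_token_ids, attention_masks = encodings["input_ids"], encodings["attention_mask"]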

print(batch_token_ids[0])

print(tokenizer.convert_ids_to_tokens(batch_token_ids[0]))

# And the output

#[101,0]

#['[CLS]','[PAD]']

# here we have the already tokenized sentences. Note that we cannot pass batch_token_ids

# to the batch_encode_plus method in the newer version, so we need to convert them to tokens first

tokens1 = tokenizer.tokenize(sentences[0],add_special_tokens=True)

tokens2 = tokenizer.tokenize(sentences[1],add_special_tokens=True)

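encodings = tokenizer.batch_encode_plus([tokens1, tokens2], padding=True, truncation=True, is_split_into_words=True, add_special_tokens=False, return_tensors="pt")

batch_token_ids, attention_masks = encodings["input_ids"], encodings["attention_mask"]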

print(batch_token_ids[0])

print(tokenizer.convert_ids_to_tokens(batch_token_ids[0]))

# And the output (not the desired one)

# tensor([ 101,108,1107,191,1182,21359,1181,102])

# ['[CLS]','#','in','v','##i','te','##d','[SEP]']

I'm not sure how to handle this, or what I'm doing wrong here.

Solution

You need a non-fast tokenizer to pass lists of integer token IDs.

tokenizer = AutoTokenizer.from_pretrained(pretrained_model_name, add_prefix_space=True, use_fast=False)

The use_fast flag is enabled by default in later versions.

From the HuggingFace documentation:

batch_encode_plus(batch_text_or_text_pairs: ...)

batch_text_or_text_pairs (List[str], List[Tuple[str, str]], List[List[str]], List[Tuple[List[str], List[str]]], and for not-fast tokenizers, also List[List[int]], List[Tuple[List[int], List[int]]])
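If the only goal is to bring already-encoded ID lists to a uniform length, the padding can also be done by hand, independent of the tokenizer type. The following is a minimal sketch under stated assumptions, not the library's own method: `pad_id_batch` is a hypothetical helper, the pad ID is assumed to be 0 (the ID that maps to `[PAD]` in the outputs shown in the question), and the second ID list is made up for illustration.

```python
from typing import List, Tuple

PAD_ID = 0  # assumption: [PAD] maps to ID 0, as in the outputs shown in the question


def pad_id_batch(
    batch: List[List[int]], pad_id: int = PAD_ID
) -> Tuple[List[List[int]], List[List[int]]]:
    """Right-pad every ID list to the length of the longest one.

    Returns (padded_ids, attention_masks). Mask entries are 1 for real
    tokens and 0 for padding positions.
    """
    max_len = max((len(ids) for ids in batch), default=0)
    padded, masks = [], []
    for ids in batch:
        n_pad = max_len - len(ids)
        padded.append(list(ids) + [pad_id] * n_pad)
        masks.append([1] * len(ids) + [0] * n_pad)
    return padded, masks


# The first list is the encoded first sentence from the question (without padding);
# the second list is a made-up, shorter example.
batch = [
    [101, 1124, 1110, 1126, 8362, 1394, 5086, 1906, 3648, 119, 102],
    [101, 1124, 1110, 102],
]
padded_ids, attention_masks = pad_id_batch(batch)
print(padded_ids[1])
print(attention_masks[1])
```

The resulting nested lists can be converted to tensors afterwards (for example with `torch.tensor(padded_ids)`) if the model expects them.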

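I'm writing this as an answer because I can't comment on the question itself. I suggest looking at the output of each tokenization (tokens1 and tokens2) and comparing it with batch_token_ids. Oddly, the output contains no tokens from the second sentence. Maybe the problem lies there.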
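One way to carry out such a comparison is to look for the first position where two sequences diverge. This is only a sketch: `first_mismatch` is a hypothetical helper, and the `actual_tokens` list is invented for illustration rather than taken from a real run.

```python
from typing import Optional, Sequence


def first_mismatch(expected: Sequence, actual: Sequence) -> Optional[int]:
    """Return the first index where the sequences differ, or None if they are equal.

    If one sequence is a strict prefix of the other, the index just past
    the shorter one is returned.
    """
    for i, (e, a) in enumerate(zip(expected, actual)):
        if e != a:
            return i
    if len(expected) != len(actual):
        return min(len(expected), len(actual))
    return None


# Tokens of the first sentence as shown in the question.
expected_tokens = ['[CLS]', 'He', 'is', 'an', 'un', '##in', '##vi', '##ted', 'guest', '.', '[SEP]']
# Hypothetical output in which a subword piece was tokenized a second time.
actual_tokens = ['[CLS]', 'He', 'is', 'an', 'un', '#', '#', 'in']

idx = first_mismatch(expected_tokens, actual_tokens)
print(idx, expected_tokens[idx], actual_tokens[idx])
```

The same helper works on ID lists. A row of a PyTorch tensor would need to be converted with `.tolist()` first so that the elements compare as plain integers.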

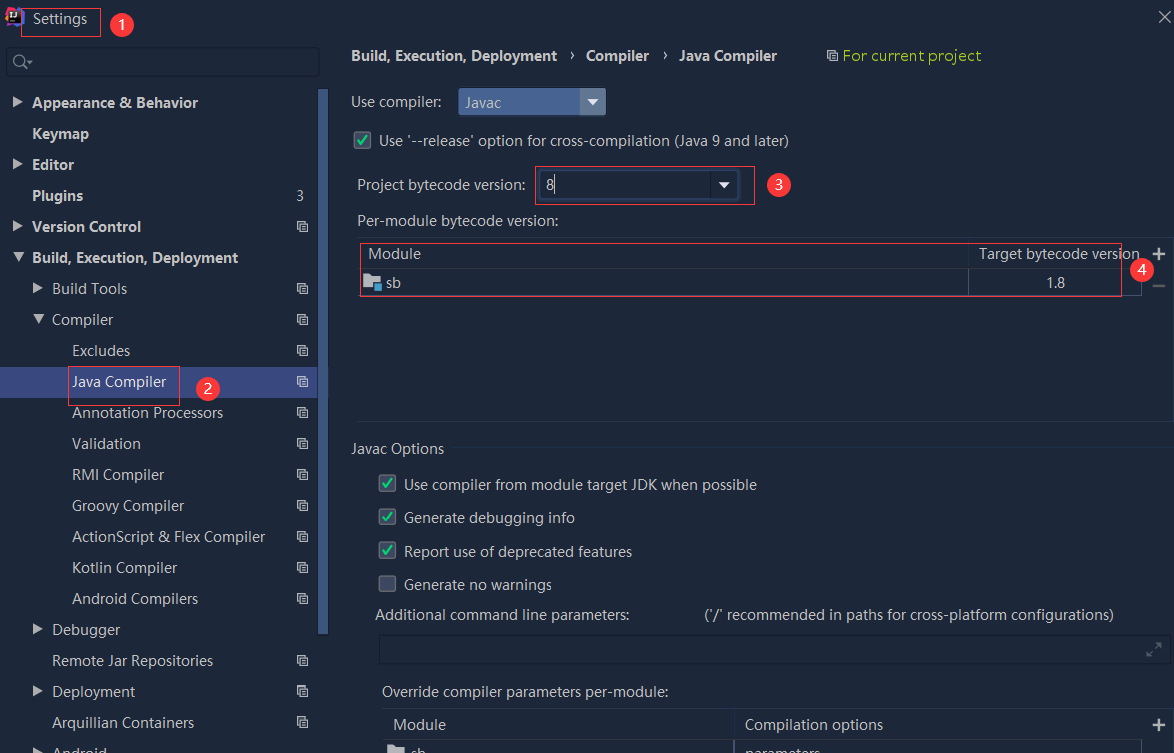

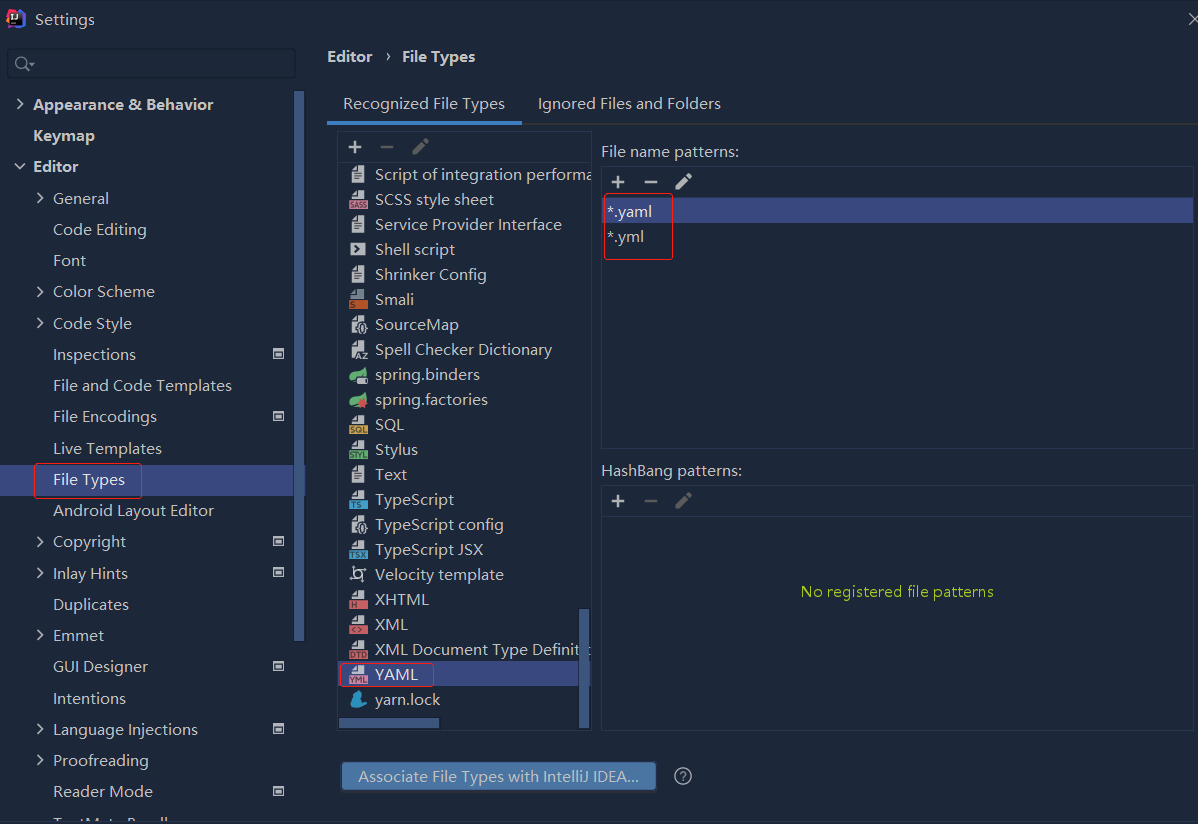

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

依赖报错 idea导入项目后依赖报错,解决方案:https://blog....

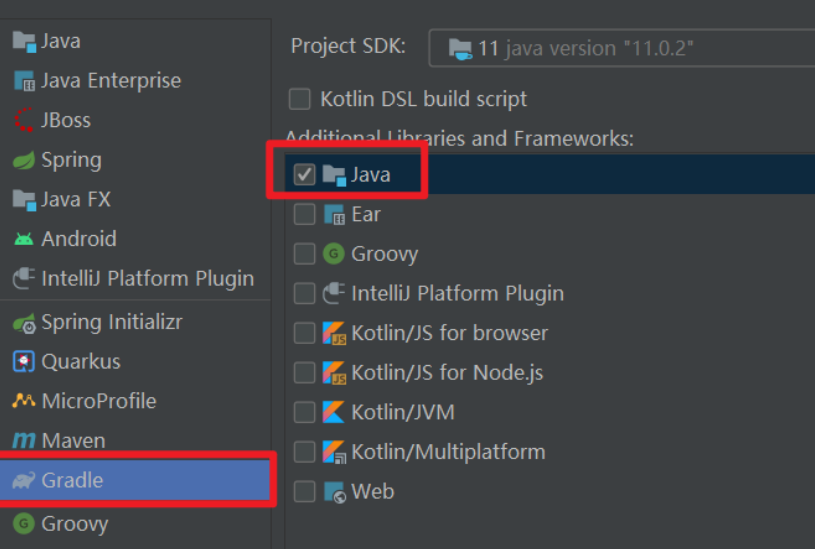

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...

错误1:gradle项目控制台输出为乱码 # 解决方案:https://bl...