This article uses the ceph-deploy tool to quickly set up a Ceph cluster.

1. Environment preparation

Set the hostnames as listed in the table below. OS release used in this setup:

[root@admin-node ~]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

| IP | Hostname | Role |
|---|---|---|
| 10.10.10.20 | admin-node | ceph-deploy |
| 10.10.10.21 | node1 | mon |
| 10.10.10.22 | node2 | osd |
| 10.10.10.23 | node3 | osd |
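On CentOS 7 the hostname can be set persistently with `hostnamectl set-hostname`. Below is a minimal sketch that maps each IP in the table to its hostname. `set_node_hostname` is a hypothetical helper, and setting `DRY_RUN=1` makes it only print the command instead of running it.

```shell
# Hypothetical helper: set this node's hostname from the IP -> hostname table above.
# Pass the node's own IP as $1; with DRY_RUN=1 the command is printed, not run.
set_node_hostname() {
  case "$1" in
    10.10.10.20) name=admin-node ;;
    10.10.10.21) name=node1 ;;
    10.10.10.22) name=node2 ;;
    10.10.10.23) name=node3 ;;
    *) echo "unknown IP: $1" >&2; return 1 ;;
  esac
  if [ -n "${DRY_RUN:-}" ]; then
    echo "hostnamectl set-hostname $name"
  else
    hostnamectl set-hostname "$name"
  fi
}
```

For example, on 10.10.10.21 you would run `set_node_hostname 10.10.10.21`.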

Set up name resolution (here we simply edit /etc/hosts)

Configure on every node

[root@admin-node ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.20 admin-node

10.10.10.21 node1

10.10.10.22 node2

10.10.10.23 node3
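The same entries have to be added on every node, so this edit can be scripted. The sketch below assumes the four entries from the table. `add_cluster_hosts` and the `HOSTS_FILE` override are hypothetical names introduced here. It appends an entry only when that hostname is not already listed, so running it more than once is harmless.

```shell
# Hypothetical helper: append the cluster entries to a hosts file if missing.
# HOSTS_FILE defaults to /etc/hosts; override it to try the function on a copy.
add_cluster_hosts() {
  hosts_file=${HOSTS_FILE:-/etc/hosts}
  while read -r ip name; do
    # awk exits 0 only if some field after the IP column equals the hostname
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
10.10.10.20 admin-node
10.10.10.21 node1
10.10.10.22 node2
10.10.10.23 node3
EOF
}
```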

Configure the yum repositories

Configure on every node

[root@admin-node ~]# mv /etc/yum.repos.d{,.bak}

[root@admin-node ~]# mkdir /etc/yum.repos.d

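[root@admin-node ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Then create a Ceph repository file in /etc/yum.repos.d (for example ceph.repo) with the following content: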

[ceph]

name=Ceph packages for $basearch

baseurl=http://download.ceph.com/rpm-jewel/el7/$basearch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-jewel/el7/noarch

enabled=1

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=http://download.ceph.com/rpm-jewel/el7/SRPMS

enabled=0

priority=2

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc
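The repository definitions above can be written in one step with a here-document. In this sketch, `write_ceph_repo`, `REPO_DIR` and the file name `ceph.repo` are assumptions: yum reads any `*.repo` file in that directory. The quoted `'EOF'` keeps `$basearch` literal, so yum expands it and the shell does not.

```shell
# Hypothetical helper: write the Ceph Jewel repo definitions shown above to
# $REPO_DIR/ceph.repo (REPO_DIR defaults to /etc/yum.repos.d).
# The quoted 'EOF' stops the shell from expanding $basearch; yum expands it later.
write_ceph_repo() {
  repo_dir=${REPO_DIR:-/etc/yum.repos.d}
  cat > "$repo_dir/ceph.repo" <<'EOF'
[ceph]
name=Ceph packages for $basearch
baseurl=http://download.ceph.com/rpm-jewel/el7/$basearch
enabled=1
priority=2
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-jewel/el7/noarch
enabled=1
priority=2
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=http://download.ceph.com/rpm-jewel/el7/SRPMS
enabled=0
priority=2
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}
```

After writing the file, `yum makecache` refreshes the metadata. The `priority=` lines only take effect when the `yum-plugin-priorities` package is installed.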

Disable the firewall and SELinux

Configure on every node

[root@admin-node ~]# systemctl stop firewalld.service

[root@admin-node ~]# systemctl disable firewalld.service

[root@admin-node ~]# setenforce 0

[root@admin-node ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
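`setenforce 0` switches SELinux to permissive mode only until the next reboot. The config file change is what makes the setting persistent. The `sed` command above rewrites the line only if it currently reads `SELINUX=enforcing`. Below is a slightly more robust sketch with a hypothetical helper name. It anchors on the key, replaces whatever value is there, and then verifies the result.

```shell
# Hypothetical helper: set SELINUX=disabled whatever the current value is.
# The ^ anchor leaves commented-out lines and the separate SELINUXTYPE= key alone.
disable_selinux_config() {
  conf=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
  # Succeed only if the file now contains the expected line
  grep -q '^SELINUX=disabled$' "$conf"
}
```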

Set up passwordless SSH login between nodes

Configure on every node

[root@admin-node ~]# ssh-keygen

[root@admin-node ~]# ssh-copy-id 10.10.10.21

[root@admin-node ~]# ssh-copy-id 10.10.10.22

[root@admin-node ~]# ssh-copy-id 10.10.10.23
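ceph-deploy connects to the nodes by the hostnames given on its command line (node1, node2, node3). Copying the key to those names rather than to IPs also records the hostnames in known_hosts. Here is a minimal sketch. `setup_ssh_keys`, `SSH_KEY` and `DRY_RUN` are hypothetical names, and `root@` is assumed because this article works as root throughout.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create a passphrase-less key if none exists, then copy it to each node.
setup_ssh_keys() {
  key=${SSH_KEY:-$HOME/.ssh/id_rsa}
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -N "" -f "$key"
  fi
  for node in "$@"; do
    run ssh-copy-id -i "$key.pub" "root@$node"
  done
}
```

Usage on admin-node: `setup_ssh_keys node1 node2 node3`.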

Synchronize time with chrony

Configure on every node

[root@admin-node ~]# yum install chrony -y

[root@admin-node ~]# systemctl restart chronyd

[root@admin-node ~]# systemctl enable chronyd

[root@admin-node ~]# chronyc sources -v  (check whether time is synchronized; a * in front of a source means the node is synchronized to it)
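In `chronyc sources` output, the first character of each source line is the source mode and the second is its state. A `*` in the state position marks the source the clock is currently synchronized to. Below is a small check built on that layout. `chrony_synced` is a hypothetical function name, and it reads the `chronyc` output on stdin.

```shell
# Hypothetical helper: succeed only if some source line has '*' as its second character.
# Header and legend lines from `chronyc sources -v` never have '*' in that position.
chrony_synced() {
  grep -q '^.\*'
}
```

Usage: `chronyc sources | chrony_synced && echo "time is synchronized"`.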

2. Install Ceph (Jewel)

Install ceph-deploy

Run only on admin-node

[root@admin-node ~]# yum install ceph-deploy -y

On the admin node, create a directory to hold the configuration files and keyrings that ceph-deploy generates

Run only on admin-node

[root@admin-node ~]# mkdir /etc/ceph

[root@admin-node ~]# cd /etc/ceph/

Purge old data and keys (only needed if you want to reinstall from scratch)

Run only on admin-node

[root@admin-node ceph]# ceph-deploy purgedata node1 node2 node3

[root@admin-node ceph]# ceph-deploy forgetkeys

Create the cluster

Run only on admin-node

[root@admin-node ceph]# ceph-deploy new node1

Edit the Ceph configuration and set the replica count to 2

Run only on admin-node

[root@admin-node ceph]# vi ceph.conf

[global]

fsid = 183e441b-c8cd-40fa-9b1a-0387cb8e8735

mon_initial_members = node1

mon_host = 10.10.10.21

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

filestore_xattr_use_omap = true

osd journal size = 1024

osd pool default size = 2

osd pool default min size = 1

osd pool default pg num = 333

osd pool default pgp num = 333

osd crush chooseleaf type = 1
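The `osd pool default pg num` value is worth a sanity check. A commonly cited rule of thumb in the Ceph placement-group documentation is: total PGs ≈ (number of OSDs × 100) / replica count, rounded up to the next power of two. The function below is a minimal sketch of that arithmetic, and `pg_count` is a hypothetical helper name. For the two OSD directories prepared later with size 2, it gives 128, well below the 333 set above.

```shell
# Hypothetical helper: rule-of-thumb PG count for a pool.
# Usage: pg_count NUM_OSDS REPLICA_SIZE
pg_count() {
  osds=$1 size=$2
  target=$(( (osds * 100 + size - 1) / size ))   # ceiling of osds*100/size
  pgs=1
  # Round up to the next power of two
  while [ "$pgs" -lt "$target" ]; do
    pgs=$((pgs * 2))
  done
  echo "$pgs"
}
```

For example, `pg_count 2 2` prints `128` and `pg_count 3 2` prints `256`.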

Install Ceph

Run only on admin-node

[root@admin-node ceph]# ceph-deploy install admin-node node1 node2 node3

Deploy the initial monitor(s) and gather all keys

Run only on admin-node

[root@admin-node ceph]# ceph-deploy mon create-initial

[root@admin-node ceph]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.client.admin.keyring ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.conf rbdmap

[root@admin-node ceph]# ceph -s  (check the cluster status)

cluster 8d395c8f-6ac5-4bca-bbb9-2e0120159ed9

health HEALTH_ERR

no osds

monmap e1: 1 mons at {node1=10.10.10.21:6789/0}

election epoch 3, quorum 0 node1

osdmap e1: 0 osds: 0 up, 0 in

flags sortbitwise,require_jewel_osds

pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

64 creating

Create the OSDs

[root@node2 ~]# lsblk  (node2 and node3 serve as OSD nodes)

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─cl-root 253:0 0 17G 0 lvm /

└─cl-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 50G 0 disk /var/local/osd0

sdc 8:32 0 5G 0 disk

sr0 11:0 1 4.1G 0 rom

[root@node2 ~]# mkfs.xfs /dev/sdb

[root@node2 ~]# mkdir /var/local/osd0

[root@node2 ~]# mount /dev/sdb /var/local/osd0

[root@node2 ~]# chown ceph:ceph /var/local/osd0

[root@node3 ~]# mkdir /var/local/osd1

[root@node3 ~]# mkfs.xfs /dev/sdb

[root@node3 ~]# mount /dev/sdb /var/local/osd1/

[root@node3 ~]# chown ceph:ceph /var/local/osd1
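node2 and node3 run the same four steps, only with different directories. This minimal sketch wraps them in a hypothetical `prepare_osd_dir DEVICE DIR` function, and `DRY_RUN=1` prints the commands instead of running them. `mkfs.xfs` erases the device, so confirm the device name with `lsblk` first.

```shell
# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: format DEVICE, mount it on DIR and hand DIR to the ceph user.
# WARNING: mkfs.xfs destroys all data on DEVICE.
prepare_osd_dir() {
  dev=$1 dir=$2
  run mkfs.xfs "$dev"
  run mkdir -p "$dir"
  run mount "$dev" "$dir"
  # The OSD daemon runs as the ceph user, so it must own the directory
  run chown ceph:ceph "$dir"
}
```

For example, run `prepare_osd_dir /dev/sdb /var/local/osd0` on node2 and `prepare_osd_dir /dev/sdb /var/local/osd1` on node3. The mount does not survive a reboot on its own. An `/etc/fstab` line such as `/dev/sdb /var/local/osd0 xfs defaults 0 0` would make it persistent.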

Copy the keyring and configuration file from admin-node to every node

Run only on admin-node

[root@admin-node ceph]# ceph-deploy admin admin-node node1 node2 node3

Make sure ceph.client.admin.keyring has the correct permissions

Run only on the OSD nodes

[root@node2 ~]# chmod +r /etc/ceph/ceph.client.admin.keyring

Run ceph-deploy on the admin node to prepare the OSDs

[root@admin-node ceph]# ceph-deploy osd prepare node2:/var/local/osd0 node3:/var/local/osd1

Activate the OSDs

[root@admin-node ceph]# ceph-deploy osd activate node2:/var/local/osd0 node3:/var/local/osd1
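`prepare` and `activate` must name exactly the same `HOST:DIR` targets. Keeping the list in one variable avoids mismatches between the two steps. In the sketch below, `OSD_TARGETS` and `deploy_osds` are hypothetical names, and it is meant to be run from /etc/ceph on admin-node. The `HOST:DIR` form belongs to the older ceph-deploy 1.5.x series used here. Newer ceph-deploy 2.x releases switched to device-based `ceph-deploy osd create --data DEVICE HOST`.

```shell
# Hypothetical variable: the OSD targets used by both ceph-deploy steps.
OSD_TARGETS="node2:/var/local/osd0 node3:/var/local/osd1"

# Hypothetical helper: prepare, then activate, the same targets. DRY_RUN=1 prints only.
deploy_osds() {
  for step in prepare activate; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ceph-deploy osd $step $OSD_TARGETS"
    else
      # Unquoted on purpose: split into one argument per HOST:DIR target
      ceph-deploy osd "$step" $OSD_TARGETS || return 1
    fi
  done
}
```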

Check the cluster health

[root@admin-node ceph]# ceph health

HEALTH_OK
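Right after activation the PGs may need some time to peer, so `ceph health` can briefly report something other than HEALTH_OK. Below is a polling sketch. `wait_for_health_ok` and the `CEPH_BIN` override are hypothetical names; the override exists only so the loop can be tried without a cluster.

```shell
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK.
# Usage: wait_for_health_ok [TRIES] [INTERVAL_SECONDS]; CEPH_BIN defaults to ceph.
wait_for_health_ok() {
  tries=${1:-30} interval=${2:-10}
  ceph_bin=${CEPH_BIN:-ceph}
  i=1
  while [ "$i" -le "$tries" ]; do
    status=$("$ceph_bin" health 2>/dev/null)
    case $status in
      HEALTH_OK*) echo "$status"; return 0 ;;
    esac
    sleep "$interval"
    i=$((i + 1))
  done
  echo "still not healthy after $tries checks: $status" >&2
  return 1
}
```

For example, `wait_for_health_ok 30 10` checks up to 30 times, 10 seconds apart.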

[root@admin-node ceph]# ceph -s

cluster 69f64f6d-f084-4b5e-8ba8-7ba3cec9d927

health HEALTH_OK

monmap e1: 1 mons at {node1=10.10.10.21:6789/0}

election epoch 3, quorum 0 node1

osdmap e14: 3 osds: 3 up, 3 in

flags sortbitwise,require_jewel_osds

pgmap v29: 64 pgs, 0 objects

15459 MB used, 45950 MB / 61410 MB avail

64 active+clean

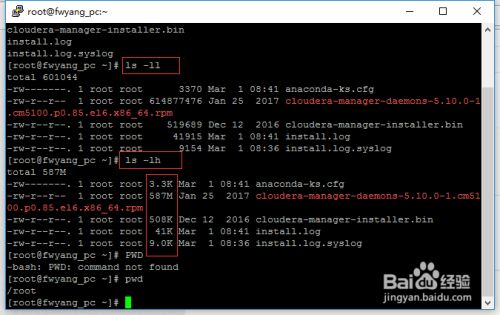
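In scripts, the `osdmap` line of the Jewel-format `ceph -s` output is enough to tell whether every OSD is up and in. The sketch below uses the hypothetical function `all_osds_up_in`, which reads the status text on stdin. It assumes the `osdmap eN: N osds: N up, N in` wording shown above, which differs in later releases.

```shell
# Hypothetical helper: succeed if the osdmap line shows at least one OSD
# and every OSD is both up and in.
all_osds_up_in() {
  counts=$(sed -n 's/.*osdmap e[0-9]*: \([0-9]*\) osds: \([0-9]*\) up, *\([0-9]*\) in.*/\1 \2 \3/p')
  [ -n "$counts" ] || return 1
  # $1 = total OSDs, $2 = up, $3 = in
  set -- $counts
  [ "$1" -gt 0 ] && [ "$1" -eq "$2" ] && [ "$1" -eq "$3" ]
}
```

Usage: `ceph -s | all_osds_up_in && echo "all OSDs are up and in"`.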
