I won't go over the basic environment preparation again; please refer to my earlier article on setting up Hadoop.

Configure the Flume environment variables

Add the following to `~/.bash_profile`:

    export FLUME_HOME=/opt/apache-flume-1.7.0-bin
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$FLUME_HOME/bin:$HOME/bin

Then remember to reload it with `source ~/.bash_profile`.
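If you script this setup, a small helper can append the exports only once, so re-running the script does not pile up duplicate lines in the profile. This is a minimal sketch: `add_flume_env` is a hypothetical name, and it only adds `$FLUME_HOME/bin` to `PATH`, assuming `JAVA_HOME` and `HADOOP_HOME` are already handled by your existing Hadoop setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Flume): append the Flume exports to a
# profile file once, so re-running the setup does not duplicate lines.
# Assumes JAVA_HOME/HADOOP_HOME are already exported elsewhere.
# Usage: add_flume_env PROFILE FLUME_HOME
add_flume_env() {
  local profile="$1" flume_home="$2"
  # Skip if a FLUME_HOME export is already present in the profile.
  if grep -q '^export FLUME_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  printf 'export FLUME_HOME=%s\n' "$flume_home" >> "$profile"
  # Single quotes keep $PATH and $FLUME_HOME unexpanded until the profile is sourced.
  printf '%s\n' 'export PATH=$PATH:$FLUME_HOME/bin' >> "$profile"
}
```

Typical use would be `add_flume_env ~/.bash_profile /opt/apache-flume-1.7.0-bin` followed by `source ~/.bash_profile`.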

Depending on your requirements, configure a different source/channel/sink combination and add the configuration file to `conf/`:

- flume_exec_hdfs.conf

    logAgent.sources = logSource
    logAgent.channels = fileChannel
    logAgent.sinks = hdfsSink
    logAgent.sources.logSource.type = exec
    logAgent.sources.logSource.command = tail -F /aura/data/flume-search/logs
    logAgent.sources.logSource.channels = fileChannel
    logAgent.sinks.hdfsSink.type = hdfs
    logAgent.sinks.hdfsSink.hdfs.path = hdfs://bigdata:9000/flume/record/%Y-%m-%d/%H%M
    logAgent.sinks.hdfsSink.hdfs.rollCount = 10000
    logAgent.sinks.hdfsSink.hdfs.rollSize = 0
    # batchSize must not exceed the channel's transactionCapacity (100 below)
    logAgent.sinks.hdfsSink.hdfs.batchSize = 100
    logAgent.sinks.hdfsSink.hdfs.filePrefix = transaction_log
    logAgent.sinks.hdfsSink.hdfs.rollInterval = 600
    logAgent.sinks.hdfsSink.hdfs.roundUnit = minute
    logAgent.sinks.hdfsSink.hdfs.fileType = DataStream
    logAgent.sinks.hdfsSink.hdfs.useLocalTimeStamp = true
    logAgent.sinks.hdfsSink.channel = fileChannel
    logAgent.channels.fileChannel.type = memory
    logAgent.channels.fileChannel.capacity = 1000
    logAgent.channels.fileChannel.transactionCapacity = 100
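To check that the exec source is picking up new data, you can append a few lines to the file that `tail -F` is watching; the exec source turns each line of the command's output into one event. A minimal sketch, where `gen_test_lines` is a hypothetical helper and the line format is arbitrary:

```shell
#!/usr/bin/env bash
# Hypothetical helper for smoke-testing the exec source: append N
# timestamped lines to the file that `tail -F` is watching.
# Usage: gen_test_lines FILE N
gen_test_lines() {
  local file="$1" n="$2" i=1
  while [ "$i" -le "$n" ]; do
    # One line per event: the exec source emits each output line of tail as an event.
    printf 'test event %d %s\n' "$i" "$(date '+%Y-%m-%d %H:%M:%S')" >> "$file"
    i=$((i + 1))
  done
}
```

With the agent running, something like `gen_test_lines /aura/data/flume-search/logs 100` should produce files under `/flume/record/` in HDFS once the sink rolls them.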

- flume_avro_hdfs.conf

    logAgent.sources = logSource
    logAgent.channels = fileChannel
    logAgent.sinks = hdfsSink
    logAgent.sources.logSource.type = avro
    logAgent.sources.logSource.bind = 127.0.0.1
    logAgent.sources.logSource.port = 44444
    logAgent.sources.logSource.channels = fileChannel
    logAgent.sinks.hdfsSink.type = hdfs
    logAgent.sinks.hdfsSink.hdfs.path = hdfs://bigdata:9000/flume/record/%Y-%m-%d/%H%M
    logAgent.sinks.hdfsSink.hdfs.rollCount = 10000
    logAgent.sinks.hdfsSink.hdfs.rollSize = 0
    # batchSize must not exceed the channel's transactionCapacity (100 below)
    logAgent.sinks.hdfsSink.hdfs.batchSize = 100
    logAgent.sinks.hdfsSink.hdfs.filePrefix = transaction_log
    logAgent.sinks.hdfsSink.hdfs.rollInterval = 600
    logAgent.sinks.hdfsSink.hdfs.roundUnit = minute
    logAgent.sinks.hdfsSink.hdfs.fileType = DataStream
    logAgent.sinks.hdfsSink.hdfs.useLocalTimeStamp = true
    logAgent.sinks.hdfsSink.channel = fileChannel
    logAgent.channels.fileChannel.type = memory
    logAgent.channels.fileChannel.capacity = 1000
    logAgent.channels.fileChannel.transactionCapacity = 100
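The avro source only receives events that an Avro client sends to it. Flume ships such a client as the `avro-client` mode of `flume-ng`, which sends each line of a file as one event. The sketch below wraps it for the bind address and port used in this config; `send_to_avro` and the `FLUME_NG` override (there only so the command can be dry-run with `echo`) are assumptions, not part of Flume.

```shell
#!/usr/bin/env bash
# Sketch: push a local file to the avro source (127.0.0.1:44444) using
# Flume's bundled avro-client, which sends each line of the file as an event.
# FLUME_NG is a hypothetical override for dry runs; it defaults to flume-ng on PATH.
# Usage: send_to_avro FILE
send_to_avro() {
  local file="$1"
  "${FLUME_NG:-flume-ng}" avro-client -c "${FLUME_HOME:-.}/conf" \
    -H 127.0.0.1 -p 44444 -F "$file"
}
```

Start the agent with `flume_avro_hdfs.conf` first, then run `send_to_avro` on any text file; setting `FLUME_NG=echo` prints the command instead of running it.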

- flume_dir_hdfs.conf

    logAgent.sources = logSource
    logAgent.channels = fileChannel
    logAgent.sinks = hdfsSink
    logAgent.sources.logSource.type = spooldir
    logAgent.sources.logSource.spoolDir = /aura/data/flume-search
    logAgent.sources.logSource.channels = fileChannel
    logAgent.sinks.hdfsSink.type = hdfs
    logAgent.sinks.hdfsSink.hdfs.path = hdfs://bigdata:9000/flume/record/%Y-%m-%d/%H%M
    logAgent.sinks.hdfsSink.hdfs.rollCount = 10000
    logAgent.sinks.hdfsSink.hdfs.rollSize = 0
    # batchSize must not exceed the channel's transactionCapacity (100 below)
    logAgent.sinks.hdfsSink.hdfs.batchSize = 100
    logAgent.sinks.hdfsSink.hdfs.filePrefix = transaction_log
    logAgent.sinks.hdfsSink.hdfs.rollInterval = 600
    logAgent.sinks.hdfsSink.hdfs.roundUnit = minute
    logAgent.sinks.hdfsSink.hdfs.fileType = DataStream
    logAgent.sinks.hdfsSink.hdfs.useLocalTimeStamp = true
    logAgent.sinks.hdfsSink.channel = fileChannel
    logAgent.channels.fileChannel.type = memory
    logAgent.channels.fileChannel.capacity = 1000
    logAgent.channels.fileChannel.transactionCapacity = 100

Then start the agent. This example uses the exec configuration; point `-f` at one of the other files to run that setup instead:

    bin/flume-ng agent -n logAgent -c conf -f conf/flume_exec_hdfs.conf -Dflume.root.logger=INFO,console
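When running with `flume_dir_hdfs.conf`, keep in mind that the spooldir source expects files in `spoolDir` to be complete and never modified after they appear there, and that file names must not be reused; otherwise Flume logs an error and stops processing. One common way to satisfy both is to copy the file into a staging directory on the same filesystem and then `mv` it in, since a same-filesystem rename is atomic. A minimal sketch under those assumptions; `spool_drop` and the staging directory are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: deliver a file to the spooldir source without Flume ever seeing a
# partially written file. STAGING must be on the same filesystem as SPOOL_DIR
# so that mv is an atomic rename rather than a copy.
# Usage: spool_drop SRC STAGING SPOOL_DIR   (prints the final path)
spool_drop() {
  local src="$1" staging="$2" spool="$3" tmp
  # mktemp creates a uniquely named file in STAGING (timestamp + random suffix),
  # which keeps names from being reused in the spool directory.
  tmp=$(mktemp "$staging/$(basename "$src").$(date +%s).XXXXXX") || return 1
  cp "$src" "$tmp" || { rm -f "$tmp"; return 1; }
  # Only after the copy has finished, rename it into the spool directory.
  mv "$tmp" "$spool/" || return 1
  printf '%s\n' "$spool/$(basename "$tmp")"
}
```

For example, `spool_drop app.log /aura/data/flume-staging /aura/data/flume-search`, where `/aura/data/flume-staging` is a hypothetical staging directory next to the spool directory.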

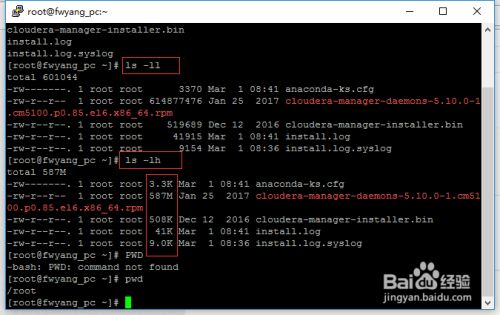

The simplest way to check is with the `ls -ll` or `ls -lh` command; when using...
