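I'm using Mac OS X Yosemite with the virtual machine cloudera-quickstart-vm-5.4.2-0-virtualBox. When I type `hdfs dfs -put testfile.txt` to put a text file into HDFS, I get a DataStreamer Exception. I noticed that the main problem is that the number of running DataNodes is 0. I've copied the full error message below; what should I do to fix this?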

> [cloudera@quickstart ~]$ hdfs dfs -put testfile.txt
> 15/10/18 03:51:51 WARN hdfs.DFSClient: DataStreamer Exception
> org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/cloudera/testfile.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.
> at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1541)
> at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3286)
> at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:667)
> at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.addBlock(AuthorizationProviderProxyClientProtocol.java:212)
> at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:483)
> at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
> at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:619)
> at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1060)
> at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2044)
> at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2040)
> at java.security.AccessController.doPrivileged(Native Method)
> at javax.security.auth.Subject.doAs(Subject.java:415)
> at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1671)
> at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2038)
> at org.apache.hadoop.ipc.Client.call(Client.java:1468)
> at org.apache.hadoop.ipc.Client.call(Client.java:1399)
> at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
> at com.sun.proxy.$Proxy14.addBlock(Unknown Source)
> at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:399)
> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
> at java.lang.reflect.Method.invoke(Method.java:606)
> at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
> at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
> at com.sun.proxy.$Proxy15.addBlock(Unknown Source)
> at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.locateFollowingBlock(DFSOutputStream.java:1544)
> at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1361)
> at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:600)
> put: File /user/cloudera/testfile.txt._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

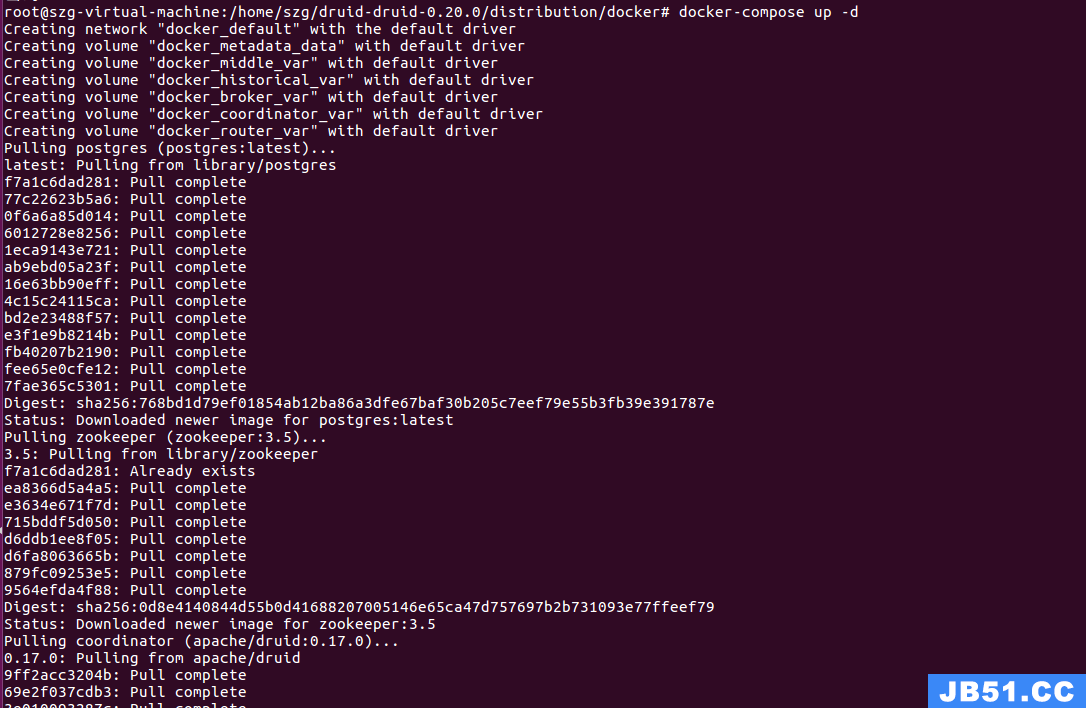

1. Stop the Hadoop services as described in Stopping Services:

``for x in `cd /etc/init.d ; ls hadoop*` ; do sudo service $x stop ; done``

2. Remove the Hadoop files from /var/lib/hadoop-hdfs/cache/:

`sudo rm -r /var/lib/hadoop-hdfs/cache/`

3. Format the NameNode:

`sudo -u hdfs hdfs namenode -format`

Note: Answer the confirmation prompt with a capital Y.

Note: Data is lost during the format process.

4. Start the Hadoop services:

``for x in `cd /etc/init.d ; ls hadoop*` ; do sudo service $x start ; done``
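To check that step 4 actually brought the DataNode up before retrying the upload, the same init-script loop can ask each service for its `status` instead of starting or stopping it. This is a sketch: `hadoop_services` and `show_hadoop_status` are hypothetical helper names, and it assumes the VM keeps its Hadoop init scripts in /etc/init.d with the DataNode script named `hadoop-hdfs-datanode`. `sudo -u hdfs hdfs dfsadmin -report` is another way to ask the NameNode which DataNodes it currently sees.

```shell
#!/usr/bin/env bash
# List the Hadoop init scripts in a directory (default /etc/init.d), i.e. the
# same set of services the stop/start loops in steps 1 and 4 iterate over.
hadoop_services() {
  local dir="${1:-/etc/init.d}"
  # Subshell so the cd does not leak; "|| true" keeps a missing directory or
  # an unmatched glob from being reported as a failure.
  ( cd "$dir" 2>/dev/null && ls hadoop* 2>/dev/null ) || true
}

# Print the status of each Hadoop service. Assumption: on the QuickStart VM
# the one that matters for this error is hadoop-hdfs-datanode.
show_hadoop_status() {
  local svc
  for svc in $(hadoop_services "$@"); do
    sudo service "$svc" status
  done
}
```

Run `show_hadoop_status` once step 4 finishes; if the DataNode is not reported as running, its log is the next place to look.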

5. Make sure your system is not running low on disk space. You can also confirm this by checking the log files for warnings about low disk space.
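Step 5 has no command attached, so here is a minimal sketch of the disk-space check, assuming a POSIX `df`. `check_free_space` is a hypothetical helper, and the 1024 MB threshold in the usage line below is an arbitrary example, not a Hadoop setting.

```shell
#!/usr/bin/env bash
# Succeed if the filesystem holding DIR has at least MIN_MB megabytes free.
# df -P forces the one-line-per-filesystem POSIX format and -k uses 1024-byte
# blocks, so field 4 of the second line is the available space in KB.
check_free_space() {
  local dir="$1" min_mb="$2" avail_kb
  avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  if [ -z "$avail_kb" ]; then
    echo "cannot read free space for $dir" >&2
    return 2
  fi
  if [ "$avail_kb" -ge $((min_mb * 1024)) ]; then
    echo "OK: $dir has $((avail_kb / 1024)) MB free"
  else
    echo "LOW: $dir has only $((avail_kb / 1024)) MB free (want $min_mb MB)" >&2
    return 1
  fi
}
```

For example, `check_free_space /var/lib/hadoop-hdfs 1024`. For the log check, something like `sudo grep -i "disk space" /var/log/hadoop-hdfs/*.log` may help, assuming the HDFS logs live in /var/log/hadoop-hdfs on the VM.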

6. Create the /tmp directory:

Remove the old /tmp if it exists:

`$ sudo -u hdfs hadoop fs -rm -r /tmp`

Create a new /tmp directory and set permissions:

`$ sudo -u hdfs hadoop fs -mkdir /tmp`

`$ sudo -u hdfs hadoop fs -chmod -R 1777 /tmp`

7. Create the user directory:

`$ sudo -u hdfs hadoop fs -mkdir /user/<user>`

`$ sudo -u hdfs hadoop fs -chown <user> /user/<user>`

where `<user>` is the Linux username.
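After step 7, retrying the upload from the question shows whether the fix worked. As a sketch, the hypothetical helper `running_datanodes` pulls the DataNode count out of the same "There are N datanode(s) running" message quoted in the question, so a retry that still hits the original problem is easy to spot.

```shell
#!/usr/bin/env bash
# Read HDFS client output on stdin and print N from the first
# "There are N datanode(s) running" message; print nothing if it is absent.
running_datanodes() {
  # BRE: \( \) is a capture group, while the plain "(s)" matches literally.
  sed -n 's/.*There are \([0-9][0-9]*\) datanode(s) running.*/\1/p' | head -n 1
}
```

`hdfs dfs -put testfile.txt 2>&1 | running_datanodes` prints 0 if the NameNode still sees no DataNode; it prints nothing when the upload succeeds or fails with a different message.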